Real-Time Recurrent Learning (RTRL) is a gradient-based learning algorithm for recurrent neural networks (RNNs). It is one of the most widely used algorithms for teaching RNNs what to store in their short-term memory.

Mechanism and Core Problem

Like Back-Propagation Through Time (BPTT) and other gradient-descent variants, Real-Time Recurrent Learning adjusts the network weights using error signals that, in effect, flow “backwards in time”.

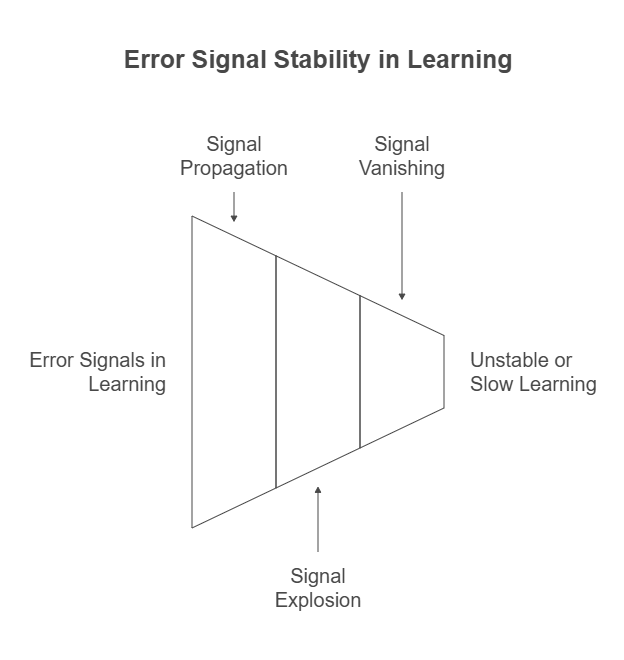

The major difficulty for Real-Time Recurrent Learning is that these error signals tend to either “blow up” (explode) or “vanish” (decay exponentially) as they are carried backward through time. The underlying problem is that the size of the propagated error depends exponentially on the size of the weights.

If the error blows up, learning can become unstable and the weights may oscillate. If the error vanishes, learning to bridge long time gaps becomes infeasible or unreasonably slow. The vanishing case is especially pronounced with traditional logistic sigmoid activation functions: their derivative never exceeds 0.25, so the error flow shrinks at every step as long as the weights have absolute values less than 4.0.
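The exponential dependence on weight size can be illustrated with a deliberately simplified sketch. It assumes a single logistic unit with one self-connection and the same net input at every step, so each step back in time multiplies the error by the same factor; the function names are hypothetical and chosen only for this example.

```python
import math

def logistic_derivative(net: float) -> float:
    """Derivative of the logistic sigmoid; its maximum is 0.25 at net = 0."""
    y = 1.0 / (1.0 + math.exp(-net))
    return y * (1.0 - y)

def error_scaling(weight: float, net: float, steps: int) -> float:
    """Magnitude of the factor an error signal picks up over `steps` time steps
    on one self-connected unit (assumption: identical net input at every step)."""
    return abs(logistic_derivative(net) * weight) ** steps

# net = 0 is the most favourable case for the logistic sigmoid (derivative 0.25),
# so any weight with |w| < 4.0 gives a per-step factor below 1.
for w in (1.0, 3.9, 4.0, 6.0):
    factors = [error_scaling(w, 0.0, q) for q in (1, 10, 100)]
    print(f"w = {w}: scaling after 1, 10, 100 steps = {factors}")
```

In this toy setting the factor shrinks toward zero for weights below 4.0 and grows without bound above it, which mirrors the vanish-or-explode behaviour described above.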

Limitations on Long Time Lags

Long-term dependencies are very difficult for Real-Time Recurrent Learning to learn. Compared to techniques like Long Short-Term Memory (LSTM), it “fails miserably” on tasks where there are long lags between inputs and the corresponding teacher signals.

For instance, in experiments on noise-free sequences that required the network to remember an initial element for p time steps in order to predict the final element, RTRL's success rate collapsed as the delay grew:

- With a 4-time-step delay (p=4), RTRL reached a 79% success rate.

- With a 10-time-step delay (p=10), it reached 0% success.

- With a 100-time-step delay (p=100), it again had no success.
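To make the shape of such a task concrete, here is a minimal sketch of a sequence generator. The layout is an assumption made for illustration (two one-hot sequences that share p - 1 fixed intermediate symbols and differ only in a first element that is repeated at the end); the function name `make_delay_sequences` is hypothetical and the original experiments may differ in detail.

```python
import numpy as np

def make_delay_sequences(p: int):
    """Build two one-hot sequences of length p + 1 that differ only in their
    first element, which must be recalled at the end (assumed task layout)."""
    n_symbols = p + 1                    # p - 1 intermediate symbols plus x and y
    intermediates = list(range(p - 1))   # symbol indices 0 .. p-2, same in both
    x, y = p - 1, p                      # the two elements to be remembered
    eye = np.eye(n_symbols)
    sequences = []
    for first in (x, y):
        symbols = [first] + intermediates + [first]
        sequences.append(eye[symbols])   # array of shape (p + 1, n_symbols)
    return sequences

seq_x, seq_y = make_delay_sequences(p=4)
inputs, target = seq_x[:-1], seq_x[-1]   # the network sees p symbols, predicts the last
```

The only information that distinguishes the two targets arrives p steps before it is needed, so any learner has to carry it across the whole delay.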

RTRL and BPTT “have no chance of solving non-trivial tasks with minimal time lags of 1000 steps,” according to the sources.

Computational Properties

Compared to BPTT and LSTM, Real-Time Recurrent Learning has a significantly higher computational complexity per time step and weight. LSTM and BPTT have an update complexity of O(W) per time step, where W is the number of weights; the update complexity of RTRL is “much worse”. For a fully recurrent network, the cost of one RTRL step grows roughly with the square of the number of weights.

Real-Time Recurrent Learning is regarded as “local in time” since the length of the input sequence has no bearing on how much storage it needs. It is not “local in space”, however: its update complexity per time step and weight depends on the size of the network.
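The following NumPy sketch shows where both properties come from. It assumes a small fully recurrent tanh network with a squared-error loss on every unit at every step; the function name `rtrl_gradient` and the weight layout (recurrent columns first, then input columns) are choices made for this example, not a reference implementation.

```python
import numpy as np

def rtrl_gradient(W, xs, ys):
    """Gradient of sum_t 0.5 * ||h_t - y_t||^2 for h_t = tanh(W @ [h_{t-1}, x_t]),
    accumulated forward in time with RTRL sensitivities."""
    n = W.shape[0]                        # number of recurrent units
    h = np.zeros(n)
    # P[k, i, j] = d h_k / d W[i, j]. Its size depends only on the network,
    # not on the sequence length: this is what "local in time" refers to.
    P = np.zeros((n, n, W.shape[1]))
    grad = np.zeros_like(W)
    for x, y in zip(xs, ys):
        z = np.concatenate([h, x])        # previous state and current input
        h = np.tanh(W @ z)
        # Recurrent term: sum_l W[k, l] * P[l, i, j]. For n units this costs
        # on the order of n^4 multiply-adds per time step.
        P_new = np.einsum("kl,lij->kij", W[:, :n], P)
        # Direct term: W[i, j] feeds unit i with z[j].
        P_new[np.arange(n), np.arange(n), :] += z
        P = (1.0 - h ** 2)[:, None, None] * P_new
        grad += np.einsum("k,kij->ij", h - y, P)
    return grad

rng = np.random.default_rng(0)
W = rng.normal(scale=0.5, size=(3, 5))    # 3 units, 2 inputs
xs = rng.normal(size=(6, 2))
ys = rng.normal(size=(6, 3))
grad = rtrl_gradient(W, xs, ys)
```

No past activations are stored, but every step updates one sensitivity value per (unit, weight) pair, and each of those updates sums over all units.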

RTRL Comparison with Other Algorithms

BPTT: On problems with long time lags, both Real-Time Recurrent Learning and BPTT suffer from exponentially decaying error. The gradient calculated by untruncated BPTT is identical to that of offline RTRL.
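The equivalence of the two gradients can be checked on a toy case. This sketch assumes a scalar RNN with a single recurrent weight `w`, a tanh unit, and a squared-error loss at every step; both function names are hypothetical.

```python
import math

def grad_rtrl(w, xs, ys):
    """Forward-in-time gradient: carry p = d h_t / d w along with the state."""
    h, p, grad = 0.0, 0.0, 0.0
    for x, y in zip(xs, ys):
        h_new = math.tanh(w * h + x)
        p = (1.0 - h_new ** 2) * (h + w * p)   # sensitivity recursion
        h = h_new
        grad += (h - y) * p
    return grad

def grad_bptt(w, xs, ys):
    """Backward-in-time gradient: store all states, then propagate the error back."""
    hs = [0.0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + x))
    grad, delta = 0.0, 0.0                     # delta = dL / d net_{t+1}
    for t in range(len(xs), 0, -1):
        dh = (hs[t] - ys[t - 1]) + w * delta   # local error plus error from t + 1
        delta = (1.0 - hs[t] ** 2) * dh
        grad += delta * hs[t - 1]
    return grad

xs = [0.5, -0.3, 0.8, 0.1, -0.6]
ys = [0.2, 0.0, 0.4, -0.1, 0.3]
print(grad_rtrl(0.9, xs, ys), grad_bptt(0.9, xs, ys))
```

The two results agree up to floating-point rounding. The practical difference is that BPTT has to store the whole state history, while RTRL carries only the running sensitivity `p`.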

Simple Weight Guessing: Random weight guessing can even perform better than RTRL and other algorithms for some easy tasks. This implies that some of the benchmark problems that were previously employed were too simple to adequately assess these algorithms’ capabilities.
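As a rough illustration of what random weight guessing means, the sketch below repeatedly draws all weights at random and keeps the first set that solves the task, with no gradient involved. The toy “latch” task, the three-weight single-unit network, and the sampling range are assumptions made up for this example and are not taken from the experiments discussed here.

```python
import math
import random

def solves_latch_task(w_in, w_rec, bias, delay=20):
    """Toy check (hypothetical task): does a single tanh unit still carry the
    sign of its first input after `delay` further steps without input?"""
    for first in (-1.0, 1.0):
        h = math.tanh(w_in * first + bias)
        for _ in range(delay):
            h = math.tanh(w_rec * h + bias)
        if h * first <= 0.5:              # require a clear margin on the right side
            return False
    return True

def guess_weights(max_trials=10_000, scale=5.0, seed=0):
    """Draw weights uniformly from [-scale, scale] until the task is solved.
    Returns (number of trials, weights), or None if no guess succeeded."""
    rng = random.Random(seed)
    for trial in range(1, max_trials + 1):
        weights = [rng.uniform(-scale, scale) for _ in range(3)]
        if solves_latch_task(*weights):
            return trial, weights
    return None

result = guess_weights()
```

When a task has a large region of weight space that solves it, as this toy one does, guessing finds a solution quickly, which is why such tasks say little about a learning algorithm.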

Long Short-Term Memory (LSTM): LSTM was created specifically to address the error back-flow problems encountered by BPTT and RTRL. It does this by enforcing constant error flow through “constant error carrousels” inside special units; multiplicative gate units learn to open and close access to this flow. LSTM itself uses a variant of RTRL, adjusted to properly take into account the altered, multiplicative dynamics introduced by its input and output gates. In experiments on difficult, artificial long-time-lag problems that had not been solved by earlier recurrent network algorithms, LSTM learns “much faster” and achieves “many more successful runs” than RTRL.
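A minimal sketch of one gated memory cell may help to picture this. It assumes a single cell with an input gate and an output gate (no forget gate), uses tanh for both squashing functions, and takes the three net inputs as given numbers rather than computing them from weights; all names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def memory_cell_step(state, net_in, net_out, net_c):
    """One step of a single gated memory cell (assumed form, no forget gate).
    Returns the new internal state and the cell output."""
    y_in = sigmoid(net_in)       # input gate: controls write access to the state
    y_out = sigmoid(net_out)     # output gate: controls read access to the state
    # Constant error carousel: the state feeds itself with a fixed weight of 1.0,
    # so d(new_state)/d(state) = 1 and error passing through is not rescaled.
    new_state = state + y_in * np.tanh(net_c)
    output = y_out * np.tanh(new_state)
    return new_state, output

# Write once with the input gate open, then hold for 1000 steps with it closed.
state, _ = memory_cell_step(0.0, net_in=20.0, net_out=0.0, net_c=2.0)
stored = state
for _ in range(1000):
    state, out = memory_cell_step(state, net_in=-20.0, net_out=20.0, net_c=1.0)
print(stored, state, out)
```

Because the self-connection has weight 1.0 and the closed input gate blocks new writes, the stored value survives the 1000 steps almost unchanged, in contrast to the per-step shrinking or growth that affects an ordinary sigmoid unit.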