What is the Constant Error Carousel?

The Constant Error Carousel (CEC) is a mechanism in Long Short-Term Memory (LSTM) networks that lets gradients (i.e., learning signals) flow unchanged across time steps. It addresses the vanishing gradient problem, a major obstacle in training recurrent neural networks.

Fundamental Idea: The main goal of the Constant Error Carousel is to maintain constant error flow through a self-connected unit. This guarantees that error signals flowing backward in time neither grow nor shrink exponentially, which is a major problem with conventional Back-Propagation Through Time (BPTT) and Real-Time Recurrent Learning (RTRL). Because of this stability, the network can learn and retain information over long periods of time.

Implementation in a Single Unit (Naive Approach)

For a single unit j with a self-connection, an error signal is scaled by the factor f’_j(net_j(t)) * w_jj on each step backward in time, so constant error flow requires this factor to equal 1.0. This is achieved by making the activation function f_j linear (f_j(x) = x) and fixing the self-connection weight w_jj at 1.0. With this configuration, and in the absence of other inputs, the unit’s activation also stays constant over time: y_j(t+1) = f_j(w_jj * y_j(t)) = y_j(t).
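The effect of the scaling factor can be checked numerically. The following is a minimal sketch, not taken from the original text: the helper `backflow_factor`, the choice of 100 time steps, and the net input of 0.0 are all illustrative assumptions. It multiplies f’_j(net_j(t)) * w_jj over many backward steps for a linear unit and, for contrast, for a logistic sigmoid unit.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def backflow_factor(f_prime, w_jj, net_values):
    """Product of f'(net_j(t)) * w_jj over the given time steps.

    This is the total factor by which an error signal is scaled
    when it flows backward through one self-connected unit.
    """
    factor = 1.0
    for net in net_values:
        factor *= f_prime(net) * w_jj
    return factor

# Hypothetical net inputs: 100 time steps, all zero (assumption for illustration).
nets = [0.0] * 100

# CEC configuration: linear activation (derivative 1) and w_jj = 1.0.
linear_factor = backflow_factor(lambda net: 1.0, 1.0, nets)

# Contrast: logistic sigmoid unit with the same self-connection weight.
sigmoid_factor = backflow_factor(
    lambda net: sigmoid(net) * (1.0 - sigmoid(net)), 1.0, nets
)

print(linear_factor)   # stays at 1.0: the error is neither amplified nor damped
print(sigmoid_factor)  # 0.25 ** 100: the error has effectively vanished
```

With the sigmoid unit, each step contributes at most 0.25, so the product shrinks exponentially with the number of steps; the linear unit with weight 1.0 avoids this by construction.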

This “constant error carousel” serves as the foundation for the more intricate LSTM architecture.

Drawbacks of the Naive Approach

For a single unit, the CEC resolves the vanishing/exploding gradient problem; however, when the unit is coupled to other network components, additional problems arise:

Input Weight Conflict: An incoming weight (w_ji) of the CEC unit j must serve two opposing functions: (1) activating j to store particular inputs, and (2) protecting the stored input by preventing j from being switched off by irrelevant later inputs. Because unit j is linear, w_ji may receive contradictory update signals, which makes learning difficult.

Output Weight Conflict: Similar problems arise when an outgoing weight (w_kj) from the CEC unit j has to (1) retrieve j’s content at certain times and (2) keep j from disturbing other units (k) at other times. These conflicts are particularly pronounced with long time lags, when stored information must be protected for extended periods and outputs that are already correct must also be protected from disturbance.

LSTM’s Solution: Memory Cells and Gate Units

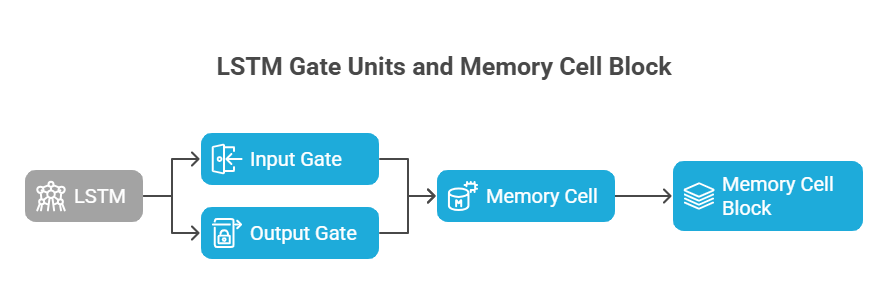

LSTM gets around these issues by adding “gate units” that regulate access to the basic Constant Error Carousel.

Input Gate (in_j): A multiplicative input gate unit is introduced to prevent irrelevant inputs from disturbing the memory contents. By controlling the error flow to the memory cell’s input connections, it learns when to store new data or overwrite old data.

Output Gate (out_j): A multiplicative output gate unit shields other units from the CEC’s currently irrelevant memory contents. It keeps the memory cell c_j from disturbing other units and regulates when its content can be accessed.

By learning which errors to trap in the Constant Error Carousel and when to release them, these gates open and close access to its constant error flow.

A “memory cell block,” which allows for distributed information storage, is formed when several memory cells share the same input and output gates.
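The interaction between the gates and the CEC can be sketched for a single memory cell. This is a minimal illustration under stated assumptions, not a full LSTM: the function name `memory_cell_step`, the use of tanh for both the input and output squashing functions, and the hand-picked net inputs are all hypothetical. The internal state is updated additively with a fixed self-weight of 1.0 (the CEC), the input gate scales what is written, and the output gate scales what is exposed.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def memory_cell_step(s_prev, net_c, net_in, net_out):
    """One forward step of a single gated memory cell (illustrative sketch).

    s_prev  : internal CEC state from the previous step
    net_c   : net input to the cell
    net_in  : net input to the input gate
    net_out : net input to the output gate
    """
    y_in = sigmoid(net_in)    # input gate activation in (0, 1)
    y_out = sigmoid(net_out)  # output gate activation in (0, 1)
    # CEC: the old state is carried over with weight 1.0; new input is gated.
    s = s_prev + y_in * math.tanh(net_c)
    # The cell output is the squashed state, gated by the output gate.
    y_c = y_out * math.tanh(s)
    return s, y_c

# Write: input gate open, output gate closed.
s, y_c = memory_cell_step(0.0, net_c=2.0, net_in=10.0, net_out=-10.0)
stored = s

# 50 distractor steps: both gates closed, so the state is protected
# and the cell does not disturb other units.
for _ in range(50):
    s, y_closed = memory_cell_step(s, net_c=-3.0, net_in=-20.0, net_out=-10.0)

# Read: output gate open, input gate still closed.
s, y_read = memory_cell_step(s, net_c=0.0, net_in=-20.0, net_out=10.0)

print(stored, s)        # state is almost unchanged after the distractors
print(y_closed, y_read) # output is near zero while closed, clearly non-zero on read
```

In a trained network the gate net inputs would be computed from learned weights rather than set by hand; fixing them here just makes the store, protect, and read phases easy to see.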

Error Flow Through the Constant Error Carousel in LSTM

Because LSTM uses a truncated backpropagation learning rule, errors that reach the memory cell’s net inputs (net_cj, net_inj, and net_outj) do not propagate further back in time.

Within the memory cell’s Constant Error Carousel, however, errors are carried backward through earlier internal states without scaling, guaranteeing constant error flow.

Error flow is scaled only where it crosses the gates: backward-flowing errors enter the CEC at the cell output, where they are scaled by the output gate, and leave it toward the cell input, where they are scaled by the input gate. The truncation keeps the error flow through the CEC constant by ensuring that there are no loops through which an error could re-enter a cell it has just left.
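The constant flow can be written out explicitly. The following sketch assumes notation that does not appear in the text above: s_{c_j} for the cell’s internal state, g and h for the input and output squashing functions, y^{in_j} and y^{out_j} for the gate activations, and \approx_{tr} for equality under the truncated learning rule (the net inputs are treated as if they did not depend on earlier states).

```latex
\begin{aligned}
s_{c_j}(t) &= s_{c_j}(t-1) + y^{in_j}(t)\, g\big(net_{c_j}(t)\big), \\
y^{c_j}(t) &= y^{out_j}(t)\, h\big(s_{c_j}(t)\big), \\[4pt]
\frac{\partial s_{c_j}(t)}{\partial s_{c_j}(t-1)} &\approx_{tr} 1, \\[4pt]
\frac{\partial y^{c_j}(t)}{\partial s_{c_j}(t)} &= y^{out_j}(t)\, h'\big(s_{c_j}(t)\big), \\[4pt]
\frac{\partial s_{c_j}(t)}{\partial net_{c_j}(t)} &= y^{in_j}(t)\, g'\big(net_{c_j}(t)\big).
\end{aligned}
```

The third line is the carousel itself: stepping back through the internal state multiplies the error by 1. The last two lines are the only places where a scaling factor appears, once at the output gate and once at the input gate.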

Advantages of the CEC

Bridging Long Time Lags: Constant error backpropagation within memory cells lets LSTM bridge very long time lags, even in the presence of noise, distributed representations, and continuous values.

Efficiency: The LSTM algorithm is local in both space and time. Its update complexity per weight and time step is O(1), and, unlike full BPTT, its storage requirements do not depend on the input sequence length.

As a perfect integrator that can retain data indefinitely, the CEC is the stable core of an LSTM memory cell. The gating mechanisms then regulate when this data is written and read, which is what allows LSTM to address the long-term dependency learning problems of recurrent neural networks.

Disadvantages of the Constant Error Carousel

Risk of Exploding Gradients

Although the CEC helps avoid vanishing gradients, it can lead to exploding gradients if the content of the memory cell grows without bound.

To control the error flow and reduce this risk, LSTMs typically include gating mechanisms (input, forget, and output gates).
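The unbounded growth and the effect of a forget gate can be illustrated with a scalar state. This is a minimal sketch under assumptions: the helper `run_state` is hypothetical, the gate values are held constant rather than learned, and the forget gate is modeled in the commonly used form s(t) = f * s(t-1) + write, where `write` stands for the gated input added at each step.

```python
def run_state(forget, write, steps):
    """Iterate s(t) = forget * s(t-1) + write from s(0) = 0.

    forget = 1.0 corresponds to the pure CEC (self-weight fixed at 1.0);
    forget < 1.0 models a forget gate that is held partly closed.
    """
    s = 0.0
    for _ in range(steps):
        s = forget * s + write
    return s

# Pure CEC with a constant positive write: the state grows linearly without bound.
no_forget = run_state(forget=1.0, write=0.5, steps=1000)

# With a forget gate at 0.9 the state settles near write / (1 - forget) = 5.0.
with_forget = run_state(forget=0.9, write=0.5, steps=1000)

print(no_forget)    # 500.0
print(with_forget)  # close to 5.0
```

The trade-off is that a forget gate below 1.0 also scales the backward error by the same factor at each step, so the error flow through the state is no longer strictly constant while the gate is partly closed.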

Complexity of Gating Mechanisms

LSTMs need gate units to regulate access to the constant error flow. Compared with simpler designs, this increases model complexity, training time, and memory usage.

Longer Training Time

Because of their many components and the need to compute and backpropagate through the memory cells over time, LSTMs with CECs can be more computationally costly and slower to train than simpler designs such as GRUs or ordinary RNNs.