NLP Pipeline Architecture

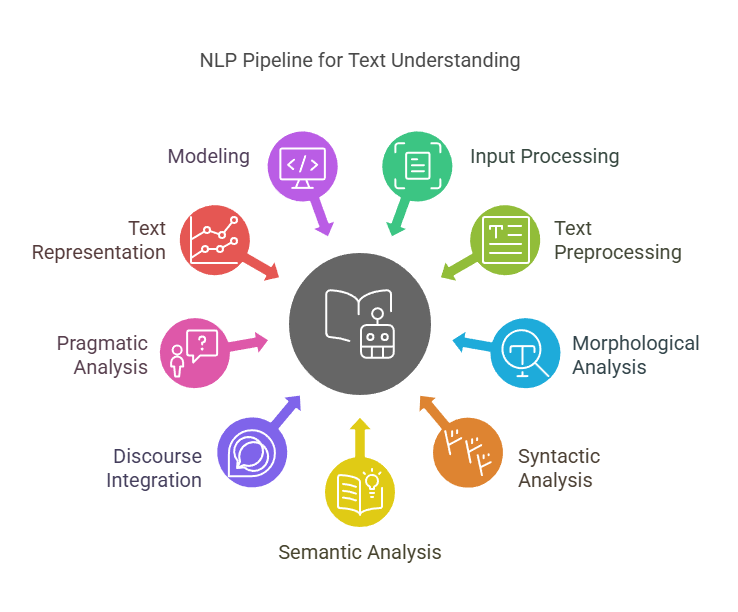

NLP system architecture can be understood through the idea of a pipeline: the sequence of steps usually needed to process natural language. Sophisticated NLP systems frequently have a modular design so that individual components can be improved incrementally.

A general NLP pipeline architecture consists of the following essential elements:

Input Processing/Acquisition: The first step, input processing or acquisition, receives the input, which may be text or speech, and prepares it for further processing. For text, this includes text extraction and cleanup (such as HTML parsing, Unicode normalisation, and spelling correction). For speech, it includes speech recognition.
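As an illustration of text acquisition, the sketch below extracts visible text from HTML and applies Unicode normalisation using only the Python standard library. The function name `acquire_text` and the choice of NFKC normalisation are assumptions for this example; spelling correction is not covered.

```python
import re
import unicodedata
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text from HTML, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()  # convert_charrefs=True by default, so &nbsp; etc. are decoded
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.parts.append(data)


def acquire_text(raw_html: str) -> str:
    """Hypothetical helper: extract text from HTML, then normalise Unicode and whitespace."""
    parser = TextExtractor()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(parser.parts)
    # NFKC folds compatibility characters, e.g. the U+FB01 ligature becomes the letters "fi"
    # and a non-breaking space becomes an ordinary space.
    text = unicodedata.normalize("NFKC", text)
    # Collapse runs of whitespace into a single space.
    return re.sub(r"\s+", " ", text).strip()
```

For example, `acquire_text("<p>The \ufb01rst&nbsp;step</p><script>var x = 1;</script>")` returns `"The first step"`: the script body is dropped, the entity is decoded, and the ligature is normalised.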

Text Preprocessing: Sentence segmentation, tokenisation (breaking the text up into words or sub-word units), noise and stop word removal, and normalisation (such as case folding, stemming, or lemmatisation) are all crucial phases in text preprocessing. In this stage, the raw text is divided into smaller units such as paragraphs, sentences, and words.
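The preprocessing steps above can be sketched as a small chain of functions. This is a deliberately naive version under stated assumptions: sentences are split on `.`, `!` or `?` followed by whitespace, the stop-word list is a tiny hand-picked set, and `crude_stem` is a hypothetical suffix stripper standing in for a real stemmer such as Porter's.

```python
import re

# Tiny illustrative stop-word list (an assumption; real systems use larger lists).
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "and", "to", "in"}


def split_sentences(text: str) -> list[str]:
    # Naive rule: a sentence ends at ., ! or ? followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def tokenize(sentence: str) -> list[str]:
    # Runs of letters, digits and apostrophes become tokens; punctuation is dropped as noise.
    return re.findall(r"[A-Za-z0-9']+", sentence)


def crude_stem(word: str) -> str:
    # Hypothetical stemmer: strip one common English suffix if a stem of 3+ letters remains.
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(text: str) -> list[list[str]]:
    result = []
    for sentence in split_sentences(text):
        tokens = [t.lower() for t in tokenize(sentence)]      # case folding
        tokens = [t for t in tokens if t not in STOP_WORDS]   # stop-word removal
        result.append([crude_stem(t) for t in tokens])        # stemming
    return result
```

Running `preprocess("The parser is tokenizing sentences. It worked!")` gives `[["parser", "tokeniz", "sentence"], ["it", "work"]]`. The truncated `tokeniz` shows why stems are not always real words, which is one reason lemmatisation is sometimes preferred.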

Morphological Analysis: Morphological analysis studies the structure of words, including identifying lexemes and morphemes. It is relatively simple for many languages but complicated for some others, and finite-state methods are commonly applied at this stage.
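The toy analyser below mimics the lexicon-plus-suffix structure that finite-state morphology compiles into an automaton: a word is accepted if it splits into a known stem followed by a suffix that is allowed for that stem's category. It is a sketch, not a compiled transducer; the lexicon, suffix table and feature tags are invented for illustration, and spelling rules (such as "try" becoming "tries") are ignored.

```python
# Hypothetical lexicon of stems and their categories.
STEMS = {"cat": "N", "dog": "N", "walk": "V", "jump": "V"}

# Hypothetical morphotactics: which suffixes may follow each category, with output features.
SUFFIXES = {
    "N": {"": "+N+SG", "s": "+N+PL"},
    "V": {"": "+V", "s": "+V+3SG", "ed": "+V+PAST", "ing": "+V+PROG"},
}


def analyse(word: str) -> list[str]:
    """Return every stem+suffix analysis licensed by the lexicon (possibly none)."""
    analyses = []
    for i in range(1, len(word) + 1):
        stem, suffix = word[:i], word[i:]
        pos = STEMS.get(stem)
        if pos is not None and suffix in SUFFIXES[pos]:
            analyses.append(stem + SUFFIXES[pos][suffix])
    return analyses
```

Here `analyse("walked")` returns `["walk+V+PAST"]`, `analyse("cats")` returns `["cat+N+PL"]`, and `analyse("dogged")` returns `[]` because "ged" is not a licensed noun suffix.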

Syntactic Analysis (Parsing): Syntactic analysis, or parsing, establishes the grammatical structure of a sentence. It checks grammar and word order and makes the relationships between words explicit. Parsing can produce representations such as parse trees and dependency graphs. Two popular approaches are phrase structure analysis and dependency analysis.
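To make phrase structure parsing concrete, here is a minimal CKY parser, one classic algorithm for this task, over a toy grammar in Chomsky normal form. The grammar and lexicon are assumptions for this example; when a sentence is ambiguous, the sketch keeps only the first analysis it finds for each span rather than ranking parses.

```python
from collections import defaultdict

# Toy lexicon and grammar in Chomsky normal form (assumptions for illustration).
LEXICON = {
    "the": {"Det"}, "a": {"Det"},
    "dog": {"N"}, "cat": {"N"}, "park": {"N"},
    "saw": {"V"}, "in": {"P"},
}
BINARY_RULES = [  # (parent, left child, right child)
    ("S", "NP", "VP"),
    ("NP", "Det", "N"),
    ("NP", "NP", "PP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),
    ("PP", "P", "NP"),
]


def cky_parse(words):
    """Return one bracketed parse rooted in S, or None if the grammar rejects the sentence."""
    n = len(words)
    # chart[(i, j)] maps each label covering words[i:j] to a backpointer.
    chart = defaultdict(dict)
    for i, w in enumerate(words):
        for tag in LEXICON.get(w, ()):
            chart[(i, i + 1)][tag] = w
    for length in range(2, n + 1):          # fill longer spans from shorter ones
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):       # every split point inside the span
                for parent, left, right in BINARY_RULES:
                    if left in chart[(i, k)] and right in chart[(k, j)] and parent not in chart[(i, j)]:
                        chart[(i, j)][parent] = (k, left, right)
    if "S" not in chart[(0, n)]:
        return None
    return _build(chart, 0, n, "S")


def _build(chart, i, j, label):
    back = chart[(i, j)][label]
    if isinstance(back, str):  # leaf: the word itself
        return f"({label} {back})"
    k, left, right = back
    return f"({label} {_build(chart, i, k, left)} {_build(chart, k, j, right)})"
```

For `"the dog saw a cat".split()` this returns `(S (NP (Det the) (N dog)) (VP (V saw) (NP (Det a) (N cat))))`, while a scrambled input such as `"dog the saw".split()` returns `None`.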

Semantic Analysis: Semantic analysis extracts meaning from the text. In ontological semantics, for instance, meaning is extracted and represented using an ontology or world model. Word sense disambiguation, which determines which sense of an ambiguous word is intended in a given context, is one semantic analysis task.
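Word sense disambiguation can be illustrated with the simplified Lesk algorithm, which picks the sense whose dictionary gloss shares the most content words with the surrounding sentence. The sense inventory and glosses below are written for this example and are not taken from a real dictionary; practical systems use resources such as WordNet or learned models.

```python
import re

# Hypothetical sense inventory with hand-written glosses.
SENSES = {
    "bank": {
        "bank/finance": "an institution that accepts deposits of money and makes loans",
        "bank/river": "the sloping land beside a body of water such as a river",
    }
}
STOP_WORDS = {"a", "an", "the", "of", "and", "that", "such", "as", "on", "to", "at", "i", "we"}


def content_words(text: str) -> set[str]:
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS}


def simplified_lesk(word: str, sentence: str) -> str:
    """Return the sense whose gloss overlaps most with the sentence (first sense wins ties)."""
    context = content_words(sentence) - {word}
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context & content_words(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense
```

With this inventory, `simplified_lesk("bank", "We sat on the bank of the river and watched the water")` returns `"bank/river"` (overlap: "river", "water"), and `simplified_lesk("bank", "I deposited money at the bank")` returns `"bank/finance"` (overlap: "money").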

Discourse Integration: Discourse integration considers how the meaning of a sentence connects to the surrounding discourse or context as a whole. It includes anaphora resolution, that is, working out what expressions such as pronouns refer back to.
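A very simple approach to anaphora resolution links each pronoun to the most recent preceding noun that agrees with it in gender and number. The sketch below assumes a hypothetical hand-written feature lexicon and already-tokenised input; real resolvers also use syntax, salience and learned models, and this recency heuristic fails on many sentences.

```python
# Hypothetical feature lexicons: (gender, number); "any" matches every gender.
NOUNS = {
    "Maria": ("fem", "sg"),
    "John": ("masc", "sg"),
    "report": ("neut", "sg"),
    "engineers": ("any", "pl"),
}
PRONOUNS = {
    "she": ("fem", "sg"), "her": ("fem", "sg"),
    "he": ("masc", "sg"), "him": ("masc", "sg"),
    "it": ("neut", "sg"),
    "they": ("any", "pl"), "them": ("any", "pl"),
}


def agrees(pron_feats, noun_feats):
    (pg, pn), (ng, nn) = pron_feats, noun_feats
    return pn == nn and (pg == ng or "any" in (pg, ng))


def resolve_pronouns(tokens):
    """Map each pronoun's index to the index of the nearest preceding agreeing noun."""
    links = {}
    for i, tok in enumerate(tokens):
        feats = PRONOUNS.get(tok.lower())
        if feats is None:
            continue
        for j in range(i - 1, -1, -1):  # scan backwards: most recent candidate first
            noun = tokens[j]
            if noun in NOUNS and agrees(feats, NOUNS[noun]):
                links[i] = j
                break
    return links
```

For `"Maria gave John the report and he read it".split()` the result is `{6: 2, 8: 4}`: "he" is linked to "John" (skipping the neuter "report") and "it" to "report".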

Pragmatic Analysis: The last of the linguistic analysis stages in the standard NLP pipeline, pragmatic analysis works out the intended meaning of an utterance in its context.

Text Representation/Feature Engineering: This stage transforms text into numerical representations that machine learning models can use. It may involve hand-crafted feature engineering for classical models or dense representations, such as word embeddings, for neural networks.
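As one example of a classical (sparse) representation, the sketch below computes TF-IDF vectors for tokenised documents. It uses raw term counts and an unsmoothed IDF, log(N / df); libraries often use smoothed or normalised variants, so exact values differ between implementations. Dense word embeddings are not shown.

```python
import math
from collections import Counter


def tfidf_vectors(docs: list[list[str]]) -> tuple[list[str], list[list[float]]]:
    """Return the sorted vocabulary and one TF-IDF vector per tokenised document."""
    vocab = sorted({tok for doc in docs for tok in doc})
    n_docs = len(docs)
    # Document frequency: the number of documents in which each term appears.
    df = Counter(tok for doc in docs for tok in set(doc))
    # Unsmoothed inverse document frequency: idf(t) = log(N / df(t)).
    idf = {tok: math.log(n_docs / df[tok]) for tok in vocab}
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        # Raw term frequency times IDF; terms absent from the document get 0.
        vectors.append([counts[tok] * idf[tok] for tok in vocab])
    return vocab, vectors
```

For `[["nlp", "is", "fun"], ["nlp", "is", "hard"]]`, the vocabulary is `["fun", "hard", "is", "nlp"]`; "nlp" and "is" occur in every document and get weight 0, so only the distinguishing words "fun" and "hard" carry weight (log 2 each).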

Modeling: Modelling is the process of building the computational model, which can range from simple heuristics to complex deep learning models. It covers both creating the model and maintaining it over time.
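At the classical end of this range, a multinomial Naive Bayes text classifier is a common baseline. The `NaiveBayesClassifier` class below is a hypothetical minimal sketch (not a library API) that uses add-one smoothing and ignores words not seen in training.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing over token counts."""

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for tokens, label in zip(docs, labels):
            self.word_counts[label].update(tokens)
        self.vocab = {tok for tokens in docs for tok in tokens}
        return self

    def predict(self, tokens):
        n_docs = sum(self.class_counts.values())
        best_label, best_score = None, float("-inf")
        for label, count in self.class_counts.items():
            total = sum(self.word_counts[label].values())
            # log P(label) + sum of log P(token | label), smoothed over the vocabulary.
            score = math.log(count / n_docs)
            for tok in tokens:
                if tok in self.vocab:  # words never seen in training are skipped
                    score += math.log((self.word_counts[label][tok] + 1) / (total + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label
```

Usage, with a tiny invented training set:

```python
docs = [["great", "film"], ["loved", "it"], ["terrible", "plot"], ["boring", "film"]]
labels = ["pos", "pos", "neg", "neg"]
model = NaiveBayesClassifier().fit(docs, labels)
print(model.predict(["loved", "great", "film"]))  # pos
print(model.predict(["boring", "plot"]))          # neg
```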

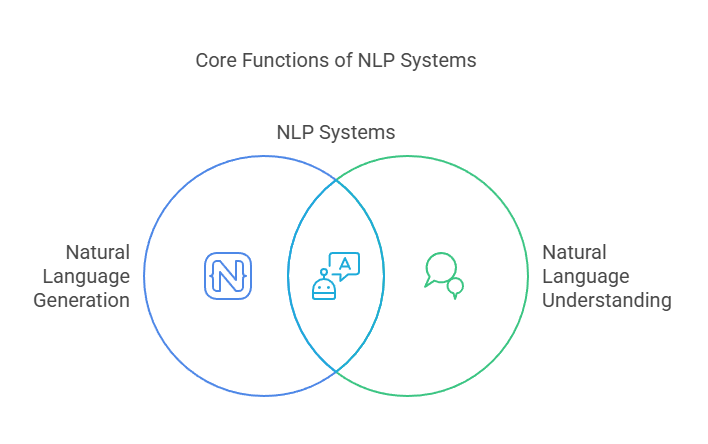

NLP systems are made up of two primary functions: natural language understanding (NLU) and natural language generation (NLG).

- Natural Language Understanding (NLU) converts human language into a machine-readable representation, extracting semantics, emotions, relationships, and keywords from text.

- NLG works in the opposite direction, translating digital information into natural language. It usually involves text planning, sentence planning, and text realisation (how to phrase it). NLU is generally considered harder than NLG.
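The three NLG stages can be sketched as a template-based pipeline. Everything here is an assumption for illustration: the weather record and its fields, the rule that wind is mentioned only at 20 km/h or more, the "two messages per sentence" grouping, and the templates themselves. Modern systems often replace these hand-written stages with neural generation.

```python
# Hypothetical structured input (fields invented for this example).
record = {"city": "Oslo", "temp_c": -3, "condition": "snow", "wind_kmh": 25}


def plan_content(data):
    """Text planning: decide which messages to convey, and in what order."""
    messages = [("condition", data["city"], data["condition"]),
                ("temperature", data["temp_c"])]
    if data["wind_kmh"] >= 20:  # only mention wind when it is notable
        messages.append(("wind", data["wind_kmh"]))
    return messages


def plan_sentences(messages):
    """Sentence planning: group messages into sentences (here, at most two per sentence)."""
    return [messages[i:i + 2] for i in range(0, len(messages), 2)]


def realise(message):
    """Text realisation: choose the words for a single message."""
    kind = message[0]
    if kind == "condition":
        return f"{message[1]} can expect {message[2]}"
    if kind == "temperature":
        return f"temperatures around {message[1]}°C"
    if kind == "wind":
        return f"winds will reach {message[1]} km/h"
    raise ValueError(f"unknown message type: {kind}")


def generate(data):
    sentences = []
    for group in plan_sentences(plan_content(data)):
        clause = " with ".join(realise(m) for m in group)
        sentences.append(clause[0].upper() + clause[1:] + ".")
    return " ".join(sentences)
```

`generate(record)` produces `"Oslo can expect snow with temperatures around -3°C. Winds will reach 25 km/h."`. Keeping the stages separate means, for example, that the wording can be changed in `realise` without touching the content decisions.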

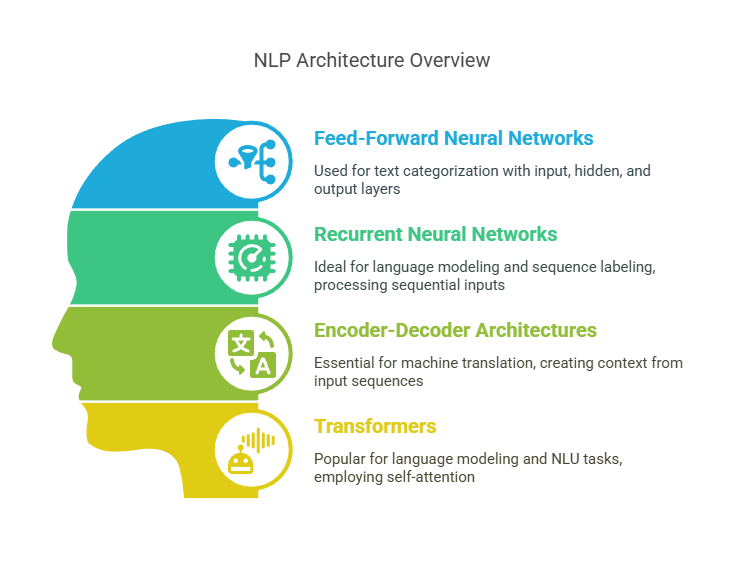

Different NLP methodologies have given rise to different architectural paradigms. Earlier systems were frequently rule-based and symbolic, whereas modern systems rest mainly on statistical machine learning. With the growth of deep learning, new architectures have emerged, including:

- Feed-forward neural networks (FFNNs), used for applications such as text categorisation, consist of an input layer, one or more hidden layers, and an output layer.

- Recurrent neural networks (RNNs) and their variants (LSTM, GRU) process sequential input and benefit applications such as language modelling and sequence labelling. They can represent a variable-length input sequence as a fixed-size vector.

- Encoder-decoder architectures, which frequently use RNNs or Transformers, are used for machine translation and other sequence-to-sequence tasks. The encoder turns an input sequence into an intermediate context representation, and the decoder generates an output sequence from that context.

- Transformers, including models such as BERT and GPT, are popular designs, particularly for language modelling and for achieving state-of-the-art performance on NLU tasks. They rely on self-attention, among other techniques.
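The self-attention mechanism mentioned for Transformers can be shown in a few lines of plain Python. This is a minimal single-head sketch of scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, assuming small list-of-lists matrices; it omits masking, multiple heads, learned biases, and everything else a real Transformer layer contains.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x is a list of token vectors (seq_len x d_model); each projection matrix
    w_q, w_k, w_v is d_model x d_k.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    outputs = []
    for qi in q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        weights = softmax(scores)
        # Each output is a weighted average of all value vectors.
        outputs.append([sum(w * vj[d] for w, vj in zip(weights, v)) for d in range(len(v[0]))])
    return outputs
```

With two one-hot token vectors and identity projections, each token attends most strongly to itself, so `self_attention(x, I, I, I)[0][0]` is larger than `[0][1]`, and each output row sums to 1 because the attention weights do.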

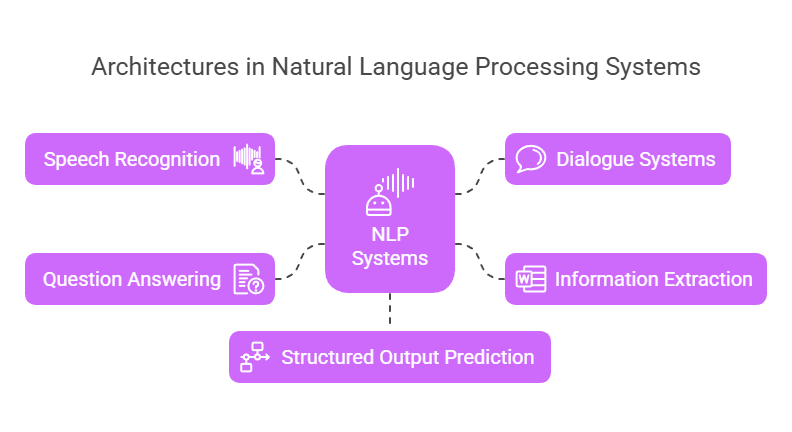

Applications often use task-specific architectures:

- Statistical speech recognition systems are generally built on source-channel models. They combine components such as acoustic and language models, which are frequently compiled into a single unified graph that can be searched.

- Dialogue systems, particularly task-oriented ones, frequently use a dialogue-state or belief-state architecture. Key components are an NLU or Spoken Language Understanding (SLU) module that extracts the user's intent and slot fillers, a dialogue state tracker that maintains the state of the conversation, a dialogue policy that decides the system's next action, and an NLG component that produces the response. Older systems relied on rules and templates, while some newer ones use machine learning for tasks such as slot filling.

- Information extraction (IE) systems use pipelines to convert unstructured text into structured data, such as (entity, relation, entity) tuples. They frequently involve several phases of language processing and pattern recognition that vary by domain. Commercial IE systems may combine rules, lists, and supervised machine learning.

- Question Answering (QA) systems frequently use a retriever-reader design, in which a retriever locates relevant documents and a reader extracts the answer from them. Traditional QA systems, such as IBM Watson, combined rules and feature-based classifiers in hybrid ways.

- Syntactic parsing, Named Entity Recognition (NER), part-of-speech (POS) tagging, and machine translation are all examples of structured output prediction tasks, in which a model predicts sequences, trees, or graphs rather than a single label. Neural network models are commonly used for these tasks, and sequence classification and tagging architectures (for POS tagging and NER) are widely used.
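The structured-prediction idea, choosing the best whole sequence rather than labelling each word independently, can be illustrated with a classical (pre-neural) technique: a hidden Markov model POS tagger decoded with the Viterbi algorithm. The tag set and all probabilities below are invented for illustration, not estimated from any corpus, and the `UNSEEN` floor is an assumed stand-in for proper smoothing.

```python
import math

# Toy HMM parameters, invented for illustration.
TAGS = ["DET", "NOUN", "VERB"]
START = {"DET": 0.6, "NOUN": 0.3, "VERB": 0.1}
TRANS = {
    "DET": {"DET": 0.05, "NOUN": 0.9, "VERB": 0.05},
    "NOUN": {"DET": 0.1, "NOUN": 0.3, "VERB": 0.6},
    "VERB": {"DET": 0.6, "NOUN": 0.3, "VERB": 0.1},
}
EMIT = {
    "DET": {"the": 0.7, "a": 0.3},
    "NOUN": {"dog": 0.4, "walk": 0.3, "park": 0.3},
    "VERB": {"walk": 0.6, "barks": 0.4},
}
UNSEEN = 1e-6  # probability floor for words a tag never emits


def viterbi(words):
    """Return the most probable tag sequence under the HMM, computed in log space."""
    def emit(tag, word):
        return math.log(EMIT[tag].get(word, UNSEEN))

    # best[t] = (log probability of the best path ending in tag t, that path)
    best = {t: (math.log(START[t]) + emit(t, words[0]), [t]) for t in TAGS}
    for word in words[1:]:
        best = {
            t: max(
                (score + math.log(TRANS[prev][t]) + emit(t, word), path + [t])
                for prev, (score, path) in best.items()
            )
            for t in TAGS
        }
    return max(best.values())[1]
```

The ambiguous word "walk" is tagged from context: `viterbi(["the", "walk"])` gives `["DET", "NOUN"]`, while `viterbi(["dog", "walk"])` gives `["NOUN", "VERB"]`. Neural taggers replace the hand-set probabilities with learned scores but often keep a similar decoding step.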

Modularity remains a key ingredient of successful NLP system development. Although current developments favor deep learning and, occasionally, end-to-end methods that do not explicitly use conventional linguistic structures internally, syntax trees and other linguistic representations are still crucial, particularly when training data is scarce. Hybrid systems that combine learning and rules also remain applicable and in use today.