Choosing between probabilistic, knowledge-based, and hybrid methods depends on the complexity of the task, the data available, and how much interpretability is required.

Natural language processing (NLP) uses a variety of methods to let computers understand and process human language. In general, these techniques fall into two categories: probabilistic (statistical or machine learning) and knowledge-based (often rule-based or symbolic). The history and present state of NLP reflect both a shift between these paradigms and a continuing interplay between them.

Knowledge-Based Approaches

Knowledge-based NLP techniques typically rely on manually created rules and linguistic expertise obtained from human specialists. They use finite-state techniques such as finite-state automata and transducers, formalisms such as regular expressions, and formal grammars such as context-free grammars (CFGs) and generative grammar. They build computational models from intricate sets of rules and processes specific to each linguistic component, an approach sometimes rooted in philosophical rationalism.
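To make the finite-state idea concrete, here is a minimal sketch of a deterministic finite-state automaton (DFA) written as an explicit transition table. The toy language it recognises (`baa!`, `baaa!`, and so on) and the names `TRANSITIONS`, `ACCEPTING`, and `accepts` are illustrative choices, not taken from any particular toolkit. The loop also checks the automaton against the equivalent regular expression `baa+!`, since regular expressions and finite-state automata describe the same class of languages.

```python
import re

# Transition table for a DFA over the toy language b a a+ ! ("baa!", "baaa!", ...).
# States: 0 = start, 1 = saw "b", 2 = saw "ba", 3 = saw "baa" (or more a's), 4 = accept.
TRANSITIONS = {
    (0, "b"): 1,
    (1, "a"): 2,
    (2, "a"): 3,
    (3, "a"): 3,  # self-loop: any number of additional a's
    (3, "!"): 4,
}
ACCEPTING = {4}

def accepts(text: str) -> bool:
    """Run the DFA over text; reject as soon as a transition is missing."""
    state = 0
    for ch in text:
        nxt = TRANSITIONS.get((state, ch))
        if nxt is None:
            return False
        state = nxt
    return state in ACCEPTING

# The equivalent regular expression, for comparison.
SHEEP_RE = re.compile(r"baa+!")

for s in ["baa!", "baaaa!", "ba!", "baa", "abaa!"]:
    print(f"{s!r}: dfa={accepts(s)} regex={bool(SHEEP_RE.fullmatch(s))}")
```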

Important traits and methods include:

- Assigning tags or analysing structures using manually created rules derived from linguistic expertise.

- Semantic interpretation through the use of symbolic representations, such as predicate calculus or logic. In computational semantics, variants such as typed lambda calculus are occasionally employed.

- Unification-based (feature-based) grammars rose to prominence in the 1980s, premised on the idea that rules are necessary for manipulating symbolic representations in natural language processing systems.

- Finite-state toolkits are commonly utilised for computational phonology and morphology (sound patterns and word structure), and cascaded finite-state transducers can be used to extract information.
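A hand-written tagger in the spirit of the first bullet might look like the following sketch. The lexicon, the suffix rules, and the tag names (borrowed loosely from the Universal Dependencies tag set) are hypothetical and far smaller than any real rule set. Words are looked up in the lexicon first; otherwise the ordered rules are tried and the first match wins, with `NOUN` as the fallback.

```python
import re

# Hypothetical hand-written lexicon of closed-class words.
LEXICON = {
    "the": "DET", "a": "DET", "an": "DET",
    "in": "ADP", "on": "ADP", "of": "ADP",
    "and": "CCONJ", "is": "AUX", "was": "AUX",
}

# Ordered suffix/shape rules (hypothetical): the first pattern that matches wins.
RULES = [
    (re.compile(r"^\d+(\.\d+)?$"), "NUM"),
    (re.compile(r".+ing$"), "VERB"),
    (re.compile(r".+ed$"), "VERB"),
    (re.compile(r".+ly$"), "ADV"),
    (re.compile(r".+(ous|ful|ive|able)$"), "ADJ"),
    (re.compile(r"^[A-Z][a-z]+$"), "PROPN"),
]

def tag(tokens):
    """Tag each token via lexicon lookup, then ordered rules, then a NOUN default."""
    tagged = []
    for tok in tokens:
        if tok.lower() in LEXICON:
            tagged.append((tok, LEXICON[tok.lower()]))
            continue
        for pattern, label in RULES:
            if pattern.match(tok):
                tagged.append((tok, label))
                break
        else:
            tagged.append((tok, "NOUN"))  # no rule fired
    return tagged

print(tag("The dog was quickly running in Paris".split()))
```

The rules handle this sentence, but they are brittle: `thing` matches the `-ing` rule and is tagged `VERB`. Errors like this are why hand-crafted rule sets are expensive to extend and maintain.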

In knowledge-based question answering, a semantic representation of the question, such as a logical form, is built and then used to query a database of facts.
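The pipeline just described can be sketched in a few lines: hand-written patterns map a question to a logical form, and the logical form is matched against a fact base. The triples in `FACTS`, the two question templates, and the `?x` convention for the unknown argument are all hypothetical; real systems use much richer semantic parsers and query languages.

```python
import re

# Hypothetical fact base stored as (predicate, subject, object) triples.
FACTS = {
    ("capital_of", "Paris", "France"),
    ("capital_of", "Berlin", "Germany"),
    ("borders", "France", "Germany"),
}

# Hand-written patterns mapping question templates to logical forms.
# A logical form is (predicate, subject, object); "?x" marks the unknown.
PATTERNS = [
    (re.compile(r"^what is the capital of (\w+)\?$", re.I),
     lambda m: ("capital_of", "?x", m.group(1))),
    (re.compile(r"^does (\w+) border (\w+)\?$", re.I),
     lambda m: ("borders", m.group(1), m.group(2))),
]

def to_logical_form(question):
    for pattern, build in PATTERNS:
        m = pattern.match(question.strip())
        if m:
            return build(m)
    return None  # no rule covers this question

def answer(question):
    lf = to_logical_form(question)
    if lf is None:
        return "no matching rule"
    pred, subj, obj = lf
    if subj == "?x":  # wh-question: find every subject that satisfies the form
        matches = sorted(s for (p, s, o) in FACTS if p == pred and o == obj)
        return matches or "unknown"
    return (pred, subj, obj) in FACTS  # yes/no question: check the fact directly

print(to_logical_form("What is the capital of France?"))  # ('capital_of', '?x', 'France')
print(answer("What is the capital of France?"))            # ['Paris']
print(answer("Does France border Germany?"))               # True
print(answer("Who wrote Hamlet?"))                          # no matching rule
```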

Knowledge-based methods can offer deep, well-organised representations, but they have drawbacks. In particular, they can struggle to handle the ambiguity and unpredictability of natural language.

Manually creating intricate rules causes a knowledge acquisition bottleneck and can produce systems that perform poorly on naturally occurring text. Many languages have complex morphological phenomena that defy simple prefix/suffix assumptions, which can be problematic for purely finite-state models. Linguistic structure, which is often associated with knowledge-based insights, appears to be especially important when training data is scarce.


Probabilistic Approaches in NLP

Probabilistic approaches aim to learn from data. They include statistical natural language processing, machine learning, and deep learning techniques. Using statistical, pattern recognition, and machine learning methods, this paradigm assumes that language structure can be learnt from large volumes of language-use data. This reflects an empiricist perspective that characterises language as it is actually used (E-language) rather than as a theoretical mental module (I-language). By assigning probabilities to linguistic events, statistical methods avoid categorical judgements about unusual sentences and instead focus on the associations and preferences found across language use as a whole.

Key features and methods include:

- Using statistical models to assign tags or analyse structures based on the likelihood of components occurring. Hidden Markov Models (HMMs) and Conditional Random Fields (CRFs) are common probabilistic models for sequence prediction tasks such as Part-of-Speech (POS) tagging.

- Language modelling, for example with N-gram models, estimates the likelihood of word sequences.

- Machine learning approaches to NLP train models on data and include both supervised and unsupervised learning. Supervised learning is frequently framed as a classification task.

- Generative classifiers model the joint probability of input and label, P(X, Y), typically via P(X|Y) and P(Y), whereas discriminative classifiers directly model the conditional probability of the label given the input, P(Y|X). Discriminative techniques such as Support Vector Machines (SVMs), Logistic Regression, and Perceptrons have been used extensively and often outperform generative methods on a variety of tasks.
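An HMM tagger like the one mentioned in the first bullet is usually decoded with the Viterbi algorithm, which finds the single most probable tag sequence for a sentence. In this minimal sketch the start, transition, and emission probabilities are invented for illustration; a real tagger would estimate them from a tagged corpus. Log-probabilities are used so that long sentences do not underflow, and words missing from every emission table are rejected rather than silently mis-tagged.

```python
import math

TAGS = ["DET", "NOUN", "VERB"]

# Hypothetical, hand-set HMM parameters (not estimated from real data).
START = {"DET": 0.6, "NOUN": 0.3, "VERB": 0.1}              # P(first tag)
TRANS = {                                                    # P(tag_i | tag_{i-1})
    "DET":  {"DET": 0.05, "NOUN": 0.9, "VERB": 0.05},
    "NOUN": {"DET": 0.1,  "NOUN": 0.3, "VERB": 0.6},
    "VERB": {"DET": 0.5,  "NOUN": 0.3, "VERB": 0.2},
}
EMIT = {                                                     # P(word | tag)
    "DET":  {"the": 0.7, "a": 0.3},
    "NOUN": {"dog": 0.4, "cat": 0.3, "barks": 0.1, "runs": 0.2},
    "VERB": {"barks": 0.5, "runs": 0.4, "dog": 0.1},
}

def log(p):
    return math.log(p) if p > 0 else float("-inf")

def viterbi(words):
    for w in words:
        if not any(w in EMIT[t] for t in TAGS):
            raise ValueError(f"unknown word: {w!r}")
    # best[i][t]: log-probability of the best tag path for words[:i+1] ending in tag t
    best = [{t: log(START[t]) + log(EMIT[t].get(words[0], 0.0)) for t in TAGS}]
    back = [{t: None for t in TAGS}]
    for i in range(1, len(words)):
        best.append({})
        back.append({})
        for t in TAGS:
            # Choose the previous tag that maximises path score times transition probability.
            prev = max(TAGS, key=lambda p: best[i - 1][p] + log(TRANS[p][t]))
            best[i][t] = best[i - 1][prev] + log(TRANS[prev][t]) + log(EMIT[t].get(words[i], 0.0))
            back[i][t] = prev
    # Trace back-pointers from the best final tag.
    path = [max(TAGS, key=lambda t: best[-1][t])]
    for i in range(len(words) - 1, 0, -1):
        path.append(back[i][path[-1]])
    return path[::-1]

print(viterbi(["the", "dog", "barks"]))  # ['DET', 'NOUN', 'VERB']
```

Both `dog` and `barks` can be a noun or a verb in this toy model; the transition probabilities are what let Viterbi resolve the ambiguity in context.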

Deep learning techniques use neural networks, including CNNs, LSTMs, GRUs, encoder-decoder models, and transformers (such as BERT and GPT). Unlike earlier statistical models, neural networks do not require the Markov assumption, so they can condition on entire sentences rather than only a few preceding words. Neural word embeddings provide continuous, data-driven vector representations of words.

Statistical parsing uses probability distributions over possible parses to select the most likely structure.

Statistical NLP adopts a usage theory of meaning, which holds that a word's meaning is found in the distribution of contexts in which it is used.
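As a sketch of how statistical parsing ranks competing structures, the code below scores two parses of the classic prepositional-phrase attachment ambiguity "I saw the man with the telescope" under a probabilistic context-free grammar (PCFG), where a tree's probability is the product of the probabilities of the rules it uses. The rule probabilities are hypothetical, and lexical rules (tag to word) are left out because both parses use exactly the same ones, so they would not change the comparison.

```python
# Hypothetical PCFG rule probabilities P(rhs | lhs), limited to the rules needed here.
RULES = {
    ("S", ("NP", "VP")): 1.0,
    ("VP", ("V", "NP")): 0.6,
    ("VP", ("V", "NP", "PP")): 0.4,
    ("NP", ("Det", "N")): 0.5,
    ("NP", ("NP", "PP")): 0.2,
    ("NP", ("Pro",)): 0.3,
    ("PP", ("P", "NP")): 1.0,
}

def tree_prob(tree):
    """Product of rule probabilities in a (label, child, ...) tuple tree."""
    label, *children = tree
    if len(children) == 1 and isinstance(children[0], str):
        return 1.0  # preterminal -> word; lexical probabilities omitted (same in both parses)
    rhs = tuple(child[0] for child in children)
    p = RULES[(label, rhs)]
    for child in children:
        p *= tree_prob(child)
    return p

# PP attached to the verb: the seeing was done with the telescope.
VERB_ATTACH = (
    "S",
    ("NP", ("Pro", "I")),
    ("VP", ("V", "saw"),
           ("NP", ("Det", "the"), ("N", "man")),
           ("PP", ("P", "with"), ("NP", ("Det", "the"), ("N", "telescope")))),
)
# PP attached to the noun: the man has the telescope.
NOUN_ATTACH = (
    "S",
    ("NP", ("Pro", "I")),
    ("VP", ("V", "saw"),
           ("NP", ("NP", ("Det", "the"), ("N", "man")),
                  ("PP", ("P", "with"), ("NP", ("Det", "the"), ("N", "telescope"))))),
)

print(tree_prob(VERB_ATTACH), tree_prob(NOUN_ATTACH))  # roughly 0.03 and 0.009
```

With these made-up numbers the verb attachment wins; a parser trained on a treebank would learn the probabilities instead, and more refined models also condition on the words involved.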
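The usage theory of meaning can be illustrated with count-based distributional vectors: each word is represented by counts of the words appearing within a small window around it, and words used in similar contexts end up with similar vectors. This sketch assumes a made-up five-sentence corpus and a window of two words. It is a simple, non-neural relative of the learned embeddings mentioned above, not a description of how those embeddings are trained.

```python
import math
from collections import Counter, defaultdict

def cooccurrence_vectors(sentences, window=2):
    """Map each word to a Counter of the words seen within +/- window positions."""
    vectors = defaultdict(Counter)
    for sent in sentences:
        tokens = sent.lower().split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors

def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

corpus = [
    "the cat drinks milk",
    "the dog drinks water",
    "the cat chases the mouse",
    "the dog chases the cat",
    "stocks fell on monday",
]
vecs = cooccurrence_vectors(corpus)
print(round(cosine(vecs["cat"], vecs["dog"]), 3))     # → 0.923 (shared contexts)
print(round(cosine(vecs["cat"], vecs["stocks"]), 3))  # → 0.0 (no shared contexts)
```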

Probabilistic methods are robust, degrade gracefully in the presence of errors and unseen data, and generalise well. The parameters of statistical models can often be estimated automatically from text corpora, minimising the human labour required. However, they usually need a large amount of data to train effectively. Complex probabilistic models can successfully handle uncertainty and incompleteness, and they can be just as explanatory as complex non-probabilistic models.
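To illustrate how parameters can be estimated automatically from a corpus, here is a minimal bigram language model using maximum-likelihood estimates, P(w_i | w_{i-1}) = C(w_{i-1}, w_i) / C(w_{i-1}). The three-sentence corpus is made up, and the `<s>`/`</s>` boundary markers are a common convention assumed here. The second query shows why these models need so much data: a plausible sentence gets probability zero because one of its bigrams never occurred in training, which is why practical models add smoothing.

```python
from collections import Counter

def train_bigram(sentences):
    """Count history words and bigrams, padding each sentence with <s> and </s>."""
    histories, bigrams = Counter(), Counter()
    for sent in sentences:
        tokens = ["<s>"] + sent.split() + ["</s>"]
        histories.update(tokens[:-1])            # every token that precedes another one
        bigrams.update(zip(tokens, tokens[1:]))
    return histories, bigrams

def sentence_prob(sentence, histories, bigrams):
    """P(sentence) under the bigram (first-order Markov) assumption, using MLE."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    p = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        if histories[prev] == 0:
            return 0.0                           # unseen history: MLE undefined, treated as zero
        p *= bigrams[(prev, word)] / histories[prev]
    return p

corpus = ["I like green eggs", "I like ham", "Sam likes ham"]
histories, bigrams = train_bigram(corpus)
print(sentence_prob("I like ham", histories, bigrams))            # 2/3 * 2/2 * 1/2 * 2/2, about 0.333
print(sentence_prob("Sam likes green eggs", histories, bigrams))  # 0.0: ("likes", "green") never seen
```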

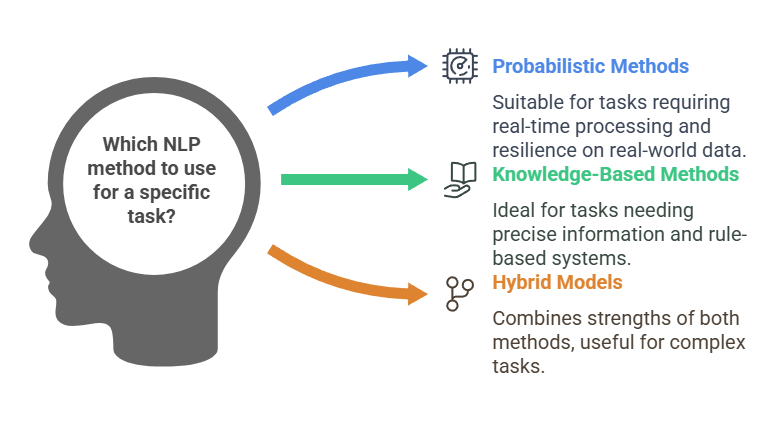

Comparing Probabilistic vs Knowledge-Based Methods vs Hybrid Models

Knowledge-based methods are also commonly known as rule-based methods. Historically, rule-based, symbolic approaches were prevalent, especially during the 1980s. However, difficulties in fields such as automatic speech recognition, where rule-based systems proved inadequate for real-time processing, prompted a shift to data-intensive methods combined with machine learning and an evaluation-led methodology. This shift towards statistical approaches was also driven by the need for greater efficiency and robustness on real-world data and by the difficulty of acquiring precise knowledge for rule-based systems.

The relative importance of machine learning versus linguistic understanding is still debated. Although practically every modern NLP approach involves machine learning, linguistic representations such as syntax trees remain relevant. Some strategies integrate linguistic insights into data-driven approaches by encoding them as features in a machine learning model. Linguistic ideas can also shape the design of machine learning models.
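One common way to fold linguistic insight into a data-driven model is to express it as features. The sketch below maps a token and its context to a sparse feature dictionary: a suffix feature encodes morphological knowledge, capitalisation encodes an orthographic cue for proper nouns, and the previous word gives local syntactic context. The feature names are illustrative; dictionaries in this shape can be passed to a vectoriser such as scikit-learn's `DictVectorizer` and then to any linear classifier.

```python
def token_features(tokens, i):
    """Encode linguistic cues about tokens[i] as a sparse {feature_name: value} dict."""
    word = tokens[i]
    prev = tokens[i - 1].lower() if i > 0 else "<s>"  # "<s>" marks sentence start
    return {
        "bias": 1.0,
        "word.lower=" + word.lower(): 1.0,
        # Morphology: the last three characters approximate inflectional/derivational suffixes.
        "suffix3=" + word[-3:].lower(): 1.0,
        # Orthography: capitalisation is a cue for proper nouns.
        "is_capitalized": float(word[:1].isupper()),
        "is_digit": float(word.isdigit()),
        # Local syntactic context: the preceding word.
        "prev.lower=" + prev: 1.0,
    }

sentence = ["The", "dog", "barked"]
for i in range(len(sentence)):
    print(sentence[i], token_features(sentence, i))
```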

Many real-world NLP systems today use hybrid methodologies that combine rule-based and statistical/machine learning techniques. In information extraction, for example, a hybrid system might use rules for easily identifiable entities and a machine learning model for more complex ones. Commercial Named Entity Recognition (NER) systems frequently combine lists, rules, and supervised machine learning strategically. Combining dictionary-based learning with corpus-based learning has also been suggested as a possible future direction. Results can be improved by using knowledge sources such as online dictionaries and part-of-speech taggers, reflecting a move towards knowledge-rich statistical methods.
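The combination of lists, rules, and machine learning for NER can be sketched as a small pipeline. Everything here is hypothetical: the regex rules, the gazetteer entries, and especially the hand-set `WEIGHTS`, which stand in for parameters a real system would learn with a supervised model such as a CRF or a neural tagger. Rules are consulted first because they are precise, the gazetteer second, and the probabilistic scorer only for what remains.

```python
import math
import re

# High-precision rules for easily identifiable entity types (illustrative patterns).
RULES = [
    (re.compile(r"^\d{4}-\d{2}-\d{2}$"), "DATE"),
    (re.compile(r"^[\w.+-]+@[\w-]+\.[\w.]+$"), "EMAIL"),
]

# Gazetteer (list lookup) with hypothetical entries.
GAZETTEER = {"London": "LOC", "Paris": "LOC", "Acme": "ORG"}

# Hypothetical hand-set weights standing in for a trained logistic-regression model.
WEIGHTS = {"bias": -2.0, "is_capitalized": 1.5, "sentence_initial": -1.0,
           "prev=mr.": 2.0, "prev=dr.": 2.0}

def person_probability(tokens, i):
    """Logistic score that tokens[i] is a person name, from a few simple features."""
    tok = tokens[i]
    feats = {"bias": 1.0,
             "is_capitalized": float(tok[:1].isupper()),
             "sentence_initial": float(i == 0)}
    if i > 0:
        feats["prev=" + tokens[i - 1].lower()] = 1.0
    z = sum(WEIGHTS.get(name, 0.0) * value for name, value in feats.items())
    return 1.0 / (1.0 + math.exp(-z))

def hybrid_ner(tokens, threshold=0.5):
    labels = []
    for i, tok in enumerate(tokens):
        label = "O"  # outside any entity
        for pattern, rule_label in RULES:                     # 1. rules first
            if pattern.match(tok):
                label = rule_label
                break
        else:
            if tok in GAZETTEER:                              # 2. then list lookup
                label = GAZETTEER[tok]
            elif person_probability(tokens, i) > threshold:   # 3. then the statistical scorer
                label = "PER"
        labels.append((tok, label))
    return labels

print(hybrid_ner("Dr. Smith emailed bob@example.com from London on 2024-05-01".split()))
```

Running the rules before the learned component keeps high-precision decisions predictable and interpretable, while the statistical part covers cases, such as unfamiliar names, that no list can anticipate.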