Tokenization is one of the first steps in natural language processing: it divides text into tokens. Tokens can be words, subword units, punctuation marks, or other distinct units. It is the first stage in transforming unstructured text into an analysis-ready format. More precisely, tokenization splits a string into recognizable linguistic units by identifying the boundaries between words in a text’s character sequence. These units are commonly called tokens.

Challenges of tokenization

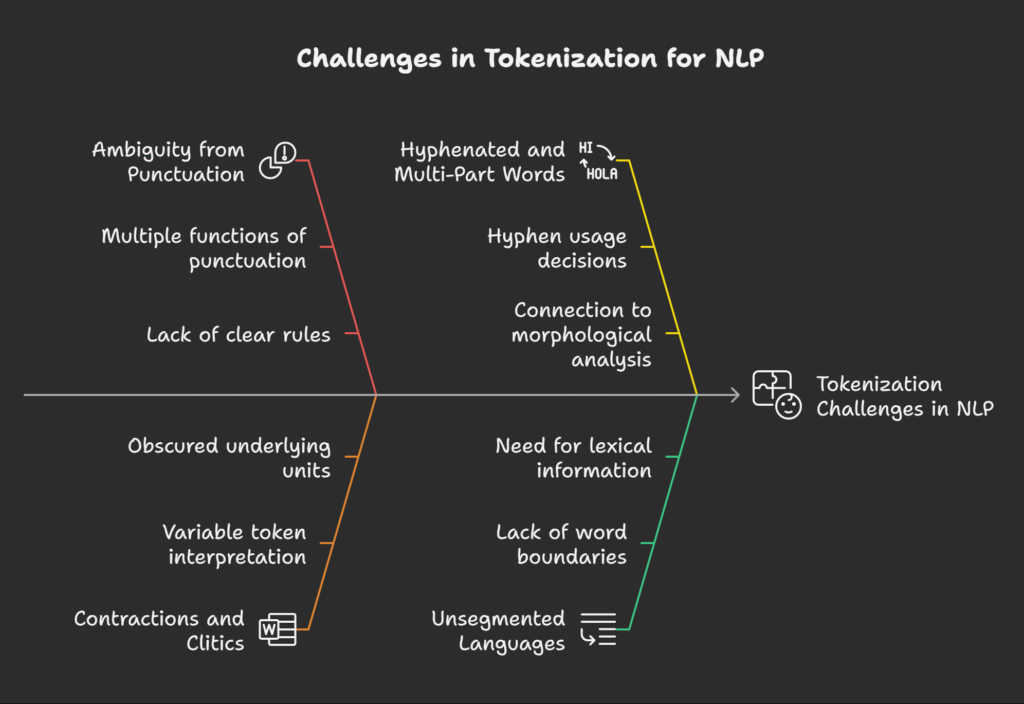

Ambiguity from Punctuation: Periods, commas, quotation marks, apostrophes, and hyphens each serve several functions, so they can create ambiguity even in space-delimited languages like English. Tokenization rules are needed so that each mark is handled correctly for a particular language.

Contractions and Clitics: Forms such as “don’t,” “I’d,” or “John’s” may be treated as one, two, or three tokens, depending on the context. Contractions and other clitics (word parts that cannot stand alone) attach to other words, obscuring the underlying units.
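As a rough illustration of the one-token versus two-token choice, the sketch below splits a trailing clitic off a whitespace-delimited word. The `CLITIC` pattern and the function names are hypothetical and cover only a handful of English forms; this is one possible convention, not a standard one.

```python
import re

# Hypothetical, English-only pattern: a trailing "n't" or an apostrophe clitic.
CLITIC = re.compile(r"(?i)(n't|'(?:s|d|ll|re|ve|m))$")


def split_clitic(word: str) -> list[str]:
    """Split one trailing clitic off a word, if the pattern finds one."""
    word = word.replace("\u2019", "'")  # treat curly and straight apostrophes alike
    match = CLITIC.search(word)
    if match and match.start() > 0:
        return [word[:match.start()], match.group(1)]
    return [word]


def tokenize(text: str, split: bool = True) -> list[str]:
    """Whitespace tokenization, optionally splitting clitics into their own tokens."""
    tokens = []
    for word in text.split():
        tokens.extend(split_clitic(word) if split else [word])
    return tokens


print(tokenize("John's friend said I'd leave"))
print(tokenize("John's friend said I'd leave", split=False))
```

With `split=True` each contraction yields two tokens; with `split=False` it stays a single token. Which behaviour is right depends on what later processing expects.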


The first phase parses a text to identify its structure, including the title, abstract, sections, and paragraphs. For each relevant logical structure, the system then divides sentences into word tokens; this process is called tokenization. It appears rather simple, but (a) abbreviations may cause the system to detect a sentence boundary where none exists, and (b) decisions must be made about capitalisation, hyphenation, special characters, and digits.
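The abbreviation problem in point (a) can be sketched with a naive sentence splitter. The `ABBREVIATIONS` set and the `split_sentences` function are hypothetical and deliberately tiny; a real system would need a far larger list or a trained model.

```python
# Hypothetical abbreviation list, for illustration only.
ABBREVIATIONS = {"dr.", "mr.", "mrs.", "e.g.", "i.e.", "etc."}


def split_sentences(text, abbreviations=ABBREVIATIONS):
    """Split on sentence-final punctuation, skipping known abbreviations."""
    sentences, current = [], []
    for word in text.split():
        current.append(word)
        ends_sentence = word[-1] in ".!?" and word.lower() not in abbreviations
        if ends_sentence:
            sentences.append(" ".join(current))
            current = []
    if current:  # keep any trailing words that lack final punctuation
        sentences.append(" ".join(current))
    return sentences


text = "Dr. Smith arrived. He was late."
print(split_sentences(text))                       # abbreviation-aware
print(split_sentences(text, abbreviations=set()))  # naive: splits after "Dr."
```

Passing an empty abbreviation set shows the failure mode: the period in “Dr.” is taken as a sentence boundary.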

Should “don’t,” “I’d,” and “John’s” count as one, two, or three tokens? In “Afro-American,” is the hyphen kept, and is the word treated as one token or two? There is no clear-cut rule for numbers either: a system may use them as indexing units or simply disregard them. Alternatively, such entities can be indexed by their type, so that tags such as “date” or “currency” replace a specific date or amount of money. Lastly, capital letters are converted to lowercase. The title “Export of cars from France” is therefore interpreted as the word sequence “export,” “of,” “cars,” “from,” “france.”
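The indexing choices for numbers and capitalisation can be sketched as a small normalization step. The regular expressions, the `policy` parameter, and the tag format are all assumptions made for this example; they recognise only ISO-style dates and simple currency amounts.

```python
import re

# Hypothetical, deliberately narrow patterns.
DATE = re.compile(r"^\d{4}-\d{2}-\d{2}$")
CURRENCY = re.compile(r"^[$£€]\d+(?:[.,]\d+)*$")
NUMBER = re.compile(r"^\d+(?:[.,]\d+)*$")


def classify(token):
    """Return an entity type for numeric tokens, or None for ordinary words."""
    if DATE.match(token):
        return "date"
    if CURRENCY.match(token):
        return "currency"
    if NUMBER.match(token):
        return "number"
    return None


def index_terms(text, policy="tag"):
    """Lowercase words; keep, drop, or type-tag numeric tokens per `policy`."""
    terms = []
    for token in text.split():
        kind = classify(token)
        if kind is None:
            terms.append(token.lower())
        elif policy == "keep":
            terms.append(token)
        elif policy == "tag":
            terms.append(f"<{kind}>")
        # policy == "drop": numeric tokens are skipped entirely
    return terms


print(index_terms("Export of cars from France"))
print(index_terms("Paid $1,200 on 2024-01-15", policy="tag"))
print(index_terms("Paid $1,200 on 2024-01-15", policy="drop"))
```

The three policies correspond to the options described above: index numbers as they are, disregard them, or index them by type.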

Hyphenated and Multi-Part Words: With hyphenated words (such as “Afro-American”), one must decide whether to keep the hyphen and whether to treat the word as one token or two. Tokenizing multi-part words, such as hyphenated or agglutinative words, is closely related to morphological analysis and to tokenization in unsegmented languages.

Multiword Expressions (MWEs): Spacing conventions do not always match the tokenization an NLP application needs, so MWEs (such as “put off,” “by the way,” “New York,” “ice cream,” and “kick the bucket”) must be taken into account. A multiword expression dictionary may be required for a tokenization algorithm to treat them as single tokens. For such expressions, defining what counts as a “word” token is considerably harder.
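A dictionary-based approach might look like the following sketch, which greedily merges the longest matching run of tokens. The `MWES` set, the underscore joining convention, and the function name are assumptions for illustration. The sketch handles only contiguous expressions, so it would miss “put it off” and cannot tell a literal “kick the bucket” from the idiom.

```python
# Hypothetical MWE dictionary; entries are lowercase token tuples.
MWES = {
    ("new", "york"),
    ("ice", "cream"),
    ("put", "off"),
    ("by", "the", "way"),
    ("kick", "the", "bucket"),
}


def merge_mwes(tokens, mwes=MWES):
    """Greedily merge the longest dictionary match starting at each position."""
    max_len = max((len(m) for m in mwes), default=1)
    merged = []
    i = 0
    while i < len(tokens):
        # Try the longest candidate first, down to two tokens.
        for n in range(min(max_len, len(tokens) - i), 1, -1):
            candidate = tuple(t.lower() for t in tokens[i:i + n])
            if candidate in mwes:
                merged.append("_".join(tokens[i:i + n]))
                i += n
                break
        else:
            # No multiword match here: keep the single token.
            merged.append(tokens[i])
            i += 1
    return merged


print(merge_mwes("By the way I love ice cream in New York".split()))
```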

Unsegmented Languages: Tokenization is well understood for programming languages and other artificial languages. Artificial languages can be precisely specified to remove lexical and structural ambiguities; natural languages offer no such luxury, since a single character can serve several purposes and their syntax is not precisely defined. The complexity of tokenizing a given natural language depends on a variety of factors. The primary distinction is between tokenization methods for space-delimited languages and methods for languages without space boundaries.

In space-delimited languages, including most European languages, some word boundaries are signalled by whitespace. The delimited character sequences are not always the tokens needed for further processing, both because writing systems can be ambiguous and because different applications require different tokenization conventions. In unsegmented languages such as Chinese and Thai, words are written consecutively with no indication of word boundaries. Tokenizing unsegmented languages therefore requires additional lexical and morphological information. Because a character sequence can be split in more than one way, segmentation can be ambiguous.
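One simple lexicon-based technique is greedy maximum matching, sketched below. To keep the example readable it uses English text with the spaces removed as a stand-in for an unsegmented language; the lexicons and the function name are invented for the illustration, and this is not presented as how any particular Chinese or Thai segmenter works.

```python
def max_match(text, lexicon):
    """Greedy left-to-right segmentation: take the longest lexicon word each time."""
    max_len = max(len(word) for word in lexicon)
    tokens = []
    i = 0
    while i < len(text):
        for n in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + n]
            # Fall back to a single character when nothing in the lexicon matches.
            if n == 1 or piece in lexicon:
                tokens.append(piece)
                i += n
                break
    return tokens


small_lexicon = {"the", "table", "down", "there"}
large_lexicon = small_lexicon | {"theta", "bled", "own"}

print(max_match("thetabledownthere", small_lexicon))
print(max_match("thetabledownthere", large_lexicon))
```

With the small lexicon the string segments as “the table down there”; adding more words makes the greedy choice pick “theta” first and produces “theta bled own there”. Both are valid splits of the same character sequence, which is the ambiguity described above.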

Character Encoding: Different character encodings can use the same numeric values to represent different characters, and this affects tokenization. Even with Unicode’s increasing popularity, this overlap remains a challenge. Both Spanish and English, for instance, are often encoded in the ubiquitous 8-bit encoding Latin-1 (also known as ISO-8859-1). An English or Spanish tokenizer would have to know that punctuation marks and other symbols (such as “¡,” “¿,” “£,” and “©”) are represented by bytes in the range 161–191 in Latin-1.

For each symbol to be handled correctly for that language, tokenization rules would then be necessary. Thus, tokenizers need to be designed with a particular language and encoding in mind.
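The effect of the encoding can be seen by decoding the same bytes in two ways. This is a small sketch using Python's built-in codecs; ISO-8859-5 (Cyrillic) is chosen here only as a contrasting 8-bit encoding and is not mentioned in the discussion above, and the function name is hypothetical.

```python
import unicodedata

# Bytes 161, 191, 163, and 169: all within the 161-191 range discussed above.
RAW = bytes([0xA1, 0xBF, 0xA3, 0xA9])


def char_classes(raw, encoding):
    """Decode bytes and pair each character with its Unicode general category."""
    return [(ch, unicodedata.category(ch)) for ch in raw.decode(encoding)]


# Latin-1: punctuation and symbols (categories starting with P or S).
print(char_classes(RAW, "latin-1"))

# ISO-8859-5: the same bytes decode to uppercase Cyrillic letters (category Lu).
print(char_classes(RAW, "iso8859_5"))
```

A tokenizer that treats these bytes as punctuation would be correct for Latin-1 text and wrong for ISO-8859-5 text, where they are letters inside words.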

Application Dependence: The required tokenization conventions depend on the particular NLP application. For example, language modelling for speech recognition may need tokens that reflect how the text is spoken, while other applications may need to normalize certain token types to a single canonical form.

Dependence on Later Processing Stages: The output a tokenizer should produce may depend on how later processing steps, such as taggers or parsers, are designed to handle particular linguistic phenomena.