What is Tokenization in NLP?

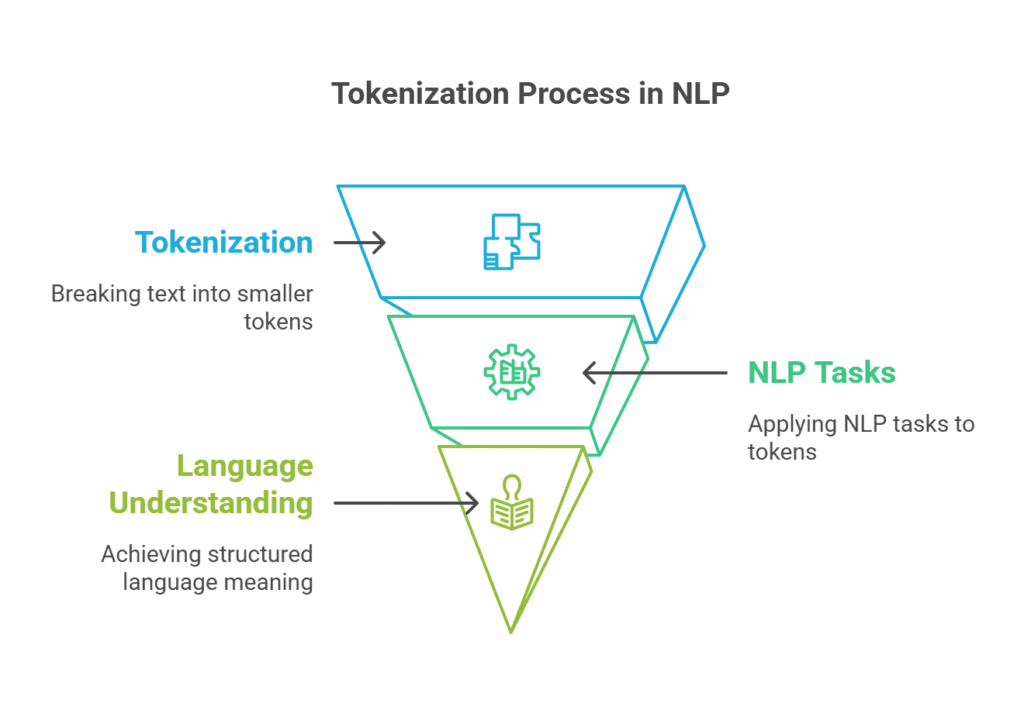

In natural language processing, tokenization breaks text into smaller, easier-to-manage units called tokens. Tokens may be words, subwords, characters, or sentences. Tokenization is essential to many NLP tasks because it lets the system process text in smaller chunks. It underpins tasks such as text classification, parsing, translation, and part-of-speech tagging. When tokenization is done incorrectly, it becomes much harder to capture the structure and meaning of language.

Why is tokenization important in NLP?

Tokenization is usually the first step in an NLP pipeline, and it strongly influences every later step. It is the process of breaking unstructured natural language text into discrete units. Counting how often each token occurs in a document yields a vector representation of that document.

This quickly turns an unstructured text document into a numerical data structure that is ready for machine learning. Tokens can also be used directly by a program to trigger useful responses and actions, or serve as features in a machine-learning pipeline that drive more complex decisions.
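To make the vector-representation idea concrete, here is a minimal Python sketch that turns an already tokenized document into a bag-of-words count vector. The vocabulary, the example tokens, and the `bag_of_words` helper are hypothetical illustrations, not part of any library.

```python
from collections import Counter


def bag_of_words(tokens, vocabulary):
    """Map a token list to a count vector aligned with a fixed vocabulary."""
    counts = Counter(tokens)
    # Each position holds how often the corresponding vocabulary word occurs;
    # tokens outside the vocabulary (here, "and") are simply ignored.
    return [counts[word] for word in vocabulary]


# Hypothetical toy vocabulary and an already tokenized document.
vocabulary = ["nlp", "is", "fun", "tokenization"]
tokens = ["tokenization", "is", "fun", "and", "nlp", "is", "fun"]
vector = bag_of_words(tokens, vocabulary)
print(vector)  # [1, 2, 2, 1]
```

Every document maps to a vector of the same length as the vocabulary, which is the fixed-size numeric input most classical machine-learning models expect.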

How Does Tokenization Work in NLP?

Tokenization is one of the fundamental steps in an NLP pipeline. Tokens may be words, subwords, characters, or sentences, depending on the granularity of the analysis. Segmenting text this way lets an NLP model process language in manageable units and build a deeper understanding of it. Here is how NLP tokenization works:

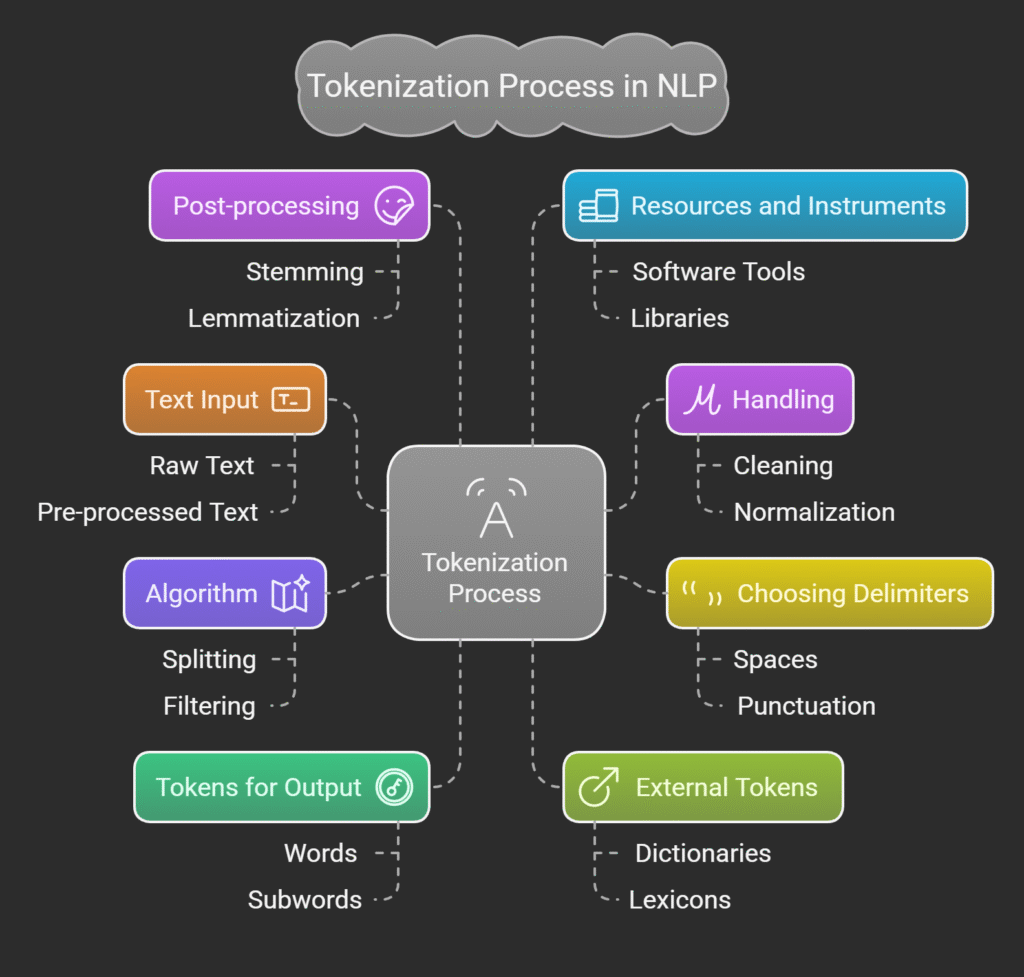

Text Input

Raw text is the input to the process. This could be a single sentence or a paragraph from the document being processed. For example: “NLP encompasses a wide range of subtopics, but it’s crucial to remember that tokenization is necessary for NLP tasks.”

Preprocessing

Before tokenization, text is frequently preprocessed to clean and normalize it so that it is consistent:

- Lowercasing: Converts all characters to lowercase (for instance, “Tokenization” becomes “tokenization”).

- Special Characters: Decides how to handle symbols such as @, #, and &.

- Handling Punctuation: Determines whether punctuation marks are kept as separate tokens or removed.
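A minimal Python sketch of these preprocessing steps, using only the standard `re` module. The `preprocess` function, its `keep_punctuation` flag, and the choice to drop @, #, and & are illustrative assumptions; real pipelines decide per task which characters to keep (for example, hashtags often matter for social-media text).

```python
import re


def preprocess(text, keep_punctuation=True):
    """Normalize raw text before tokenization (a simplified sketch)."""
    # Lowercasing: "Tokenization" and "tokenization" become the same string.
    text = text.lower()
    # Special characters: this sketch simply drops @, #, and &.
    text = re.sub(r"[@#&]", "", text)
    # Punctuation: keep it (so it can become separate tokens later)
    # or strip every remaining non-word, non-space character.
    if not keep_punctuation:
        text = re.sub(r"[^\w\s]", "", text)
    # Collapse the repeated whitespace left behind by the removals.
    return re.sub(r"\s+", " ", text).strip()


print(preprocess("Tokenization is #crucial & fun!"))
# tokenization is crucial fun!
print(preprocess("Tokenization is #crucial & fun!", keep_punctuation=False))
# tokenization is crucial fun
```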

Choosing Delimiters for Tokens

Tokenization relies on patterns or rules that specify where a token begins and ends. Common strategies include:

- Word Tokenization: In English and related languages, text is split on spaces. For instance, “Tokenization is crucial” becomes [“Tokenization”, “is”, “crucial”].

- Subword Tokenization: Words are broken into smaller pieces using techniques such as the widely used Byte Pair Encoding (BPE). For example, “unhappiness” → [“un”, “happiness”].

- Character Tokenization: Every character is treated as a token: “NLP” → [“N”, “L”, “P”].

- Sentence Tokenization: Periods and other sentence-ending punctuation marks are used to identify the boundaries between sentences.
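The word, character, and sentence strategies can be sketched with Python’s standard `re` module. The helper names are hypothetical, and the regular expressions are simplified rules rather than what NLTK or spaCy actually implement. Note that this word tokenizer also splits punctuation into separate tokens, so “I love NLP!” yields [“I”, “love”, “NLP”, “!”].

```python
import re


def word_tokenize(text):
    # Runs of word characters become tokens, and each punctuation mark
    # becomes its own token. Whitespace only separates tokens.
    return re.findall(r"\w+|[^\w\s]", text)


def char_tokenize(text):
    # Every character, including spaces, is a token.
    return list(text)


def sentence_tokenize(text):
    # Split after ., !, or ? when followed by whitespace. This naive rule
    # wrongly splits after abbreviations such as "e.g." followed by a space.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


print(word_tokenize("Tokenization is crucial."))
# ['Tokenization', 'is', 'crucial', '.']
print(char_tokenize("NLP"))
# ['N', 'L', 'P']
print(sentence_tokenize("Tokenization is crucial. It comes first! Does it?"))
# ['Tokenization is crucial.', 'It comes first!', 'Does it?']
```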

Tokenization Algorithms

Different tokenization strategies rely on different algorithms, depending on the need:

- Rule-Based Tokenization: Uses predefined rules, such as splitting on spaces or punctuation.

- Statistical Methods: For languages that do not separate words with spaces, such as Chinese or Japanese, token boundaries are determined using frequency- or probability-based models.

- Machine Learning Models: Some systems train models to recognize token boundaries for challenging languages or specialized tasks.
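To illustrate the statistical approach, the following Python sketch segments text that has no spaces by choosing the split that maximizes the product of unigram word probabilities, found with dynamic programming. An English string with its spaces removed stands in for Chinese or Japanese text, and the word counts and the unknown-word penalty are made up for the example; a real segmenter would estimate its probabilities from a large corpus.

```python
import math

# Hypothetical unigram counts standing in for corpus statistics.
UNIGRAM_COUNTS = {"the": 50, "cat": 10, "sat": 8, "on": 30, "mat": 5, "at": 20, "he": 15}
TOTAL = sum(UNIGRAM_COUNTS.values())


def word_log_prob(word):
    # Known words get their relative frequency. Unknown strings get a small
    # probability that shrinks with length, so known words are preferred.
    if word in UNIGRAM_COUNTS:
        return math.log(UNIGRAM_COUNTS[word] / TOTAL)
    return math.log(1.0 / (TOTAL * 10 ** len(word)))


def segment(text, max_word_len=10):
    """Return the most probable split of `text` under a unigram model."""
    # best[i] = (log probability, tokens) of the best segmentation of text[:i]
    best = [(0.0, [])] + [(-math.inf, [])] * len(text)
    for end in range(1, len(text) + 1):
        for start in range(max(0, end - max_word_len), end):
            piece = text[start:end]
            score = best[start][0] + word_log_prob(piece)
            if score > best[end][0]:
                best[end] = (score, best[start][1] + [piece])
    return best[len(text)][1]


print(segment("thecatsatonthemat"))
# ['the', 'cat', 'sat', 'on', 'the', 'mat']
```

Working in log probabilities turns the product of probabilities into a sum and avoids numerical underflow on long inputs.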

Output Tokens

Tokenization produces a sequence of tokens that can be processed further. For example:

- Word tokenization of the phrase “I love NLP!” outputs [“I”, “love”, “NLP”, “!”].

- Subword tokenization of the word “unpredictable” outputs [“un”, “predict”, “able”].
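The subword example can be reproduced with a greedy longest-match-first tokenizer, the strategy WordPiece uses when splitting a word (BPE instead applies learned merge rules). This Python sketch is simplified: the toy vocabulary is assumed rather than learned, word-internal pieces carry no “##” marker, and uncovered characters fall back to single-character tokens instead of an unknown-token symbol.

```python
def subword_tokenize(word, vocab):
    """Greedy longest-match-first subword split (WordPiece-style, simplified)."""
    tokens = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first and shrink it
        # until it matches a vocabulary entry.
        end = len(word)
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:
            # Nothing in the vocabulary matches here: emit one character
            # so the loop always makes progress.
            end = start + 1
        tokens.append(word[start:end])
        start = end
    return tokens


# Hypothetical toy vocabulary; real vocabularies are learned from a corpus.
VOCAB = {"un", "predict", "able", "pre", "dict"}
print(subword_tokenize("unpredictable", VOCAB))
# ['un', 'predict', 'able']
```

Because the longest match wins, “predict” is chosen over the shorter entry “pre” even though both are in the vocabulary.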

Post-processing

After tokenization, the tokens may be processed further to meet specific requirements:

- Stop Word Removal: Eliminates common but uninformative words such as “is” and “the”.

- Stemming or Lemmatization: Reduces words to their base or root forms (for example, “running” → “run”).
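Both post-processing steps can be sketched in a few lines of Python. The stop-word list is a deliberately tiny hypothetical one, and `naive_stem` is a toy suffix stripper written for this example; it is not the Porter stemmer or a lemmatizer, both of which handle far more cases.

```python
# Hypothetical, deliberately tiny stop-word list.
STOP_WORDS = {"is", "the", "a", "an", "and", "of", "to"}


def remove_stop_words(tokens):
    # Case-insensitive check, so "The" is dropped as well as "the".
    return [t for t in tokens if t.lower() not in STOP_WORDS]


def naive_stem(token):
    """Toy suffix-stripping stemmer; far cruder than the Porter algorithm."""
    for suffix in ("ing", "ed", "s"):
        # Only strip if at least three characters remain.
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            stem = token[: -len(suffix)]
            # After "ing"/"ed", undo a doubled final consonant
            # ("running" -> "runn" -> "run") but keep ll, ss, zz ("filling" -> "fill").
            if suffix != "s" and stem[-1] == stem[-2] and stem[-1] not in "aeiouslz":
                stem = stem[:-1]
            return stem
    return token


tokens = ["The", "dog", "is", "running", "in", "the", "parks"]
filtered = remove_stop_words(tokens)
print(filtered)                           # ['dog', 'running', 'in', 'parks']
print([naive_stem(t) for t in filtered])  # ['dog', 'run', 'in', 'park']
```

“in” survives only because it is missing from the tiny stop-word list; the lists shipped with libraries such as NLTK and spaCy are much longer.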

Tools and Libraries

Many libraries include built-in tokenizers:

- NLTK: Simple tokenizers for words and sentences.

- spaCy: Fast, language-specific tokenization.

- Hugging Face Transformers: Subword tokenizers designed for modern transformer-based NLP models.

By providing structured tokens as the basis for tasks such as sentiment analysis, translation, and text generation, tokenization enables natural language processing (NLP) systems to analyze and understand language.