In the ever-expanding realm of digital communication, the ability of machines to recognize similarities between texts is essential to how we interact with technology. Text similarity in NLP is the task of measuring how similar two pieces of text are, either syntactically or semantically. It is a fundamental idea in Natural Language Processing (NLP) and the foundation of many intelligent applications, including recommendation systems, chatbots, plagiarism checkers, and search engines.

What Is Text Similarity in NLP?

Text similarity measures the semantic and syntactic similarity between two texts. Depending on the goal, the comparison may involve two words, phrases, sentences, or whole documents. The fundamental difficulty is that human language is rich and ambiguous: two texts may look different yet mean the same thing, or look alike yet mean different things.

As an example:

- Sentence A: “The cat is sitting on the mat.”

- Sentence B: “A cat is sleeping on the rug.”

Although the two sentences use different words, their meanings are similar. A strong NLP model should be able to recognize this semantic resemblance.

Types of Text Similarity in NLP

Lexical Similarity

This approach focuses on surface-level features such as matching words, n-grams, and character sequences. It is fast and simple, but it does not capture the underlying meaning.

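- Jaccard Similarity: Measures the overlap between the word sets of two texts, as the size of their intersection divided by the size of their union.

- Cosine Similarity: Measures how similar the term-frequency vectors of two texts are, as the cosine of the angle between them.

- Levenshtein Distance (Edit Distance): Counts the minimum number of single-character insertions, deletions, or substitutions needed to turn one string into another.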
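To make the three lexical measures concrete, here is a minimal Python sketch using only the standard library. The tokenizer (lowercasing plus a simple regular expression) and the function names are illustrative choices, not a standard API; libraries differ in how they tokenize and normalise text, so their scores may not match these exactly.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens (a deliberately simple tokenizer)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def jaccard_similarity(a: str, b: str) -> float:
    """Size of the intersection of the two word sets divided by the size of their union."""
    set_a, set_b = set(tokenize(a)), set(tokenize(b))
    if not set_a and not set_b:
        return 1.0  # treat two empty texts as identical
    return len(set_a & set_b) / len(set_a | set_b)


def cosine_similarity_tf(a: str, b: str) -> float:
    """Cosine of the angle between the raw term-frequency vectors of the two texts."""
    tf_a, tf_b = Counter(tokenize(a)), Counter(tokenize(b))
    dot = sum(tf_a[t] * tf_b[t] for t in tf_a.keys() & tf_b.keys())
    norm_a = math.sqrt(sum(c * c for c in tf_a.values()))
    norm_b = math.sqrt(sum(c * c for c in tf_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # deletion
                curr[j - 1] + 1,           # insertion
                prev[j - 1] + (ca != cb),  # substitution (free if the characters match)
            ))
        prev = curr
    return prev[-1]


if __name__ == "__main__":
    s1 = "The cat is sitting on the mat."
    s2 = "A cat is sleeping on the rug."
    print(f"Jaccard: {jaccard_similarity(s1, s2):.3f}")
    print(f"Cosine (TF): {cosine_similarity_tf(s1, s2):.3f}")
    print("Levenshtein('kitten', 'sitting'):", levenshtein("kitten", "sitting"))  # 3
```

On a paraphrase pair like the cat example, both word-overlap scores land well below 1 even though the sentences mean nearly the same thing, which is the limitation of lexical methods in a nutshell.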

Semantic Similarity

Here the focus is on what the text means. Semantic similarity goes beyond word matching and takes context into account.

- Word Embeddings (Word2Vec, GloVe): Represent words as dense vectors that capture semantic meaning learned from context.

- Sentence Embeddings (InferSent, Universal Sentence Encoder): Extend this idea to whole sentences or paragraphs, encoding each as a single vector.

- Transformers (BERT, RoBERTa, SBERT): Use attention mechanisms and contextual embeddings to identify semantic similarity with high accuracy.
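Averaging word vectors (mean pooling) is one of the simplest ways to turn word embeddings into a sentence embedding. It is shown here as a baseline, not as a description of how InferSent, the Universal Sentence Encoder, or SBERT work internally. The three-dimensional vectors in `TOY_EMBEDDINGS` are hypothetical, hand-picked values for illustration; real Word2Vec or GloVe vectors are learned from large corpora and have far more dimensions.

```python
import math

# Hypothetical 3-dimensional word vectors, hand-picked for illustration only.
# Real Word2Vec or GloVe vectors are learned from data and are much larger.
TOY_EMBEDDINGS: dict[str, list[float]] = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.85, 0.15, 0.05],
    "mat": [0.1, 0.8, 0.1],
    "rug": [0.15, 0.75, 0.1],
    "sitting": [0.2, 0.1, 0.9],
    "sleeping": [0.25, 0.05, 0.85],
    "stock": [-0.7, 0.1, -0.3],
    "market": [-0.6, 0.2, -0.4],
}


def sentence_vector(tokens: list[str]) -> list[float]:
    """Mean-pool the vectors of known tokens; unknown tokens are skipped."""
    vectors = [TOY_EMBEDDINGS[t] for t in tokens if t in TOY_EMBEDDINGS]
    if not vectors:
        return [0.0, 0.0, 0.0]
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two dense vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return 0.0 if norm_u == 0 or norm_v == 0 else dot / (norm_u * norm_v)


a = sentence_vector(["cat", "sitting", "mat"])
b = sentence_vector(["kitten", "sleeping", "rug"])  # shares no words with a
c = sentence_vector(["stock", "market"])
print(f"cat/mat vs kitten/rug: {cosine(a, b):.3f}")      # high
print(f"cat/mat vs stock market: {cosine(a, c):.3f}")    # much lower
```

The two cat sentences share no words, yet their averaged vectors point in almost the same direction, while the unrelated finance phrase scores much lower. Mean pooling ignores word order and context, which is one reason transformer-based encoders tend to do better.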

Key Techniques and Tools

- TF-IDF (Term Frequency-Inverse Document Frequency): A statistical weighting scheme that reflects how important a word is to a document relative to a collection of documents. It is often combined with cosine similarity.

- Word2Vec and GloVe: These models learn vector representations of words from the contexts in which the words appear in large corpora.

- BERT (Bidirectional Encoder Representations from Transformers): Developed by Google, BERT interprets each word in light of both its left and right neighbours, which lets it capture the meaning of a word within its full context.

- SBERT (Sentence-BERT): An extension of BERT tailored to sentence-similarity problems. It uses a siamese network structure to produce sentence embeddings that can be compared directly with cosine similarity, making similarity computation both accurate and efficient.

- SimHash and MinHash: These hashing techniques compress documents into compact fingerprints that allow fast similarity comparisons, which makes them useful for near-duplicate detection and large-scale document deduplication.
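The sketch below pairs TF-IDF with cosine similarity and then estimates Jaccard similarity with MinHash. Several assumptions apply: the IDF formula is the plain `log(N / df)` variant (libraries such as scikit-learn use a smoothed variant by default, so their weights differ), the shingle size `k=3` and the 128 signature slots are arbitrary, and seeding `hashlib.blake2b` with a prefix stands in for a family of independent random hash functions. SimHash is not shown.

```python
import hashlib
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9']+", text.lower())


def tfidf_vectors(docs: list[str]) -> list[dict[str, float]]:
    """Weight each term by its raw count times log(N / document frequency)."""
    token_lists = [tokenize(d) for d in docs]
    n_docs = len(docs)
    df = Counter(term for tokens in token_lists for term in set(tokens))
    idf = {term: math.log(n_docs / count) for term, count in df.items()}
    return [{t: c * idf[t] for t, c in Counter(tokens).items()} for tokens in token_lists]


def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors stored as term -> weight dicts."""
    dot = sum(w * v[t] for t, w in u.items() if t in v)
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return 0.0 if norm_u == 0 or norm_v == 0 else dot / (norm_u * norm_v)


def shingles(text: str, k: int = 3) -> set[str]:
    """Overlapping character k-grams of the lowercased text (never empty)."""
    s = text.lower()
    return {s[i:i + k] for i in range(max(len(s) - k + 1, 1))}


def minhash_signature(items: set[str], num_hashes: int = 128) -> list[int]:
    """For each seeded hash function, keep the minimum hash value over the set."""
    signature = []
    for seed in range(num_hashes):
        signature.append(min(
            int.from_bytes(hashlib.blake2b(f"{seed}:{x}".encode(), digest_size=8).digest(), "big")
            for x in items
        ))
    return signature


def estimated_jaccard(sig_a: list[int], sig_b: list[int]) -> float:
    """The fraction of slots where the minima agree approximates the Jaccard similarity."""
    return sum(x == y for x, y in zip(sig_a, sig_b)) / len(sig_a)


if __name__ == "__main__":
    docs = ["the cat sat on the mat", "the cat sat on the rug", "stock markets fell sharply today"]
    vecs = tfidf_vectors(docs)
    print(f"TF-IDF cosine, doc0 vs doc1: {cosine(vecs[0], vecs[1]):.3f}")
    print(f"TF-IDF cosine, doc0 vs doc2: {cosine(vecs[0], vecs[2]):.3f}")

    s1 = shingles("The quick brown fox jumps over the lazy dog")
    s2 = shingles("The quick brown fox jumped over the lazy dog")
    print(f"exact Jaccard: {len(s1 & s2) / len(s1 | s2):.3f}")
    print(f"MinHash estimate: {estimated_jaccard(minhash_signature(s1), minhash_signature(s2)):.3f}")
```

With this IDF variant, a term that occurs in every document gets weight zero and contributes nothing to the similarity. MinHash trades a small, tunable estimation error for compact fixed-size signatures that can be compared without revisiting the original documents.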

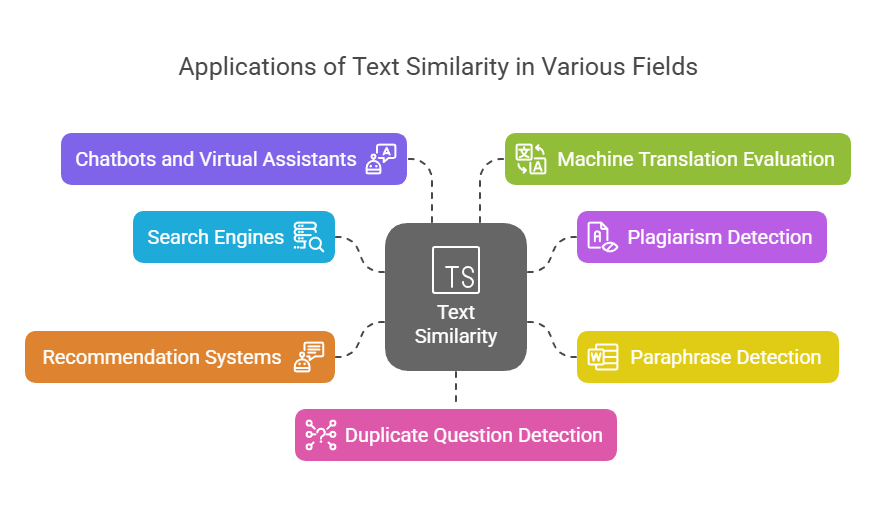

Applications of Text Similarity in NLP

- Search Engines: Search engines such as Google compare user queries with indexed content using text similarity in order to rank results. Semantic search powered by transformer models has significantly improved search relevance.

- Plagiarism Detection: Similarity detection tools such as Turnitin compare submissions against large databases to find text that has been copied or paraphrased.

- Paraphrase Detection: Systems use text similarity to determine whether two sentences express the same idea in different words. This is important for content monitoring, journalism, and education.

- Recommendation Systems: By analysing the similarity of reviews, descriptions, and metadata, services such as Netflix and Spotify can suggest comparable films, TV series, or music.

- Chatbots and Virtual Assistants: The natural language processing (NLP) engines behind assistants such as Alexa and Siri use similarity matching to understand user intent and retrieve relevant replies.

- Machine Translation Evaluation: Metrics such as BLEU (and ROUGE, which is mainly used for summarisation) score machine-generated text against human reference texts by measuring n-gram overlap.

- Duplicate Question Detection: Platforms such as Quora and Stack Overflow use semantic similarity to identify and merge duplicate questions, improving user experience and content quality.
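As a rough illustration of how BLEU measures overlap, the function below computes its modified (clipped) n-gram precision, in which a candidate n-gram earns credit at most as many times as it appears in any single reference. This is only one ingredient: full BLEU combines these precisions over several n-gram orders with a geometric mean and a brevity penalty, and ROUGE is a separate, recall-oriented family of metrics. The function names are illustrative.

```python
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Count the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def clipped_precision(candidate: list[str], references: list[list[str]], n: int) -> float:
    """BLEU-style modified n-gram precision: each candidate n-gram is credited
    at most as many times as it appears in any single reference."""
    cand_counts = ngrams(candidate, n)
    if not cand_counts:
        return 0.0
    # For every n-gram, the highest count it reaches in any one reference.
    max_ref_counts: Counter = Counter()
    for ref in references:
        for gram, count in ngrams(ref, n).items():
            max_ref_counts[gram] = max(max_ref_counts[gram], count)
    clipped = sum(min(count, max_ref_counts[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())


references = ["the cat is on the mat".split(), "there is a cat on the mat".split()]
print(clipped_precision(["the"] * 7, references, 1))  # 2/7
```

Clipping is what stops a degenerate output such as seven repetitions of “the” from earning a perfect unigram precision: no single reference contains “the” more than twice, so the candidate scores 2 out of 7.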

Challenges in Text Similarity in NLP

- Contextual Variance: A single word can have several meanings depending on the context. For instance, “bank” can refer to a riverbank or a financial institution. Conventional models, such as bag-of-words methods or static word embeddings that assign one vector per word, often fail to capture this distinction.

- Domain Sensitivity: A similarity model trained on medical texts may not perform well on legal documents. Fine-tuning and customisation are often necessary.

- Multilingual Complexity: Comparing texts across languages (cross-lingual similarity) is difficult and requires either translation or specialised multilingual models such as mBERT or XLM-R.

- Computational Cost: Sophisticated models such as BERT are resource-intensive, which makes real-time similarity matching difficult in large-scale applications.

- Subjectivity: Perceptions of similarity vary from person to person. Two annotators may disagree on how similar two sentences are, which makes it hard to establish ground truth for training datasets.
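To see why computational cost grows so quickly, consider finding the most similar pair in a collection of $n$ sentences with a model that must score every pair jointly, as a BERT cross-encoder does. The number of unordered pairs grows quadratically; for 10,000 sentences:

$$
\binom{n}{2} = \frac{n(n-1)}{2}, \qquad \binom{10\,000}{2} = \frac{10\,000 \times 9\,999}{2} = 49\,995\,000
$$

That is roughly 50 million forward passes through a large model. Encoding each sentence once into a vector, as SBERT does, cuts the expensive model work to $n$ passes and leaves only cheap vector comparisons.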

New Developments

The emergence of transformer-based models has transformed how text similarity problems are tackled. Fine-tuning BERT on datasets such as STS-B (Semantic Textual Similarity Benchmark) and applying contrastive learning techniques have greatly improved performance.
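Contrastive fine-tuning pulls the embeddings of matching sentence pairs together and pushes non-matching pairs apart. A common formulation is an InfoNCE-style loss with in-batch negatives, sketched below for one batch of precomputed vectors. It computes only the loss value: in real training the vectors come from the encoder being fine-tuned and a framework such as PyTorch backpropagates through them. The temperature of 0.05 and the toy two-dimensional vectors are illustrative assumptions, not settings taken from any particular paper.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    return dot / (math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v)))


def in_batch_contrastive_loss(
    anchors: list[list[float]], positives: list[list[float]], temperature: float = 0.05
) -> float:
    """InfoNCE-style loss: anchor i should be closest to positive i, and the other
    positives in the batch act as negatives. Returns the mean loss over the batch."""
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [cosine(anchor, p) / temperature for p in positives]
        max_logit = max(logits)  # subtract the max before exponentiating, for stability
        log_sum_exp = max_logit + math.log(sum(math.exp(x - max_logit) for x in logits))
        total += log_sum_exp - logits[i]  # negative log-softmax probability of the true pair
    return total / len(anchors)


anchors = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[0.9, 0.1], [0.1, 0.9]]   # each positive matches its own anchor
swapped = [[0.1, 0.9], [0.9, 0.1]]   # each positive matches the other anchor
print(f"aligned batch: {in_batch_contrastive_loss(anchors, aligned):.4f}")
print(f"swapped batch: {in_batch_contrastive_loss(anchors, swapped):.4f}")
```

The loss is small when every anchor is most similar to its own positive and grows when another item in the batch is closer, which is the signal that reshapes the embedding space during fine-tuning.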

OpenAI also offers dedicated embedding models whose vectors are useful for information retrieval, clustering, and semantic similarity tasks. Likewise, Google’s Universal Sentence Encoder and Facebook’s LASER are advancing contextual and multilingual understanding.

Conclusion

Text similarity is a fundamental problem in natural language processing (NLP), and it enables a variety of intelligent applications that bridge the gap between human language and machine understanding. Conventional techniques still have a role in lightweight applications, but deep learning and contextual embeddings are the way forward. As models become more effective and interpretable, text similarity should enable more natural, human-like interactions with technology.