Unigrams in NLP

A unigram, often called a 1-gram, is a single word (more generally, a single token). The sentence “I am learning NLP.” contains the unigrams “I”, “am”, “learning”, and “NLP”. Unigrams are the most basic type of n-gram, where an n-gram is a contiguous sequence of n words.
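A minimal Python sketch of how unigrams fall out of a general n-gram extractor. It assumes naive whitespace tokenization with the final period dropped; real tokenizers treat punctuation more carefully. The helper name `ngrams` is illustrative only.

```python
def ngrams(tokens, n):
    """Return every run of n consecutive tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


tokens = "I am learning NLP".split()  # naive whitespace tokenization
print(ngrams(tokens, 1))  # [('I',), ('am',), ('learning',), ('NLP',)]
print(ngrams(tokens, 2))  # [('I', 'am'), ('am', 'learning'), ('learning', 'NLP')]
```

With n = 1 each "sequence" is a single word, which is exactly the unigram case.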

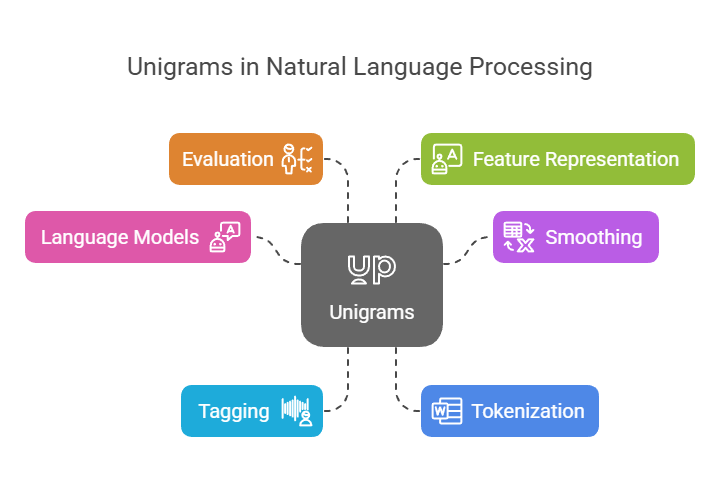

Unigrams are used in NLP in the following ways:

Language Models: The simplest language models are unigram models. A unigram language model ignores the preceding context entirely and predicts a word’s probability from its frequency in a corpus alone. This probability, P(w), can be estimated with the Maximum Likelihood Estimate: the count of w divided by the total number of word tokens in the corpus, P(w) = C(w) / N. Although the model disregards word order, the unigram distribution is still useful, because most words in a sentence are common words. Sampling from a unigram language model means choosing words in proportion to their frequency: each word occupies a share of the probability space [0, 1) proportional to its probability, and a uniformly drawn random number selects the word whose share it falls in.
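The estimate and the sampling procedure can be sketched in a few lines of Python. This is an illustrative sketch, not a reference implementation: the function names and the toy corpus are hypothetical, no smoothing is applied (so unseen words get probability 0), and sampling builds cumulative probability boundaries and uses `bisect` to find the interval a uniform random number lands in.

```python
import random
from bisect import bisect_right
from collections import Counter
from itertools import accumulate


def train_unigram(tokens):
    """Maximum likelihood estimate: P(w) = count(w) / total number of tokens."""
    counts = Counter(tokens)
    total = sum(counts.values())
    return {word: c / total for word, c in counts.items()}


def sequence_prob(model, tokens):
    """Product of per-word probabilities; word order has no effect.
    Unseen words get probability 0 because no smoothing is applied."""
    p = 1.0
    for w in tokens:
        p *= model.get(w, 0.0)
    return p


def sample(model, k, rng):
    """Draw k words; each word owns a slice of [0, total) as wide as P(w)."""
    words = list(model)
    bounds = list(accumulate(model[w] for w in words))  # cumulative slice ends
    out = []
    for _ in range(k):
        r = rng.random() * bounds[-1]  # bounds[-1] is ~1.0, up to float rounding
        i = min(bisect_right(bounds, r), len(words) - 1)  # guard the top edge
        out.append(words[i])
    return out


corpus = "the cat sat on the mat the end".split()
model = train_unigram(corpus)
print(model["the"])  # 0.375 (3 of 8 tokens)
print(sequence_prob(model, ["the", "cat"]))  # 0.375 * 0.125 = 0.046875
print(sample(model, 5, random.Random(0)))
```

Because `sequence_prob` multiplies per-word probabilities, “the cat” and “cat the” receive the same score, which is the word-order blindness described above.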

Smoothing: Unigram probabilities are a building block of smoothing methods for higher-order n-gram models (such as bigram or trigram models), which must cope with sparse data, that is, n-grams never seen in the training data. Backoff models, for instance, “back off” to a lower-order estimate, such as the unigram probability, when a higher-order n-gram probability cannot be estimated reliably. Unigram probabilities can themselves be Laplace (add-one) smoothed: P(w) = (C(w) + 1) / (N + V), where C(w) is the word’s count, N the number of tokens, and V the vocabulary size. More sophisticated techniques such as Kneser-Ney smoothing use unigram information more subtly, weighting a word by its “versatility”, the number of distinct contexts it appears in, rather than by its raw frequency.
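A hedged sketch of three of these ideas on a toy corpus. `laplace_unigram` implements add-one smoothing over an assumed fixed vocabulary. For backoff, the sketch substitutes the short “stupid backoff” scheme (a fixed weight `alpha = 0.4` on the unigram frequency, producing scores rather than normalized probabilities); it is not a properly normalized backoff model such as Katz backoff. `continuation_prob` computes the Kneser-Ney continuation probability, the unigram-level quantity that captures versatility; the full Kneser-Ney interpolation with discounting is not shown. All function names are hypothetical.

```python
from collections import Counter


def laplace_unigram(tokens, vocab):
    """Add-one smoothing: P(w) = (C(w) + 1) / (N + V) for every w in the vocabulary."""
    counts = Counter(tokens)
    n, v = len(tokens), len(vocab)
    return {w: (counts[w] + 1) / (n + v) for w in vocab}


def stupid_backoff(prev, word, bigrams, unigrams, n_tokens, alpha=0.4):
    """Relative bigram frequency if the bigram was seen; otherwise back off to
    alpha times the unigram relative frequency. Returns a score, not a probability."""
    if bigrams[(prev, word)] > 0:
        return bigrams[(prev, word)] / unigrams[prev]
    return alpha * unigrams[word] / n_tokens


def continuation_prob(bigrams):
    """Kneser-Ney continuation probability: number of distinct words seen before w,
    divided by the number of distinct bigram types."""
    preceders = Counter(w for (_, w) in bigrams)  # iterates over distinct bigram types
    return {w: c / len(bigrams) for w, c in preceders.items()}


tokens = "the cat sat on the mat".split()
unigrams = Counter(tokens)
bigrams = Counter(zip(tokens, tokens[1:]))
vocab = set(tokens) | {"dog"}  # "dog" is in the vocabulary but never observed

print(laplace_unigram(tokens, vocab)["dog"])  # (0 + 1) / (6 + 6) = 1/12
print(stupid_backoff("the", "cat", bigrams, unigrams, len(tokens)))  # seen: 1/2
print(stupid_backoff("cat", "mat", bigrams, unigrams, len(tokens)))  # unseen: 0.4 * 1/6
print(continuation_prob(bigrams))
```

On a realistic corpus, a word like “Francisco” can have a high raw count yet a low continuation probability, because it follows almost nothing except “San”.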

Tagging: Unigram tagging is a simple statistical method for part-of-speech (POS) tagging. A unigram tagger assigns each token its most probable tag based solely on the token itself (its a priori most likely tag), without considering the surrounding words. In this respect it works much like a lookup tagger. Unigram probabilities can also be used to propose candidate tags for words that did not appear in the training data.
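A minimal unigram tagger, written from scratch so it runs without extra packages (NLTK ships a `UnigramTagger` class, but this sketch does not use it). The function names and the tiny training set are hypothetical. How unseen words are handled is an assumption here: the sketch falls back to the tag that is most frequent across the whole training set, which is one simple use of unigram tag probabilities.

```python
from collections import Counter, defaultdict


def train_unigram_tagger(tagged_sents):
    """Map each word to the tag it carried most often in training."""
    tag_counts = defaultdict(Counter)
    all_tags = Counter()
    for sent in tagged_sents:
        for word, tag in sent:
            tag_counts[word][tag] += 1
            all_tags[tag] += 1
    lookup = {w: c.most_common(1)[0][0] for w, c in tag_counts.items()}
    # Assumption: unseen words get the most frequent tag in the training data.
    default = all_tags.most_common(1)[0][0]
    return lookup, default


def tag(words, lookup, default):
    # Each word is tagged on its own; neighbouring words are never consulted.
    return [(w, lookup.get(w, default)) for w in words]


train = [
    [("the", "DET"), ("dog", "NOUN"), ("runs", "VERB")],
    [("the", "DET"), ("runs", "NOUN"), ("were", "VERB"), ("long", "ADJ")],
    [("she", "PRON"), ("runs", "VERB"), ("home", "NOUN")],
    [("big", "ADJ"), ("dogs", "NOUN")],
]
lookup, default = train_unigram_tagger(train)
print(tag(["the", "cat", "runs"], lookup, default))
# [('the', 'DET'), ('cat', 'NOUN'), ('runs', 'VERB')]
```

Because context is ignored, “runs” would be tagged VERB even in “the runs were long”, where it is a noun.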

Tokenization: Unigram tokenization is a technique for dividing text into subword units (tokens). Unlike merge-based approaches, which build a vocabulary by repeatedly merging units, unigram tokenization starts from a large initial vocabulary (individual characters plus frequent substrings) and iteratively removes the tokens whose removal least reduces the likelihood of the training corpus under a unigram language model, until the vocabulary shrinks to a target size. It has been claimed to produce more semantically meaningful tokens than earlier techniques such as Byte Pair Encoding (BPE). Systems such as T5 and ALBERT use it.
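Once a unigram vocabulary has been trained, tokenizing a string means finding the segmentation whose tokens have the highest product of unigram probabilities, which dynamic programming (the Viterbi algorithm) solves efficiently. The sketch below shows only that segmentation step, not the iterative vocabulary pruning used in training (libraries such as SentencePiece implement both). The vocabulary and its probabilities are made up for illustration, and `viterbi_segment` is a hypothetical name.

```python
import math


def viterbi_segment(text, logp):
    """Return the split of `text` into vocabulary tokens with the highest total
    log-probability under a unigram model (logp maps token -> log P(token))."""
    n = len(text)
    max_len = max(len(t) for t in logp)
    best = [-math.inf] * (n + 1)  # best[i]: best score for the prefix text[:i]
    back = [0] * (n + 1)          # back[i]: where the last token of that best split starts
    best[0] = 0.0
    for end in range(1, n + 1):
        for start in range(max(0, end - max_len), end):
            piece = text[start:end]
            if piece in logp and best[start] + logp[piece] > best[end]:
                best[end] = best[start] + logp[piece]
                back[end] = start
    if best[n] == -math.inf:
        raise ValueError("text cannot be segmented with this vocabulary")
    tokens, end = [], n
    while end > 0:  # follow back-pointers from the end of the string
        tokens.append(text[back[end]:end])
        end = back[end]
    return tokens[::-1]


# Hypothetical vocabulary with made-up probabilities, for illustration only.
probs = {"u": 0.05, "n": 0.05, "i": 0.05, "g": 0.05, "r": 0.05, "a": 0.05,
         "m": 0.05, "s": 0.05, "un": 0.1, "uni": 0.1, "gram": 0.15,
         "grams": 0.05, "ram": 0.05}
logp = {t: math.log(p) for t, p in probs.items()}
print(viterbi_segment("unigrams", logp))  # ['uni', 'grams']
```

Here “uni” + “grams” (0.1 × 0.05 = 0.005) beats “uni” + “gram” + “s” (0.1 × 0.15 × 0.05 = 0.00075): fewer tokens usually win because every extra token multiplies in another probability below 1.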

Evaluation: Unigram precision is a metric for evaluating tasks such as machine translation. It measures the proportion of a candidate translation’s unigrams (single words) that also appear in a reference translation. Metrics such as BLEU combine unigram precision with bigram, trigram, and 4-gram precision by taking their geometric mean; BLEU also clips each n-gram’s count to its count in the reference and multiplies the result by a brevity penalty.
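A sketch of clipped (modified) n-gram precision and a sentence-level BLEU score with a single reference. Assumptions: whitespace-tokenized input, uniform weights over 1- to 4-grams, and no smoothing, so any zero precision makes the score 0. Production implementations such as sacreBLEU handle multiple references, corpus-level aggregation, and smoothing. Function names are hypothetical.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, reference, n):
    """Clipped n-gram precision: each candidate n-gram counts at most as many
    times as it appears in the reference."""
    cand, ref = ngram_counts(candidate, n), ngram_counts(reference, n)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    clipped = sum(min(c, ref[g]) for g, c in cand.items())
    return clipped / total


def bleu(candidate, reference, max_n=4):
    """Sentence-level BLEU: brevity penalty times the geometric mean of p_1..p_max_n."""
    precisions = [modified_precision(candidate, reference, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0
    geo_mean = math.exp(sum(math.log(p) for p in precisions) / max_n)
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * geo_mean


cand = "the the the the the the the".split()
ref = "the cat is on the mat".split()
print(modified_precision(cand, ref, 1))  # 2/7: "the" is clipped to its 2 reference occurrences
print(bleu(ref, ref))  # 1.0 for an exact match
```

Without clipping, the degenerate candidate above would score a perfect unigram precision of 7/7.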

Feature Representation: NLP models can use unigrams, that is, single words, as features. The “bag-of-words” representation is built on counting word occurrences, which amounts to counting unigrams. In skip-gram word embeddings, negative examples for training are produced by sampling from a unigram distribution estimated from empirical word frequencies (word2vec raises these frequencies to the 3/4 power before normalizing).
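Both uses in Python, as a hedged sketch with hypothetical function names: a bag-of-words vector built from unigram counts over a fixed (sorted) vocabulary, and the distribution used to draw negative samples. The 0.75 exponent follows word2vec; it flattens the distribution so that frequent words are drawn somewhat less often, and rare words somewhat more often, than their raw frequency would suggest.

```python
import random
from collections import Counter


def bag_of_words(tokens, vocab):
    """Unigram count vector over a fixed vocabulary order; word order is discarded."""
    counts = Counter(tokens)
    return [counts[w] for w in vocab]


def negative_sampling_dist(tokens, power=0.75):
    """Unigram counts raised to `power` and renormalized to sum to 1."""
    counts = Counter(tokens)
    weights = {w: c ** power for w, c in counts.items()}
    z = sum(weights.values())
    return {w: x / z for w, x in weights.items()}


def draw_negatives(dist, k, rng):
    words = list(dist)
    return rng.choices(words, weights=[dist[w] for w in words], k=k)


corpus = "the cat sat on the mat and the dog sat too".split()
vocab = sorted(set(corpus))  # ['and', 'cat', 'dog', 'mat', 'on', 'sat', 'the', 'too']
print(bag_of_words("the cat sat on the mat".split(), vocab))  # [0, 1, 0, 1, 1, 1, 2, 0]
dist = negative_sampling_dist(corpus)
print(draw_negatives(dist, 5, random.Random(1)))
```

With raw frequencies “the” would be drawn 3 times as often as “cat”; after the 0.75 power the ratio drops to about 2.28.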

In conclusion, a unigram (a single word) is a basic unit in natural language processing (NLP). It can serve as the basis of a model in its own right, as in the unigram language model, or as a component or feature of more sophisticated approaches to a variety of tasks, including language modelling, tagging, tokenization, and evaluation.