Extreme learning machine tutorial

Researchers in the fast-moving fields of machine learning (ML) and artificial intelligence (AI) are always looking for ways to make algorithms faster, simpler, and more effective. One such method is the Extreme Learning Machine (ELM), a way of training single-hidden-layer feedforward neural networks that avoids many of the complications of conventional deep learning models while offering exceptional training speed and competitive performance. Traditional neural networks need time-consuming backpropagation to tune every weight. An ELM instead initializes the hidden layer weights randomly, keeps them fixed, and computes the output weights analytically, which cuts training time dramatically, often with little or no loss of accuracy.
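
The core training procedure can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the tanh activation, the standard-normal random weights, the toy sine-fitting task, and the function names `elm_fit` and `elm_predict` are all assumptions made for the example.

```python
import numpy as np

def elm_fit(X, T, n_hidden, rng):
    """Train a basic ELM: random fixed hidden layer, least-squares output weights."""
    # Input weights and biases are drawn once at random and never updated.
    W = rng.standard_normal((X.shape[1], n_hidden))
    b = rng.standard_normal(n_hidden)
    H = np.tanh(X @ W + b)          # hidden layer output matrix, shape (n_samples, n_hidden)
    beta = np.linalg.pinv(H) @ T    # Moore-Penrose solution of H @ beta = T
    return W, b, beta

def elm_predict(X, W, b, beta):
    return np.tanh(X @ W + b) @ beta

# Toy regression problem: learn y = sin(x) on [-3, 3].
rng = np.random.default_rng(0)
X = np.linspace(-3, 3, 200).reshape(-1, 1)
T = np.sin(X)
W, b, beta = elm_fit(X, T, n_hidden=50, rng=rng)
mse = np.mean((elm_predict(X, W, b, beta) - T) ** 2)
print(f"training MSE: {mse:.2e}")
```

The only "training" is one pseudoinverse; there is no loop over epochs and no gradient.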

This article examines the foundations of Extreme Learning Machines, their main types, their advantages over conventional neural networks, their disadvantages, and their future prospects. By the end, you'll see why ELMs are becoming increasingly popular in both academia and industry as a fast alternative to conventionally trained networks for tasks such as regression, classification, and real-time learning.

Types of ELM

ELM has been extended in numerous ways to improve stability, accuracy, efficiency, and generalization for particular applications. These variants expand ELM's capabilities across multiple learning paradigms and address a variety of problems:

Robustness Improvement

Because randomly generated hidden nodes can make performance fluctuate from run to run, researchers have concentrated on improving ELM's stability and robustness.

Incremental ELM (I-ELM)

This method gradually adds hidden nodes to the network and uses convex optimisation to speed up convergence. Variants such as Incremental Regularized ELM (IR-ELM) update the output weights efficiently and recursively.
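
A minimal sketch of the basic incremental idea, assuming a single output, tanh hidden nodes, and hypothetical helpers `i_elm_fit` and `i_elm_predict`: each new random node receives the least-squares output weight for the current residual, and earlier nodes keep their weights. The convex and regularized variants additionally readjust existing output weights, which this sketch does not do.

```python
import numpy as np

def i_elm_fit(X, t, max_nodes, rng, tol=1e-3):
    """Basic incremental ELM for one output: add random tanh nodes one at a time."""
    nodes = []                       # (w, b, beta) for every hidden node added so far
    e = np.array(t, dtype=float)     # current residual error (starts as the target)
    history = [np.linalg.norm(e)]
    for _ in range(max_nodes):
        w = rng.standard_normal(X.shape[1])
        b = rng.standard_normal()
        h = np.tanh(X @ w + b)       # output of the new node on every sample
        beta = (e @ h) / (h @ h)     # least-squares weight for the new node only
        e -= beta * h                # earlier nodes keep their output weights
        nodes.append((w, b, beta))
        history.append(np.linalg.norm(e))
        if history[-1] < tol:
            break
    return nodes, history

def i_elm_predict(X, nodes):
    return sum(beta * np.tanh(X @ w + b) for w, b, beta in nodes)

rng = np.random.default_rng(1)
X = np.linspace(-3, 3, 200).reshape(-1, 1)
t = np.sin(X[:, 0])
nodes, history = i_elm_fit(X, t, max_nodes=100, rng=rng)
print(len(nodes), "nodes, residual norm", history[-1])
```

Because each new weight is a least-squares fit to the current residual, the residual norm can never increase as nodes are added.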

Ensemble ELM (EN-ELM)

Reduces overfitting and enhances generalisation by utilising cross-validation and ensemble learning.

Bayesian ELM

This method combines Bayesian inference with ELM, placing a prior on the output weights so that prior knowledge can be incorporated and confidence intervals can be computed for its predictions.
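
One way to realize this idea, shown here only as a sketch, is Bayesian linear regression on top of the random hidden layer: a Gaussian prior on the output weights gives a Gaussian posterior, and the posterior covariance yields a predictive variance and an approximate 95% interval. The prior precision `alpha`, the noise precision `noise_prec`, the tanh activation, and the helper names are assumptions; a full Bayesian ELM would typically estimate the precisions from the data instead of fixing them.

```python
import numpy as np

def bayesian_elm_fit(X, t, n_hidden, rng, alpha=1e-2, noise_prec=100.0):
    W = rng.standard_normal((X.shape[1], n_hidden))
    b = rng.standard_normal(n_hidden)
    H = np.tanh(X @ W + b)
    # Prior N(0, alpha^-1 I) on the output weights, Gaussian noise with precision noise_prec.
    S = np.linalg.inv(alpha * np.eye(n_hidden) + noise_prec * H.T @ H)  # posterior covariance
    m = noise_prec * S @ H.T @ t                                          # posterior mean
    return W, b, m, S, noise_prec

def bayesian_elm_predict(X, W, b, m, S, noise_prec):
    H = np.tanh(X @ W + b)
    mean = H @ m
    # Predictive variance = noise variance + h^T S h for every row h of H.
    var = 1.0 / noise_prec + np.einsum("ij,jk,ik->i", H, S, H)
    half = 1.96 * np.sqrt(var)
    return mean, mean - half, mean + half

rng = np.random.default_rng(7)
X = np.linspace(-3, 3, 100).reshape(-1, 1)
t = np.sin(X[:, 0])
params = bayesian_elm_fit(X, t, n_hidden=30, rng=rng)
mean, lo, hi = bayesian_elm_predict(X, *params)
```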

Effective ELM (EELM)

Prevents singularity and speeds up computation by choosing the input weights and biases so that the hidden layer output matrix has full column rank.
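
The condition EELM targets can be checked directly. The sketch below does not implement EELM's weight-selection procedure; it only computes the numerical rank of the hidden layer output matrix H with NumPy's `matrix_rank` and shows how a degenerate choice (two hidden nodes with identical weights and bias) makes H rank-deficient. The sigmoid activation and the helper names are assumptions.

```python
import numpy as np

def hidden_matrix(X, W, b):
    # Sigmoid hidden layer output matrix H, shape (n_samples, n_hidden).
    return 1.0 / (1.0 + np.exp(-(X @ W + b)))

def has_full_column_rank(H):
    return np.linalg.matrix_rank(H) == H.shape[1]

rng = np.random.default_rng(2)
X = rng.standard_normal((100, 5))

# Typical case: 100 samples and 20 independently drawn hidden nodes.
W = rng.standard_normal((5, 20))
b = rng.standard_normal(20)
ok = has_full_column_rank(hidden_matrix(X, W, b))

# Degenerate case: hidden node 1 copies node 0, so H has two identical
# columns and H^T H is singular.
W_bad, b_bad = W.copy(), b.copy()
W_bad[:, 1] = W_bad[:, 0]
b_bad[1] = b_bad[0]
degenerate = has_full_column_rank(hidden_matrix(X, W_bad, b_bad))
print(ok, degenerate)
```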

Voting Mechanism

Several ELMs with the same structure are trained independently, and a majority vote over their predictions determines the final classification outcome.
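
A minimal sketch of the voting scheme, assuming tanh hidden nodes, one-hot targets, a toy three-blob dataset, and hypothetical helpers `train_elm_classifier` and `voting_elm`: every member has the same number of hidden nodes but its own random hidden parameters, and each sample's final label is the class that receives the most votes.

```python
import numpy as np

def train_elm_classifier(X, y, n_classes, n_hidden, rng):
    W = rng.standard_normal((X.shape[1], n_hidden))
    b = rng.standard_normal(n_hidden)
    T = np.eye(n_classes)[y]                         # one-hot targets
    beta = np.linalg.pinv(np.tanh(X @ W + b)) @ T
    return lambda Z: np.argmax(np.tanh(Z @ W + b) @ beta, axis=1)

def voting_elm(X, y, n_classes, n_models=7, n_hidden=30, seed=0):
    rng = np.random.default_rng(seed)
    # Same structure for every member, independently drawn random hidden parameters.
    models = [train_elm_classifier(X, y, n_classes, n_hidden, rng) for _ in range(n_models)]

    def predict(Z):
        votes = np.stack([m(Z) for m in models])     # shape (n_models, n_samples)
        counts = np.apply_along_axis(np.bincount, 0, votes, minlength=n_classes)
        return np.argmax(counts, axis=0)              # ties go to the lowest class index
    return predict

rng = np.random.default_rng(3)
centers = np.array([[0.0, 0.0], [4.0, 0.0], [0.0, 4.0]])
y = np.repeat(np.arange(3), 50)
X = centers[y] + rng.standard_normal((150, 2))
predict = voting_elm(X, y, n_classes=3)
accuracy = np.mean(predict(X) == y)
print(f"training accuracy: {accuracy:.3f}")
```

An odd number of members reduces the chance of ties in two-class problems.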

Multiple Kernel ELM (MK-ELM)

Instead of selecting a kernel function empirically, this method optimizes both the kernel combination weights and the network weights.
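
The kernel form of ELM replaces the random hidden layer with a kernel matrix Omega and predicts with f(x) = k(x)^T (I/C + Omega)^-1 T. The sketch below builds Omega as a weighted sum of an RBF kernel and a polynomial kernel. It is only a starting point for MK-ELM: the combination weights `mu` are fixed by hand here, whereas MK-ELM optimizes them together with the network weights. The kernel parameters, `C`, and the helper names are assumptions.

```python
import numpy as np

def rbf_kernel(A, B, gamma=1.0):
    sq = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))   # clip tiny negative distances from rounding

def poly_kernel(A, B, degree=2):
    return (A @ B.T + 1.0) ** degree

def combined_kernel(A, B, mu):
    # Fixed weighted sum of two kernels; MK-ELM would learn mu instead of fixing it.
    return mu[0] * rbf_kernel(A, B) + mu[1] * poly_kernel(A, B)

def kernel_elm_fit(X, T, mu, C=100.0):
    omega = combined_kernel(X, X, mu)
    alpha = np.linalg.solve(np.eye(len(X)) / C + omega, T)   # (I/C + Omega)^-1 T
    return lambda Z: combined_kernel(Z, X, mu) @ alpha

X = np.linspace(-3, 3, 100).reshape(-1, 1)
T = np.sin(X)
predict = kernel_elm_fit(X, T, mu=(0.7, 0.3))
mse = np.mean((predict(X) - T) ** 2)
print(f"training MSE: {mse:.2e}")
```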

Reduced Kernel ELM

This method reduces training time by replacing the full kernel matrix with a smaller one computed against a randomly selected subset of the training samples.
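
A sketch of the reduced-kernel idea, assuming an RBF kernel and hypothetical helper names: only m randomly chosen training samples act as kernel centers, so the solver works with an n x m kernel matrix and an m x m linear system instead of an n x n one. The regularized least-squares solve shown is one reasonable choice, not necessarily the exact formulation of the published method.

```python
import numpy as np

def rbf_kernel(A, B, gamma=1.0):
    sq = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def reduced_kernel_elm_fit(X, T, n_centers, rng, C=1e3, gamma=1.0):
    idx = rng.choice(len(X), size=n_centers, replace=False)  # random subset of samples
    centers = X[idx]
    K = rbf_kernel(X, centers, gamma)                          # n x m instead of n x n
    beta = np.linalg.solve(np.eye(n_centers) / C + K.T @ K, K.T @ T)
    return lambda Z: rbf_kernel(Z, centers, gamma) @ beta

rng = np.random.default_rng(8)
X = np.linspace(-3, 3, 500).reshape(-1, 1)
T = np.sin(X)
predict = reduced_kernel_elm_fit(X, T, n_centers=30, rng=rng)
mse = np.mean((predict(X) - T) ** 2)
print(f"training MSE: {mse:.2e}")
```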

Sample-based ELM (S-ELM)

This method computes distances in a sample-relevant feature space to handle missing data.


Parameter Optimization

These methods optimize the initially random weights and biases, or other parts of the training process, to improve efficiency and robustness.

- Robust Regularised ELM (RELM-IRLS): Reduces the negative effect of outliers in regression problems by fitting a regularised ELM with iteratively reweighted least squares (IRLS).

- Robust AdaBoost.RT based Ensemble ELM (RAE-ELM): Assigns thresholds to the weak learners and determines the final output as a weighted ensemble.

- Swarm Intelligence Algorithms: Techniques such as the Firefly Algorithm, Memetic Algorithm (M-ELM), Improved Whale Optimization Algorithm (IWOA), Dolphin Swarm Optimization, and Grey Wolf Optimization (GWO) are used to optimize hidden layer parameters.

- Instance Clone ELM (IC-ELM): Avoids overfitting on small datasets by cloning the weighted training samples closest to the test samples.

- Novel Ant Lion Algorithm (NALO): An enhanced ant lion optimizer (a nature-inspired metaheuristic) that optimizes the input weights and biases of multi-layer ELM for applications such as bearing defect detection.
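
To illustrate how iteratively reweighted least squares limits the influence of outliers, here is a sketch of a regularized ELM refit with Huber weights, assuming tanh hidden nodes and a hypothetical `robust_elm_fit` helper. The Huber weighting function and the values of `delta` and `C` are assumptions; RELM-IRLS as published may use a different loss. With `n_iter=1` the function reduces to an ordinary regularized ELM, which makes the comparison easy.

```python
import numpy as np

def robust_elm_fit(X, t, n_hidden, rng, C=1e3, delta=0.1, n_iter=20):
    W = rng.standard_normal((X.shape[1], n_hidden))
    b = rng.standard_normal(n_hidden)
    H = np.tanh(X @ W + b)
    w = np.ones(len(X))                          # per-sample weights, start uniform
    for _ in range(n_iter):
        Hw = H * w[:, None]                      # D @ H without forming the diagonal D
        # Weighted, regularised least squares: (H^T D H + I/C) beta = H^T D t
        beta = np.linalg.solve(H.T @ Hw + np.eye(n_hidden) / C, Hw.T @ t)
        r = np.abs(t - H @ beta)
        # Huber weights: 1 for small residuals, delta/|r| for large ones.
        w = np.where(r <= delta, 1.0, delta / np.maximum(r, 1e-12))
    return lambda Z: np.tanh(Z @ W + b) @ beta

X = np.linspace(-3, 3, 200).reshape(-1, 1)
t_clean = np.sin(X[:, 0])
t = t_clean.copy()
t[::10] += 5.0                                   # every 10th target is a gross outlier
# Same seed, so both models share identical random hidden layers.
plain = robust_elm_fit(X, t, 30, np.random.default_rng(9), n_iter=1)
robust = robust_elm_fit(X, t, 30, np.random.default_rng(9), n_iter=20)
for name, f in (("plain", plain), ("robust", robust)):
    print(name, np.mean((f(X) - t_clean) ** 2))
```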

Structure Modification

These variants modify ELM's internal structure to improve performance or to achieve particular goals.

- ELM-LRF (Local Receptive Fields based ELM): Adds local receptive fields to ELM and uses convolution and pooling to extract features for object recognition.

- SVD-Hidden-nodes based ELM: This method reduces the dimension of data and increases efficiency by combining ELM with Singular Value Decomposition (SVD) for large-scale data analysis.

- Hybrid Systems with SVD and Fuzzy Theory: This approach combines recursive SVD and fuzzy theory for online education.

- Self-organising Extreme Learning Machine (SOELM): This machine uses mutual information to alter structure, tunes input weights using Hebbian learning, and substitutes a Central Neural System (CNS) for the hidden layer.

- Multi-layer ELM: Frameworks that build multi-layer ELMs using multiple hidden layer output matrices and hybrid hidden node optimization.

ELM for Online Learning

These methods learn from datasets that grow as new samples arrive, updating the model without retraining on old data.
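
The recursive update used by OS-ELM can be sketched as follows. An initial block is solved in batch, and each later chunk updates the output weights with a recursive least-squares (Woodbury) formula that never revisits old samples. The small ridge term I/C in the initial inverse is an assumption added for numerical stability (the basic formulation inverts H^T H directly), and the `OSELM` class and its method names are hypothetical.

```python
import numpy as np

class OSELM:
    """Online sequential ELM sketch: single tanh hidden layer, recursive updates."""

    def __init__(self, n_features, n_hidden, rng, C=100.0):
        self.W = rng.standard_normal((n_features, n_hidden))
        self.b = rng.standard_normal(n_hidden)
        self.C = C
        self.P = None      # inverse of (H^T H + I/C) over all data seen so far
        self.beta = None

    def _hidden(self, X):
        return np.tanh(X @ self.W + self.b)

    def init_fit(self, X0, T0):
        # Initialisation phase: ordinary (regularised) batch ELM on the first block.
        H0 = self._hidden(X0)
        self.P = np.linalg.inv(H0.T @ H0 + np.eye(H0.shape[1]) / self.C)
        self.beta = self.P @ H0.T @ T0

    def partial_fit(self, X, T):
        # Sequential phase: recursive least-squares update using only the new chunk.
        H = self._hidden(X)
        PHt = self.P @ H.T
        K = np.linalg.inv(np.eye(len(X)) + H @ PHt)
        self.P = self.P - PHt @ K @ PHt.T
        self.beta = self.beta + self.P @ H.T @ (T - H @ self.beta)

    def predict(self, X):
        return self._hidden(X) @ self.beta

rng = np.random.default_rng(4)
X = rng.uniform(-3, 3, size=(300, 1))
T = np.sin(X)
model = OSELM(n_features=1, n_hidden=20, rng=rng)
model.init_fit(X[:50], T[:50])
for start in range(50, 300, 25):                 # the rest arrives in chunks of 25
    model.partial_fit(X[start:start + 25], T[start:start + 25])
```

Because the updates are algebraically exact, the final model matches a single regularized batch solve on all 300 samples, up to floating-point error.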

- Online Sequential ELM (OS-ELM): Learns from data that arrive one sample at a time or in blocks, updating the output weights recursively with each new chunk.

- OS-ELM with Forgetting Mechanism: Down-weights out-of-date samples, improving performance on sequential data whose validity expires over time.

- Online Sequential Extreme Learning Machine with Weights: Reduces issues with class imbalance in online sequential learning.

ELM for Imbalanced Data

These methods address training sets in which the classes are unequally distributed.

- Weighted ELM (W-ELM): Assigns weights to training samples according to the class distribution, giving larger weights to minority classes and smaller weights to majority classes.

- ELM Autoencoder (ELM-AE) for Imbalance: This method balances the dataset by training an ELM-AE to produce more minority class examples using minority samples as seeds.
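
A sketch of the W-ELM solution, assuming tanh hidden nodes, targets coded as -1/+1, and a weighting in which each sample's weight is the reciprocal of its class size, so every class carries the same total weight. The output weights solve the weighted, regularized system (I/C + H^T D H) beta = H^T D T, where D is the diagonal matrix of sample weights. Helper names, `C`, and the toy data are assumptions.

```python
import numpy as np

def class_balance_weights(y):
    # Each sample gets 1 / (size of its class), so every class has total weight 1.
    counts = np.bincount(y)
    return 1.0 / counts[y]

def weighted_elm_fit(X, y, n_classes, n_hidden, rng, C=1e3):
    W = rng.standard_normal((X.shape[1], n_hidden))
    b = rng.standard_normal(n_hidden)
    H = np.tanh(X @ W + b)
    T = 2.0 * np.eye(n_classes)[y] - 1.0           # targets in {-1, +1}
    d = class_balance_weights(y)
    HtD = H.T * d                                   # H^T D without forming diagonal D
    beta = np.linalg.solve(np.eye(n_hidden) / C + HtD @ H, HtD @ T)
    return lambda Z: np.argmax(np.tanh(Z @ W + b) @ beta, axis=1)

# 200 majority samples around the origin, 20 minority samples around (4, 0).
rng = np.random.default_rng(5)
X = np.vstack([rng.standard_normal((200, 2)), rng.standard_normal((20, 2)) + [4.0, 0.0]])
y = np.array([0] * 200 + [1] * 20)
predict = weighted_elm_fit(X, y, n_classes=2, n_hidden=30, rng=rng)
minority_recall = np.mean(predict(X[y == 1]) == 1)
print(f"minority recall: {minority_recall:.2f}")
```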

ELM for Big Data

These variants adapt ELM to massive datasets.

- Two-stage ELM: For quicker convergence, high-dimensional data is reduced in dimension via spectral regression.

- Elastic ELM: Computes the required matrix products incrementally, so the model can be updated efficiently when a huge dataset changes.

- Ensemble ELM for Stream Data: Integrates a concept drift detection module to identify both sudden and gradual concept drift.

- Domain Adaptation Algorithm: Maps training and testing data into the same feature space by minimising the distance between domains, which helps when their distributions differ.
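
A single-machine sketch in the spirit of Elastic ELM, assuming the regularized solution beta = (H^T H + I/C)^-1 H^T T: the products H^T H and H^T T are sums over samples, so they can be accumulated chunk by chunk, and a chunk's contribution can later be subtracted when its data are removed. This only illustrates the incremental and decremental bookkeeping; the `ChunkedELM` class and its method names are hypothetical.

```python
import numpy as np

class ChunkedELM:
    """Accumulates H^T H and H^T T per chunk so the model can grow or shrink cheaply."""

    def __init__(self, n_features, n_hidden, n_outputs, rng, C=100.0):
        self.W = rng.standard_normal((n_features, n_hidden))
        self.b = rng.standard_normal(n_hidden)
        self.C = C
        self.U = np.zeros((n_hidden, n_hidden))    # running H^T H
        self.V = np.zeros((n_hidden, n_outputs))   # running H^T T

    def _hidden(self, X):
        return np.tanh(X @ self.W + self.b)

    def add_chunk(self, X, T):
        H = self._hidden(X)
        self.U += H.T @ H
        self.V += H.T @ T

    def remove_chunk(self, X, T):
        # Decremental update: subtract one chunk's contribution, keep the rest.
        H = self._hidden(X)
        self.U -= H.T @ H
        self.V -= H.T @ T

    def solve(self):
        return np.linalg.solve(self.U + np.eye(len(self.U)) / self.C, self.V)

rng = np.random.default_rng(10)
X = rng.uniform(-3, 3, size=(400, 1))
T = np.sin(X)
model = ChunkedELM(n_features=1, n_hidden=25, n_outputs=1, rng=rng)
chunks = [(X[i:i + 100], T[i:i + 100]) for i in range(0, 400, 100)]
for Xc, Tc in chunks:
    model.add_chunk(Xc, Tc)
beta_all = model.solve()
model.remove_chunk(*chunks[0])                   # forget the first chunk
beta_rest = model.solve()
```

Only the small n_hidden x n_hidden matrix is kept in memory, never the full hidden layer output matrix.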


ELM for Transfer Learning

Useful when labelled data is limited, transfer learning moves knowledge from a source domain to a target domain.

- One approach trains an ELM on labelled source data, selects reliable samples from the target domain, and then retrains the model with online sequential ELM.

- Domain Adaptation Frameworks: ELM-based frameworks improve cross-domain performance for tasks such as visual knowledge adaptation and sensor drift compensation.

- Other techniques optimise ELM classifiers by using sparse representations learned from unlabelled data and by constructing penalty parameters.

ELM for Semi-supervised and Unsupervised Learning

These methods use unlabelled data to extend ELM beyond supervised learning.

- Manifold Regularisation ELM: Combines manifold regularisation with ELM for large-scale semi-supervised learning.

- Hessian Semi-supervised ELM (HSS-ELM): Enhances extrapolation and maintains manifold structure by utilising Hessian regularisation.

- Non-parametric Kernel Learning (NPKL) with ELM: Obtains low-rank kernel matrices from samples that lack a distinct manifold structure.

- Growing Self-organising Map (GSOM) with ELM: Improves classification and speed by resolving GSOM parameter calibration.

- USL/SSL ELM: Uses wavelet analysis for both unsupervised and semi-supervised learning.

- ELM Autoencoder (ELM-AE): Learns features, extracts hierarchical representations from unlabelled images, and performs sparse representation. In the denoising deep ELM-AE (DDELM-AE), orthonormal initialisation improves performance.

- Generalized ELM Autoencoder (GELM-AE): Supplements ELM-AE with manifold regularisation to accelerate deep networks.

- Multilayer ELM with Leaky Rectified Linear Unit (LReLU): This method uses LReLU as an activation function to create a multilayer ELM.

- Hybrid methods for semi-supervised learning: Use ELM as a classifier in conjunction with convolution and pooling for feature extraction.

- Hierarchical ELM for EEG: Learns features from EEG signals and then classifies them with a semi-supervised ELM.

- Density-based Semi-supervised OS-ELM: Learns patterns from unlabelled samples in online sequential settings.
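
A minimal sketch of an ELM autoencoder, assuming tanh activation, a compressed hidden layer (fewer hidden nodes than input features), random input weights with orthonormal columns, a unit-norm random bias, and hypothetical helper names. The output weights beta are fitted so the hidden layer reconstructs the input; as in multilayer ELMs built from stacked ELM-AEs, beta^T is then reused as the weights that map data to the learned representation.

```python
import numpy as np

def elm_autoencoder(X, n_hidden, rng, C=1e3):
    d = X.shape[1]
    # Random input weights with orthonormal columns (requires n_hidden <= d).
    Q, _ = np.linalg.qr(rng.standard_normal((d, n_hidden)))
    b = rng.standard_normal(n_hidden)
    b /= np.linalg.norm(b)                         # unit-norm random bias
    H = np.tanh(X @ Q + b)
    # Output weights reconstruct the input from the hidden layer: H @ beta ~ X.
    beta = np.linalg.solve(H.T @ H + np.eye(n_hidden) / C, H.T @ X)
    return beta, H                                 # H is returned only to inspect reconstruction

def encode(X, beta):
    # Reuse beta^T as the input weights of the next layer.
    return np.tanh(X @ beta.T)

rng = np.random.default_rng(11)
Z = rng.standard_normal((200, 3))
X = np.tanh(Z @ rng.standard_normal((3, 10)))     # 10-D data with 3-D latent structure
beta, H = elm_autoencoder(X, n_hidden=5, rng=rng)
codes = encode(X, beta)
recon_mse = np.mean((H @ beta - X) ** 2)
print(codes.shape, f"reconstruction MSE: {recon_mse:.3f}")
```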

Advantages of ELM

Compared to other machine learning methods, ELM has the following advantages:

High Training Speed: Because ELM computes its output weights in a single analytical step instead of iteratively adjusting hidden layer parameters, it trains far faster than gradient-based methods such as backpropagation.

Strong Generalization Performance: ELM is reported to generalise well and to be less prone to overfitting, especially when training data is sparse or noisy.

Ease of Implementation: ELM is conceptually simple (a random hidden layer followed by a linear least-squares solve), and many open-source implementations exist, so it is comparatively easy to understand and put into practice.

Global Optimal Solution: Once the random hidden parameters are fixed, finding the output weights is a linear least-squares problem, so ELM obtains the globally optimal output weights for that hidden layer instead of risking the local minima that gradient-based training can get stuck in.

Fewer Optimization Constraints: Compared with Support Vector Machines (SVM), ELM has milder optimization constraints and often achieves similar or better classification performance.

Less Manual Intervention: Because the hidden layer parameters do not need to be tuned, ELM aims to require little manual intervention beyond choosing the number of hidden nodes and the activation function.

Robustness to Noisy/Incomplete Data: Computing the output weights with the Moore-Penrose generalised inverse gives the minimum-norm least-squares solution, which helps ELM cope with noisy and incomplete training data.
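
For reference, the least-squares problem behind these claims can be written as follows, with N training samples, L hidden nodes, activation function g, and the Moore-Penrose generalised inverse written as H†. The last line is the regularised variant with a constant C, a common extension that is not part of the basic formulation described above.

```latex
\begin{aligned}
&\mathbf{H}\boldsymbol{\beta} = \mathbf{T}, \qquad
  H_{ij} = g\big(\mathbf{w}_j^{\top}\mathbf{x}_i + b_j\big), \quad i = 1,\dots,N,\; j = 1,\dots,L \\
&\hat{\boldsymbol{\beta}} = \mathbf{H}^{\dagger}\mathbf{T}
  \quad \text{(the minimum-norm minimizer of } \lVert \mathbf{H}\boldsymbol{\beta} - \mathbf{T} \rVert_F \text{)} \\
&\mathbf{H}^{\dagger} = \big(\mathbf{H}^{\top}\mathbf{H}\big)^{-1}\mathbf{H}^{\top}
  \quad \text{if } \mathbf{H} \text{ has full column rank} \\
&\hat{\boldsymbol{\beta}}_C = \big(\mathbf{H}^{\top}\mathbf{H} + \tfrac{1}{C}\mathbf{I}\big)^{-1}\mathbf{H}^{\top}\mathbf{T}
\end{aligned}
```

The full-column-rank condition in the third line is exactly what fails in the rank problem discussed under the disadvantages.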

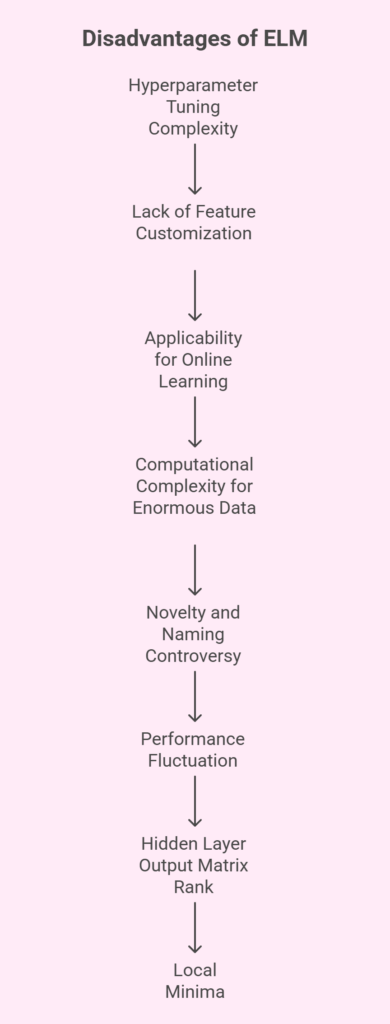

Disadvantages/Challenges of ELM

Despite its benefits, ELM has several disadvantages and has generated debate.

Hyperparameter Tuning Complexity: Because the hidden layer weights are initialized randomly, hyperparameter tuning can be difficult. Experimentation is needed to find a good combination of the number of hidden neurons and the activation function.
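
This experimentation is often a simple grid search on held-out data. The sketch below assumes a noisy sine regression task, a 300/100 train/validation split, and hypothetical helper names; it tries two activation functions and several hidden-layer sizes and keeps the pair with the lowest validation error.

```python
import numpy as np

def fit_predict(X_tr, T_tr, X_va, n_hidden, act, rng):
    # Basic ELM: random hidden layer, pseudoinverse output weights.
    W = rng.standard_normal((X_tr.shape[1], n_hidden))
    b = rng.standard_normal(n_hidden)
    beta = np.linalg.pinv(act(X_tr @ W + b)) @ T_tr
    return act(X_va @ W + b) @ beta

activations = {
    "tanh": np.tanh,
    "sigmoid": lambda z: 1.0 / (1.0 + np.exp(-z)),
}

rng = np.random.default_rng(6)
X = rng.uniform(-3, 3, size=(400, 1))
T = np.sin(X) + 0.1 * rng.standard_normal(X.shape)   # noisy targets
X_tr, T_tr, X_va, T_va = X[:300], T[:300], X[300:], T[300:]

results = {}
for name, act in activations.items():
    for n_hidden in (5, 10, 20, 50, 100):
        pred = fit_predict(X_tr, T_tr, X_va, n_hidden, act, rng)
        results[(name, n_hidden)] = np.mean((pred - T_va) ** 2)

best = min(results, key=results.get)
print("best (activation, hidden nodes):", best, "validation MSE:", results[best])
```

Because the hidden weights are random, the ranking can change with the seed, so averaging several seeds per setting is a sensible refinement.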

Lack of Feature Customisation: Because ELM transforms its input data mainly through a random mapping, it cannot precisely tailor features to a particular problem.

Applicability for Online Learning: Pure ELM is designed mainly for batch learning. Without specific adjustments such as the OS-ELM variants, it may not be the best option for applications that need online or sequential learning on data that changes over time.

Computational Complexity for Enormous Data: Standard ELM keeps its data in memory and has high space and time complexity, so it may not be able to train on enormous datasets quickly and effectively without optimization techniques.

Novelty and Naming Controversy: ELM has been criticised for “improper naming and popularising” as well as “reinventing and ignoring previous ideas.” The concept of random hidden weights, according to critics, was already included in RBF networks and other models like SNN and RVFL before ELM. Proponents counter that ELM offers distinct theoretical proofs and a unified learning platform.

Performance Fluctuation: Because the hidden nodes are random, classification performance can vary from run to run; researchers use robustness techniques to mitigate this effect.

Hidden Layer Output Matrix Rank: Because input weights and biases are selected at random, the hidden layer output matrix occasionally lacks full column rank, which reduces ELM's effectiveness. Improved algorithms such as EELM, however, deal with this issue.

Local Minima: Although ELM is said to solve the local minimum problem (by avoiding gradient-based techniques), neural networks in general may still have trouble locating global optima.

Additional Details and Prospects for the Future

ELM has great potential to become increasingly significant for big data. Deep learning performs remarkably well with large amounts of data, but it frequently requires lengthy training and heavy computation. Because ELM's random mapping can achieve comparable classification performance with less training time, combining deep learning and ELM may produce hybrid networks that deliver results comparable to deep learning more efficiently.

Theoretical support for ELM is essential, especially to justify the randomness of its hidden layer, and comparisons with other randomized methods, such as random forests, may be useful. Furthermore, since research suggests that neurons in the human brain may use random mechanisms for learning, ELM's relationship to biological learning is a promising avenue for its development. Given that artificial neural networks were originally inspired by the brain, examining this fundamental relationship may yield useful insights.