What is a Perceptron?

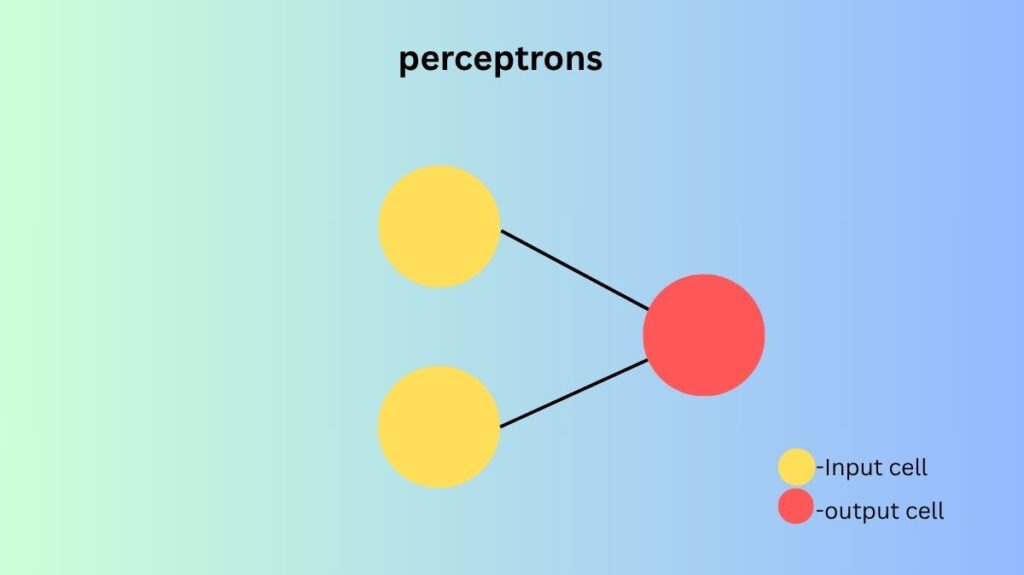

In artificial neural networks, the perceptron is a fundamental algorithm used mainly for binary classification. Frank Rosenblatt introduced it in 1957, building on McCulloch and Pitts’ 1943 mathematical model of the neuron. Perceptrons are loosely modelled on the basic function of biological neurons.

Definition and Biological Inspiration

A perceptron is an algorithm that classifies samples by learning the weights of a linear decision boundary from training data. It adjusts this boundary iteratively until every training sample is correctly classified, which is guaranteed to happen only if the data is linearly separable.

Perceptrons are artificial neurons that mimic biological neurons by processing incoming signals and converting them into an output signal. A biological neuron gathers input impulses through its dendrites, processes them in the cell body (soma), and sends an output signal along its axon to the synapses it forms with other neurons. A perceptron does the same thing in simplified form: it gathers input signals, computes a weighted sum, and produces an output signal through an activation function.


Key Components of a Perceptron

A perceptron is built from several components that together process data and generate predictions:

Input Features (x): Each feature is a numerical value that describes the input data. For instance, the weight and colour of a fruit could be the inputs when classifying it.

Weights (w): Each input feature has a weight that determines how strongly it influences the output. Training adjusts these weights toward values that classify the data correctly.

Bias (b): A constant term added to the weighted sum. It shifts the decision boundary independently of the inputs, which gives the perceptron more flexibility in learning. It is often treated as an extra weight attached to an input that is always 1.

Summation Function: Multiplies each input by its corresponding weight, adds the products together, and adds the bias to produce the weighted sum of the inputs. This is sometimes called the net input function.

It is commonly written as z = ∑(x_i * w_i) + b (some texts use a instead of z).

Activation Function: Takes the weighted sum and produces a binary output (0 or 1) by comparing it to a threshold. The Heaviside step function is typically used: it outputs 1 if the sum is greater than or equal to the threshold (usually 0, because the bias absorbs the threshold) and 0 otherwise.

Output: The binary value (1 or 0) produced by the activation function, which is interpreted as the predicted class in a binary classification problem.
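To make these components concrete, here is a minimal NumPy sketch of a single forward pass. The two fruit features (weight in grams and a numeric colour score), the weights, and the bias are made-up values chosen only for illustration. The sketch also checks that treating the bias as a weight on a constant input of 1 gives the same net input.

```python
import numpy as np

def heaviside(z):
    """Step activation: 1 if z >= 0, otherwise 0."""
    return int(z >= 0)

# Hypothetical fruit features: [weight in grams, colour score from 0 (green) to 1 (red)]
x = np.array([150.0, 0.8])
w = np.array([0.01, 2.0])   # illustrative weights, not learned
b = -3.0                    # illustrative bias

# Summation (net input) function: z = sum(x_i * w_i) + b
z = np.dot(x, w) + b        # 150*0.01 + 0.8*2.0 - 3.0 = 0.1 (up to rounding)

# The same net input, treating the bias as a weight on a constant input of 1
x_aug = np.append(x, 1.0)
w_aug = np.append(w, b)
z_aug = np.dot(x_aug, w_aug)

output = heaviside(z)       # z >= 0, so the predicted class is 1
print(z, z_aug, output)
```

Folding the bias into the weight vector this way is a common convenience: the learning rule can then update weights and bias with one formula.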

How the Perceptron Works

The perceptron, a building block of neural networks, performs binary classification in a way loosely modelled on a biological neuron. Its operation consists of the following steps:

Initialization: The first step sets an initial bias and assigns each input a starting weight, often a small random value. The weights express how much each input influences the final decision, while the bias shifts the threshold of the activation function.

Weighted Sum Calculation: For a given set of inputs, the perceptron computes a “net input” or “weighted sum” by multiplying each input value by its matching weight, adding up the products, and then adding the bias term. This can be expressed mathematically as:


Net Input = (Input_1 * Weight_1) + (Input_2 * Weight_2) + ... + (Input_n * Weight_n) + Bias

Activation Function Application: An activation function, usually a step function for a simple perceptron, is then applied to the net input. The output depends on whether the net input reaches a predetermined threshold: it is 1 if the net input is greater than or equal to the threshold and 0 otherwise. This binary output is the classification.

Weight Update (Learning): During training, the perceptron compares its predicted output for each input with the desired output. When they differ (an error), it adjusts its weights and bias to reduce the error in subsequent predictions; the Perceptron Learning Rule does this using the input values and the error. This cycle of computation, comparison, and adjustment repeats until either a set number of iterations is reached or the model’s predictions match all the training labels.
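The four steps above can be sketched as a small NumPy training loop. This is an illustrative sketch, not a reference implementation: the function name train_perceptron, the learning rate of 0.1, the 100-epoch cap, and the logical-OR training data are all assumptions chosen for the example. The loop stops as soon as a full pass over the data makes no errors, or when the epoch cap is reached.

```python
import numpy as np

def step(z):
    """Heaviside step: 1 where z >= 0, otherwise 0."""
    return np.where(z >= 0, 1, 0)

def train_perceptron(X, y, lr=0.1, max_epochs=100, seed=0):
    rng = np.random.default_rng(seed)
    # Initialization: small random weights and a zero bias
    w = rng.normal(scale=0.01, size=X.shape[1])
    b = 0.0
    for epoch in range(1, max_epochs + 1):
        errors = 0
        for x_i, target in zip(X, y):
            # Weighted sum (net input) followed by the step activation
            pred = step(np.dot(x_i, w) + b)
            error = target - pred
            if error != 0:
                # Perceptron learning rule: shift the boundary toward the target
                w += lr * error * x_i
                b += lr * error
                errors += 1
        # Stop early once every training sample is classified correctly
        if errors == 0:
            return w, b, epoch
    return w, b, max_epochs

# Logical OR is linearly separable, so training stops before the epoch cap
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 1])
w, b, epochs = train_perceptron(X, y)
print("weights:", w, "bias:", b, "epochs:", epochs)
print("predictions:", step(X @ w + b))
```

The exact weights and epoch count depend on the random initialization, but for separable data like OR the loop always ends with every sample classified correctly.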

Training and Learning Rule

The perceptron learning rule is a supervised procedure that trains the perceptron by modifying its weights and bias. It compares the true class (label) of each sample with the predicted class. If a sample is misclassified, the weights of the current decision boundary are adjusted; if it is classified correctly, they are left unchanged. One pass of this procedure over every sample in the training set is called an epoch, and several epochs are usually needed before every sample is classified correctly.

The weight update formula is: w_i,j = w_i,j + η (y_j – ŷ_j) x_i, where:

- w_i,j is the weight between the ith input and jth output neuron.

- x_i is the ith input value.

- y_j is the actual (target) value, and ŷ_j is the predicted value.

- η (eta) is the learning rate, controlling how much the weights are adjusted.

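Because the update is proportional to the error (y_j – ŷ_j), the weights change only when a sample is misclassified, and each change moves the output toward the target. The bias is updated the same way, treating it as a weight on a constant input of 1. If the data is linearly separable, the perceptron convergence theorem guarantees that this procedure finds a separating boundary after a finite number of updates.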
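Here is one application of the update formula, worked through with made-up numbers. The learning rate, weights, bias, and training sample are all illustrative assumptions; the sample starts out misclassified, so the rule changes the parameters.

```python
import numpy as np

eta = 0.5                       # learning rate (illustrative value)
w = np.array([0.2, -0.4])       # current weights
b = 0.1                         # current bias
x = np.array([1.0, 2.0])        # one training sample
y = 1                           # its target class

z = np.dot(w, x) + b            # 0.2*1 + (-0.4)*2 + 0.1 = -0.5
y_hat = 1 if z >= 0 else 0      # step activation gives 0, a misclassification

# Perceptron learning rule: w_i = w_i + eta * (y - y_hat) * x_i
w_new = w + eta * (y - y_hat) * x   # [0.2 + 0.5, -0.4 + 1.0] = [0.7, 0.6]
b_new = b + eta * (y - y_hat)       # bias uses a constant input of 1: 0.1 + 0.5 = 0.6

# With the updated parameters the same sample is now classified as 1
z_new = np.dot(w_new, x) + b_new    # 0.7 + 1.2 + 0.6 = 2.5
print(w_new, b_new, z_new)
```

Had the sample been classified correctly, (y - y_hat) would be 0 and neither the weights nor the bias would change.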

Types of Perceptrons

Single-Layer Perceptron: Consists of a layer of input nodes fully connected to an output layer. It can only learn linearly separable patterns, that is, data whose classes can be separated by a straight line (or a hyperplane in higher dimensions). It cannot solve non-linear problems such as the XOR function.
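One way to see this limitation is a brute-force check: try every combination of two weights and a bias on a coarse grid and count how many make a single step unit reproduce the AND truth table versus the XOR truth table. The grid range and resolution are arbitrary choices for this illustration. The XOR count is zero for any weights whatsoever, not just those on this grid, because no straight line separates XOR’s two classes.

```python
import numpy as np

# The four binary inputs, with a constant 1 appended so the bias acts as a weight
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
X_aug = np.hstack([X, np.ones((4, 1))])

targets = {"AND": np.array([0, 0, 0, 1]), "XOR": np.array([0, 1, 1, 0])}

# Every (w1, w2, b) combination on an illustrative grid from -2 to 2 in steps of 0.1
grid = np.linspace(-2.0, 2.0, 41)
w1, w2, b = np.meshgrid(grid, grid, grid, indexing="ij")
params = np.stack([w1.ravel(), w2.ravel(), b.ravel()], axis=1)  # shape (41**3, 3)

# Step-unit outputs for every parameter combination on every input: shape (41**3, 4)
outputs = (params @ X_aug.T >= 0).astype(int)

counts = {}
for name, y in targets.items():
    # Count combinations whose outputs match the target truth table exactly
    counts[name] = int(np.all(outputs == y, axis=1).sum())
print(counts)  # XOR always gets 0; AND gets a positive count
```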

Multi-Layer Perceptron (MLP): MLPs were developed to overcome the shortcomings of single-layer perceptrons. An MLP consists of an input layer, one or more hidden layers, and an output layer. Hidden layers with non-linear activations increase the network’s expressive capacity, allowing it to model intricate, non-linear patterns and relationships in data. In a fully connected MLP, every unit is connected to every unit in the next layer, and each connection has a weight that determines its influence. MLPs are typically trained with a technique called backpropagation.
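The following sketch shows why a hidden layer helps: a two-layer network of step units computes XOR. The weights are hand-picked for illustration rather than learned, so no backpropagation is involved. The two hidden units act as OR and NAND, and the output unit combines them with AND.

```python
import numpy as np

def step(z):
    """Heaviside step applied element-wise: 1 where z >= 0, else 0."""
    return (z >= 0).astype(int)

# Hand-picked weights (not learned):
# hidden unit 1 computes OR, hidden unit 2 computes NAND, the output computes AND
W_hidden = np.array([[1.0, -1.0],
                     [1.0, -1.0]])   # shape (2 inputs, 2 hidden units)
b_hidden = np.array([-0.5, 1.5])
w_out = np.array([1.0, 1.0])
b_out = -1.5

def mlp_forward(X):
    h = step(X @ W_hidden + b_hidden)   # hidden-layer activations
    return step(h @ w_out + b_out)      # output layer

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
print(mlp_forward(X))  # [0 1 1 0], the XOR of each row
```

In practice an MLP learns such weights with backpropagation, which requires a differentiable activation (for example sigmoid or ReLU) in place of the step function.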

Limitations of Perceptrons

Although the single-layer perceptron was a major advance, it has some notable drawbacks:

- Limited to linearly separable problems: It cannot correctly classify XOR or any other data that is not linearly separable.

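- Fails to converge on non-separable data: If the data is not linearly separable, the learning rule never settles on a final set of weights, because some sample is always misclassified and triggers another update.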

- Requires labeled data for training: It uses a supervised learning framework.

- Sensitive to input scaling: Features with very different magnitudes can slow convergence and hurt performance, so inputs are usually standardized first.

- Lacks hidden layers for complex decision-making: Multi-Layer Perceptrons address this limitation.

Code Example

Building and Training a Single-Layer Perceptron Model

The sections below implement a single-layer perceptron, first from scratch with NumPy and then with PyTorch.

Python (NumPy-based) Implementation

The perceptron model is created from scratch in this implementation.

Steps Involved:

Step 1: Set up the learning rate and weights first. All of the weights, including the bias term’s additional weight, are initialised at random.

Step 2: Define the linear layer, which computes the weighted sum of the inputs, Z = XW + b.

Step 3: Define the activation function. The Heaviside step function returns 1 when the weighted sum is >= 0 and 0 otherwise.

Step 4: Define the prediction method, which applies the linear function followed by the activation function.

Step 5: Define the loss function as the difference between the target and the predicted output.

Step 6: Define the training step, which uses the computed error to update the weights and bias with the perceptron learning rule.

Step 7: Fit the model by iterating over the dataset for a predetermined number of epochs, training on each input-target pair.
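Steps 1 to 7 can be sketched as a NumPy class. The constructor argument num_inputs and the fit(X, y, num_epochs) and predict(X) methods match the calls in the usage example below; everything else (the default learning rate of 0.01, the helper method names linear, heaviside_step_fn, loss and train, and storing the bias as the last weight) is a hypothetical choice made for this sketch.

```python
import numpy as np

class Perceptron:
    """Single-layer perceptron following Steps 1-7 (an illustrative sketch)."""

    def __init__(self, num_inputs, learning_rate=0.01):
        # Step 1: random initial weights; the last entry is the bias weight
        self.weights = np.random.rand(num_inputs + 1)
        self.learning_rate = learning_rate

    def linear(self, inputs):
        # Step 2: Z = XW + b, with b stored as the final weight
        return inputs @ self.weights[:-1] + self.weights[-1]

    def heaviside_step_fn(self, z):
        # Step 3: 1 where z >= 0, otherwise 0
        return np.where(z >= 0, 1, 0)

    def predict(self, inputs):
        # Step 4: linear layer followed by the step activation
        return self.heaviside_step_fn(self.linear(inputs))

    def loss(self, prediction, target):
        # Step 5: error = target - predicted output
        return target - prediction

    def train(self, inputs, target):
        # Step 6: perceptron learning rule for a single sample
        error = self.loss(self.predict(inputs), target)
        self.weights[:-1] += self.learning_rate * error * inputs
        self.weights[-1] += self.learning_rate * error

    def fit(self, X, y, num_epochs):
        # Step 7: loop over the whole dataset for a fixed number of epochs
        for _ in range(num_epochs):
            for inputs, target in zip(X, y):
                self.train(inputs, target)
```

Because the weights come from np.random.rand, calling np.random.seed before creating the model (as the usage example does) makes training reproducible.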

Example Usage (Binary Classification on a Linearly Separable Dataset)

Using StandardScaler() for feature scaling and make_blobs() for dataset generation, this section shows how to train and assess the perceptron.

import numpy as np
import matplotlib.pyplot as plt
from sklearn.datasets import make_blobs
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Generate a linearly separable dataset
X, y = make_blobs(n_samples=1000, n_features=2, centers=2, cluster_std=3, random_state=23)

# Split data into 80% training and 20% test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=23, shuffle=True)

# Scale features, fitting the scaler on the training set only
scaler = StandardScaler()
X_train = scaler.fit_transform(X_train)
X_test = scaler.transform(X_test)

# Seed NumPy so the random weight initialization is reproducible
np.random.seed(23)

# Initialize and train the perceptron
# (Perceptron is the model described in Steps 1-7 and must be defined before this runs)
perceptron = Perceptron(num_inputs=X_train.shape[1])
perceptron.fit(X_train, y_train, num_epochs=100)

# Predict and evaluate: accuracy is the fraction of correct predictions
pred = perceptron.predict(X_test)
accuracy = np.mean(pred == y_test)
print("Accuracy:", accuracy)

# Visualize results, colouring each test point by its predicted class
plt.scatter(X_test[:, 0], X_test[:, 1], c=pred)
plt.xlabel('Feature 1')
plt.ylabel('Feature 2')
plt.title('Scatter Plot of Classified Data Points')
plt.show()

The output shows a high accuracy (e.g., 0.975) and a scatter plot of the classified data points.

Binary Classification using Perceptron with PyTorch

This part re-implements the perceptron as a PyTorch nn.Module for binary classification on linearly separable data.

Steps Taken:

Data Preparation: A synthetic dataset is split into training and test sets, scaled, and converted to PyTorch tensors.

Perceptron Model Definition: The single-layer perceptron is an nn.Module containing a linear layer followed by a custom Heaviside step activation function.

Training: The perceptron is trained for a predetermined number of epochs using a simple fixed learning rate and a manually applied weight update rule.

Evaluation and Visualization: Accuracy on the test set is calculated to assess the model’s performance, and the test points are plotted colour-coded by their predicted class.
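A minimal PyTorch sketch of these steps follows. The class name TorchPerceptron, the function train_perceptron, the learning rate, the epoch count, and the two-Gaussian toy dataset (generated with torch.randn instead of the scikit-learn data used earlier) are assumptions for illustration, and plotting is omitted. Because the step function’s gradient is zero almost everywhere, the weights are updated manually inside torch.no_grad() rather than through an optimizer.

```python
import torch
import torch.nn as nn

class TorchPerceptron(nn.Module):
    """Single-layer perceptron: one linear layer followed by a Heaviside step."""

    def __init__(self, num_inputs):
        super().__init__()
        self.linear = nn.Linear(num_inputs, 1)

    def forward(self, x):
        z = self.linear(x).squeeze(-1)
        # Heaviside step: 1.0 where z >= 0, otherwise 0.0
        return (z >= 0).float()

def train_perceptron(model, X, y, lr=0.01, num_epochs=20):
    # The step function has zero gradient almost everywhere, so autograd is
    # not used; the perceptron learning rule is applied by hand instead.
    with torch.no_grad():
        for _ in range(num_epochs):
            for x_i, target in zip(X, y):
                error = target - model(x_i)
                model.linear.weight.add_(lr * error * x_i)
                model.linear.bias.add_(lr * error)
    return model

# Toy data: two Gaussian blobs centred at (-3, -3) and (3, 3)
torch.manual_seed(0)
n = 200
X = torch.cat([torch.randn(n, 2) - 3.0, torch.randn(n, 2) + 3.0])
y = torch.cat([torch.zeros(n), torch.ones(n)])

# Shuffle, then split 80/20 into training and test sets
perm = torch.randperm(2 * n)
X, y = X[perm], y[perm]
X_train, X_test = X[:320], X[320:]
y_train, y_test = y[:320], y[320:]

# Standardize using training-set statistics only
mean, std = X_train.mean(dim=0), X_train.std(dim=0)
X_train = (X_train - mean) / std
X_test = (X_test - mean) / std

model = train_perceptron(TorchPerceptron(num_inputs=2), X_train, y_train)

# Accuracy = fraction of test points whose predicted class matches the label
accuracy = (model(X_test) == y_test).float().mean().item()
print("Accuracy:", accuracy)
```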

The PyTorch version also achieves high accuracy (e.g., 0.975) on the linearly separable dataset.