Advantages of Neural Machine Translation

Explore the advantages of neural machine translation for language conversion, from natural phrasing to real-time performance.

Introduction

Language is one of humanity’s most powerful tools, but with over 7,000 languages spoken worldwide, cross-linguistic communication is difficult. This is where machine translation (MT), the automatic translation of speech or text between languages, enters the picture. Among the different MT methodologies, Neural Machine Translation (NMT) is now the most popular and successful approach.

By using neural networks to model complete sentences as sequences and capture long-range dependencies, NMT, a byproduct of the deep learning revolution, has greatly enhanced translation quality. This article examines the fundamental ideas, architecture, training methods, benefits, drawbacks, and uses of NMT in natural language processing (NLP).

History of Neural Machine Translation

Before delving into NMT, it helps to understand how it evolved.

Rule-Based Machine Translation (RBMT): The conventional technique, which relied on dictionaries and pre-established grammar rules. It offered respectable accuracy in particular domains but struggled with nuance and scalability.

Statistical Machine Translation (SMT): Phrase-based systems such as Moses were prominent in the 2000s. Using probabilistic models, these systems learned translations from massive parallel corpora. Although SMT was more adaptable than RBMT, it handled context poorly.

Neural Machine Translation (NMT): Introduced around 2014, NMT treats translation as a sequence-to-sequence learning problem. Deep learning models learn a mapping from the source language to the target language, which gives more fluent and contextually accurate results.

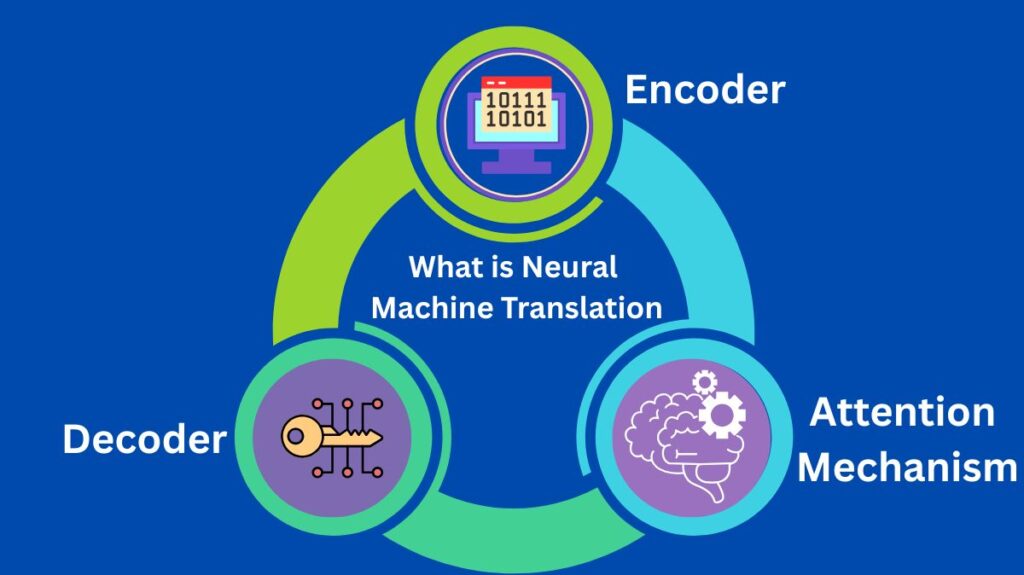

What is Neural Machine Translation?

Neural Machine Translation uses neural networks to translate text between languages. It relies mainly on sequence-to-sequence (seq2seq) models and frequently adds an attention mechanism to increase translation accuracy.
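The sequence-to-sequence view can be written as a conditional probability. The formulation below is the standard one for autoregressive seq2seq models rather than something specific to this article; the symbols are assumptions introduced for illustration, with $x$ the source sentence and $y_1, \dots, y_T$ the target tokens:

$$
P(y \mid x) = \prod_{t=1}^{T} P(y_t \mid y_1, \dots, y_{t-1}, x)
$$

Training typically adjusts the network so that this probability is as high as possible for the reference translations in the parallel corpus.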

Three primary parts make up the translation process in NMT:

- Encoder: Reads the input sentence and converts it into a fixed-length context vector.

- Decoder: Produces the translated output sentence using the context vector.

- Attention Mechanism: Lets the decoder concentrate on the pertinent parts of the source sentence at each step of translation.
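To make the role of attention more concrete, here is a minimal sketch in plain Python. It assumes simple dot-product scoring (one of several possible scoring functions) and made-up toy vectors. The names `softmax`, `dot`, and `attend` are illustrative and do not come from any particular library.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def attend(decoder_state, encoder_states):
    """Dot-product attention over the encoder states.

    Returns the attention weights (one per source position) and the
    context vector, which is the weighted average of the encoder states.
    """
    scores = [dot(decoder_state, h) for h in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [
        sum(w * h[i] for w, h in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return weights, context

# Toy encoder outputs, one vector per source token (values are made up).
encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
# Toy decoder state for the current output step (also made up).
decoder_state = [2.0, 0.0]

weights, context = attend(decoder_state, encoder_states)
print(weights)
print(context)
```

In this toy case the first source position receives the largest weight because its vector is most aligned with the decoder state. Real systems learn these vectors during training and recompute the weights at every decoding step.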

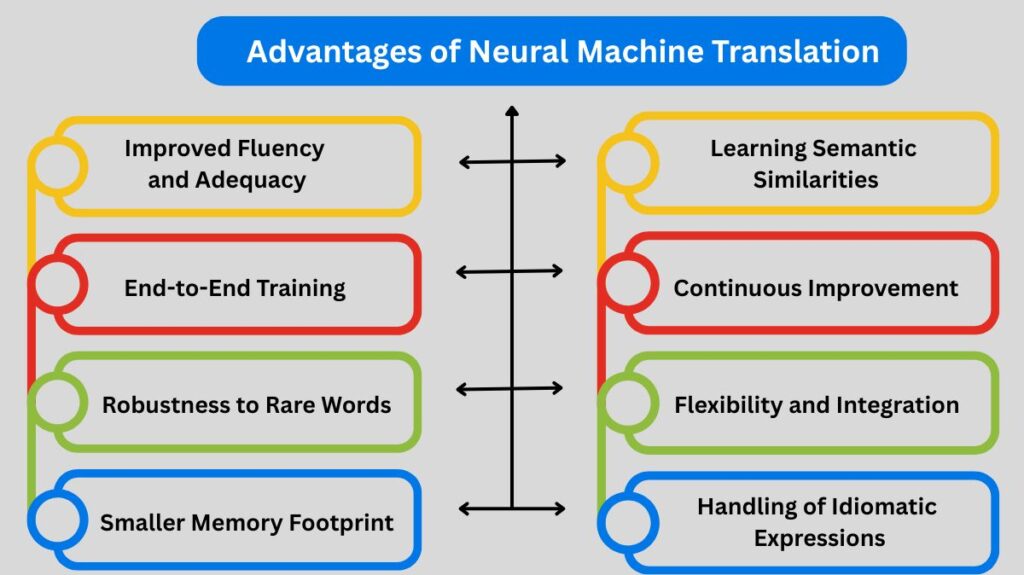

Advantages of Neural Machine Translation

Natural language processing (NLP) has advanced significantly with neural machine translation (NMT), which has various benefits over more conventional techniques like statistical machine translation (SMT):

Improved Fluency and Adequacy

- NMT systems produce more grammatically accurate and natural-sounding translations. By taking the complete input sentence into account and capturing the contextual links between words, they improve the flow and coherence of the output.

- Because it takes context into account, NMT conveys the meaning of the original text more accurately.

End-to-End Training

An NMT model is trained as a single large neural network. With this end-to-end approach, the system jointly learns all the translation-related components (such as alignment and language modeling), so the whole process is optimized directly for translation performance. SMT systems, on the other hand, are composed of several independently tuned sub-components.

Robustness to Rare Words

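SMT depends on discrete word representations and can suffer from data sparsity. NMT models instead typically represent words as continuous vectors (embeddings), which helps them generalize to rare words by sharing information with similar, more frequent words. Words that never appear in the training data remain a challenge, as noted under the disadvantages.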

Smaller Memory Footprint

In general, NMT systems need much less memory than the massive n-gram models used in SMT, which makes them more efficient to store.

Learning Semantic Similarities

Neural networks in NMT learn distributed representations that capture semantic similarity between words. The model can learn, for instance, that “car,” “automobile,” and “vehicle” are related, which helps it produce more relevant translations.
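One common way to measure this kind of similarity is the cosine of the angle between two embedding vectors. The sketch below uses hypothetical three-dimensional vectors chosen by hand for illustration; real models learn embeddings with hundreds of dimensions, and the values here are not taken from any trained system.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, hand-picked so that related words point
# in similar directions. A trained model would learn these values.
embeddings = {
    "car": [0.9, 0.8, 0.1],
    "automobile": [0.85, 0.75, 0.15],
    "banana": [0.1, 0.2, 0.9],
}

sim_related = cosine_similarity(embeddings["car"], embeddings["automobile"])
sim_unrelated = cosine_similarity(embeddings["car"], embeddings["banana"])

print(sim_related > sim_unrelated)  # prints True
```

Because related words end up close together in the vector space, what the model learns about one of them can carry over to the others.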

Continuous Improvement

As NMT models are exposed to more training data, they can consistently enhance the quality of their translations. Neural networks’ capacity for learning and adaptation makes it possible for NMT systems to gradually improve in accuracy.

Flexibility and Integration

Through APIs and SDKs, NMT models can be integrated into a wide range of software applications, providing flexibility in both deployment and usage.

Handling of Idiomatic Expressions

By taking the larger context into account, NMT systems, especially those with complex architectures, are better able to comprehend and translate colloquial language and idiomatic expressions.

Disadvantages of Neural Machine Translation

Although neural machine translation (NMT) has transformed machine translation and natural language processing (NLP), it also has a number of drawbacks and difficulties.

Need for Large Amounts of High-Quality Parallel Data

NMT models are data-hungry: training them effectively requires large volumes of parallel text (source and target language pairs). Obtaining such large, high-quality datasets can be a major obstacle, particularly for low-resource languages or specialized fields.

Challenges with Out-of-Vocabulary (OOV) and Rare Words

Words that were uncommon in the training data frequently cause problems for NMT models. They might fail to translate OOV words, particularly named entities or technical terms, or they might translate them incorrectly. Subword segmentation is one technique that helps lessen this, although it is not a complete solution.
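Subword segmentation can be illustrated with byte-pair encoding (BPE), one widely used approach. The sketch below is a simplified version under stated assumptions: a toy word-frequency table, an end-of-word marker `</w>`, and hypothetical helper names (`get_pair_counts`, `merge_pair`, `learn_bpe`, `segment`). Production tokenizers handle many details that are omitted here.

```python
from collections import Counter

def get_pair_counts(vocab):
    """Count adjacent symbol pairs across all words, weighted by frequency."""
    pairs = Counter()
    for symbols, freq in vocab.items():
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += freq
    return pairs

def merge_pair(pair, vocab):
    """Replace every occurrence of `pair` with one merged symbol."""
    merged = {}
    for symbols, freq in vocab.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def learn_bpe(word_freqs, num_merges):
    """Learn up to `num_merges` merges, most frequent pair first."""
    # Start from single characters plus an end-of-word marker.
    vocab = {tuple(word) + ("</w>",): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)
        vocab = merge_pair(best, vocab)
        merges.append(best)
    return merges, vocab

def segment(word, merges):
    """Split a word into subwords by replaying the learned merges in order."""
    vocab = {tuple(word) + ("</w>",): 1}
    for pair in merges:
        vocab = merge_pair(pair, vocab)
    return list(next(iter(vocab)))

# Toy training data: word -> frequency (made up for illustration).
word_freqs = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
merges, vocab = learn_bpe(word_freqs, num_merges=3)

# "lowest" never appears in the toy data, yet it can still be segmented.
print(segment("lowest", merges))  # prints ['l', 'o', 'w', 'est</w>']
```

The unseen word is broken into pieces the model has already encountered, so it no longer has to be treated as a single unknown token. With only three merges the pieces are still small; real systems learn many thousands of merges.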

Challenges with Long Sentences

Early NMT models, especially those with basic encoder-decoder architectures, struggled to translate very long sentences accurately. Although attention mechanisms have improved this, accurately translating very long and complicated sentences is still difficult.

Lack of Interpretability (Black Box Nature)

The deep neural networks used in NMT are frequently referred to as “black boxes.” Because it is hard to see why a model generates a particular translation, it is also hard to troubleshoot mistakes or learn more about the translation process. In sensitive applications, this lack of transparency may be troublesome.

Potential for Fluent but Inaccurate Translations

Sometimes the translations produced by NMT models sound fluent and grammatically perfect but do not adequately capture the content of the original text. Such errors are deceptive and harder to spot than errors in disfluent translations.

Difficulty Handling Low-Resource Languages

As previously stated, the availability of training data has a significant impact on how well NMT systems work. For languages with limited parallel resources, NMT models frequently perform worse than conventional techniques or fail to produce usable translations at all.

Domain Dependence

NMT models trained on one domain (like news) might not translate text from another domain (like medical or legal) successfully. Additional training data or specific methods are frequently needed to adapt NMT models to new domains.

Computational Expense

Training large NMT models takes a lot of computational resources (time, GPUs, memory). Even deploying and running these models for real-time translation can be computationally demanding.

Potential for Bias

Biases in the training data may be unintentionally learned and reinforced by NMT models, resulting in unfair or incorrect translations pertaining to gender, ethnicity, or other sensitive characteristics.