An introduction to Restricted Boltzmann Machines

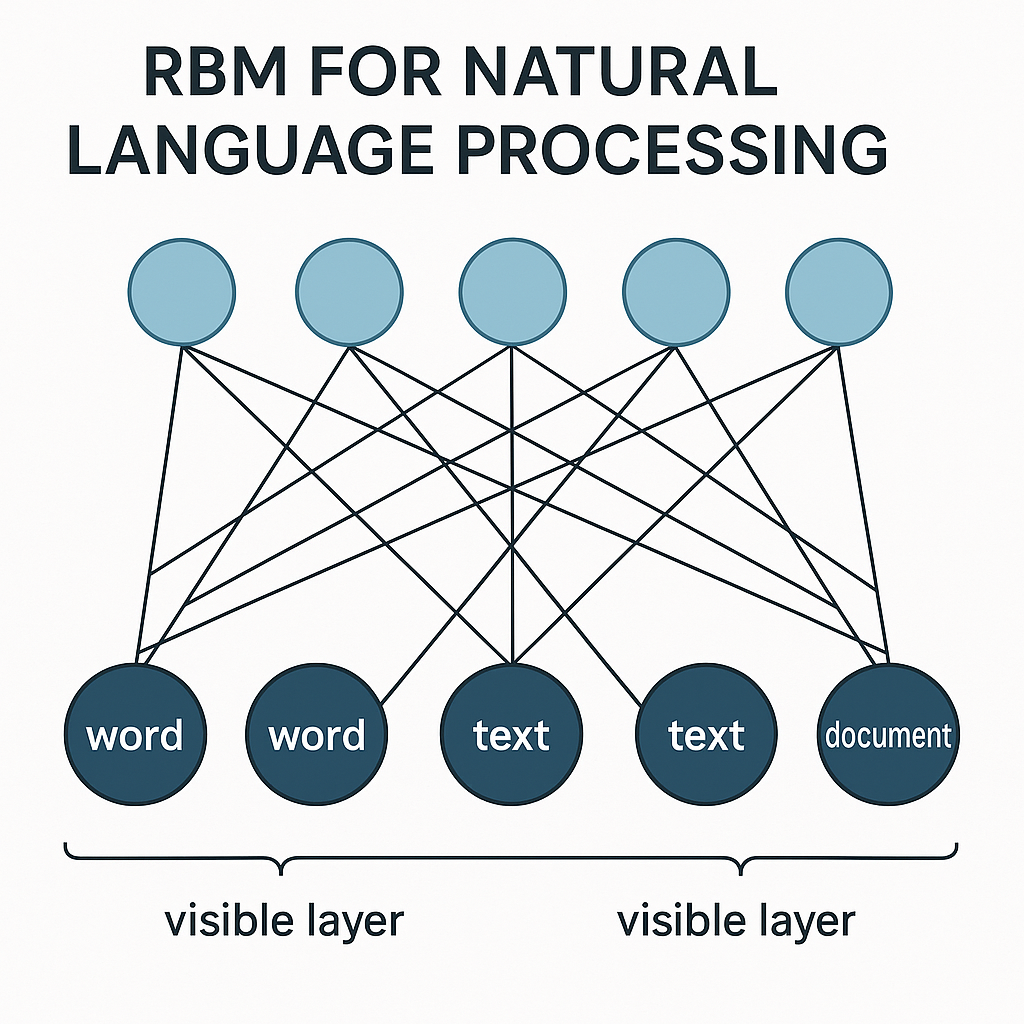

Restricted Boltzmann Machines (RBMs) are neural network models used mainly for unsupervised learning and generative modelling of data. They belong to the family of probabilistic graphical models. In Natural Language Processing (NLP), RBMs can learn a probability distribution over words or texts. Trained on word embeddings or documents, they learn latent features or representations for topic modelling, document classification, and data generation. More generally, they are used for feature extraction and dimensionality reduction, and they can be stacked to form Deep Belief Networks.

Relationship to Other Models

- Hopfield networks, the ancestors of RBMs, are energy-based models used for unsupervised modelling. They store training data instances as local minima of an energy function.

- To achieve better generalization, Hopfield networks were extended to Boltzmann machines: stochastic variants whose units take probabilistic (Bernoulli-distributed) states. A Boltzmann machine has visible states, which represent observed data, and hidden states, which represent latent variables. By modelling the joint distribution of these states, it learns parameters that maximize the probability of the data.

- The Restricted Boltzmann Machine (RBM) is a special case of the Boltzmann machine in which the connections between units are restricted.

Important Features of RBMs

- RBMs are bipartite networks: connections are permitted only between visible and hidden units. No two visible units are connected, and no two hidden units are connected. Compared with the conventional Boltzmann machine, this restriction makes training considerably more efficient.

- They are a special case of Markov random fields and are also known as harmoniums.

- Conventional feed-forward networks map inputs to outputs. RBMs, by contrast, are undirected networks that learn a joint probability distribution over their visible and hidden units.

- Their hidden units provide stochastic latent representations of the data points.

Probability and Energy

- A Boltzmann machine (and therefore an RBM) assigns each state configuration $s = (v, h)$ a probability proportional to its exponentiated negative energy, $P(s) \propto \exp(-E(s))$, where $E(s)$ is the energy of the configuration.

- The probability that a unit is active (state 1) is the logistic sigmoid of its energy gap. The energy gap depends on the unit's bias and on the weighted sum of the states of the units connected to it. This links the logistic sigmoid function to the energy-based view of statistical physics.
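
The sketch below checks the link between energy and the sigmoid numerically, under stated assumptions. It uses the standard binary RBM energy $E(v, h) = -b^\top v - c^\top h - v^\top W h$, with visible biases $b$, hidden biases $c$ and weights $W$. The code computes $P(h_j = 1 \mid v)$ by brute force: it enumerates every hidden configuration under the Boltzmann distribution and sums the weighted states. It then compares that result with the sigmoid of the energy gap $c_j + \sum_i v_i W_{ij}$. The helper names (`energy`, `brute_force_p_h_given_v`) and the random parameters are illustrative only.

```python
import itertools
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def energy(v, h, W, b, c):
    # E(v, h) = -b.v - c.h - v^T W h for binary vectors v and h
    return -(b @ v) - (c @ h) - (v @ W @ h)

def brute_force_p_h_given_v(v, W, b, c):
    """P(h_j = 1 | v) obtained by summing exp(-E) over all hidden states."""
    n_hidden = W.shape[1]
    states = np.array(list(itertools.product([0, 1], repeat=n_hidden)), dtype=float)
    weights = np.array([np.exp(-energy(v, h, W, b, c)) for h in states])
    weights /= weights.sum()      # normalise over h to get P(h | v)
    return weights @ states       # E[h_j | v] equals P(h_j = 1 | v) for binary h_j

rng = np.random.default_rng(0)
n_visible, n_hidden = 4, 3
W = rng.normal(scale=0.5, size=(n_visible, n_hidden))
b = rng.normal(scale=0.1, size=n_visible)
c = rng.normal(scale=0.1, size=n_hidden)
v = np.array([1.0, 0.0, 1.0, 1.0])

exact = brute_force_p_h_given_v(v, W, b, c)
# Energy gap of hidden unit j: E(h_j = 0) - E(h_j = 1) = c_j + sum_i v_i W_ij
via_sigmoid = sigmoid(c + v @ W)
print(np.allclose(exact, via_sigmoid))  # True
```

The two results agree because the bipartite structure makes $P(h \mid v)$ factorise over the hidden units. Each factor is then a two-state Boltzmann distribution, which is exactly a sigmoid.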

Data Generation

Because the states depend on one another cyclically, generating sample data points from a Boltzmann machine requires an iterative Markov Chain Monte Carlo (MCMC) procedure, such as Gibbs sampling. The procedure must run until the chain reaches thermal equilibrium.
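
In an RBM there are no visible-visible or hidden-hidden connections. Gibbs sampling can therefore alternate between two block updates: sample all hidden units at once given the visible units, then sample all visible units at once given the hidden units. The NumPy sketch below shows this block Gibbs sampler for a binary RBM with visible biases `b`, hidden biases `c` and weights `W` of shape (n_visible, n_hidden). The function name `gibbs_chain`, the random parameters and the fixed number of steps are illustrative assumptions. In practice it is hard to know exactly when equilibrium has been reached.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gibbs_chain(v0, W, b, c, n_steps, rng):
    """Run n_steps of block Gibbs sampling starting from visible state v0."""
    if n_steps < 1:
        raise ValueError("n_steps must be at least 1")
    v = v0.copy()
    for _ in range(n_steps):
        # Hidden units are conditionally independent given v: sample them all at once.
        h = (rng.random(W.shape[1]) < sigmoid(c + v @ W)).astype(float)
        # Visible units are conditionally independent given h: sample them all at once.
        v = (rng.random(W.shape[0]) < sigmoid(b + W @ h)).astype(float)
    return v, h

rng = np.random.default_rng(42)
n_visible, n_hidden = 6, 4
W = rng.normal(scale=0.5, size=(n_visible, n_hidden))
b = np.zeros(n_visible)
c = np.zeros(n_hidden)

# Start from a random binary vector; after many steps (v, h) approximates a draw from P(v, h).
v_start = (rng.random(n_visible) < 0.5).astype(float)
v_sample, h_sample = gibbs_chain(v_start, W, b, c, n_steps=1000, rng=rng)
print(v_sample, h_sample)
```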

Training RBMs

- Maximizing the log-likelihood of the training data is the goal of RBM training.

- Traditional Boltzmann machine training can be slow, because MCMC needs a large number of samples to reach thermal equilibrium.

- Contrastive Divergence (CD) is a faster, approximate training algorithm designed specifically for RBMs. Its fastest version, CD1, starts the chain at the training data and runs only one additional MCMC (Gibbs) iteration.

- The weight update is based on the difference between two sets of visible-hidden correlations. The first comes from data-driven samples, where visible states are clamped to the training data and hidden states are sampled. The second comes from model samples, where both visible and hidden states are sampled freely.

- Practical training usually works on mini-batches. To reduce sampling noise, it also uses real-valued probabilities (activations) in place of binary samples at some steps.
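
The NumPy sketch below illustrates CD1 training with mini-batches. It is an illustrative implementation, not a reference one. The toy dataset, learning rate, batch size, epoch count and helper names are arbitrary assumptions. Each weight moves by $\Delta W_{ij} = \eta\,(\langle v_i h_j \rangle_{\text{data}} - \langle v_i h_j \rangle_{\text{model}})$, where the model term is approximated by a single Gibbs step. As the practical advice above suggests, binary hidden samples drive the reconstruction, while probabilities are used for the reconstruction itself and in the correlation statistics.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def train_rbm_cd1(data, n_hidden, lr=0.1, epochs=500, batch_size=4, seed=0):
    """Train a binary RBM with CD1 on the rows of `data`; returns (W, b, c)."""
    rng = np.random.default_rng(seed)
    n_visible = data.shape[1]
    W = rng.normal(scale=0.01, size=(n_visible, n_hidden))
    b = np.zeros(n_visible)   # visible biases
    c = np.zeros(n_hidden)    # hidden biases
    for _ in range(epochs):
        order = rng.permutation(len(data))
        for start in range(0, len(data), batch_size):
            v0 = data[order[start:start + batch_size]]
            # Positive phase: visibles clamped to data; sample binary hiddens.
            p_h0 = sigmoid(c + v0 @ W)
            h0 = (rng.random(p_h0.shape) < p_h0).astype(float)
            # One Gibbs step: reconstruct visibles as probabilities, then hidden probabilities.
            p_v1 = sigmoid(b + h0 @ W.T)
            p_h1 = sigmoid(c + p_v1 @ W)
            # Data correlations minus model correlations, averaged over the mini-batch.
            n = len(v0)
            W += lr * (v0.T @ p_h0 - p_v1.T @ p_h1) / n
            b += lr * (v0 - p_v1).mean(axis=0)
            c += lr * (p_h0 - p_h1).mean(axis=0)
    return W, b, c

def reconstruction_error(data, W, b, c):
    """Mean squared error between the data and its mean-field reconstruction."""
    p_h = sigmoid(c + data @ W)
    p_v = sigmoid(b + p_h @ W.T)
    return float(np.mean((data - p_v) ** 2))

# Toy data: two complementary binary patterns, each repeated four times.
data = np.array([[1, 1, 1, 0, 0, 0],
                 [0, 0, 0, 1, 1, 1]] * 4, dtype=float)
W, b, c = train_rbm_cd1(data, n_hidden=2)
print(reconstruction_error(data, W, b, c))
```

Reconstruction error is only a rough progress indicator. CD1 does not directly minimise it, and it is not the log-likelihood.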

Applications

RBMs have been applied successfully in many supervised and unsupervised settings:

- Dimensionality Reduction: The conditional probabilities of the hidden states given the visible states can serve as a reduced representation of the data.

- Recommender Systems (Collaborative Filtering): RBMs are used for matrix completion, for example to predict missing user ratings. Softmax visible units let them handle non-binary ratings (such as 1–5). Each user can have their own RBM, with the weights shared across users.

- Classification: RBMs are most often used for pretraining. A trained RBM is unrolled into an encoder-decoder network, a classification layer is added on top, and backpropagation fine-tunes the weights. A discriminative variant instead directly optimises the conditional class likelihood, and its class predictions can be computed in closed form.

- Topic Models: RBMs model the words of a document (often as count data, using softmax visible units) with binary hidden units. This supports text-specific dimensionality reduction and latent topic discovery, in a way comparable to PLSA.

- Multimodal Data Learning: RBMs are used with data that combines several modalities, such as text and images.
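
The closed-form prediction of the discriminative variant can be illustrated with a short sketch. It assumes the commonly used classification-RBM energy $E(y, x, h) = -h^\top W x - b^\top x - c^\top h - d_y - h^\top U_{:,y}$, with input $x$, binary hidden units $h$ and a one-hot class $y$. Summing out the hidden units analytically gives $P(y \mid x) \propto \exp\big(d_y + \sum_j \operatorname{softplus}(c_j + U_{jy} + \sum_i W_{ji} x_i)\big)$. The NumPy code below evaluates this formula and checks it against explicit enumeration of every hidden state. The helper names and random parameters are hypothetical.

```python
import itertools
import numpy as np

def softplus(x):
    # log(1 + exp(x)), computed stably
    return np.logaddexp(0.0, x)

def class_probs_closed_form(x, W, U, c, d):
    """P(y | x) for every class y, with the hidden units summed out analytically."""
    # Column y of `pre` holds c_j + U_jy + sum_i W_ji x_i for each hidden unit j.
    pre = (c + W @ x)[:, None] + U
    scores = d + softplus(pre).sum(axis=0)
    scores -= scores.max()          # numerical stability before exponentiating
    p = np.exp(scores)
    return p / p.sum()

def class_probs_brute_force(x, W, U, b, c, d):
    """P(y | x) by summing exp(-E(y, x, h)) over all binary hidden vectors h."""
    n_hidden, n_classes = U.shape
    hs = np.array(list(itertools.product([0, 1], repeat=n_hidden)), dtype=float)
    totals = np.zeros(n_classes)
    for y in range(n_classes):
        for h in hs:
            neg_energy = h @ W @ x + b @ x + c @ h + d[y] + h @ U[:, y]
            totals[y] += np.exp(neg_energy)
    return totals / totals.sum()

rng = np.random.default_rng(1)
n_input, n_hidden, n_classes = 5, 4, 3
W = rng.normal(scale=0.5, size=(n_hidden, n_input))
U = rng.normal(scale=0.5, size=(n_hidden, n_classes))
b = rng.normal(scale=0.1, size=n_input)
c = rng.normal(scale=0.1, size=n_hidden)
d = rng.normal(scale=0.1, size=n_classes)
x = np.array([1.0, 0.0, 1.0, 1.0, 0.0])

p_closed = class_probs_closed_form(x, W, U, c, d)
p_brute = class_probs_brute_force(x, W, U, b, c, d)
print(np.allclose(p_closed, p_brute))  # True
```

The closed form costs $O(\text{hidden} \times \text{classes})$ per input, whereas enumeration is exponential in the number of hidden units. The input bias $b$ cancels in the normalisation, so the closed form does not need it.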

Beyond Binary Data

Although most RBMs assume binary units, the model has been generalized to other data types:

- Categorical or Ordinal Data (like ratings): Make use of softmax visible units.

- Count Data: Make use of softmax visible units.

- Real-valued Data: Make use of real-valued hidden units (such as those with ReLU activation) and Gaussian visible units.
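
Here is a minimal NumPy sketch of how two of these generalized visible units compute their conditional distributions given the hidden layer.

- **Softmax unit:** one unit represents an item rated on a $K$-point scale. Each rating value $k$ has its own bias and weights, and $P(v_k = 1 \mid h) = \operatorname{softmax}_k(b_k + \sum_j W_{kj} h_j)$. A sample is a one-hot vector.
- **Gaussian unit:** assuming unit variance (a common simplification), the conditional is a normal distribution with mean $b_i + \sum_j W_{ij} h_j$.

The parameter shapes, random values and function names are illustrative assumptions.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def softmax_visible_probs(h, W, b):
    """P(rating = k | h) for each item.

    W has shape (n_items, K, n_hidden) and b has shape (n_items, K):
    every (item, rating value) pair has its own weights and bias.
    """
    logits = b + W @ h                         # shape (n_items, K)
    return softmax(logits, axis=1)

def sample_one_hot(probs, rng):
    """Draw one rating per item and return the draws as a one-hot matrix."""
    n_items, K = probs.shape
    choices = np.array([rng.choice(K, p=p) for p in probs])
    one_hot = np.zeros((n_items, K))
    one_hot[np.arange(n_items), choices] = 1.0
    return one_hot

def gaussian_visible_sample(h, W, b, rng):
    """Real-valued visibles with unit variance: v ~ N(b + W h, I)."""
    return rng.normal(loc=b + W @ h, scale=1.0)

rng = np.random.default_rng(7)
n_items, K, n_hidden = 3, 5, 4                 # e.g. ratings on a 1-5 scale
h = (rng.random(n_hidden) < 0.5).astype(float)

W_soft = rng.normal(scale=0.1, size=(n_items, K, n_hidden))
b_soft = np.zeros((n_items, K))
probs = softmax_visible_probs(h, W_soft, b_soft)
ratings = sample_one_hot(probs, rng)
print(ratings.argmax(axis=1) + 1)              # sampled rating (1-5) for each item

W_gauss = rng.normal(scale=0.1, size=(6, n_hidden))
v_real = gaussian_visible_sample(h, W_gauss, np.zeros(6), rng)
print(v_real)
```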

Stacking RBMs

- Deep networks can be built by stacking several RBMs. The RBMs are usually trained greedily, one layer at a time, with each RBM learning from the hidden representations produced by the one below it (the pretraining phase). The learned weights can then initialise a deep feed-forward network, which is fine-tuned with backpropagation.

- Deep Boltzmann Machines (DBMs) and Deep Belief Networks (DBNs) are advanced variants of stacked RBMs with different connection patterns. DBMs have undirected (bidirectional) connections between all neighbouring layers. In DBNs, only the top two layers are connected bidirectionally, and the lower layers use directed, top-down connections.
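
Greedy layer-wise pretraining can be sketched with a compact CD1 trainer. The first RBM is trained on the data, its hidden-unit probabilities become the training data for the second RBM, and so on. The resulting weights and hidden biases are exactly what a feed-forward network of the same shape would be initialised with. This is an illustrative NumPy sketch with several assumptions: the layer sizes, learning rate, epoch count, toy data and helper names (`train_rbm`, `pretrain_stack`, `forward`) are hypothetical, and the backpropagation fine-tuning step is not shown.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def train_rbm(data, n_hidden, lr=0.1, epochs=50, seed=0):
    """Full-batch CD1 training of a binary RBM; returns (W, hidden_bias)."""
    rng = np.random.default_rng(seed)
    W = rng.normal(scale=0.01, size=(data.shape[1], n_hidden))
    b = np.zeros(data.shape[1])
    c = np.zeros(n_hidden)
    n = len(data)
    for _ in range(epochs):
        p_h0 = sigmoid(c + data @ W)
        h0 = (rng.random(p_h0.shape) < p_h0).astype(float)
        p_v1 = sigmoid(b + h0 @ W.T)
        p_h1 = sigmoid(c + p_v1 @ W)
        W += lr * (data.T @ p_h0 - p_v1.T @ p_h1) / n
        b += lr * (data - p_v1).mean(axis=0)
        c += lr * (p_h0 - p_h1).mean(axis=0)
    # The visible bias is dropped: initialising a feed-forward layer needs only W and c.
    return W, c

def pretrain_stack(data, layer_sizes):
    """Train one RBM per layer; each RBM sees the previous layer's hidden probabilities."""
    layers, x = [], data
    for i, n_hidden in enumerate(layer_sizes):
        W, c = train_rbm(x, n_hidden, seed=i)
        layers.append((W, c))
        x = sigmoid(c + x @ W)      # input representation for the next RBM
    return layers

def forward(data, layers):
    """Deterministic pass through the stack, as in the initialised feed-forward network."""
    x = data
    for W, c in layers:
        x = sigmoid(c + x @ W)
    return x

rng = np.random.default_rng(3)
data = (rng.random((20, 8)) < 0.5).astype(float)   # toy binary data
layers = pretrain_stack(data, layer_sizes=[6, 4])
features = forward(data, layers)
print(features.shape)   # (20, 4)
```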

Historically, RBMs played a central role in popularising pretraining methods for deep learning models.