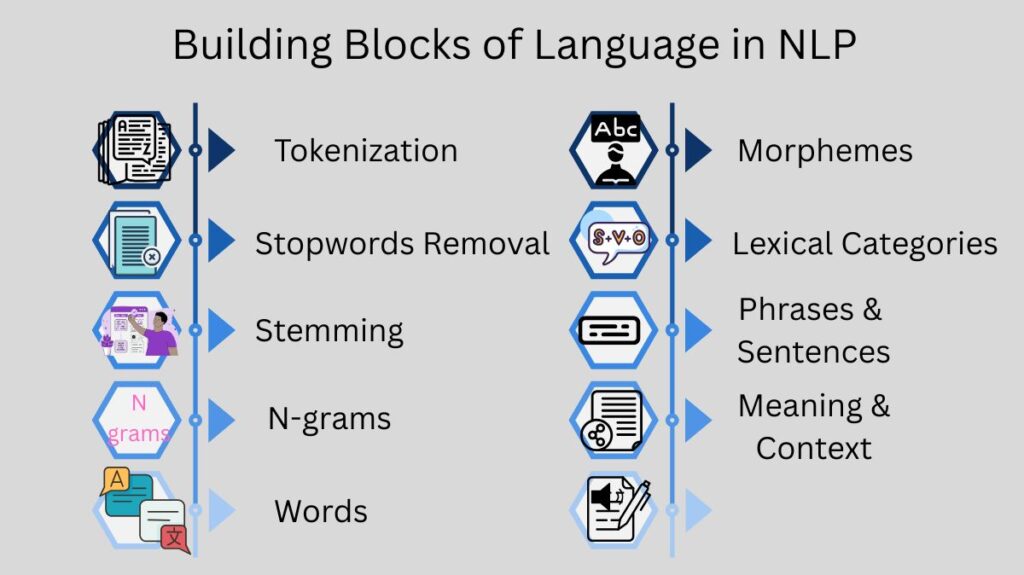

Building Blocks Of Language In NLP

Natural Language Processing (NLP) understands and analyses language through the following interrelated layers of linguistic building blocks:

Tokenization

Tokenising a text means dividing it into smaller units called tokens. Depending on the level of detail a task needs, tokens can be sentences, words, or even individual characters.
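As an illustration of the three granularities, here is a minimal sketch that uses only Python's standard `re` module. The splitting rules are simplified assumptions for demonstration, not the rules of any particular NLP library.

```python
import re

text = "NLP is fun. Tokenisers split text into units!"

# Sentence level: split on whitespace that follows ., ! or ? (a naive rule).
sentences = re.split(r"(?<=[.!?])\s+", text)

# Word level: runs of word characters, with each punctuation mark as its own token.
words = re.findall(r"\w+|[^\w\s]", text)

# Character level: every non-whitespace character is a token.
chars = [c for c in text if not c.isspace()]

print(sentences)  # ['NLP is fun.', 'Tokenisers split text into units!']
print(words)
print(chars[:5])  # ['N', 'L', 'P', 'i', 's']
```

Production tokenisers add many more rules (contractions, URLs, abbreviations), but the choice of granularity works the same way.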

Stopwords Removal

Not every word in a text contributes the same amount of meaning. NLP analyses frequently treat stopwords, common words such as “the,” “and,” and “is,” as noise. Removing them reduces the dimensionality of the data and concentrates the analysis on words that carry meaning, which makes stopword removal a standard step in many text-processing pipelines.
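Stopword removal amounts to filtering tokens against a set. The following sketch assumes a tiny, hand-picked `STOPWORDS` set for illustration only. Real projects usually take a curated list from an NLP library or build one suited to their domain.

```python
# Hypothetical, deliberately tiny stopword set (illustration only).
STOPWORDS = {"the", "and", "is", "a", "of", "on", "in"}

def remove_stopwords(tokens: list[str]) -> list[str]:
    """Keep only tokens whose lowercase form is not in STOPWORDS."""
    return [t for t in tokens if t.lower() not in STOPWORDS]

tokens = "The cat is on the mat and the dog is in the garden".split()
print(remove_stopwords(tokens))  # ['cat', 'mat', 'dog', 'garden']
```

Comparing tokens in lowercase means “The” at the start of a sentence is filtered along with “the”.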

Stemming

Stemming is a text normalisation method that reduces words to a base or root form, usually by stripping affixes. The aim is to capture the core of a word so that its variants, such as “connected,” “connecting,” and “connection,” are grouped together.
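The toy suffix-stripping stemmer below shows the idea. It is a deliberate simplification and not the Porter stemmer or any library's algorithm. The suffix list and the `min_stem` length threshold are assumptions chosen for the example.

```python
# Hypothetical suffix list, tried longest-first (not the Porter algorithm).
SUFFIXES = ("ations", "ation", "ions", "ion", "ing", "ed", "es", "s")

def naive_stem(word: str, min_stem: int = 3) -> str:
    """Strip the first matching suffix if at least min_stem characters remain."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)]
    return word

for w in ["connect", "connected", "connecting", "connection", "connections"]:
    print(w, "->", naive_stem(w))  # every variant maps to "connect"

print(naive_stem("horses"))  # 'hors'
```

The last line shows that a stem need not be a real word. “horses” becomes “hors”, whereas its lemma is “horse”. This is one way stemming differs from lemmatisation.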

N-grams

An n-gram is a contiguous sequence of n items (words, characters, and so on) that appear one after another in a text. Such sequences are used to examine patterns and correlations between words.
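The sketch below extracts n-grams with a sliding window and counts them with the standard library's `collections.Counter` to find the most frequent pattern. The function name `ngrams` is our own choice for illustration.

```python
from collections import Counter

def ngrams(items, n):
    """Return all contiguous n-item sequences of `items` as tuples."""
    return [tuple(items[i : i + n]) for i in range(len(items) - n + 1)]

words = "the cat sat on the mat".split()
print(ngrams(words, 2))   # word bigrams: ('the', 'cat'), ('cat', 'sat'), ...
print(ngrams("text", 3))  # character trigrams: [('t', 'e', 'x'), ('e', 'x', 't')]

# Counting bigrams reveals which word pairs co-occur most often.
counts = Counter(ngrams("the cat sat on the mat the cat ran".split(), 2))
print(counts.most_common(1))  # [(('the', 'cat'), 2)]
```

The same function works on any sequence, so the choice between word and character n-grams depends only on what is passed in.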

Words

Words are generally regarded as the fundamental units of natural-language text. Nevertheless, the term “word” can be ambiguous. One distinguishes between a word’s surface forms, the tokens that actually occur in a text (like “horses”), and its lemma (like “horse”), which in most cases is the dictionary entry. Lexemes, also known as lexical items, group the different word forms that express the same concept; together they make up a language’s lexicon. Tokenisation is one of the basic preprocessing tasks for text, and linking the morphological variants of a word to its lemma is a fundamental part of lexical analysis.
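To make the distinctions concrete, the following sketch counts three things in a short text: tokens (every occurrence), distinct surface forms, and distinct lemmas. The `LEMMAS` table is a hypothetical hand-made lexicon. A real lemmatiser would rely on a full dictionary and morphological rules.

```python
# Hypothetical mini lexicon mapping surface forms to lemmas (illustration only).
LEMMAS = {"horses": "horse", "horse": "horse", "ran": "run", "runs": "run", "run": "run"}

text = "horses run and the horse ran while horses rest"
tokens = text.split()                        # every occurrence counts
surface_forms = set(tokens)                  # distinct forms as written
lemmas = [LEMMAS.get(t, t) for t in tokens]  # unknown forms map to themselves

print(len(tokens), len(surface_forms), len(set(lemmas)))  # 9 8 6
```

Mapping “horses” and “horse” to one lemma, and “ran” and “run” to another, shrinks the vocabulary from eight distinct forms to six lemmas.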

Morphemes

Words have internal structure: they are built from morphemes, which are considered the smallest meaningful units within a word. Morphology is the study of how words are formed and of their internal structure. Because language is generative, NLP systems constantly encounter new words or word forms; when these are morphologically related to known words, their features can be inferred. This makes an understanding of morphological processes crucial in NLP. Morphological analysis is an essential component of lexical analysis and is frequently implemented with finite-state methods.
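The sketch below segments words it has never seen into known morphemes. It uses a recursive affix-stripping approach over a hypothetical toy lexicon (`ROOTS`, `PREFIXES`, `SUFFIXES`). It is not a finite-state analyser and ignores spelling changes at morpheme boundaries.

```python
from typing import List, Optional

# Hypothetical toy lexicon of roots and affixes (illustration only).
ROOTS = {"kind", "help", "play", "agree"}
PREFIXES = ("un", "re", "dis")
SUFFIXES = ("ness", "ment", "ful", "ly", "ed", "s")

def segment(word: str) -> Optional[List[str]]:
    """Recursively strip known prefixes and suffixes until a known root remains."""
    if word in ROOTS:
        return [word]
    for prefix in PREFIXES:
        if word.startswith(prefix) and len(word) > len(prefix):
            rest = segment(word[len(prefix):])
            if rest is not None:
                return [prefix] + rest
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) > len(suffix):
            rest = segment(word[: -len(suffix)])
            if rest is not None:
                return rest + [suffix]
    return None  # no analysis is possible with this lexicon

for w in ["unkindness", "helpfully", "replayed", "disagreements", "happiness"]:
    print(w, segment(w))
```

“happiness” gets no analysis. Its root is spelled “happy”, and handling such changes is where finite-state transducers and similar formal tools come in.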

Lexical Categories (Parts of Speech)

Words are grouped according to their behaviour into lexical categories, also called parts of speech (POS), syntactic categories, or grammatical categories. A word’s POS tag is assigned on the basis of its internal construction, its distributional patterning, and related grammatical properties. Nouns, verbs, and adjectives are three key examples of POS categories. Part-of-speech tagging, which assigns these tags to the words of a text, is a standard NLP task.
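A rule-based tagger can be very small. The sketch below combines a lexicon lookup with suffix heuristics that reflect a word's internal construction. The lexicon, the suffix rules, and the default of `NOUN` for unknown words are all assumptions for illustration. The tag names borrow from the Universal POS tag set, and statistical or neural taggers are far more accurate.

```python
# Hypothetical mini lexicon of known words and their tags.
LEXICON = {"the": "DET", "a": "DET", "dog": "NOUN", "cat": "NOUN", "big": "ADJ", "sees": "VERB"}

# Suffix heuristics for unknown words; the first matching rule wins.
SUFFIX_RULES = [("ing", "VERB"), ("ed", "VERB"), ("ly", "ADV"), ("ous", "ADJ"), ("ness", "NOUN")]

def tag(tokens: list[str]) -> list[tuple[str, str]]:
    """Assign a POS tag to each token: lexicon first, then suffixes, else NOUN."""
    tagged = []
    for tok in tokens:
        word = tok.lower()
        if word in LEXICON:
            pos = LEXICON[word]
        else:
            pos = next((p for suffix, p in SUFFIX_RULES if word.endswith(suffix)), "NOUN")
        tagged.append((tok, pos))
    return tagged

print(tag("The big dog quickly chased a nervous cat".split()))
```

Here “quickly”, “chased”, and “nervous” are not in the lexicon. They are tagged `ADV`, `VERB`, and `ADJ` purely from their endings.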

Phrases and Sentences

Words combine into phrases, and phrases into sentences. Much NLP work treats the sentence as the fundamental unit of meaning analysis, because a sentence conveys a proposition, idea, or notion. A sentence is more than just a list of words: syntax is the study of how sentences are constructed and internally organised. Syntactic analysis, also known as parsing, determines a sentence’s grammatical structure by analysing how its words are arranged and showing the relations between them. The rules of grammar determine which arrangements of words form well-formed sentences. Syntactic structure can be represented either as a phrase structure (constituency) tree or as a dependency graph.
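The sketch below encodes both representations for the sentence “The dog chased a cat” as plain Python data. The analysis is written by hand and was not produced by a parser. The dependency labels loosely follow Universal Dependencies naming, and the data layout (nested tuples, head indices) is our own choice.

```python
# Phrase-structure (constituency) tree as nested tuples: (label, child, child, ...).
TREE = ("S",
        ("NP", ("DET", "The"), ("NOUN", "dog")),
        ("VP", ("VERB", "chased"),
               ("NP", ("DET", "a"), ("NOUN", "cat"))))

def bracketed(node) -> str:
    """Render a tree in the familiar bracketed notation."""
    label, *children = node
    if len(children) == 1 and isinstance(children[0], str):
        return f"({label} {children[0]})"  # pre-terminal: tag plus word
    return f"({label} " + " ".join(bracketed(c) for c in children) + ")"

# Dependency graph: each word points to the index of its head (None marks the root).
WORDS = ["The", "dog", "chased", "a", "cat"]
HEADS = [1, 2, None, 4, 2]
RELS = ["det", "nsubj", "root", "det", "obj"]

def dependency_arcs(words, heads, rels):
    """List (head, relation, dependent) triples."""
    return [(words[h] if h is not None else "ROOT", rel, w)
            for w, h, rel in zip(words, heads, rels)]

print(bracketed(TREE))
print(dependency_arcs(WORDS, HEADS, RELS))
```

The constituency tree groups words into nested phrases (NP, VP). The dependency graph instead links each word directly to the word it modifies, with the verb “chased” as the root.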

Meaning and Context

Interpreting language involves more than analysing its structure. Semantics is the study of meaning, that is, how words and sentences are understood. Pragmatics focuses on how context influences interpretation and how language is used and understood in different situations. Discourse analysis examines the relationships between the sentences in a sequence. Comprehending language may also require a general understanding of the world, or “world knowledge.” Ontological semantics, for instance, relies heavily on a world model, or ontology, to extract and represent meaning.
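A world model can be as simple as an “is-a” hierarchy. Such a hierarchy lets a system infer, for example, that a sentence about a poodle is also about an animal. The `ONTOLOGY` mapping below is a hypothetical toy example. Ontologies used in ontological semantics are far richer, with properties, relations, and constraints on concepts.

```python
# Hypothetical toy ontology: each concept maps to its parent concept.
ONTOLOGY = {
    "poodle": "dog", "dog": "mammal", "cat": "mammal",
    "mammal": "animal", "sparrow": "bird", "bird": "animal",
}

def is_a(concept: str, category: str) -> bool:
    """Walk up the hierarchy from `concept` and report whether `category` is reached."""
    current = concept
    while current is not None:
        if current == category:
            return True
        current = ONTOLOGY.get(current)  # None once the top of the hierarchy is passed
    return False

print(is_a("poodle", "animal"))   # True
print(is_a("sparrow", "mammal"))  # False
```
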

Sound and Writing

Language occurs in two forms: spoken and written. Phonetics is the study of the physical properties of speech sounds. Phonology is the study of how a language’s sound system is structured, including the constraints that pronunciation places on word structure. Orthography, the writing system and its conventions, is not usually counted as a building block in the way words or sentences are, but it is the foundation for text-processing tasks such as sentence segmentation and tokenisation.
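Orthographic conventions matter in practice. In English the period marks both sentence ends and abbreviations, so a segmenter has to tell the two apart. The sketch below compares a naive split with one that consults an abbreviation list. The `ABBREVIATIONS` set is a hypothetical, minimal example. A real system needs a much larger, language-specific list or a trained model.

```python
import re

# Hypothetical abbreviation list (illustration only).
ABBREVIATIONS = {"dr.", "mr.", "mrs.", "e.g.", "etc."}

def split_sentences(text: str) -> list[str]:
    """Split after ., ! or ? plus whitespace, unless the previous token is an abbreviation."""
    sentences, start = [], 0
    for match in re.finditer(r"(?<=[.!?])\s+", text):
        last_token = text[start:match.start()].split()[-1].lower()
        if last_token in ABBREVIATIONS:
            continue  # this period belongs to an abbreviation, not a sentence boundary
        sentences.append(text[start:match.start()])
        start = match.end()
    if start < len(text):
        sentences.append(text[start:])
    return sentences

text = "Dr. Smith arrived late. He apologised!"
naive = re.split(r"(?<=[.!?])\s+", text)
print(naive)                  # ['Dr.', 'Smith arrived late.', 'He apologised!']
print(split_sentences(text))  # ['Dr. Smith arrived late.', 'He apologised!']
```
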

Language processing draws on all of these layers of linguistic structure: morphemes, words and their categories (POS), the combination of words into phrases and sentences (syntax), and their interpretation in context (semantics, pragmatics, discourse).