Example Based Machine Translation (EBMT) in NLP

Machine translation (MT) is a major area of NLP: it uses computational techniques to translate text automatically from one language to another. Example Based Machine Translation (EBMT) stands out among MT techniques for its intuitive, data-driven approach, which translates by analogy with stored examples.

EBMT emulates how humans often translate: by recalling earlier examples and adapting them. It is particularly helpful in fields where translation consistency is essential and parallel corpora (collections of texts paired with their translations in another language) are available.

What is EBMT?

“Example Based Machine Translation” is an MT method that uses a parallel corpus of bilingual sentence pairs. Rather than relying solely on probabilities or grammar rules, EBMT finds examples similar to the input text and adapts their translations to produce a new output.

Makoto Nagao proposed this approach in the 1980s, based on the observation that humans translate by analogy. Someone who knows how to translate “I like apples” can easily translate “I like bananas” by changing a single word.

How EBMT Works

EBMT systems follow a three-step pipeline:

Matching

The system searches the bilingual corpus for source sentences that are similar to the input sentence. It may look for exact matches, partial matches, or similar linguistic patterns.
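Matching can be thought of as a nearest-neighbour search over the corpus. The Python sketch below uses word-level edit distance as the similarity metric. This is the number of words that must be inserted, deleted, or substituted to turn one sentence into the other. That choice is an assumption, and real EBMT systems may use other measures. The toy corpus and the names `EXAMPLE_BASE`, `tokenize`, `word_edit_distance`, and `best_match` are hypothetical.

```python
# Hypothetical toy example base: (source, target) sentence pairs.
EXAMPLE_BASE = [
    ("I like apples.", "J'aime les pommes."),
    ("I eat bread.", "Je mange du pain."),
    ("She likes music.", "Elle aime la musique."),
]


def tokenize(sentence: str) -> list[str]:
    """Lowercase, drop the final full stop, and split on whitespace."""
    return sentence.lower().rstrip(".").split()


def word_edit_distance(a: list[str], b: list[str]) -> int:
    """Levenshtein distance counted in whole words rather than characters."""
    prev = list(range(len(b) + 1))
    for i, word_a in enumerate(a, start=1):
        curr = [i]
        for j, word_b in enumerate(b, start=1):
            cost = 0 if word_a == word_b else 1
            curr.append(min(prev[j] + 1,          # delete word_a
                            curr[j - 1] + 1,      # insert word_b
                            prev[j - 1] + cost))  # keep or substitute
        prev = curr
    return prev[-1]


def best_match(sentence: str) -> tuple[str, str, int]:
    """Return the stored pair closest to the input, plus its distance."""
    tokens = tokenize(sentence)
    source, target = min(
        EXAMPLE_BASE,
        key=lambda pair: word_edit_distance(tokens, tokenize(pair[0])),
    )
    return source, target, word_edit_distance(tokens, tokenize(source))


print(best_match("I like oranges."))
# ('I like apples.', "J'aime les pommes.", 1)
```

Here the input differs from the stored example “I like apples.” by a single word, so that example is retrieved with a distance of 1.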

Alignment

After locating a suitable example, the system aligns corresponding words or phrases between its source and target sentences. This identifies translation equivalents, showing which part of the target sentence translates which part of the source.
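A very simple way to align a retrieved example is to look up each source word in a bilingual lexicon. Each word is then linked to a target word listed as a possible translation. The sketch below assumes such a lexicon; `LEXICON` and the helper names are hypothetical. Practical systems typically learn alignments statistically from the corpus instead.

```python
# Hypothetical toy bilingual lexicon: English word -> possible French forms.
LEXICON = {
    "i": {"j'", "je"},
    "like": {"aime"},
    "apples": {"pommes"},
}


def tokenize_en(sentence: str) -> list[str]:
    """Lowercase, drop the final full stop, and split on whitespace."""
    return sentence.lower().rstrip(".").split()


def tokenize_fr(sentence: str) -> list[str]:
    """Like tokenize_en, but also splits elided forms such as "j'aime"."""
    return sentence.lower().rstrip(".").replace("'", "' ").split()


def align(source: list[str], target: list[str]) -> list[tuple[int, int]]:
    """Link each source word to the first target word its lexicon entry allows."""
    links = []
    for i, word in enumerate(source):
        for j, candidate in enumerate(target):
            if candidate in LEXICON.get(word, set()):
                links.append((i, j))
                break
    return links


src = tokenize_en("I like apples.")
tgt = tokenize_fr("J'aime les pommes.")
for i, j in align(src, tgt):
    print(src[i], "->", tgt[j])
# i -> j'
# like -> aime
# apples -> pommes
```

The French article “les” stays unaligned in this sketch. A real aligner would also need to handle function words and one-to-many links.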

Adaptation via Recombination

If the input sentence does not exactly match an example, EBMT modifies portions of the existing translation. For example, when the input differs from the example by a noun or verb, bilingual dictionaries or phrase tables can supply the translation of the new word. The translated fragments are then reassembled into a coherent, grammatically sound sentence.

For instance:

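- Training example: source “I like apples.”, target “J’aime les pommes.”

- Input sentence: “I like oranges.”

- EBMT output: “J’aime les oranges.”

The system retrieves the stored example and aligns “apples” with “pommes.” It then substitutes the translation of “oranges” into that position.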
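The recombination step in this example can be sketched as follows. Wherever the input differs from the matched example, the target word aligned to that position is replaced by the lexicon translation of the new word. This minimal Python sketch rests on several assumptions:

- The match and alignment have already been computed, and are hard-coded below.
- Only one-for-one word substitutions occur.
- A hypothetical toy `LEXICON` supplies the translations.

It ignores agreement and other grammatical adjustments a real system must make.

```python
# Hypothetical toy lexicon: English word -> French translation.
LEXICON = {"apples": "pommes", "oranges": "oranges", "bananas": "bananes"}

# The matched example and its word alignment (source index -> target index),
# hard-coded here as if produced by the matching and alignment steps.
EXAMPLE_SOURCE = ["i", "like", "apples"]
EXAMPLE_TARGET = ["j'", "aime", "les", "pommes"]
ALIGNMENT = {0: 0, 1: 1, 2: 3}


def recombine(input_tokens: list[str]) -> list[str]:
    """Replace the target words aligned to source words that differ from the input."""
    if len(input_tokens) != len(EXAMPLE_SOURCE):
        raise ValueError("this sketch only handles one-for-one word substitutions")
    output = list(EXAMPLE_TARGET)
    for i, (new_word, old_word) in enumerate(zip(input_tokens, EXAMPLE_SOURCE)):
        if new_word != old_word:
            if i not in ALIGNMENT or new_word not in LEXICON:
                raise KeyError(f"cannot adapt {old_word!r} to {new_word!r}")
            output[ALIGNMENT[i]] = LEXICON[new_word]
    return output


def detokenize(tokens: list[str]) -> str:
    """Join tokens into a sentence, attaching words directly after an apostrophe."""
    text = ""
    for token in tokens:
        glue = "" if not text or text.endswith("'") else " "
        text += glue + token
    return text[0].upper() + text[1:] + "."


print(detokenize(recombine(["i", "like", "oranges"])))  # J'aime les oranges.
```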

Key Components of EBMT

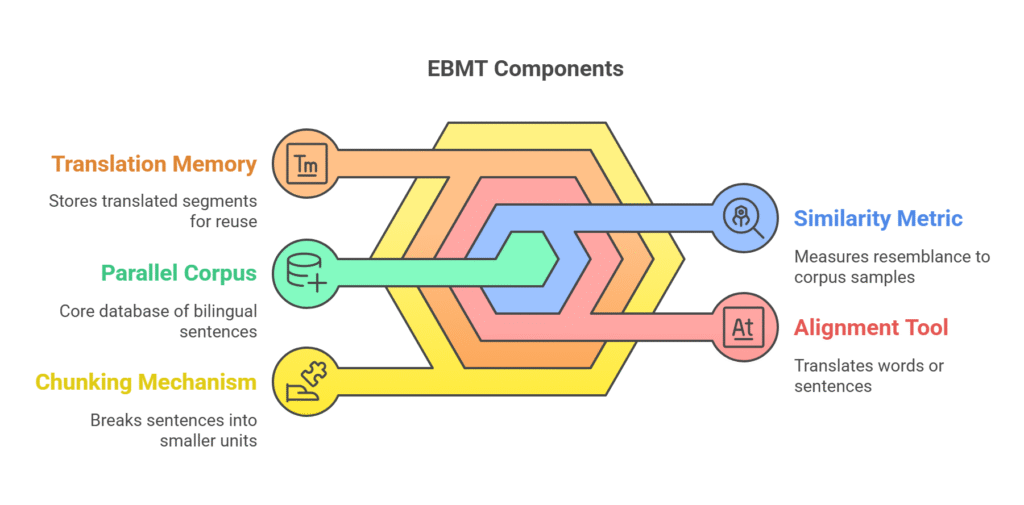

- Parallel Corpus: The database of bilingual sentence pairs.

- Similarity Metric: Calculates how closely an input sentence resembles the examples in the corpus.

- Alignment Tool: Links corresponding words or phrases between the source and target sides of each example.

- Translation Memory (TM): Stores translated segments for later reuse.

- Chunking Mechanism: Breaks sentences into smaller phrases or units, making them easier to align and recombine.
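Some EBMT research implements chunking with the marker hypothesis. Sentences are split wherever a closed-class “marker” word, such as a determiner, preposition, or pronoun, begins a new phrase. The Python sketch below assumes a tiny hypothetical marker list (`MARKERS`) and requires each chunk to contain at least one non-marker word. It is an illustration, not a complete implementation of any published system.

```python
# Hypothetical, deliberately tiny set of English marker words
# (determiners, prepositions, pronouns, conjunctions).
MARKERS = {"the", "a", "an", "of", "in", "on", "to", "with", "and",
           "i", "you", "he", "she", "it", "we", "they"}


def chunk(sentence: str) -> list[str]:
    """Split a sentence into chunks that each start at a marker word."""
    chunks: list[list[str]] = [[]]
    for word in sentence.lower().rstrip(".").split():
        current = chunks[-1]
        has_content = any(w not in MARKERS for w in current)
        # Open a new chunk only once the current one holds a content word,
        # so runs like "of the" stay together with the noun that follows.
        if word in MARKERS and has_content:
            chunks.append([word])
        else:
            current.append(word)
    return [" ".join(c) for c in chunks if c]


print(chunk("The manual explains the setup of the printer."))
# ['the manual explains', 'the setup', 'of the printer']
```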

Advantages of Example Based Machine Translation

- Human-Like Translation Style: Because EBMT reuses real human translations, its outputs typically sound more fluent and natural.

- Language-Independent Architecture: Given a decent parallel corpus, EBMT can be adapted to new languages relatively easily. It does not require in-depth linguistic analysis or knowledge of grammar rules.

- Translation Consistency: Stored examples ensure that frequently used expressions and industry-specific terms are translated reliably and consistently.

- Ease of Maintenance: Updating the system only requires adding new sentence pairs to the database. There is no need to retrain complex models or re-engineer rules.

- Effectiveness with Fixed Phrasing: EBMT is well suited to semi-structured or standardized material such as legal texts, technical manuals, and help files.

Disadvantages of EBMT

- Dependency on Data: Performance depends heavily on the size and quality of the parallel corpus. With too few examples, EBMT struggles to produce accurate translations.

- Inadequate Generalization: Unlike neural models, EBMT has difficulty translating sentences that differ greatly from its stored examples.

- Unreliable Results for Unseen Input: When an input sentence deviates significantly from the existing examples, recombination may produce ungrammatical or awkward output.

- Scalability Problems: As the corpus grows, search and alignment slow down unless indexing or pre-processing is used to optimize them.

- Limited Language Coverage: Low-resource languages, which have few parallel corpora, benefit less from EBMT.

Challenges in EBMT

Retrieval of Examples

Finding the best-matching sentence or phrase in a large database both quickly and accurately is computationally demanding.
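One common way to make retrieval tractable is to pre-filter the corpus with an inverted index. An expensive similarity metric then only has to score a handful of candidates. The sketch below uses simple word overlap as the filtering criterion, which is an assumption. The toy corpus and the names `build_index` and `candidates` are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus of (source, target) pairs.
EXAMPLE_BASE = [
    ("I like apples.", "J'aime les pommes."),
    ("I eat bread.", "Je mange du pain."),
    ("She likes music.", "Elle aime la musique."),
    ("They like green tea.", "Ils aiment le thé vert."),
]


def tokenize(sentence: str) -> list[str]:
    """Lowercase, drop the final full stop, and split on whitespace."""
    return sentence.lower().rstrip(".").split()


def build_index(examples: list[tuple[str, str]]) -> dict[str, set[int]]:
    """Map each source word to the ids of the examples that contain it."""
    index = defaultdict(set)
    for example_id, (source, _target) in enumerate(examples):
        for word in set(tokenize(source)):
            index[word].add(example_id)
    return index


def candidates(sentence: str, index: dict[str, set[int]], top_k: int = 2) -> list[int]:
    """Return ids of the examples sharing the most distinct words with the input."""
    overlap = Counter()
    for word in set(tokenize(sentence)):
        for example_id in index.get(word, ()):
            overlap[example_id] += 1
    return [example_id for example_id, _count in overlap.most_common(top_k)]


INDEX = build_index(EXAMPLE_BASE)
for example_id in candidates("I like oranges.", INDEX, top_k=1):
    print(EXAMPLE_BASE[example_id])
# ('I like apples.', "J'aime les pommes.")
```

Only the surviving candidates would then be ranked with the full similarity metric, instead of scanning the whole corpus.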

Alignment of Chunks

Automatically aligning phrases and segments between source and target sentences is error-prone, particularly when the two languages structure their sentences very differently.

Quality of Recombination

Piecing together fragments from different examples can easily produce ungrammatical output if it is not done carefully.

Domain Adaptability

Because vocabulary and structure vary between domains, EBMT may perform well in one domain (such as legal or medical translation) but poorly in another.

Managing Context

EBMT usually lacks a deep understanding of semantics or context, which can lead to ambiguous or inaccurate translations.

Applications of EBMT

Despite the popularity of neural techniques, EBMT remains useful in several situations:

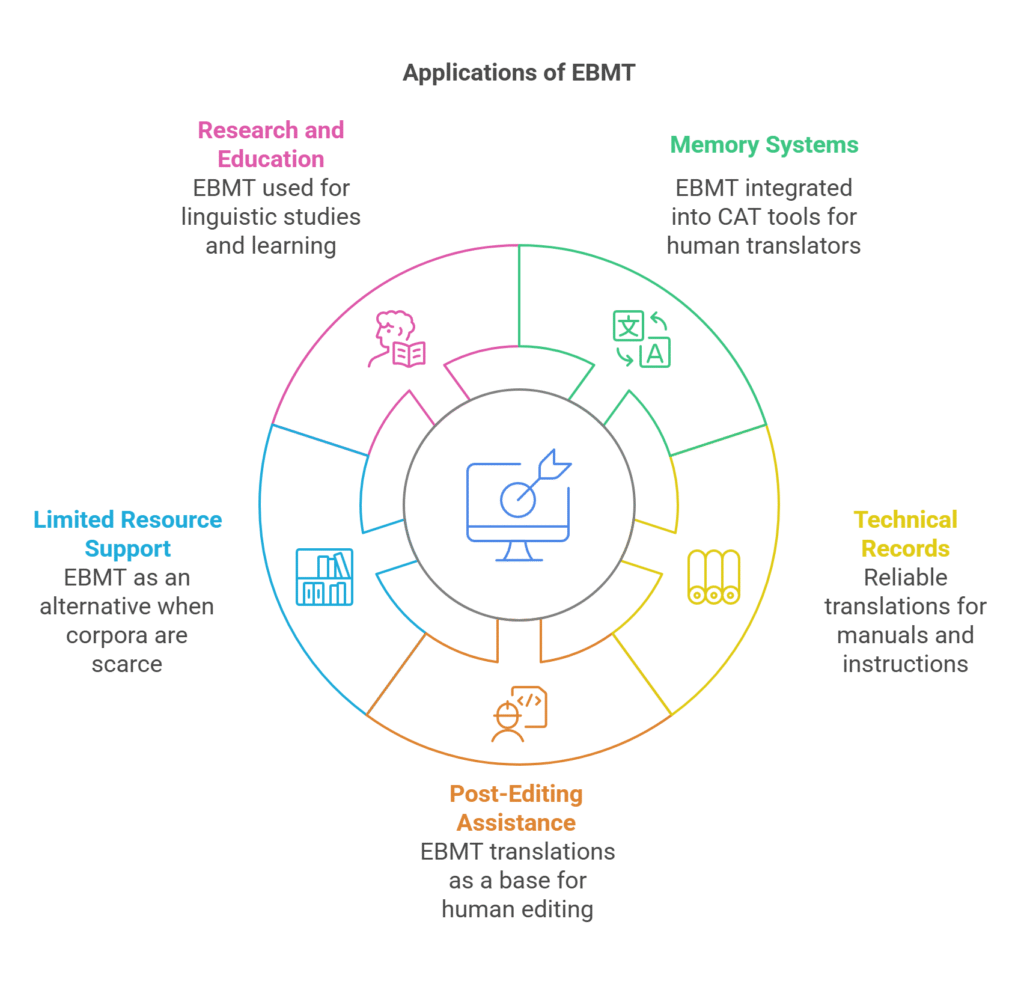

- Translation Memory Systems: EBMT ideas are widely used in the Computer-Assisted Translation (CAT) tools that human translators rely on, such as SDL Trados and memoQ.

- Technical Documentation: Manuals and instructions that contain repetitive language benefit from EBMT’s ability to produce consistent translations.

- Post-Editing Support: EBMT output frequently serves as a starting point for human post-editing, which speeds up the translation process.

- Low-Resource Language Support: EBMT can be a good alternative when no large corpora are available for training a neural system.

- Research and Education: Linguists and scholars can use EBMT systems to explore corpus-based learning, bilingual alignment, and phrase translation.

The Future of EBMT

Although neural machine translation has gained popularity in recent years, EBMT remains relevant. Many contemporary systems take a hybrid approach that combines EBMT with statistical or neural models. For example, translation memory systems built on EBMT principles are used alongside neural models, particularly in business environments where control and consistency are essential.
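The hybrid setup described above can be sketched as a simple router. It reuses a translation memory entry when its fuzzy-match score clears a threshold, and otherwise falls back to an MT system. This Python sketch is an illustration built on assumptions, not how any particular product works:

- It uses the standard library’s `difflib.SequenceMatcher.ratio()` as the fuzzy-match score. Commercial CAT tools use their own scoring.
- It uses a threshold of 0.9.
- A hypothetical `neural_translate` stub stands in for a real neural model.

The memory contents are likewise made up.

```python
from difflib import SequenceMatcher

# Hypothetical translation memory: stored source segments -> approved translations.
TRANSLATION_MEMORY = {
    "Press the power button.": "Appuyez sur le bouton d'alimentation.",
    "Close the cover.": "Fermez le couvercle.",
}


def neural_translate(segment: str) -> str:
    """Hypothetical stand-in for a call to a neural MT system."""
    return f"<NMT output for: {segment}>"


def translate(segment: str, threshold: float = 0.9) -> tuple[str, str, float]:
    """Reuse the closest TM entry if it is similar enough, otherwise fall back to NMT."""
    best_source, best_score = None, 0.0
    for source in TRANSLATION_MEMORY:
        score = SequenceMatcher(None, segment, source).ratio()
        if score > best_score:
            best_source, best_score = source, score
    if best_source is not None and best_score >= threshold:
        return TRANSLATION_MEMORY[best_source], "translation memory", best_score
    return neural_translate(segment), "neural fallback", best_score


print(translate("Press the power button."))
# ("Appuyez sur le bouton d'alimentation.", 'translation memory', 1.0)
print(translate("Open the paper tray.")[1])
# neural fallback
```

Returning the match score alongside the translation lets a translator see how much to trust a reused segment before post-editing it.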

Because of its interpretability, reusability, and domain control, EBMT is especially helpful in workflows that require close human involvement or high accuracy.

In conclusion

Example Based Machine Translation is one of the foundational techniques in the development of machine translation and natural language processing. By reusing historical examples and adapting them to new inputs, EBMT mimics the way human translators often work. Despite its limits in scalability and generalization, it offers excellent translation consistency, natural-sounding output, and strong performance in domain-specific applications. Given the right data and optimization, EBMT remains a powerful tool in the translator’s toolbox, particularly when paired with modern neural approaches.