Syntactic analysis: what is it? This article explains syntactic analysis in natural language processing in detail, including how lexical analysis (tokens) differs from syntactic analysis (sentence structure), and walks through an example of syntactic analysis.

What is Syntactic analysis?

Parsing, also known as syntactic analysis, is a basic stage in natural language processing (NLP). It involves working out how to describe the structure of a group of words, usually a sentence, according to a formal grammar. The procedure examines the constituent words to check the grammar and word arrangement and to uncover how the words are linked. An English syntactic analyser would reject a sentence such as “The school goes to boy” because its word order is not grammatical.
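To make “rejected by a syntactic analyser” concrete, here is a minimal sketch of a grammar-based recogniser. The toy grammar, lexicon, and the function names `expand` and `is_grammatical` are hypothetical. `expand` tries every rule for a symbol and returns all positions where a derivation of that symbol could end; a sentence is accepted if S can cover all of its words. This simple top-down strategy only works because the toy grammar has no left-recursive rules.

```python
# Hypothetical toy grammar: each nonterminal maps to a list of possible right-hand sides.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["Det", "N"]],
    "VP": [["V", "PP"], ["V", "NP"]],
    "PP": [["P", "NP"]],
}
# Hypothetical lexicon: part-of-speech categories and the words they cover.
LEXICON = {
    "Det": {"the"},
    "N": {"boy", "school"},
    "V": {"goes"},
    "P": {"to"},
}

def expand(symbol, tokens, start):
    """Return the set of end positions where `symbol` can derive tokens[start:end]."""
    if symbol in LEXICON:  # part-of-speech category: match a single word
        if start < len(tokens) and tokens[start] in LEXICON[symbol]:
            return {start + 1}
        return set()
    ends = set()
    for rhs in GRAMMAR[symbol]:  # try every rule for this nonterminal
        positions = {start}
        for child in rhs:  # each child must start where the previous one ended
            positions = {end for pos in positions for end in expand(child, tokens, pos)}
        ends |= positions
    return ends

def is_grammatical(sentence):
    """Accept the sentence only if S can derive every one of its words."""
    tokens = sentence.lower().split()
    return len(tokens) in expand("S", tokens, 0)

print(is_grammatical("The boy goes to the school"))  # True
print(is_grammatical("The school goes to boy"))      # False
```

This toy grammar rejects “The school goes to boy” simply because it has no rule allowing a bare noun such as “boy” without a determiner; a real analyser encodes far more of English grammar.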

Traditional NLP frequently treats language analysis as a series of steps, reflecting the levels that linguists distinguish. Syntactic analysis comes after text preprocessing (such as tokenisation and sentence segmentation) and lexical analysis: in the traditional pipeline, tokenised and lexically analysed input is passed to the syntactic parser. The parser’s output, which gives the words structure and order, is then meant to help with semantic analysis, which is concerned with literal meaning, and pragmatic analysis, which takes context into account. Although this phased division is helpful for software engineering and teaching, it is acknowledged that, in practice, language processing does not always divide neatly into these discrete stages.
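The preprocessing stages that feed the parser can be sketched in a few lines. This is a minimal sketch assuming naive regular-expression rules (split sentences after “.”, “!” or “?”; treat runs of word characters and each punctuation mark as tokens). The function names are hypothetical, and real tokenisers handle abbreviations, numbers, and other cases these rules miss.

```python
import re

def segment_sentences(text):
    """Naive sentence segmentation: split on whitespace that follows . ! or ?"""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def tokenize(sentence):
    """Naive tokenisation: runs of word characters/apostrophes, or single punctuation marks."""
    return re.findall(r"[\w']+|[^\w\s]", sentence)

def preprocess(text):
    """Stages that run before the syntactic parser in a classical pipeline."""
    return [tokenize(s) for s in segment_sentences(text)]

print(preprocess("The dog chased the cat. Is Tom a wise man?"))
# [['The', 'dog', 'chased', 'the', 'cat', '.'], ['Is', 'Tom', 'a', 'wise', 'man', '?']]
```

Each inner list is one sentence’s token sequence, which is the form of input a syntactic parser would then consume.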

Grammar-driven parsing is usually a means to an end rather than an end in itself; it does not replace other processing steps, such as assigning meaning to the sentence. Typically, the goal is a hierarchical syntactic structure that is suitable for semantic interpretation.

What is the purpose of syntactic analysis?

In NLP, syntactic analysis, or parsing, is used to study the grammar of phrases and sentences: it determines how words combine into phrases and how phrases combine into sentences. Classical natural language processing places syntactic analysis after lexical analysis (including tokenization) and before semantic and pragmatic analysis, because its organized output is thought to be easier to interpret semantically.

How does Syntactic Analysis work?

Syntactic analysis focusses on the form of a sentence. It examines the syntax and word order of a sentence to show how the words relate to one another. Sentences are more than linear sequences of words, and understanding their structure is critical to deriving meaning, which is what makes this analysis vital. Uncovering the underlying structure produces a structured object that later stages of processing can manipulate and interpret more easily.

Approaches to syntactic analysis

Various approaches and formalisms are used to carry out syntactic analysis:

- Finding Grammatical Structure: The goal of natural language processing (NLP) techniques, especially those grounded in generative linguistics, is to ascertain the syntactic or grammatical structure of sentences.

- Mapping to Parse Trees: The task of mapping a string of words to its parse tree is known as syntactic parsing.

- Dependency Grammar (DG) and Phrase Structure Grammar (PSG) are popular formalisms for representing syntactic structure.

- Phrase Structure Grammar (PSG): This method breaks sentences into phrases (e.g., noun, verb, and prepositional phrases) and recursively segments those phrases into smaller components. Phrase structure analysis draws on traditional sentence diagrams.

- Dependency Grammar (DG): The syntactic structure is composed of lexical elements connected by asymmetric, binary relations known as dependencies. Parsing links each word in a sentence to the word it depends on (its head), so a word can have several dependents but only one head.

- Syntactic Information: Constituent boundaries (clause, NP), the grammatical functions of words or constituents (subject, complement, auxiliary), and dependencies between words or constituents (head/dependent) are all examples of the syntactic information a parser identifies.

- Managing Ambiguity: Ambiguity, where a sentence has more than one possible structure and therefore more than one interpretation, is a major issue for syntactic parsing. Parsing algorithms must be chosen carefully to deal with it, and probabilistic models such as Probabilistic Context-Free Grammars (PCFGs) are used to select the most likely analysis.

- Syntactic Features: Syntactic analysis can also supply features for other tasks, such as syntactic relationships and structures extracted from syntax trees.
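To make the ambiguity point concrete, here is a minimal sketch of how a PCFG scores competing parses of the classic ambiguous sentence “I saw the man with the telescope”, where the prepositional phrase can attach to the verb or to the noun. The grammar, its rule probabilities, and the helper `tree_prob` are hypothetical; a real PCFG would estimate the probabilities from a treebank. A tree’s probability is the product of the probabilities of the rules used to build it, and the parser prefers the higher-scoring tree.

```python
from math import prod

# Hypothetical rule probabilities: (parent, right-hand side) -> P(rule | parent).
RULE_PROB = {
    ("S", ("NP", "VP")): 1.0,
    ("VP", ("V", "NP")): 0.6,
    ("VP", ("VP", "PP")): 0.4,
    ("NP", ("NP", "PP")): 0.2,
    ("NP", ("Det", "N")): 0.5,
    ("NP", ("Pro",)): 0.3,
    ("PP", ("P", "NP")): 1.0,
    ("Pro", ("I",)): 1.0,
    ("V", ("saw",)): 1.0,
    ("Det", ("the",)): 1.0,
    ("N", ("man",)): 0.5,
    ("N", ("telescope",)): 0.5,
    ("P", ("with",)): 1.0,
}

def tree_prob(tree):
    """Probability of a (label, child, ...) tree: product of the rule probabilities it uses."""
    if isinstance(tree, str):  # a word: no rule applied here
        return 1.0
    label, *children = tree
    rhs = tuple(c if isinstance(c, str) else c[0] for c in children)
    return RULE_PROB[(label, rhs)] * prod(tree_prob(c) for c in children)

subj = ("NP", ("Pro", "I"))
obj = ("NP", ("Det", "the"), ("N", "man"))
pp = ("PP", ("P", "with"), ("NP", ("Det", "the"), ("N", "telescope")))
verb_attach = ("S", subj, ("VP", ("VP", ("V", "saw"), obj), pp))   # saw ... with the telescope
noun_attach = ("S", subj, ("VP", ("V", "saw"), ("NP", obj, pp)))   # the man with the telescope

best = max([verb_attach, noun_attach], key=tree_prob)
print(tree_prob(verb_attach), tree_prob(noun_attach))  # about 0.0045 and 0.00225
print(best is verb_attach)                             # True
```

With these made-up numbers the verb attachment wins; different treebank statistics could just as well favour the noun attachment.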

Syntactic analysis frequently builds on the results of earlier stages of linguistic analysis. Morphological analysis, which includes lemmatization and POS tagging, precedes syntactic analysis: the first layer of linguistic annotation is usually morphosyntactic (POS, lemma, and inflection information), and layers describing syntactic relations come next.

Ultimately, syntactic analysis provides the structural basis that semantic analysis uses to determine a sentence’s meaning. For instance, semantic analysis can use the grammatical structure found by syntactic analysis to analyze how the meanings of words combine into the meaning of the sentence, as well as the relationships between individual words.


Broadly, parsing methods can be divided according to the grammatical formalism they use:

Phrase Structure Grammar Parsing, also known as Constituency Parsing: This method breaks sentences down into constituents, or phrases, which are in turn broken down into smaller constituents. It describes Noun Phrases (NP), Verb Phrases (VP), Prepositional Phrases (PP), and other phrase and clause structures. A phrase structure analysis frequently draws inspiration from conventional sentence diagrams and may borrow concepts from generative grammar to handle displaced elements and long-distance dependencies. The usual output is a constituent parse tree: a rooted tree whose leaves are the original words and whose internal nodes each cover a contiguous span of words and are labelled with that span’s syntactic category. Typical categories include S (sentence), NP, VP, PP, Det (determiner), and N (noun). Treebanks such as the Penn Treebank annotate sentences with parse trees, offering a data-driven view of syntax and a source of training data for parsers.

Dependency Grammar Parsing, also known as Dependency Parsing: Dependency Grammar (DG) is a formalism in which the syntactic structure is formed by lexical items connected by binary, asymmetric relations known as dependencies. Rather than constructing constituent phrases, a dependency parse shows how words relate by identifying the “parent” or “head word” of every word in the sentence. Dependency-based models are studied as a distinct family of parsing models.
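A dependency parse is often stored as a head array: for each word, the index of its head, with 0 standing for an artificial ROOT. The minimal sketch below builds such an array for “The dog chased the cat” using the arc-standard transition system, one common form of transition-based (shift-reduce style) dependency parsing. The transition sequence is written out by hand here; a trained parser would predict each transition with a classifier. The function name `parse` and the transition labels are illustrative choices, not a specific library’s API.

```python
def parse(words, transitions):
    """Apply arc-standard transitions and return each word's head index (0 = ROOT)."""
    stack = [0]                                # 0 is an artificial ROOT token
    buffer = list(range(1, len(words) + 1))    # words are numbered from 1
    heads = [None] * (len(words) + 1)
    for t in transitions:
        if t == "SHIFT":                       # move the next word onto the stack
            stack.append(buffer.pop(0))
        elif t == "LEFT-ARC":                  # second item depends on the top item
            dependent = stack.pop(-2)
            heads[dependent] = stack[-1]
        elif t == "RIGHT-ARC":                 # top item depends on the second item
            dependent = stack.pop()
            heads[dependent] = stack[-1]
        else:
            raise ValueError(f"unknown transition: {t}")
    if buffer or stack != [0]:
        raise ValueError("transitions did not produce a complete parse")
    return heads[1:]

words = ["The", "dog", "chased", "the", "cat"]
steps = ["SHIFT", "SHIFT", "LEFT-ARC", "SHIFT", "LEFT-ARC",
         "SHIFT", "SHIFT", "LEFT-ARC", "RIGHT-ARC", "RIGHT-ARC"]
heads = parse(words, steps)
for word, head in zip(words, heads):
    print(word, "->", words[head - 1] if head else "ROOT")
# The -> dog, dog -> chased, chased -> ROOT, the -> cat, cat -> chased
```

Each arc transition attaches one word and removes it from the stack, so a sentence of n words is parsed in exactly 2n transitions (here, 10).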

The parsing process frequently produces a parse tree. In a parse tree built from a context-free grammar, the root is the sentence, the individual words are the terminals at the leaves, and the intermediate nodes (such as noun_phrase and verb_phrase) are non-terminals with children.
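A constituent parse tree can be represented as nested tuples of the form (label, child, child, ...), where the leaves are words. The minimal sketch below uses a hand-written tree for “The dog chased the cat” and hypothetical helper names. It prints the tree in the bracketed style used by treebanks such as the Penn Treebank (with this article’s category labels rather than the Penn tag set) and lists the contiguous word span covered by each node.

```python
# Hand-written constituent tree for "The dog chased the cat".
TREE = ("S",
        ("NP", ("Det", "The"), ("N", "dog")),
        ("VP", ("V", "chased"),
               ("NP", ("Det", "the"), ("N", "cat"))))

def bracketed(tree):
    """Render the tree as a bracketed string, e.g. (NP (Det the) (N cat))."""
    if isinstance(tree, str):      # leaf: the word itself
        return tree
    label, *children = tree
    return "(" + label + " " + " ".join(bracketed(c) for c in children) + ")"

def spans(tree, start=0):
    """Return (end, nodes), where nodes lists (label, start, end) for every internal node."""
    if isinstance(tree, str):      # a word covers exactly one position
        return start + 1, []
    label, *children = tree
    end, nodes = start, []
    for child in children:         # children cover adjacent, non-overlapping spans
        end, child_nodes = spans(child, end)
        nodes += child_nodes
    return end, nodes + [(label, start, end)]

print(bracketed(TREE))
# (S (NP (Det The) (N dog)) (VP (V chased) (NP (Det the) (N cat))))
print(spans(TREE)[1])
```

The root S spans words 0 to 5 (the whole sentence), while the VP spans words 2 to 5 (“chased the cat”), matching the idea that every internal node covers a contiguous stretch of the sentence.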

Numerous syntactic parsing methods and algorithms have been investigated, including:

- Grammar-driven parsing: The process of analysing a word string using a formal grammar. The grammar defines a search space, and the parser looks for a sequence of rule rewritings (a derivation) that turns the grammar’s start category into the input string.

- Statistical parsing: Statistical parsing maps sentences to their preferred syntactic representations, either by choosing the single best analysis or by presenting a ranked list of candidates. Statistical parsers are frequently trained on annotated corpora such as treebanks. Approaches include probabilistic context-free grammars (PCFGs), generative models, and discriminative models.

- Chart parsing: Chart parsing stores the results of parsing substrings in a well-formed substring table (a chart) so that they can be reused instead of recomputed.

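- CKY parsing (the Cocke–Kasami–Younger algorithm): A dynamic programming technique for parsing with context-free grammars, which requires the grammar to be in Chomsky Normal Form.

- LR parsing: A method of deterministic parsing.

- Deductive parsing: A general framework for parsing.

- Shift-reduce parsing: Another parsing algorithm, which builds a parse by shifting words onto a stack and reducing groups of them to larger units.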

- Transition-based parsing: A method that represents parsing as a series of state changes.
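As a rough illustration of CKY, the sketch below fills a chart bottom-up over increasingly long spans, assuming a toy grammar already in Chomsky Normal Form (every rule has either two nonterminals or a single word on its right-hand side). The grammar and the function name `cky_count` are hypothetical. Instead of building trees, each chart cell counts how many ways a nonterminal can cover a span, which also exposes ambiguity.

```python
from collections import defaultdict

# Hypothetical toy grammar in Chomsky Normal Form.
BINARY = [  # (parent, left child, right child)
    ("S", "NP", "VP"), ("VP", "V", "NP"), ("VP", "VP", "PP"),
    ("NP", "NP", "PP"), ("NP", "Det", "N"), ("PP", "P", "NP"),
]
LEXICAL = {  # word -> categories that can produce it
    "I": {"NP"}, "saw": {"V"}, "the": {"Det"},
    "man": {"N"}, "telescope": {"N"}, "with": {"P"},
}

def cky_count(words):
    """Return the number of distinct S parses of `words`, using CKY dynamic programming."""
    n = len(words)
    # chart[i][j][A] = number of ways nonterminal A can derive words[i:j]
    chart = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, w in enumerate(words):                 # spans of length 1: lexical rules
        for category in LEXICAL.get(w, ()):
            chart[i][i + 1][category] += 1
    for length in range(2, n + 1):                # longer spans, shortest first
        for i in range(n - length + 1):           # span start
            j = i + length                        # span end
            for k in range(i + 1, j):             # split point
                for parent, left, right in BINARY:
                    count = chart[i][k][left] * chart[k][j][right]
                    if count:
                        chart[i][j][parent] += count
    return chart[0][n]["S"]

print(cky_count("I saw the man".split()))                     # 1
print(cky_count("I saw the man with the telescope".split()))  # 2
```

Storing backpointers instead of counts would recover the trees themselves, and keeping the highest rule-probability product instead of a count turns the same loop into Viterbi parsing with a PCFG.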

Lexical vs syntactic analysis

The main goals of lexical analysis include feature extraction and data cleaning using methods like lemmatization, stemming, and spelling correction.

Conversely, syntactic analysis seeks to determine the functions of words inside a phrase, comprehend the connections between words, and analyse the grammatical structure of sentences.

Take a look at these sentences for an example:

Tom is a wise man.

Is Tom a wise man?

Both sentences contain exactly the same words, yet their word order gives them different structures: the first is a statement, while the second, with “is” moved in front of the subject, is a yes/no question.

Basic lexical processing alone cannot make this distinction. To understand how the words in a sentence are connected, you need more advanced syntactic processing methods.
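A quick way to see the limitation is to compare the two sentences at the lexical level. In this minimal sketch, `bag_of_words` (a hypothetical helper) keeps only lowercased word counts, so the two sentences look identical. `clause_type` is a deliberately crude, hypothetical word-order rule (a sentence that starts with an auxiliary verb is treated as a yes/no question) that tells them apart only because it looks at word order.

```python
from collections import Counter

# Hypothetical, incomplete list of auxiliary verbs.
AUXILIARIES = {"is", "are", "was", "were", "do", "does", "did", "can", "will"}

def bag_of_words(sentence):
    """Lexical view: lowercased word counts, with punctuation and word order discarded."""
    return Counter(word.strip(".?!").lower() for word in sentence.split())

def clause_type(sentence):
    """Crude word-order rule: an auxiliary verb in first position signals a yes/no question."""
    first_word = sentence.split()[0].lower()
    return "question" if first_word in AUXILIARIES else "statement"

a = "Tom is a wise man."
b = "Is Tom a wise man?"
print(bag_of_words(a) == bag_of_words(b))  # True
print(clause_type(a), clause_type(b))      # statement question
```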

Syntactic analysis takes into account several aspects of a sentence that lexical analysis ignores. Among these are:

Word order and meaning

Syntactic analysis aims to determine how the words in a text depend on one another, and word order is a key clue. Changing the order of the words can change the meaning or make the text harder to understand.

Holding on to Stop Words

Removing stop words can change the entire meaning of a sentence.
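As a minimal illustration, assume a small hypothetical stop list that, like some widely used lists, includes the negation “not”. After filtering, a sentence and its negation become indistinguishable:

```python
# Hypothetical stop list; note that it contains the negation "not".
STOP_WORDS = {"a", "an", "the", "is", "not", "to"}

def remove_stop_words(sentence):
    """Lowercase, drop final punctuation, and filter out stop words."""
    return [w for w in sentence.lower().rstrip(".?!").split() if w not in STOP_WORDS]

print(remove_stop_words("Tom is a wise man."))      # ['tom', 'wise', 'man']
print(remove_stop_words("Tom is not a wise man."))  # ['tom', 'wise', 'man']
```

Two sentences with opposite meanings end up with the same token list, which is why syntactic analysis keeps stop words.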

Morphology of Words

Stemming and lemmatization reduce words to their base form, which discards grammatical information (such as tense and number) that the sentence’s syntax depends on.
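The sketch below uses a deliberately naive, hypothetical suffix stripper (not the Porter stemmer or any real library) to show the effect: after stemming, two sentences that differ in number (“dog” vs “dogs”) and tense (“chases” vs “chased”) become identical, so the agreement and tense information a parser relies on is gone.

```python
# Hypothetical, deliberately naive suffix stripper (not the Porter stemmer).
SUFFIXES = ("ing", "ed", "es", "s")

def stem(word):
    """Strip the first matching suffix, keeping at least three characters of the word."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def stem_sentence(sentence):
    return [stem(w) for w in sentence.lower().split()]

print(stem_sentence("The dog chases the cats"))  # ['the', 'dog', 'chas', 'the', 'cat']
print(stem_sentence("The dogs chased the cat"))  # ['the', 'dog', 'chas', 'the', 'cat']
```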

Parts-of-speech of Words in a Sentence

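Identifying the correct part of speech for each word is crucial, because the same word can play different roles; for example, “book” is a noun in “read a book” but a verb in “book a table”.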


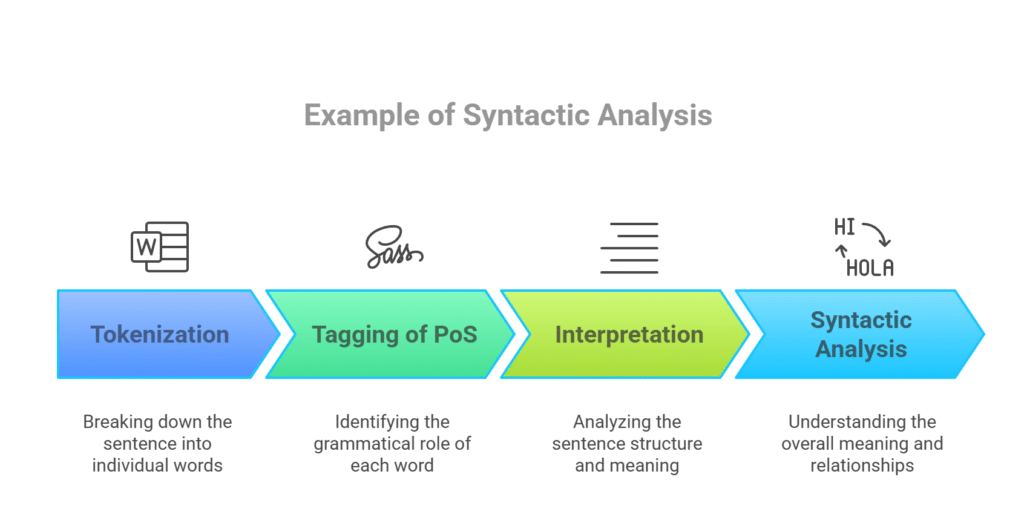

Example of syntactic analysis

Consider the sentence “The dog chased the cat.”

Tokenization

“The”, “dog”, “chased”, “the”, “cat”, “.”

Part-of-speech (POS) tagging

“The” and “the”: determiner (article); “dog”: noun; “chased”: verb (past tense); “cat”: noun

Syntactic analysis

The parser identifies “chased” as the verb, “dog” as the subject, and “cat” as the object. It may also build a dependency tree to show how the words are connected.

Interpretation

Understanding the meaning of the sentence, including the roles of the dog (the one chasing) and the cat (the one being chased) in the chasing action.
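The tagging and parsing steps of this example can be approximated with a rule-based sketch. The mini-lexicon, tag names, and the subject/verb/object rule below are hypothetical and only cover simple transitive sentences like this one; real systems use trained taggers and parsers instead.

```python
import re

# Hypothetical mini-lexicon mapping lowercased tokens to part-of-speech tags.
LEXICON = {"the": "DET", "dog": "NOUN", "cat": "NOUN", "chased": "VERB", ".": "PUNCT"}

def tag(sentence):
    """Tokenize (words and punctuation separately) and look each token up in LEXICON."""
    tokens = re.findall(r"\w+|[^\w\s]", sentence)
    return [(tok, LEXICON[tok.lower()]) for tok in tokens]

def subject_verb_object(tagged):
    """Crude rule for simple transitive clauses: the nearest noun before the
    verb is the subject and the first noun after it is the object."""
    verb_i = next(i for i, (_, t) in enumerate(tagged) if t == "VERB")
    subject = next(w for w, t in reversed(tagged[:verb_i]) if t == "NOUN")
    obj = next(w for w, t in tagged[verb_i + 1:] if t == "NOUN")
    return subject, tagged[verb_i][0], obj

tagged = tag("The dog chased the cat.")
print(tagged)
# [('The', 'DET'), ('dog', 'NOUN'), ('chased', 'VERB'), ('the', 'DET'), ('cat', 'NOUN'), ('.', 'PUNCT')]
print(subject_verb_object(tagged))  # ('dog', 'chased', 'cat')
```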

Techniques

- Rule-based approaches: Parsing sentences according to predetermined grammar rules.

- Statistical techniques: Predicting grammatical structure with statistical models trained on huge datasets.

- Machine learning algorithms: Using neural networks and other machine learning models to identify linguistic patterns.

In many NLP tasks, such as text summarization, question answering, and machine translation, syntactic analysis is an essential first step.