Feature-based text summarization is an NLP method for producing concise summaries of lengthy texts. It extracts characteristics (features) of each sentence and uses them to assess how important that sentence is to the summary. Sentences are then ranked by the scores computed from these features.

- The main idea of feature-based summarization is that each sentence in the text is given a score based on a set of predefined features. Sentences with higher scores are deemed more significant and are therefore more likely to be included in the final summary.

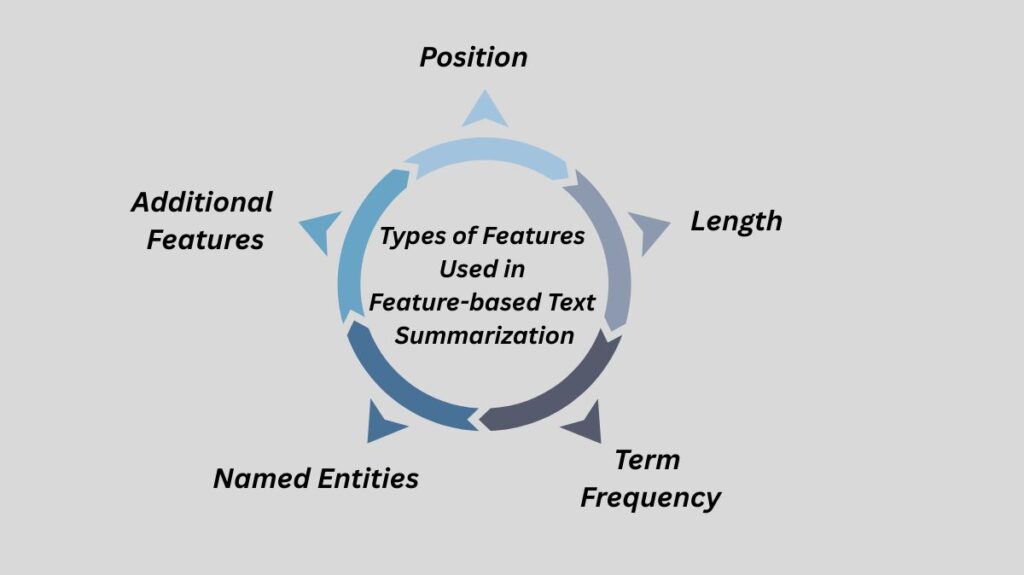

Types of Features Used in Feature-based Text Summarization in NLP

A sentence’s relevance can be evaluated by extracting features such as the following:

Position: A sentence’s placement within a document can indicate how important it is (for example, the first and last sentences or paragraphs of a document are often crucial).
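The position feature can be sketched as a function that assigns a score to each sentence index. The linear scheme below, in which the first and last sentences score 1.0 and scores fall towards the middle, is a hypothetical choice for illustration; real systems tune or learn such weights.

```python
def position_scores(n_sentences: int) -> list[float]:
    """Return a score in [0, 1] for each sentence position.

    Hypothetical linear scheme: the first and last sentences score 1.0,
    and the score falls linearly towards the middle of the document.
    """
    if n_sentences <= 0:
        return []
    half_span = (n_sentences - 1) / 2  # largest possible distance from either end
    scores = []
    for i in range(n_sentences):
        distance_from_end = min(i, n_sentences - 1 - i)
        scores.append(1.0 if half_span == 0 else 1.0 - distance_from_end / half_span)
    return scores


print(position_scores(5))  # [1.0, 0.5, 0.0, 0.5, 1.0]
```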

Length: Sentence length can also play a role, although the ideal length depends on the context and the preferred summary style.
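One hedged way to turn length into a feature is to reward sentences whose word count is close to a target. The `ideal_words` parameter and the linear penalty below are assumptions for illustration, not a standard formula.

```python
def length_score(sentence: str, ideal_words: int = 20) -> float:
    """Score a sentence by how close its word count is to an ideal length.

    `ideal_words` is a hypothetical tuning parameter; the best value depends
    on the corpus and on the style of summary wanted. Scores fall linearly
    from 1.0 (exact match) and are clipped at 0.0.
    """
    n_words = len(sentence.split())
    return max(0.0, 1.0 - abs(n_words - ideal_words) / ideal_words)


print(length_score("Short one.", ideal_words=4))  # 2 words -> 0.5
```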

Term Frequency: How often a word occurs in the text can indicate how relevant it is to the text’s main ideas, and sentences containing such words are likely to be relevant as well. Methods such as TF-IDF (Term Frequency-Inverse Document Frequency) rank words by their significance within a document relative to a whole corpus: a word receives a high weight when it is frequent in the document but rare across the corpus. TF-IDF weights are widely used in information retrieval and text categorisation, have also proved effective for sentiment classification, and can be used in conventional topic-based text classification.
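The following sketch scores sentences with TF-IDF using only the standard library. It assumes each sentence plays the role of a "document" when computing IDF, and it uses the smoothed IDF formula ln((1 + n) / (1 + df)) + 1, which is also scikit-learn's default. A sentence’s score is the sum of its words’ TF-IDF weights, with TF normalised by sentence length; the tokenizer is deliberately naive.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and keep runs of letters (a deliberately simple tokenizer)."""
    return re.findall(r"[a-z]+", text.lower())


def tfidf_sentence_scores(sentences: list[str]) -> list[float]:
    """Sum of TF-IDF weights per sentence, treating each sentence as a document."""
    tokenized = [tokenize(s) for s in sentences]
    n = len(tokenized)
    # Document frequency: the number of sentences each word appears in.
    df = Counter(word for tokens in tokenized for word in set(tokens))
    scores = []
    for tokens in tokenized:
        if not tokens:
            scores.append(0.0)
            continue
        tf = Counter(tokens)
        scores.append(sum(
            (count / len(tokens)) * (math.log((1 + n) / (1 + df[word])) + 1)
            for word, count in tf.items()
        ))
    return scores


sents = ["the cat sat", "the cat ran", "quantum entanglement experiments"]
print([f"{s:.2f}" for s in tfidf_sentence_scores(sents)])  # ['1.42', '1.42', '1.69']
```

The third sentence scores highest because none of its words appear elsewhere, so each carries the maximum IDF weight.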

Named Entities: Named entities, such as people, organisations, or places, can signal that a sentence carries informative content. The process of locating and extracting entities from text is known as Named Entity Recognition (NER).
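Running a trained NER model does not fit in a short self-contained example, so the sketch below uses a hypothetical gazetteer (a hand-written list of known names) as a crude stand-in for NER and counts entity mentions per sentence. A real system would take the entities from an NER model instead, for example spaCy’s `doc.ents`.

```python
import re

# Hypothetical gazetteer of known entity names. This is only a stand-in for
# a trained NER model and will miss any entity that is not listed.
GAZETTEER = {"Marie Curie", "Sorbonne", "Paris", "Nobel Prize"}


def entity_count(sentence: str, gazetteer: set[str] = GAZETTEER) -> int:
    """Count whole-word, case-sensitive mentions of gazetteer entities."""
    return sum(
        len(re.findall(r"\b" + re.escape(name) + r"\b", sentence))
        for name in gazetteer
    )


print(entity_count("Marie Curie taught at the Sorbonne in Paris."))  # 3
print(entity_count("She worked long hours."))  # 0
```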

Additional Features:

These might include the presence of topic words, cue words, or semantic information.

Other features that can be used in feature-based text summarization include the following:

Syntactic features: Features based on syntactic information can be included, such as fragments of a sentence’s syntactic (parse) tree and its sub-trees.

Head chunks: A sentence’s initial noun chunk might serve as a feature.

Semantically related words: Words that frequently co-occur with a particular question class can be used as features because they are semantically connected to it. Although this idea comes from question classification, the underlying notion of semantic relatedness could also be applied to judging sentence importance in summarisation.

Word embeddings: Vector representations of words that capture syntactic and semantic information can be used as features. A sentence can be represented by combining or averaging the vectors of the words it contains.
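Averaging word vectors into a sentence vector can be sketched as follows. The three-dimensional embeddings are made up for illustration; real embeddings such as word2vec or GloVe have hundreds of dimensions and are learned from large corpora. Skipping out-of-vocabulary words is one common choice, assumed here.

```python
# Hypothetical toy embeddings; real ones are learned and much larger.
TOY_EMBEDDINGS: dict[str, list[float]] = {
    "cats": [1.0, 0.0, 2.0],
    "chase": [0.0, 1.0, 0.0],
    "mice": [2.0, 0.0, 1.0],
}


def sentence_vector(sentence: str, embeddings: dict[str, list[float]], dim: int = 3) -> list[float]:
    """Average the vectors of known words; unknown words are skipped."""
    vectors = [embeddings[w] for w in sentence.lower().split() if w in embeddings]
    if not vectors:
        return [0.0] * dim  # no known words: fall back to the zero vector
    # zip(*vectors) groups the i-th component of every vector together.
    return [sum(component) / len(vectors) for component in zip(*vectors)]


print(sentence_vector("Cats chase mice", TOY_EMBEDDINGS))  # [1.0, 0.3333333333333333, 1.0]
```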

Sentence embeddings: Fixed-size embeddings of sentences can be produced using models such as BERT. These embeddings can then be utilized as features to determine the significance of a sentence for summarization.

Graph-based features: TextRank is a graph-based algorithm in its own right, but features derived from a graph representation of the text, such as the degree of a sentence node (the number of links to other sentences based on similarity), can also be used in a feature-based approach.
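A sentence node’s degree can be computed by linking every pair of sentences whose similarity reaches a threshold. This sketch assumes bag-of-words cosine similarity and a hypothetical threshold of 0.3; both are illustrative choices rather than fixed parts of the technique.

```python
import math
import re
from collections import Counter


def bag_of_words(sentence: str) -> Counter:
    """Word counts for a sentence, lowercased, letters only."""
    return Counter(re.findall(r"[a-z]+", sentence.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def degree_scores(sentences: list[str], threshold: float = 0.3) -> list[int]:
    """Degree of each sentence node in a similarity graph (hypothetical threshold)."""
    bags = [bag_of_words(s) for s in sentences]
    degrees = [0] * len(sentences)
    for i in range(len(bags)):
        for j in range(i + 1, len(bags)):
            if cosine(bags[i], bags[j]) >= threshold:
                degrees[i] += 1  # an undirected edge adds to both endpoints
                degrees[j] += 1
    return degrees


print(degree_scores(["The cat sat on the mat.", "A cat lay on the mat.", "Stock prices fell sharply."]))  # [1, 1, 0]
```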

Machine learning model features: Machine learning models, such as Support Vector Machines or clustering algorithms, score sentences using variables like sentence length, word frequency, and semantic similarity. The features these models use can be viewed as one component of a feature-based summarisation technique.

Topic-based features: Another technique is to identify the topics of the text and use each sentence’s relevance to these topics as features. Topic modelling approaches such as Latent Dirichlet Allocation (LDA) and Non-negative Matrix Factorisation (NMF) can identify topics and represent each document as a mixture of them (for LDA, a probability distribution over topics). Topic distributions at the sentence level could then be used as features.

Discourse cues: Features can capture the discourse structure of the text, for example cue words or phrases that signal importance or transitions (such as "in conclusion" or "significantly").
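A cue-word feature can be sketched as a count of positive cue phrases minus a count of negative ones. The two phrase lists below are hypothetical examples; in practice such lists are compiled for a particular domain or genre.

```python
import re

# Hypothetical cue-phrase lists for illustration only.
POSITIVE_CUES = ["in conclusion", "significantly", "importantly", "in summary"]
NEGATIVE_CUES = ["for example", "hardly", "perhaps"]


def count_phrases(text: str, phrases: list[str]) -> int:
    """Count whole-word occurrences of any of the phrases in lowercased text."""
    return sum(len(re.findall(r"\b" + re.escape(p) + r"\b", text)) for p in phrases)


def cue_score(sentence: str) -> int:
    """+1 for each positive cue phrase, -1 for each negative one."""
    text = sentence.lower()
    return count_phrases(text, POSITIVE_CUES) - count_phrases(text, NEGATIVE_CUES)


print(cue_score("In conclusion, the method significantly improves recall."))  # 2
print(cue_score("Perhaps, for example, it could help."))  # -2
```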

The effectiveness of these features depends on the particular text being summarised and the desired properties of the summary. Combining different features often improves summarisation performance. Features can be combined by integrating them into a single generative model, or by first filtering with a cheap feature and then refining the result with more costly ones.
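The simplest way to combine features, a weighted linear sum, is sketched below. This is a swapped-in illustration, not the generative-model or cheap-then-costly approaches just mentioned. Each feature is min-max normalised first so that features on different scales are comparable; the feature values and weights are hypothetical.

```python
def min_max(values: list[float]) -> list[float]:
    """Rescale values to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def combine_features(features: dict[str, list[float]], weights: dict[str, float]) -> list[float]:
    """Weighted sum of min-max-normalised features, one score per sentence."""
    normalised = {name: min_max(values) for name, values in features.items()}
    n_sentences = len(next(iter(features.values())))
    return [
        sum(weights[name] * normalised[name][i] for name in features)
        for i in range(n_sentences)
    ]


features = {"position": [1.0, 0.0, 1.0], "tfidf": [1.0, 3.0, 2.0]}  # hypothetical values
weights = {"position": 0.4, "tfidf": 0.6}  # hypothetical weights
scores = combine_features(features, weights)
ranking = sorted(range(len(scores)), key=scores.__getitem__, reverse=True)
print(ranking)  # [2, 1, 0]
```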

Luhn’s Algorithm

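Luhn’s algorithm is one of the best-known feature-based algorithms for text summarization, and it can be implemented using Python’s sumy package.

It scores each sentence using term frequency. Words that occur frequently in the document (excluding stop words) are treated as significant. Significant words separated by only a few insignificant words form a cluster, each cluster is scored as the square of the number of significant words it contains divided by the total number of words it spans, and the sentence takes the score of its best cluster.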
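A from-scratch sketch of Luhn-style scoring follows. The tiny stop-word list, the frequency cut-off `min_freq`, and the gap limit of four insignificant words are assumptions chosen for illustration (gap limits of four or five are commonly cited); sumy’s LuhnSummarizer is the packaged implementation.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list.
STOP_WORDS = {"the", "a", "an", "of", "and", "to", "in", "is", "are", "it",
              "on", "for", "with", "as", "by", "that", "this", "its", "was"}


def luhn_scores(sentences: list[str], min_freq: int = 2, max_gap: int = 4) -> list[float]:
    """Score each sentence by its best cluster of significant words."""
    tokenized = [re.findall(r"[a-z]+", s.lower()) for s in sentences]
    freq = Counter(w for tokens in tokenized for w in tokens if w not in STOP_WORDS)
    significant = {w for w, c in freq.items() if c >= min_freq}

    scores = []
    for tokens in tokenized:
        positions = [i for i, w in enumerate(tokens) if w in significant]
        best = 0.0
        if positions:
            start = prev = positions[0]
            count = 1  # significant words in the current cluster
            for pos in positions[1:]:
                if pos - prev - 1 <= max_gap:  # few enough insignificant words between
                    count += 1
                else:  # gap too large: close the cluster and start a new one
                    best = max(best, count ** 2 / (prev - start + 1))
                    start, count = pos, 1
                prev = pos
            best = max(best, count ** 2 / (prev - start + 1))
        scores.append(best)
    return scores


sents = [
    "Solar panels convert sunlight into electricity.",
    "Solar panels are cheap and solar electricity is clean.",
    "The weather was nice yesterday.",
]
scores = luhn_scores(sents)
print(max(range(len(scores)), key=scores.__getitem__))  # 1
```

In the second sentence, four significant words span seven words, giving a score of 16/7; the first sentence scores 9/6, and the third has no significant words.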

The steps for applying Luhn’s algorithm with the Python sumy package are:

First, install the sumy library with the command `pip install sumy` (in a Jupyter notebook, run `!pip install sumy`).

Next, import the required classes from the sumy library, such as HtmlParser, PlaintextParser, and Tokenizer, together with LuhnSummarizer, which implements the algorithm.

Luhn’s algorithm is therefore an NLP technique for feature-based text summarization in which the significance of each sentence is determined from the frequency and proximity of its significant words. It can be implemented using Python’s sumy module.

Feature-based Text Summarization Procedure

In general, feature-based text summarization would entail the following steps:

Feature Extraction: Identifying and extracting the chosen features of each sentence in the input text. This might involve techniques such as tokenisation, part-of-speech tagging, named entity recognition, and word frequency computation.

Scoring: Computing a score for every sentence from the extracted features, using predetermined criteria or weights. For example, sentences containing more named entities or more high-frequency topic words may score higher.

Ranking: The sentences are ranked according to their computed scores.

Selection: Selecting the top-ranked sentences to be included in the summary, generally based on a desired summary length or a threshold score.
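The four steps can be sketched end to end as follows. The naive regex sentence splitter, the two features (position and document-level word frequency), the stop-word list, and the weights are all hypothetical choices for illustration. Selected sentences are returned in their original order so the summary reads naturally.

```python
import re
from collections import Counter

STOP_WORDS = {"the", "a", "an", "of", "and", "to", "in", "is", "are", "it", "on", "for"}  # hypothetical


def split_sentences(text: str) -> list[str]:
    """Naive splitter on '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]


def summarize(text: str, k: int = 2, w_position: float = 0.3, w_frequency: float = 0.7) -> list[str]:
    sentences = split_sentences(text)
    tokens = [[w for w in re.findall(r"[a-z]+", s.lower()) if w not in STOP_WORDS]
              for s in sentences]
    doc_freq = Counter(w for toks in tokens for w in toks)
    max_freq = max(doc_freq.values(), default=1)

    scores = []
    for i, toks in enumerate(tokens):
        # 1. Feature extraction: position (first/last sentence) and mean word frequency.
        position = 1.0 if i in (0, len(sentences) - 1) else 0.0
        frequency = sum(doc_freq[w] for w in toks) / (len(toks) * max_freq) if toks else 0.0
        # 2. Scoring: weighted sum of the features.
        scores.append(w_position * position + w_frequency * frequency)

    # 3. Ranking: sentence indices from highest to lowest score.
    ranked = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)
    # 4. Selection: keep the top k, restored to document order.
    return [sentences[i] for i in sorted(ranked[:k])]


text = ("Solar power is growing fast. Many homes now install solar panels. "
        "My neighbour has a red car. Cheap solar panels make solar power popular.")
print(summarize(text, k=2))
# ['Solar power is growing fast.', 'Cheap solar panels make solar power popular.']
```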

Relation to Other Techniques

Feature-based text summarization is often mentioned alongside other techniques, such as topic-based summarization and TextRank (a graph-based ranking algorithm). Feature-based approaches are therefore one family of methods within the broader field of text summarization.

In essence, feature-based text summarization uses statistical and linguistic properties of the sentences in the original text to extract the most important information and produce a condensed version of it. The method’s effectiveness depends heavily on which features are selected and how they are weighted.