How Does Neural Machine Translation Work?

In natural language processing (NLP), Neural Machine Translation (NMT) uses artificial neural networks to learn the intricate relationships between words and phrases across languages, and then uses what it has learned to translate new text. Unlike traditional rule-based machine translation systems, which rely on explicit, hand-written linguistic rules, NMT models learn these mappings directly from large volumes of parallel text data (bilingual sentence pairs).

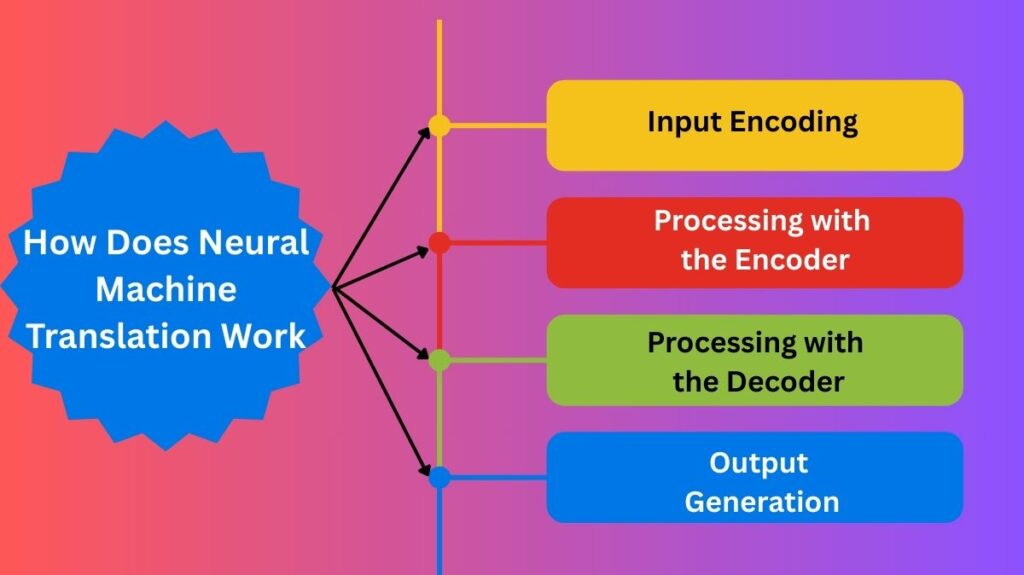

The following is a condensed explanation of the procedure, based on the Transformer architecture that dominates contemporary NMT:

Input Encoding

- First, the sentence in the source language (the text to be translated) is split into tokens: individual words or subword units (word fragments).

- Each token is then mapped to an embedding, a dense vector representation learned during training. Embeddings live in a continuous vector space in which tokens with similar meanings tend to lie close to one another, so they capture aspects of each token’s meaning.

- In the Transformer architecture, positional encodings are added to these embeddings. Because the Transformer processes all tokens simultaneously rather than one after another, it has no built-in sense of word order; the positional encodings supply each token’s position in the input sentence.
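The input-encoding steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production implementation: the tokens are assumed to be already split, and the tiny vocabulary and the random embedding table (standing in for one a real model learns during training) are made up for the example. The positional encodings follow the sinusoidal formula from the original Transformer paper; many later models learn positional embeddings instead.

```python
import math
import random

def sinusoidal_positional_encoding(seq_len, d_model):
    """Sinusoidal encodings from the original Transformer paper:
    PE[pos][2i] = sin(pos / 10000^(2i / d_model)), PE[pos][2i + 1] = cos(same angle)."""
    pe = []
    for pos in range(seq_len):
        row = []
        for j in range(d_model):
            i = j // 2  # each even/odd pair of dimensions shares a frequency
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

def encode_input(tokens, vocab, embedding_table):
    """Embedding lookup followed by element-wise addition of positional encodings."""
    d_model = len(embedding_table[0])
    # Unknown tokens fall back to the "<unk>" row (an assumption of this sketch).
    embeddings = [embedding_table[vocab.get(t, vocab["<unk>"])] for t in tokens]
    pe = sinusoidal_positional_encoding(len(tokens), d_model)
    return [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(embeddings, pe)]

# Toy setup: a hypothetical 4-entry vocabulary and a random table standing in
# for the embeddings a real model would learn during training.
random.seed(0)
vocab = {"<unk>": 0, "the": 1, "cat": 2, "sat": 3}
d_model = 8
embedding_table = [[random.gauss(0, 1) for _ in range(d_model)] for _ in vocab]

x = encode_input(["the", "cat", "sat", "the"], vocab, embedding_table)
print(len(x), len(x[0]))  # 4 8
# Both occurrences of "the" share an embedding but get different input vectors:
print(x[0] == x[3])  # False
```

Adding (rather than concatenating) the positional encoding keeps the vector size at `d_model`, which is the choice made in the original Transformer.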

Processing with the Encoder

- The encoder receives the sequence of input embeddings (with positional encodings added).

- The encoder is made up of multiple stacked layers. Every layer includes:

  - Multi-Head Self-Attention: The Transformer’s central component. It lets the model learn how the words within a single input sentence relate to one another. For each word, the self-attention mechanism scores every word in the sentence for its relevance to that word, then combines their representations according to those scores, capturing context and dependencies. “Multi-head” means this attention computation is carried out several times in parallel, with different learned projections, so that different heads can capture different kinds of relationships.

  - Position-wise Feed-Forward Networks: After the attention step, the same small feed-forward neural network is applied independently to each word’s representation. This adds non-linearity and lets the model transform the data further.

After processing the full input sentence, the encoder outputs a sequence of vectors, one per input token. Each still corresponds to a token of the original sentence but is now enriched with contextual information from the whole sentence.
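The self-attention computation described above can be sketched with plain Python lists. This minimal example implements scaled dot-product attention, softmax(QKᵀ / √d_k)·V, and runs it once per head with separate query/key/value projections before concatenating the heads. The random weight matrices are stand-ins for learned parameters, the helper names are hypothetical, and the feed-forward sub-layer is left out.

```python
import math
import random

def matmul(a, b):
    # (n x m) @ (m x p) -> (n x p), using plain nested lists
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(q, k, v):
    """softmax(Q K^T / sqrt(d_k)) V; returns the outputs and the attention weights."""
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    return matmul(weights, v), weights

def random_matrix(rows, cols):
    return [[random.gauss(0, 0.5) for _ in range(cols)] for _ in range(rows)]

def multi_head_self_attention(x, heads, w_o):
    """x: one vector per token; heads: list of (W_q, W_k, W_v); w_o: output projection."""
    head_outputs = []
    for w_q, w_k, w_v in heads:
        # Self-attention: queries, keys and values all come from the same sentence.
        out, _ = scaled_dot_product_attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))
        head_outputs.append(out)
    # Concatenate the heads' outputs for each token, then project back to d_model.
    concat = [sum((h[i] for h in head_outputs), []) for i in range(len(x))]
    return matmul(concat, w_o)

random.seed(0)
d_model, n_heads = 4, 2
head_dim = d_model // n_heads
x = random_matrix(3, d_model)  # 3 tokens, e.g. embeddings plus positional encodings
heads = [(random_matrix(d_model, head_dim), random_matrix(d_model, head_dim),
          random_matrix(d_model, head_dim)) for _ in range(n_heads)]
w_o = random_matrix(n_heads * head_dim, d_model)
y = multi_head_self_attention(x, heads, w_o)
print(len(y), len(y[0]))  # 3 4
```

Each head works in a smaller space (`d_model // n_heads` dimensions here), so running several heads costs roughly the same as one full-width head while letting them attend to different relationships.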

Processing with the Decoder

- The decoder produces the translated sentence in the target language one word (token) at a time. Like the encoder, it consists of several stacked layers. Every layer includes:

  - Masked Multi-Head Self-Attention: This is similar to the encoder’s self-attention, with one important difference: a mask prevents each position from attending to positions that come after it. During training, this stops the decoder from “peeking” at the target words it is supposed to predict, which guarantees that each word’s prediction depends only on the words that came before it.

  - Multi-Head Attention over Encoder Output: Often called cross-attention, this layer works much like the attention mechanism in earlier RNN-based encoder-decoder models. It lets the decoder focus on the relevant parts of the encoded source sentence (the encoder’s output) when generating the next word of the target sentence. It computes attention weights from the decoder’s current state and the encoder’s output, and uses them to build a context vector that guides the prediction of the next word.

  - Position-wise Feed-Forward Networks: As in the encoder, a feed-forward network is applied to every position independently.

- Generation starts with a special “start-of-sentence” token. At each step, the decoder predicts the next word of the target sentence from the words it has generated so far (initially just the start token), combined with the context vector obtained by attending over the encoder output.
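The two attention sub-layers that set the decoder apart can be sketched as follows. For brevity, this minimal example omits the learned query/key/value projections, the multiple heads, and the feed-forward sub-layer, and it uses small made-up vectors in place of real decoder states and encoder outputs; `decoder_attention_step` is a hypothetical helper, not a library function.

```python
import math

def softmax(scores):
    m = max(scores)  # assumes at least one unmasked (finite) score per row
    exps = [math.exp(s - m) for s in scores]  # exp(-inf) == 0.0, so masked slots get zero weight
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values, mask=None):
    """Scaled dot-product attention; mask[i][j] == False blocks query i from key j."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for i, q in enumerate(queries):
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        if mask is not None:
            scores = [s if mask[i][j] else float("-inf") for j, s in enumerate(scores)]
        w = softmax(scores)
        weights.append(w)
        # Weighted sum of the value vectors.
        outputs.append([sum(wj * v[d] for wj, v in zip(w, values)) for d in range(len(values[0]))])
    return outputs, weights

def causal_mask(n):
    # Position i may attend only to positions 0..i: no "peeking" at later target words.
    return [[j <= i for j in range(n)] for i in range(n)]

def decoder_attention_step(target_states, encoder_outputs):
    """Hypothetical helper: masked self-attention, then attention over the encoder output."""
    # 1) Masked self-attention over the target-side positions.
    self_out, self_w = attention(target_states, target_states, target_states,
                                 causal_mask(len(target_states)))
    # 2) Cross-attention: queries come from the decoder, keys/values from the encoder.
    cross_out, cross_w = attention(self_out, encoder_outputs, encoder_outputs)
    return cross_out, self_w, cross_w

# Made-up vectors: 3 target positions, 4 source tokens, dimension 2.
target_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
encoder_outputs = [[0.5, 0.1], [0.2, 0.9], [1.0, 0.0], [0.3, 0.3]]
out, self_w, cross_w = decoder_attention_step(target_states, encoder_outputs)
print(self_w[0])        # [1.0, 0.0, 0.0]: the first position can only see itself
print(len(cross_w[0]))  # 4: every target position can attend to all source tokens
```

Setting masked scores to negative infinity before the softmax is a common way to implement the mask, since those positions then receive exactly zero weight.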

Output Generation

- The final output of the decoder stack is passed through a linear layer followed by a softmax layer.

- The linear layer turns the decoder’s output into a vector of scores (logits), one for each word in the target-language vocabulary.

- The softmax layer converts these scores into a probability distribution over the target vocabulary. In the simplest (greedy) decoding strategy, the next translated word is the one with the highest probability; many systems instead use beam search, which keeps several high-probability candidate translations in play at each step.

- This process repeats until the decoder produces a special “end-of-sentence” token, indicating that the translation is finished.
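The output stage and its stopping rule can be illustrated with a toy greedy decoding loop. Everything model-specific here is an assumption made for the example: `toy_decoder_step` is a hypothetical stand-in for the decoder stack that simply emits a scripted hidden state per position, the linear layer’s weights are an identity matrix, and the four-entry French vocabulary is made up. A real decoder would run its attention layers over the whole generated prefix at every step.

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def linear(hidden, weights, bias):
    # One row of weights per vocabulary word -> one score (logit) per word.
    return [sum(h * w for h, w in zip(hidden, row)) + b for row, b in zip(weights, bias)]

def greedy_decode(decoder_step, weights, bias, vocab, bos="<s>", eos="</s>", max_len=20):
    output = [bos]  # generation starts from the start-of-sentence token
    while len(output) < max_len:
        hidden = decoder_step(output)  # decoder's final hidden state for the latest position
        probs = softmax(linear(hidden, weights, bias))
        next_id = max(range(len(probs)), key=lambda i: probs[i])  # most probable word
        output.append(vocab[next_id])
        if vocab[next_id] == eos:  # stop at end-of-sentence
            return output[1:-1]
    return output[1:]  # hit max_len without producing end-of-sentence

vocab = ["</s>", "le", "chat", "dort"]
weights = [[1.0 if i == j else 0.0 for j in range(len(vocab))] for i in range(len(vocab))]
bias = [0.0] * len(vocab)

def toy_decoder_step(prefix):
    # Hypothetical stand-in for the decoder stack: a scripted hidden state per position.
    script = [1, 2, 3, 0]  # "le", "chat", "dort", "</s>"
    idx = script[min(len(prefix) - 1, len(script) - 1)]
    return [5.0 if j == idx else 0.0 for j in range(len(vocab))]

print(greedy_decode(toy_decoder_step, weights, bias, vocab))  # ['le', 'chat', 'dort']
```

The `max_len` cap guards against a model that never emits the end-of-sentence token. Replacing the single `max` with a search that keeps several partial translations would turn this loop into beam search.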

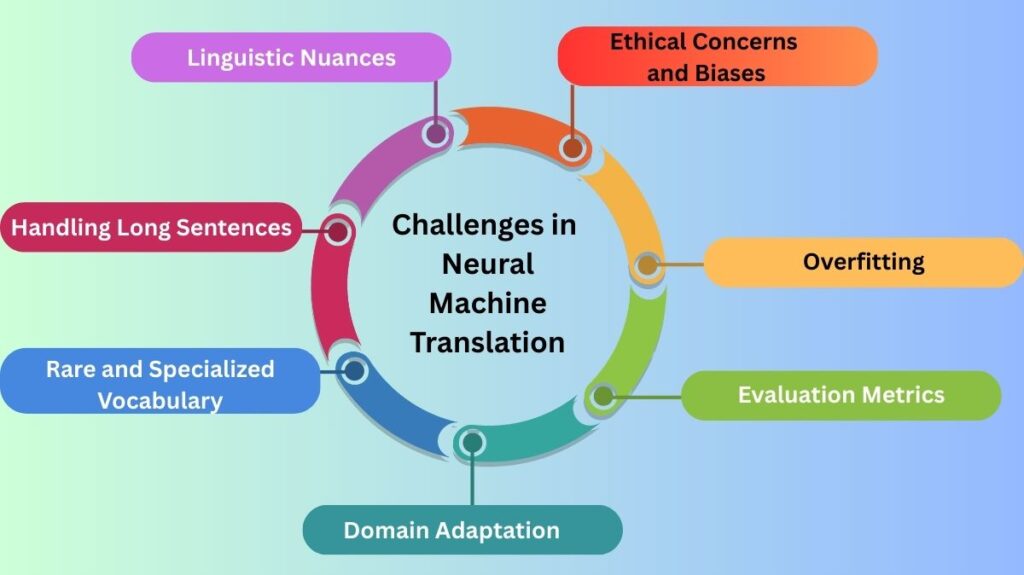

Challenges in Neural Machine Translation

Neural machine translation faces a number of challenges, arising both from the intrinsic complexity of human language and from the limitations of current AI models. Some of the major obstacles are summarized below:

Linguistic Nuances

- Ambiguity: Words and phrases frequently have multiple meanings (polysemy), and NMT systems can fail to pick the correct interpretation from context. For example, the English word “bank” can refer to a financial institution or to the side of a river.

- Idioms and Cultural Nuances: Many idioms and culture-specific terms have no direct equivalent in other languages, and NMT models often fail to capture the context and cultural meaning they carry.

- Syntax & Grammar: Although NMT models have improved, complex sentence structures make it hard to maintain grammatical accuracy and natural-sounding phrasing in the target language.

- Low-Resource Languages: NMT models are trained primarily on large parallel corpora (bilingual data). For languages where such data is scarce, this presents a major challenge.

Handling Long Sentences

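Basic encoder-decoder NMT models (those without attention) often perform worse as input sentences get longer. This is because the entire source sentence must be compressed into a single fixed-length vector, and the longer the sentence, the harder it is to fit all of its information into that vector. Attention mechanisms were introduced largely to ease this bottleneck.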

Rare and Specialized Vocabulary

When rare terms or highly specialized vocabulary from technical, scientific, or industry-specific domains are poorly represented in the training data, NMT systems may struggle to translate them.

Domain Adaptation

Models trained on broad, general-domain data may perform worse on specialized domains such as legal, medical, or technical texts, because vocabulary and style differ. Adapting NMT systems to such domains remains difficult.

Evaluation Metrics

Although metrics such as BLEU are widely used to assess machine translation (MT) quality, they do not fully capture semantic accuracy and fluency. Developing evaluation measures that align more closely with human judgment is an ongoing problem.
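To make this limitation concrete, here is a minimal sentence-level BLEU sketch with a single reference: the geometric mean of clipped (modified) n-gram precisions for n = 1 to 4, multiplied by a brevity penalty that punishes translations shorter than the reference. It is unsmoothed and simplified for illustration; for real evaluation, standardized tools such as sacreBLEU, which compute corpus-level scores and support multiple references, are the usual choice.

```python
import math
from collections import Counter

def ngram_counts(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def modified_precision(candidate, reference, n):
    cand, ref = ngram_counts(candidate, n), ngram_counts(reference, n)
    # Clip each candidate n-gram count by how often it appears in the reference.
    overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
    total = sum(cand.values())
    return overlap / total if total else 0.0

def sentence_bleu(candidate, reference, max_n=4):
    precisions = [modified_precision(candidate, reference, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0  # unsmoothed: one zero precision makes the geometric mean zero
    geo_mean = math.exp(sum(math.log(p) for p in precisions) / max_n)
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * geo_mean

reference = "the cat is sleeping on the mat".split()
print(sentence_bleu(reference, reference))  # 1.0
paraphrase = "the cat sleeps on the mat".split()
print(modified_precision(paraphrase, reference, 1))  # 0.8333333333333334
print(sentence_bleu(paraphrase, reference))  # 0.0, despite preserving the meaning
```

The paraphrase conveys the same meaning, yet it shares no 4-gram with the reference, so unsmoothed BLEU scores it 0. This is exactly the kind of gap between surface overlap and human judgment described above.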

Overfitting

NMT models, especially those with many parameters, can overfit the training set: they perform well on the training data but fail to generalize to new, unseen data.

Ethical Concerns and Biases

Translations generated by NMT systems may reflect and amplify biases present in the training data, such as those related to gender or culture; for example, a gender-neutral pronoun may be rendered as “he” or “she” according to occupational stereotypes. Addressing these ethical issues and ensuring fairness is one of the most important challenges.

Applications of Neural Machine Translation in NLP

Because it can automatically translate text from one language to another with greater fluency and accuracy than earlier approaches, neural machine translation (NMT) has a broad range of significant applications across many fields. Some important uses are:

Global Communication and Localization

- Localization of Websites and Software: NMT helps companies to automatically translate their user manuals, software interfaces, and websites into a variety of languages, reaching a worldwide audience while saving time and money on localization.

- E-commerce: Enabling companies to communicate with customers around the world in their native languages by translating product descriptions, customer reviews, and promotional materials.

- Customer Support: Reducing language barriers and improving customer satisfaction by offering real-time translation for customer service interactions, including chatbots and live-agent support.

- Social Media and Content Sharing: Allowing people to interact with and understand content in multiple languages on social media and content-sharing platforms.

Information Access and Knowledge Sharing

- News and Media Translation: Automatically translating news stories and media content to make information available to a larger global audience.

- Academic Research: Helping researchers understand and use research papers and publications written in other languages.

- Digital Libraries and Archives: Providing cross-lingual access to literary masterpieces and historical documents.

Business and Professional Applications

- Business Document Translation: Translating legal contracts, financial records, technical manuals, and other business documents to streamline global business operations.

- International Collaboration: Helping multilingual teams and global initiatives communicate and understand one another.

- Travel and Tourism: Translating travel guides, hotel information, and other materials for foreign visitors.

Personal and Educational Use

- Language Learning: Helping language learners understand foreign-language texts and improve their skills.

- Personal Communication: Enabling friends, family, and coworkers who speak different languages to communicate by email and chat.

- Access to Foreign-Language Content: Allowing people to read foreign-language books, articles, and other resources.

Integration with Other NLP Applications

- Multilingual Question Answering: Allowing users to ask questions in one language and get answers drawn from documents in several languages.

- Cross-Lingual Information Retrieval: Enabling users to search for information in their native language and access documents published in other languages.

- Multilingual Text Summarization: Condensing documents written in several languages into a summary in a single language.
