Lexical Analysis Definition

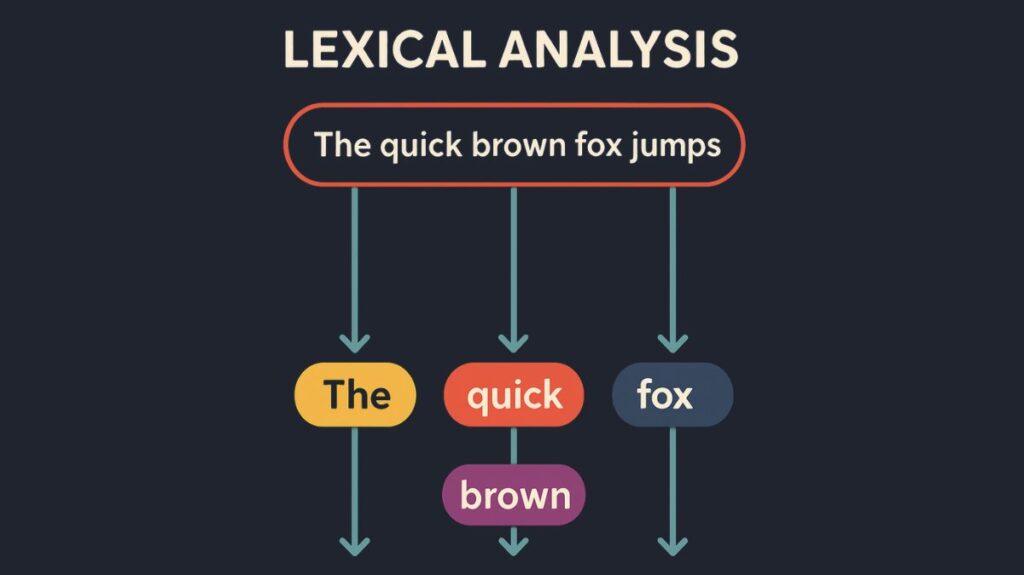

Lexical analysis is the process of analyzing a text at the word level; it is also known as computational morphology. Its main objective is to examine the internal structure of words, recognizing that they are more than atomic units. Lexical analysis breaks words down to reveal information about their constituent parts that is useful for later Natural Language Processing (NLP) tasks.

There are two ways to think of a word: as a string of characters that appears in a text (such as “delivers”), or as a more abstract entity that serves as a cover term for a group of strings. This abstract entity is called a lexeme or lemma. For example, the verb DELIVER names the set {delivers, deliver, delivering, delivered}.

Lemmatization is a crucial task in lexical analysis: it links a word’s morphological forms (also known as morphological variants) back to their base or dictionary form, the lemma. This procedure establishes that surface forms such as “sang,” “sung,” and “sings” are all forms of the verb “sing” because they share a lemma. Likewise, “am,” “are,” and “is” share the lemma “be.” For this to be useful, each lemma must carry the relevant syntactic and semantic information. For instance, “delivers” can be represented as the lemma DELIVER together with the morphosyntactic features {3rd, Sg, Present}.
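The mapping from a surface form to a lemma plus features can be sketched as a simple lookup. This is only an illustration: the `Analysis` type, the `FULL_FORMS` table, and the feature labels other than {3rd, Sg, Present} are assumptions made for the example, not a standard tag set.

```python
from typing import NamedTuple, Optional


class Analysis(NamedTuple):
    lemma: str
    features: frozenset


# Hypothetical toy table: surface form -> lemma plus morphosyntactic features.
FULL_FORMS = {
    "delivers": Analysis("DELIVER", frozenset({"3rd", "Sg", "Present"})),
    "sings": Analysis("SING", frozenset({"3rd", "Sg", "Present"})),
    "sang": Analysis("SING", frozenset({"Past"})),
    "sung": Analysis("SING", frozenset({"PastParticiple"})),
    "am": Analysis("BE", frozenset({"1st", "Sg", "Present"})),
    "is": Analysis("BE", frozenset({"3rd", "Sg", "Present"})),
    # Person and number are left underspecified for "are" in this toy table.
    "are": Analysis("BE", frozenset({"Present"})),
}


def lemmatize(word: str) -> Optional[Analysis]:
    """Return the stored analysis for a surface form, or None if it is unknown."""
    return FULL_FORMS.get(word.lower())


for form in ("sang", "sung", "sings"):
    print(form, "->", lemmatize(form).lemma)
```

All three forms resolve to SING, while a form that is not in the table simply returns `None`, which is the coverage problem that motivates morphological processing.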

What Makes Lexical Analysis Important?

It would be extremely inefficient to process morphologically complex words by simply listing every possible variant in a large inventory (a full-form lexicon). This is especially true for languages with rich morphology, such as Finnish or the Bantu languages, where a single verb can have an enormous number of inflected forms (millions, by some counts). Storage aside, such an inventory can never be complete, since any fixed list will inevitably be missing some words. Morphological processing, carried out through lexical analysis, helps handle these unfamiliar words by breaking them down into their component parts, which is essential for processing languages with intricate morphology. Ambiguities are also inherent in natural languages, and writing systems can make them more difficult to resolve.
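A rough sketch of why full-form listing scales badly: if a verb has several affix slots, the number of full forms is the product of the slot sizes, while a rule-based description only stores the stem and the affixes. The slot inventory below is entirely hypothetical and does not describe any real language.

```python
from itertools import product

# Hypothetical affix slots for one verb stem; "" means the slot is left empty.
SLOTS = [
    ["", "-NEG"],
    ["-PRES", "-PAST", "-FUT"],
    ["-1SG", "-2SG", "-3SG", "-1PL", "-2PL", "-3PL"],
]


def full_forms(stem, slots):
    """Enumerate every stem + affix combination, one choice per slot, in slot order."""
    return [stem + "".join(choice) for choice in product(*slots)]


forms = full_forms("STEM", SLOTS)
stored_items = 1 + sum(len(slot) for slot in SLOTS)  # one stem plus all slot entries

print(len(forms))    # 2 * 3 * 6 = 36 full forms for a single stem
print(stored_items)  # 1 + 2 + 3 + 6 = 12 items in the rule-based description
```

Each additional slot multiplies the number of full forms but only adds to the number of stored items, which is why the gap widens quickly as morphology gets richer.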


Fundamental Ideas in Lexical Analysis

The foundation of lexical analysis is the idea that words are built from morphemes, the smallest meaningful elements of language. The key concepts are:

- Stems: The main part of a word that is frequently thought to encode its grammatical category and essential meaning.

- Affixes: Morphemes that attach to a stem and usually change the word’s category or encode grammatical features. Examples are the prefix un- and the suffix -ly in “uncommonly,” and the suffix -ed in “composed.”

- Morphosyntactic features: Properties such as number, person, tense, case, and gender, which are typically expressed by affixes. Lexical analysis associates these features with word forms, often as feature-value pairs (e.g., {3rd, Sg, Present}).

- Morphonology: The study of how morphology and phonology interact, covering the way stems and affixes change shape as they combine. For example, “sing” becomes “sang” in the past tense through an internal vowel change. Spelling conventions in written text can also be considered part of this interaction.

- Morphotactics: The principles that determine how stems and affixes combine and in what order they can appear within a word.
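The interplay of stems, affixes, and morphotactics can be sketched with a toy segmenter that only accepts the order prefix, stem, suffix. The inventories `PREFIXES`, `STEMS`, and `SUFFIXES` are assumptions chosen for the example, and no spelling rules are modeled.

```python
# Hypothetical toy inventories, not a real lexicon.
PREFIXES = {"un"}
STEMS = {"common", "compose", "deliver"}
SUFFIXES = {"ly", "ed", "s", "ing"}


def segment(word):
    """Return every (prefix, stem, suffix) split allowed by the order
    optional prefix + stem + optional suffix."""
    results = []
    for i in range(len(word) + 1):
        prefix = word[:i]
        if prefix and prefix not in PREFIXES:
            continue
        for j in range(i + 1, len(word) + 1):
            stem, suffix = word[i:j], word[j:]
            if stem in STEMS and (suffix == "" or suffix in SUFFIXES):
                results.append((prefix, stem, suffix))
    return results


print(segment("uncommonly"))  # [('un', 'common', 'ly')]
print(segment("lycommon"))    # [] : a suffix cannot appear before the stem
print(segment("composed"))    # [] : "compose" + "ed" needs an e-deletion spelling rule
```

The last call fails on purpose: pure concatenation cannot recover “compose” + “-ed” from “composed,” which is the kind of case where morphonology and spelling conventions come into play.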

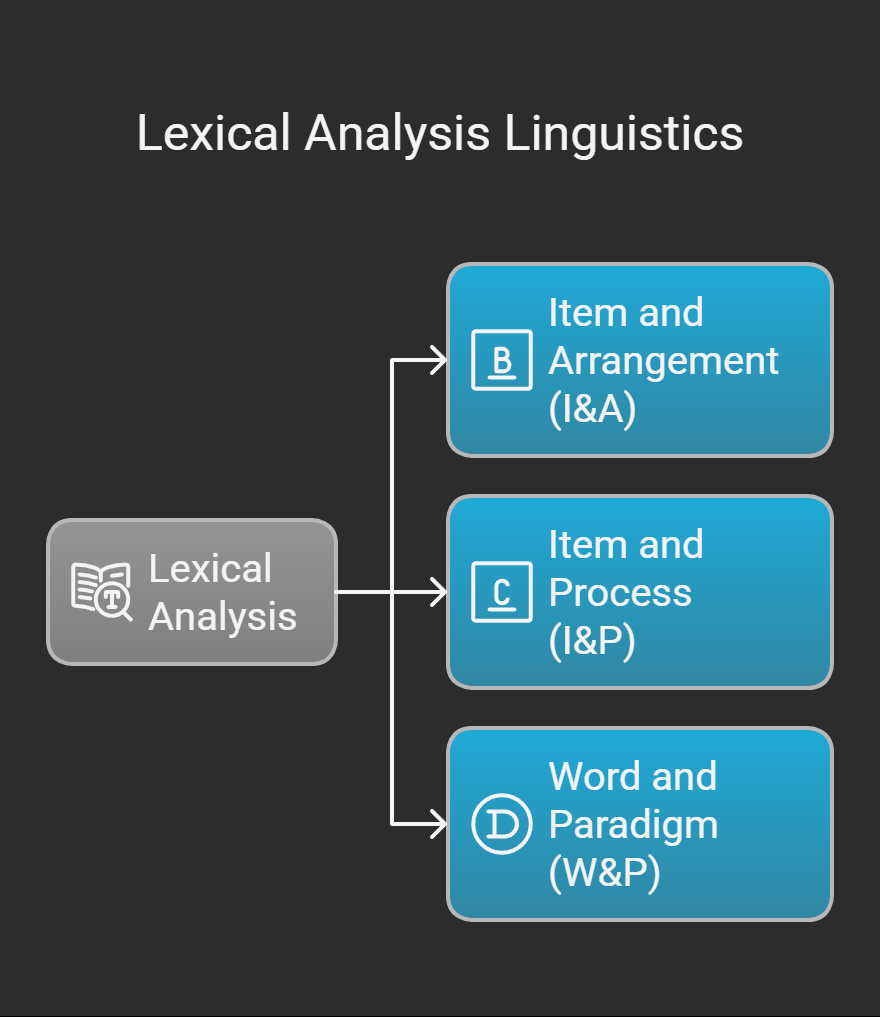

Linguistic Approaches to Lexical Analysis

Various linguistic approaches to word structure have an impact on computational models for lexical analysis:

Item and Arrangement (I&A)

According to this perspective, complex words are created by combining a stem with affixes, and the meaning of the whole is computed from the contributions of each part. This approach works well when the morphological components are neatly separable and contiguous, as in Turkish, where the affixes for negation, aspect, tense, and subject agreement follow the stem in a fixed order. Finite state morphology frequently adopts this perspective.

Item and Process (I&P)

This viewpoint explains differences in stem or affix forms by emphasizing the processes (such as phonological shifts) that take place when morphemes combine. The focus is on morphonology.

Word and Paradigm (W&P)

According to this approach, the lemma is linked to a “paradigm,” which is essentially a table that associates every morphological variant of the lemma with a particular set of morphosyntactic features. The meaning is attached to the paradigm’s cell and is not necessarily derived by combining the meanings of distinct morphemes. This offers an alternative account of morphology that is more intricate or “difficult” to fit into the I&A model.
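A paradigm in this sense can be sketched as a table keyed by feature sets. The `PARADIGMS` structure and the feature labels are assumptions for illustration; the point is that “sang” is stored as the filler of a cell rather than derived from “sing” plus an affix.

```python
# Hypothetical paradigm table: lemma -> {feature set (cell) -> word form}.
PARADIGMS = {
    "SING": {
        frozenset({"Base"}): "sing",
        frozenset({"3rd", "Sg", "Present"}): "sings",
        frozenset({"Past"}): "sang",
        frozenset({"PastParticiple"}): "sung",
        frozenset({"PresentParticiple"}): "singing",
    },
}


def generate(lemma, features):
    """Look up the form that fills the paradigm cell for these features."""
    return PARADIGMS[lemma][frozenset(features)]


def analyze(form):
    """Return every (lemma, features) cell that the form fills."""
    return [
        (lemma, set(cell))
        for lemma, table in PARADIGMS.items()
        for cell, filler in table.items()
        if filler == form
    ]


print(generate("SING", {"Past"}))  # sang
print(analyze("sung"))             # [('SING', {'PastParticiple'})]
```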

Methods of Computation

One popular and computationally efficient method for lexical analysis is the use of finite state transducers (FSTs). FSTs are well suited to mapping the surface forms of words to higher-level representations, such as the lemma annotated with morphosyntactic features. They can also encode the morphotactics, ensuring that morphemes appear in a valid order.

One of the main benefits of FSTs is that they are bidirectional: the same FST can usually be used both for analysis (parsing), which breaks a word down into its constituent parts and features, and for generation, which creates a word form from a lemma and features. This flexibility is an important design principle for lexical analysis systems. FSTs can also handle the complexity of spelling rules.
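The bidirectionality described here can be illustrated with a very small hand-rolled transducer. This is a sketch, not how production toolkits represent FSTs: the arc format, the tags `+V`, `+Base`, `+3Sg`, `+Past`, and the functions `build_fst` and `transduce` are all assumptions, and only stems that need no spelling rules are used.

```python
EPS = ""  # empty label: the arc consumes or emits nothing on that tape


def build_fst(stems):
    """Build arcs (source, upper, lower, target). State 0 is initial, "end" is final.
    The upper tape holds the lemma and tags, the lower tape holds the surface form."""
    arcs = []
    for stem in stems:
        state = 0
        for k, ch in enumerate(stem):
            nxt = (stem, k + 1)
            arcs.append((state, ch, ch, nxt))  # stem characters are copied unchanged
            state = nxt
        arcs.append((state, "+V", EPS, "verb"))
    arcs += [
        ("verb", "+Base", EPS, "end"),
        ("verb", "+3Sg", "s", "end"),
        ("verb", "+Past", "ed", "end"),
    ]
    return arcs


def transduce(arcs, text, read_upper):
    """Follow every path whose input labels spell out `text`; collect the outputs.
    read_upper=True reads the upper tape (generation); False reads the lower (analysis)."""
    results = []
    stack = [(0, 0, "")]  # (state, position in text, output so far)
    while stack:
        state, pos, out = stack.pop()
        if state == "end" and pos == len(text):
            results.append(out)
            continue
        for src, upper, lower, dst in arcs:
            if src != state:
                continue
            inp, outp = (upper, lower) if read_upper else (lower, upper)
            if text.startswith(inp, pos):
                stack.append((dst, pos + len(inp), out + outp))
    return results


FST = build_fst(["deliver", "walk"])
print(transduce(FST, "delivers", read_upper=False))       # ['deliver+V+3Sg']
print(transduce(FST, "deliver+V+Past", read_upper=True))  # ['delivered']
```

The same arc list serves both directions; only the choice of which tape counts as input changes.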

Lexicons, or machine-readable dictionaries, are essential to lexical analysis because they contain data on words and morphemes. For analysis to be accurate, these lexicons must include irregular forms, regular stems, affixes (and the features they express), and ideally the syntactic categories of each.

Irregular forms can be listed directly, together with their lemma and feature information. Stem lexicons prevent incorrect analyses (e.g., analyzing “corpus” as “corpu” + “-s”) and help with words whose base forms are no longer productive (e.g., avoiding “un-” + “kempt” if “kempt” is not a legitimate stem). A two-step procedure is sometimes used: the first stage concentrates on morpheme forms, and the second stage (typically associated with syntactic analysis) excludes analyses in which the affix’s properties are incompatible with the stem.
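A minimal sketch of such a lexicon-driven analyzer follows. The tables `IRREGULAR`, `STEMS`, and `SUFFIXES`, the category labels, and the two-stage layout are assumptions made for illustration, not a description of any particular system.

```python
# Hypothetical lexicon entries.
IRREGULAR = {
    "sang": ("SING", {"Past"}),
    "corpora": ("CORPUS", {"Pl"}),
}
STEMS = {"corpus": "N", "sing": "V", "deliver": "V"}  # stem -> syntactic category
SUFFIXES = {
    "s": {"N": {"Pl"}, "V": {"3rd", "Sg", "Present"}},  # features depend on category
    "ed": {"V": {"Past"}},                              # only attaches to verbs
}


def analyze(word):
    """Return a list of (LEMMA, features) analyses for a surface form."""
    if word in IRREGULAR:
        return [IRREGULAR[word]]
    analyses = []
    if word in STEMS:
        analyses.append((word.upper(), set()))  # bare stem, no affix features
    # Stage 1: propose splits based on form alone; the stem must be in the lexicon.
    candidates = [
        (word[: -len(suffix)], suffix)
        for suffix in SUFFIXES
        if word.endswith(suffix) and word[: -len(suffix)] in STEMS
    ]
    # Stage 2: keep a split only if the suffix is compatible with the stem's category.
    for stem, suffix in candidates:
        features = SUFFIXES[suffix].get(STEMS[stem])
        if features is not None:
            analyses.append((stem.upper(), features))
    return analyses


print(analyze("corpus"))    # [('CORPUS', set())]
print(analyze("corpused"))  # []
```

“corpus” is never split into “corpu” + “-s” because “corpu” is not a listed stem, and “corpused” passes stage 1 but is rejected in stage 2 because “-ed” is only defined for verbs.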

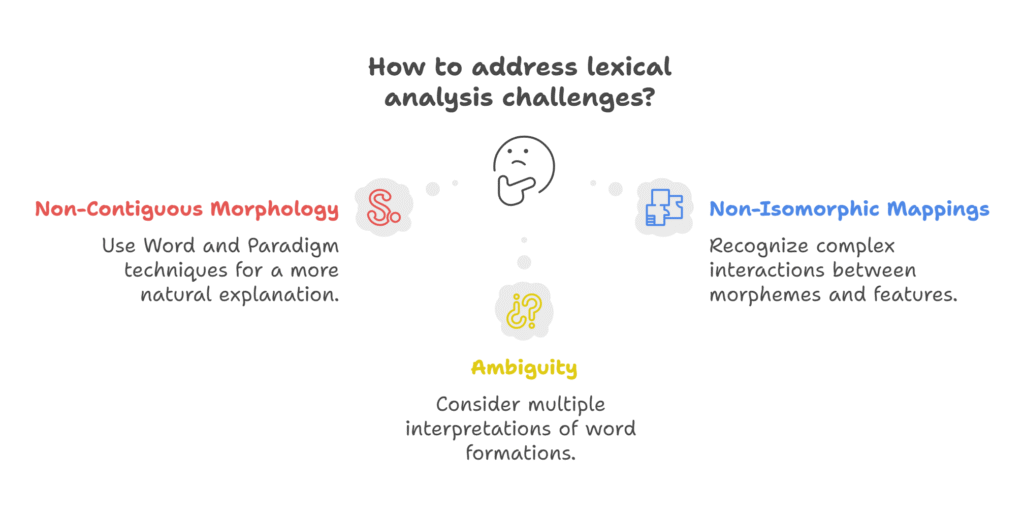

Challenges in Lexical Analysis

Although the stem-plus-affix model works well for some languages, it is seen as “idealistic” for many others, including English, French, German, and Russian. This is because morphology is not always a straightforward, linear combination of discrete morphemes that each have a single function. The following phenomena make morphology “difficult”:

- Non-contiguous morphology: A root made up of consonants is interleaved with vowels or patterns to generate distinct words, so the exponent of a morpheme is not a single continuous sequence of symbols. This is found in languages like Arabic. FSTs can handle it by treating it as a linear translation problem, but Word and Paradigm approaches are said to offer a more linguistically natural explanation.

- Non-isomorphic mappings: A single morphosyntactic feature is marked by several morphemes or by intricate interactions between them, or a single affix does not map neatly onto a single morphosyntactic feature.

- Ambiguity: A word form may be morphologically ambiguous, with more than one possible analysis.
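Non-contiguous morphology can be sketched with the classic textbook example of the Arabic root k-t-b. The `interdigitate` function and the `C`-slot pattern notation are assumptions for illustration, and the transliterations are simplified.

```python
def interdigitate(root, pattern):
    """Fill each 'C' slot in the pattern with the next root consonant, in order."""
    if pattern.count("C") != len(root):
        raise ValueError("pattern must have exactly one C slot per root consonant")
    consonants = iter(root)
    return "".join(next(consonants) if ch == "C" else ch for ch in pattern)


ROOT = "ktb"  # root associated with writing
for pattern in ("CaCaCa", "CiCaaC", "CaaCiC"):
    print(pattern, "->", interdigitate(ROOT, pattern))
# CaCaCa -> kataba   ("he wrote")
# CiCaaC -> kitaab   ("book")
# CaaCiC -> kaatib   ("writer")
```

The root consonants never appear as one continuous substring of the output, which is why a purely stem-plus-affix segmentation does not fit this kind of word formation.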

Connection to Other NLP Tasks

Lexical analysis usually follows initial text preprocessing steps such as tokenization and sentence segmentation. It provides the detailed morphosyntactic information needed to understand how words combine to form phrases and sentences, making it an essential precursor to syntactic analysis (parsing). Treebanks used for syntactic analysis frequently contain this morphosyntactic annotation.

Lexical analysis and Part-of-Speech (POS) tagging are closely related. POS tagging is a simplified type of morphological analysis in which the surface form of a word is assigned a tag that indicates its category. For morphologically complex languages, POS tagging may require a more thorough morpheme-based analysis.

Semantic analysis, which focuses on the meaning of words and sentences, depends heavily on the output of lexical analysis, especially the lemma and the information that accompanies it. The lemma dictionary serves as the link between lexical analysis and lexical semantics, which is concerned with the meaning of individual words.

In applications such as Machine Translation (MT), lemmatization provides access to lexical semantics through lemma dictionaries, and the morphosyntactic representations are used in the linguistic analysis of the source language.

Information retrieval (IR) systems sometimes use lexical analysis, or a simpler version called stemming, to group related word forms together. Stemming reduces words to a canonical form that might not be a linguistic stem; it can sometimes hurt precision and does not always improve IR performance. In general, complete morphological analysis is considered more linguistically sound than basic stemming because it provides compositional information.
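The limits of stemming can be illustrated with a deliberately crude suffix stripper. `naive_stem` is a toy written for this example, not the Porter stemmer or any other published algorithm, and its suffix list and minimum length are arbitrary assumptions.

```python
def naive_stem(word):
    """Strip the first matching suffix, keeping at least three characters."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


for word in ("delivers", "delivered", "delivering", "corpus", "news", "sang"):
    print(word, "->", naive_stem(word))
# delivers -> deliver
# delivered -> deliver
# delivering -> deliver
# corpus -> corpu     (not a linguistic stem)
# news -> new         (merged with an unrelated word, which can hurt precision)
# sang -> sang        (not grouped with "sing")
```

The regular forms of “deliver” are grouped as intended, but the other three cases show the kinds of errors that a lexicon-backed morphological analysis is designed to avoid.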

Finally, lexical acquisition is crucial because language is naturally productive: new words and usages are always emerging. It involves mining large text corpora to learn about the properties of words and to extend existing machine-readable dictionaries.