NLP Knowledge Base techniques use external knowledge bases and ontologies to improve language processing. Structured data such as facts, relationships, and domain-specific knowledge can improve the accuracy and capability of Natural Language Processing (NLP) systems.

However, purely symbolic systems lack the probabilistic reasoning that is often necessary to achieve adequate performance.

Historically, NLP shifted substantially toward data-intensive approaches that combine machine learning with an evaluation-led methodology, largely because of the difficulties rule-based systems faced in domains such as automatic speech recognition. The shift took place because data-driven systems proved much more accurate, efficient, and robust when handling real-world data and its variability.

Nonetheless, linguistic insights, which are often associated with knowledge-based approaches, remain important. Linguistic structure appears to be especially useful when training data is scarce. Many contemporary NLP systems therefore use hybrid strategies that combine statistical/machine learning techniques with rule-based ones. One way to integrate linguistic insights into data-oriented methods is to turn them into features for a machine learning model. For instance, a hybrid information extraction system may use a rule-based component for easily identifiable entities and a machine learning component for more complicated ones. Commercial Named Entity Recognition (NER) systems frequently combine supervised machine learning, lists, and rules in a practical way. Future directions include combining dictionary-based learning, in which the dictionary serves as a knowledge source, with corpus-based learning.
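The hybrid idea described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any particular commercial NER system works: a hand-written regular expression tags ISO-style dates, a small gazetteer (list) tags known names, and a tiny perceptron trained on linguistic features (capitalization, a three-letter suffix, gazetteer membership) handles everything else. The gazetteer, label set, features, and training data are all hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical gazetteer (a "list" knowledge source), for illustration only.
GAZETTEER = {"london": "LOC", "paris": "LOC", "google": "ORG"}
# Hand-written rule for an easily identifiable entity type: ISO-style dates.
DATE_RE = re.compile(r"^\d{4}-\d{2}-\d{2}$")


def features(token):
    """Encode simple linguistic insights as binary features for the learner."""
    return {
        "bias",
        f"suffix3={token[-3:].lower()}",
        f"is_title={token.istitle()}",
        f"in_gazetteer={token.lower() in GAZETTEER}",
    }


class Perceptron:
    """Minimal multiclass perceptron over binary features (no weight averaging)."""

    def __init__(self, labels):
        self.labels = labels
        self.w = defaultdict(float)  # (feature, label) -> weight

    def predict(self, feats):
        # Ties go to the first label in self.labels.
        return max(self.labels, key=lambda y: sum(self.w[(f, y)] for f in feats))

    def train(self, data, epochs=5):
        for _ in range(epochs):
            for token, gold in data:
                feats = features(token)
                guess = self.predict(feats)
                if guess != gold:
                    # Reward the gold label's weights, penalize the wrong guess.
                    for f in feats:
                        self.w[(f, gold)] += 1.0
                        self.w[(f, guess)] -= 1.0


def hybrid_tag(tokens, model):
    """Rules and list lookups fire first; the learned model handles the rest."""
    tags = []
    for tok in tokens:
        if DATE_RE.match(tok):
            tags.append("DATE")
        elif tok.lower() in GAZETTEER:
            tags.append(GAZETTEER[tok.lower()])
        else:
            tags.append(model.predict(features(tok)))
    return tags


# Toy training data (hypothetical): capitalized surnames vs. lowercase words.
train_data = [("Smith", "PER"), ("the", "O"), ("Jones", "PER"), ("runs", "O")]
model = Perceptron(["O", "PER"])
model.train(train_data)
print(hybrid_tag(["Brown", "visited", "Paris", "on", "2024-01-15"], model))
# ['PER', 'O', 'LOC', 'O', 'DATE']
```

The ordering is the design choice here: cheap, high-precision rules and lists are consulted before the statistical component, which only sees tokens they do not cover.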

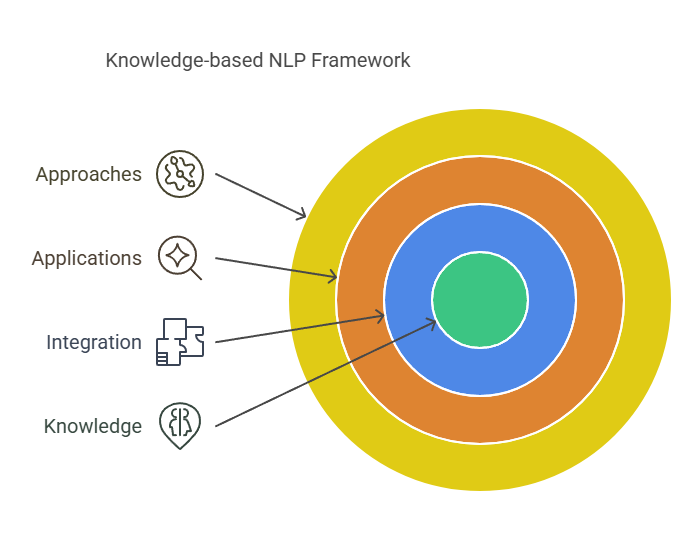

Important facets of an NLP knowledge base framework:

Representation of Knowledge:

Knowledge is arranged and structured into ontologies or knowledge bases, which can show the connections between facts, entities, and concepts.
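A common concrete form for such a knowledge base is a set of subject-relation-object triples, the data model used by RDF. The sketch below assumes a tiny, hypothetical in-memory knowledge base and a hypothetical `query` helper in which `None` acts as a wildcard; a production system would typically use a triple store and a query language such as SPARQL instead.

```python
from collections import namedtuple

# A fact is a (subject, relation, object) triple.
Triple = namedtuple("Triple", ["subj", "rel", "obj"])

# Hypothetical toy knowledge base for illustration.
KB = {
    Triple("Paris", "capital_of", "France"),
    Triple("France", "is_a", "Country"),
    Triple("Paris", "is_a", "City"),
    Triple("Seine", "flows_through", "Paris"),
}


def query(kb, subj=None, rel=None, obj=None):
    """Return matching triples in sorted order; None acts as a wildcard."""
    return sorted(
        t for t in kb
        if (subj is None or t.subj == subj)
        and (rel is None or t.rel == rel)
        and (obj is None or t.obj == obj)
    )


# All facts whose subject is Paris, i.e. the entity's outgoing connections.
for t in query(KB, subj="Paris"):
    print(t.subj, t.rel, t.obj)
```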

Integration of Knowledge:

NLP systems incorporate information from outside sources, including dictionaries, encyclopaedias, and domain-specific databases, into their processing pipelines.
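As a rough illustration of this kind of integration, the sketch below adds one pipeline stage that looks up tokens in an external dictionary and attaches the matching entry. It assumes a hypothetical domain dictionary `DOMAIN_DICT` keyed by lowercase token tuples and uses greedy longest match so that multiword terms take precedence over their parts; real pipelines often rely on fuzzier matching and dedicated entity-linking models.

```python
# Hypothetical external resource: a domain dictionary mapping (possibly
# multiword) terms to knowledge-base entries.
DOMAIN_DICT = {
    ("myocardial", "infarction"): {"id": "KB:001", "gloss": "heart attack"},
    ("aspirin",): {"id": "KB:002", "gloss": "pain reliever"},
}
MAX_LEN = max(len(k) for k in DOMAIN_DICT)


def annotate(tokens):
    """Pipeline stage: attach dictionary entries using greedy longest match."""
    out, i = [], 0
    lowered = [t.lower() for t in tokens]
    while i < len(tokens):
        # Try the longest candidate span first, then shorter ones.
        for n in range(min(MAX_LEN, len(tokens) - i), 0, -1):
            key = tuple(lowered[i:i + n])
            if key in DOMAIN_DICT:
                out.append((" ".join(tokens[i:i + n]), DOMAIN_DICT[key]))
                i += n
                break
        else:
            out.append((tokens[i], None))  # no knowledge attached
            i += 1
    return out


print(annotate("Aspirin after a myocardial infarction".split()))
```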

Areas of Application:

Knowledge-based Natural Language Processing is applied to a number of activities, such as:

- Word sense disambiguation: using knowledge bases to determine the correct meaning of a word in context.

- Question answering: using knowledge resources to retrieve answers to questions posed in plain language.

- Text simplification: making complex texts easier to read by substituting simpler synonyms or explanations for difficult concepts.

- Information extraction: using knowledge bases to identify entities and relationships in unstructured text and extract structured information.

- Machine translation: using domain-specific information to increase translation accuracy.
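Text simplification, in its simplest lexical form, can be approximated by dictionary-driven substitution of plainer words for difficult ones. This sketch assumes a hypothetical simplification lexicon `SIMPLE_LEXICON`; it ignores inflected forms and word sense, both of which a practical system would need to handle.

```python
import re

# Hypothetical simplification lexicon: difficult word -> plainer substitute.
SIMPLE_LEXICON = {
    "utilise": "use",
    "commence": "start",
    "approximately": "about",
    "terminate": "end",
}
WORD_RE = re.compile(r"[A-Za-z]+")


def simplify(text):
    """Replace words found in the lexicon, preserving an initial capital."""
    def substitute(match):
        word = match.group(0)
        simple = SIMPLE_LEXICON.get(word.lower())
        if simple is None:
            return word
        return simple.capitalize() if word[0].isupper() else simple
    return WORD_RE.sub(substitute, text)


print(simplify("Commence the test and terminate after approximately ten minutes."))
# Start the test and end after about ten minutes.
```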

Knowledge-based NLP approaches include, for example:

Lesk Algorithm: A well-known word sense disambiguation method that selects a word's contextually appropriate sense by comparing its dictionary definitions with the surrounding context and choosing the definition with the greatest word overlap.
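Below is a minimal sketch of the simplified Lesk variant, which compares each sense's gloss directly with the words of the surrounding sentence (the original Lesk algorithm instead compared the glosses of neighbouring words with each other). The two-sense inventory for "bank" and the stopword list are hypothetical; a real system would draw glosses from a dictionary such as WordNet.

```python
import re

# Hypothetical sense inventory: word -> {sense id: dictionary gloss}.
SENSES = {
    "bank": {
        "bank.finance": "an institution that accepts deposits of money and makes loans",
        "bank.river": "the sloping land beside a body of water such as a river",
    }
}
# A few function words excluded from overlap counting.
STOPWORDS = {"a", "an", "the", "of", "and", "that", "such", "as", "to", "on", "in", "at", "my"}


def content_words(text):
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def simplified_lesk(word, sentence):
    """Pick the sense whose gloss shares the most words with the context.

    Ties are resolved in favour of the sense listed first.
    """
    context = content_words(sentence) - {word}
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context & content_words(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense


print(simplified_lesk("bank", "I deposited money at the bank"))    # bank.finance
print(simplified_lesk("bank", "We sat on the bank of the river"))  # bank.river
```

Note that "deposited" does not match "deposits" because no stemming is applied; real implementations usually lemmatize both sides first.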

Semantic Parsing: Converting natural-language queries into logical forms and then evaluating those forms against knowledge bases to produce answers.
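Semantic parsing followed by execution can be illustrated with a deliberately tiny setup. The sketch assumes a hypothetical knowledge base keyed by (relation, argument) pairs and hypothetical regex templates that map questions to logical forms; real semantic parsers produce richer representations (for example lambda-calculus expressions or database queries) and are usually learned rather than hand-written.

```python
import re

# Hypothetical knowledge base: (relation, argument) -> answer.
KB = {
    ("capital_of", "France"): "Paris",
    ("capital_of", "Japan"): "Tokyo",
    ("currency_of", "Japan"): "yen",
}

# Hypothetical templates mapping question patterns to a relation name.
PATTERNS = [
    (re.compile(r"what is the capital of (\w+)\??", re.IGNORECASE), "capital_of"),
    (re.compile(r"what is the currency of (\w+)\??", re.IGNORECASE), "currency_of"),
]


def parse(question):
    """Map a question to a logical form (relation, argument), or None."""
    for pattern, relation in PATTERNS:
        m = pattern.fullmatch(question.strip())
        if m:
            return (relation, m.group(1).capitalize())
    return None


def execute(logical_form):
    """Evaluate a logical form against the knowledge base."""
    if logical_form is None:
        return None
    return KB.get(logical_form)


lf = parse("What is the capital of Japan?")
print(lf, "->", execute(lf))
```

Keeping parsing and execution as separate steps mirrors the two-stage description: the logical form is an intermediate representation that could be sent to any knowledge base that understands it.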

NLP Knowledge Base Advantages

Enhanced Precision: Domain-specific knowledge can help NLP systems predict and make judgements more accurately.

Improved Contextual Understanding: Knowledge bases help NLP systems interpret linguistic context and resolve ambiguities.

Improved Inference and Reasoning: With knowledge bases, NLP systems can carry out reasoning and inference tasks, such as deriving conclusions from facts and relationships.
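Inference over a knowledge base can be sketched with forward chaining: rules are applied to the known facts repeatedly until no new facts appear. The two rules (transitivity of `is_a` and inheritance of `has` properties) and the facts are hypothetical examples chosen for illustration.

```python
def forward_chain(facts):
    """Apply inference rules until no new facts can be derived (a fixpoint).

    Rules (hypothetical, for illustration):
      1. is_a is transitive: (x is_a y), (y is_a z) => (x is_a z)
      2. properties are inherited: (x is_a y), (y has p) => (x has p)
    """
    facts = set(facts)
    while True:
        new = set()
        for (a, r1, b) in facts:
            if r1 != "is_a":
                continue
            for (c, r2, d) in facts:
                if c == b and r2 in ("is_a", "has"):
                    new.add((a, r2, d))
        if new <= facts:  # nothing new was derived
            return facts
        facts |= new


kb = {
    ("Tweety", "is_a", "Canary"),
    ("Canary", "is_a", "Bird"),
    ("Bird", "has", "wings"),
}
derived = forward_chain(kb)
print(("Tweety", "has", "wings") in derived)  # True
```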

Enhanced Flexibility: A greater variety of linguistic tasks and domains may be handled by knowledge-based NLP systems.

NLP Knowledge Base Drawbacks

Although knowledge-based methods can offer detailed, organized representations, they have several drawbacks. They may struggle with the inherent ambiguity and unpredictability of natural language. Manually crafting intricate rules can create a substantial knowledge acquisition bottleneck and, compared with data-driven approaches, produce algorithms that perform poorly on naturally occurring text. In-depth domain knowledge represented in formalisms such as First-Order Predicate Calculus (FOPC) is difficult to learn. Pure finite-state models may likewise have trouble with complex morphological phenomena that defy common prefix/suffix assumptions.

Acquiring and Maintaining Knowledge: Building and maintaining large, accurate knowledge bases can be difficult and time-consuming.

Development of Ontologies: It can be difficult to create suitable ontologies that encapsulate the pertinent domain-specific knowledge.

Scalability: Large knowledge bases can be computationally costly to integrate into NLP systems.

Updates to the Knowledge Base: It can be difficult to keep knowledge bases current with new and changing information.

NLP uses many methods to help computers understand and process human language. These methods fall into two broad paradigms: probabilistic (statistical or machine learning) and knowledge-based (rule-based or symbolic). The history and current state of NLP show how these paradigms have changed and continue to interact.

Knowledge-based systems in Natural Language Processing (NLP) are built on handcrafted rules and linguistic information, usually derived from human expertise. These techniques, which have their roots in philosophical rationalism, create computational models from intricate sets of rules and procedures specific to particular language components. Rule-based approaches dominated the early history of NLP.

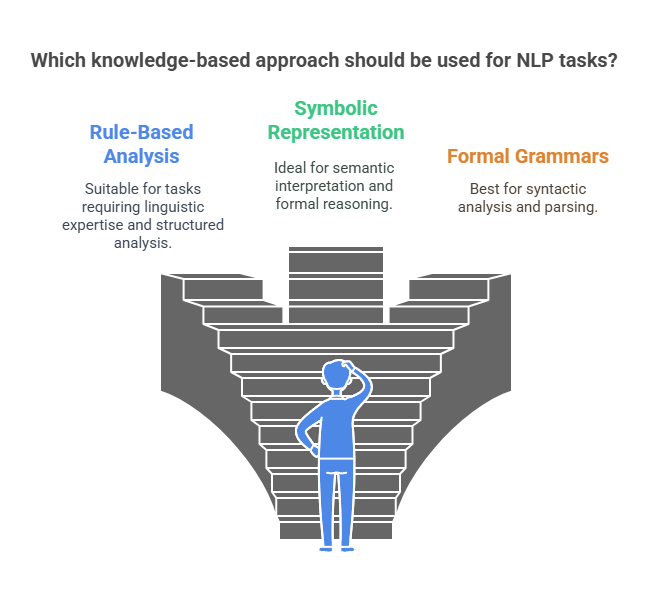

Knowledge-based approaches to NLP tasks typically involve the following:

- Assigning tags or analyzing structures using manually created rules derived from linguistic expertise.

- Reliance on symbolic representations, such as predicate calculus or other logics, for semantic interpretation. Computational semantics sometimes uses variants such as the typed lambda calculus. Discourse Representation Theory (DRT) is another formal method for addressing issues such as anaphora resolution and semantic interpretation.

- Because these systems rely on rules that manipulate symbolic representations, formalisms such as unification-based or feature-based grammars became important.

- Finite-state methods, such as finite-state automata and transducers, are commonly used in computational approaches to word structure and sound patterns (morphology and phonology). Spelling rules can also be implemented with finite-state transducers.

- For syntactic analysis or parsing, formal grammars such as context-free grammars (CFGs) and generative grammar are employed.

- Knowledge-based question answering builds a semantic representation of the query, such as a logical form, and uses it to query databases of facts. Early AI-based QA systems answered queries using information encoded in databases. Building formal meaning representations is one way to bridge the gap between language and commonsense understanding.

- These methods make use of static knowledge sources such as fact databases, ontologies, onomasticons (lexicons of names), and lexicons that link ontologies with natural language. Knowledge representation languages are used to specify ontologies, lexicons, and meaning structures. The importance of ontology-based methods in semantic research is increasingly recognized.

- Lexical analysis is a fundamental NLP step that is often associated with traditional, rule-based methods.
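For syntactic analysis with a context-free grammar, one standard algorithm is CKY (Cocke-Kasami-Younger), which fills a table of spans bottom-up and requires the grammar to be in Chomsky normal form. The sketch below is a recognizer only (it answers yes or no rather than building trees), and the toy grammar and lexicon are hypothetical.

```python
from itertools import product

# Hypothetical toy grammar in Chomsky normal form (CNF).
BINARY = {  # (B, C) -> set of A such that A -> B C
    ("NP", "VP"): {"S"},
    ("Det", "N"): {"NP"},
    ("V", "NP"): {"VP"},
}
LEXICAL = {  # word -> set of A such that A -> word
    "the": {"Det"},
    "dog": {"N"},
    "cat": {"N"},
    "chased": {"V"},
}


def cky_recognize(words, start="S"):
    """Return True if the CNF grammar derives the sentence from `start`."""
    n = len(words)
    # table[i][j] holds the nonterminals that span words[i:j].
    table = [[set() for _ in range(n + 1)] for _ in range(n + 1)]
    for i, w in enumerate(words):
        table[i][i + 1] = set(LEXICAL.get(w, set()))
    for span in range(2, n + 1):
        for i in range(n - span + 1):
            j = i + span
            for k in range(i + 1, j):  # split point between the two children
                for b, c in product(table[i][k], table[k][j]):
                    table[i][j] |= BINARY.get((b, c), set())
    return n > 0 and start in table[0][n]


print(cky_recognize("the dog chased the cat".split()))  # True
print(cky_recognize("dog the chased cat the".split()))  # False
```

The cubic triple loop over span, start, and split point is what makes CKY run in O(n^3) time in the sentence length for a fixed grammar.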