What Is the Statistical or Probabilistic Approach in NLP?

In Natural Language Processing (NLP), probabilistic approaches, also known as statistical NLP, model and process language with quantitative techniques, especially probability theory. This contrasts with purely knowledge-based or symbolic methods, which depend mostly on hand-crafted linguistic rules and symbolic representations. Statistical techniques have become increasingly important in NLP in recent years, largely because of the realisation that natural language is extremely varied and ambiguous.

From the perspective of statistical natural language processing, language processing means taking data and applying machine learning techniques to extract information, find patterns, predict missing information, or build probabilistic models. These approaches reflect an empiricist view of language that emphasises learning from evidence and detecting correlations and preferences in language use, in contrast to the categorical judgements often associated with rationalist or Chomskyan linguistics. The quantitative approach of statistical NLP is believed to be able to handle the non-categorical phenomena present in language.

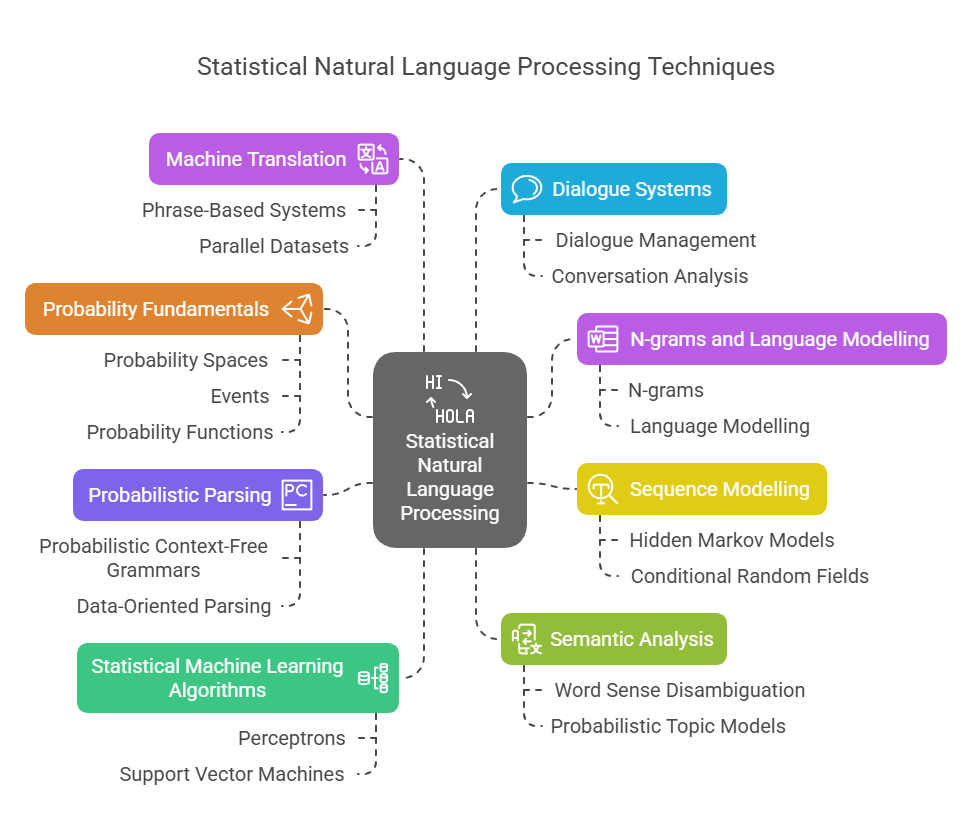

Important models, methods, and formalisms in probabilistic NLP include:

- Probability Fundamentals: The fundamentals of probability include concepts such as probability spaces, events, probability functions, distributions, conditional probability, and joint probability. Techniques such as relative frequency estimation are essential for estimating probabilities from data. In statistical NLP, Bayesian statistics offers a valuable alternative to frequentist statistics, and the notions of prior and posterior probability are central to it.

- N-grams and Language Modelling: An n-gram is a sequence of N words; n-gram models use the preceding N-1 words to estimate the probability of the next word. The goal of language modelling is to predict the next word or to assign a probability to a sequence of words. Modelling word sequences with n-grams builds on early work by Markov and Shannon. Estimating n-gram probabilities, particularly from sparse data, requires smoothing techniques such as backoff or interpolation, notably Kneser-Ney smoothing. N-gram language models are evaluated intrinsically with perplexity or extrinsically by their performance on downstream tasks. Despite their influence, n-gram models have largely been replaced by neural language models.

- Sequence Modelling: Probabilistic models are commonly used for tasks such as Part-of-Speech (POS) tagging, in which each word in a sequence is assigned a tag. Hidden Markov Models (HMMs), a traditional generative approach to sequence tagging, typically estimate their probabilities by maximum likelihood and use the Viterbi algorithm to find the most likely tag sequence. Discriminative models that learn to select the best sequence from many features, such as Maximum Entropy models and Conditional Random Fields (CRFs), are also used.

- Probabilistic Parsing: In syntactic analysis, probabilities are used to determine the grammatical structure of sentences. An important formalism is the Probabilistic Context-Free Grammar (PCFG), which attaches probabilities to CFG rules. Statistical parsers use these probabilities to choose among several possible parse trees. Other probabilistic parsing techniques include Data-Oriented Parsing (DOP), which derives probabilities from subtrees observed in a corpus, and History-Based Models, which condition parsing decisions on the derivation history. Probabilistic variants of LR parsing have also been investigated.

- Statistical Machine Learning Algorithms: Many methods used in statistical NLP come from machine learning, including both supervised and unsupervised techniques. Common techniques include Perceptrons, Support Vector Machines (SVMs), Logistic Regression, and Naive Bayes classifiers (which make strong independence assumptions and are frequently used for text categorisation). For tasks such as unsupervised word sense induction, latent variable models such as mixture models are used, typically trained with the Expectation-Maximization (EM) algorithm.

- Semantic Analysis: Although semantic analysis has historically been symbolic, statistical methods also help in understanding meaning. Word Sense Disambiguation (WSD) can use probabilistic or dictionary-based approaches. From a statistical perspective, a word's meaning can also be seen as residing in the distribution of contexts in which it occurs. Probabilistic topic models can be used to analyse the topics of a text. Statistical methods have also been applied to semantic parsing and textual entailment. Even when meaning is represented in a formalism such as First-Order Predicate Calculus (FOPC), practical systems may still need probabilistic reasoning.

- Machine Translation: Phrase-based statistical machine translation systems, whose models are trained on parallel corpora, have been widely used.

- Dialogue Systems: Dialogue management can benefit from probabilistic reasoning, and dialogue acts can be labelled with probabilistic decision trees.
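As a minimal sketch of the probability fundamentals listed above, the Python snippet below estimates unigram and conditional (bigram) probabilities by relative frequency from a tiny made-up corpus, then applies Bayes' rule to combine a prior with a likelihood into a posterior. The corpus, the two senses of "bank", and their probabilities are invented for illustration, and `p_word`, `p_next`, and `posterior` are hypothetical helper names.

```python
from collections import Counter

# Tiny made-up corpus, for illustration only.
corpus = "the cat sat on the mat the dog sat on the log".split()

# Relative frequency (maximum likelihood) estimate: P(w) = count(w) / N
unigram_counts = Counter(corpus)
N = len(corpus)

def p_word(w):
    return unigram_counts[w] / N

# Conditional probability from counts: P(w2 | w1) = count(w1 w2) / count(w1 as a history)
bigram_counts = Counter(zip(corpus, corpus[1:]))
history_counts = Counter(corpus[:-1])

def p_next(w2, w1):
    return bigram_counts[(w1, w2)] / history_counts[w1]

# Bayes' rule: P(h | e) = P(e | h) P(h) / sum over h' of P(e | h') P(h')
def posterior(priors, likelihoods):
    joint = {h: likelihoods[h] * priors[h] for h in priors}
    evidence = sum(joint.values())
    return {h: j / evidence for h, j in joint.items()}

# Made-up numbers: two senses of "bank", observed context word "water"
priors = {"finance": 0.7, "river": 0.3}        # P(sense)
likelihoods = {"finance": 0.01, "river": 0.2}  # P("water" | sense)

print(round(p_word("the"), 3))                            # 0.333
print(p_next("on", "sat"))                                # 1.0
print(round(posterior(priors, likelihoods)["river"], 3))  # 0.896
```

Here the lower prior for the river sense is overturned by strong evidence from the context word, which is exactly the prior-to-posterior update the Bayesian view describes.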
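The n-gram language modelling item above can be illustrated with a small bigram model that uses simple linear interpolation between a bigram estimate and an add-one unigram estimate, evaluated by perplexity. This is a deliberately simpler stand-in for Kneser-Ney smoothing, not an implementation of it; the training sentences, the weight `LAMBDA = 0.7`, and all function names are assumptions for illustration.

```python
import math
from collections import Counter

# Tiny made-up training set
train = ["i like green eggs", "i like ham", "sam likes green ham"]

def tokenize(sentence):
    return ["<s>"] + sentence.split() + ["</s>"]

history, bigram, unigram = Counter(), Counter(), Counter()
for s in train:
    toks = tokenize(s)
    history.update(toks[:-1])                # words that start a bigram
    unigram.update(toks[1:])                 # predicted tokens (excludes <s>)
    bigram.update(zip(toks[:-1], toks[1:]))

N = sum(unigram.values())  # number of predicted tokens
V = len(unigram)           # vocabulary size
LAMBDA = 0.7               # interpolation weight: an assumption, normally tuned on held-out data

def p_interp(w, prev):
    # Add-one unigram over V known types plus one unknown-word slot,
    # so unseen words still get nonzero probability
    p_uni = (unigram[w] + 1) / (N + V + 1)
    if history[prev] == 0:
        return p_uni  # unseen history: fall back to the unigram estimate
    p_bi = bigram[(prev, w)] / history[prev]
    return LAMBDA * p_bi + (1 - LAMBDA) * p_uni

def perplexity(sentences):
    # exp of the average negative log-probability per predicted token
    log_prob, n = 0.0, 0
    for s in sentences:
        toks = tokenize(s)
        for prev, w in zip(toks, toks[1:]):
            log_prob += math.log(p_interp(w, prev))
            n += 1
    return math.exp(-log_prob / n)

print(perplexity(["i like green ham"]))  # fluent word order: lower perplexity
print(perplexity(["ham green like i"]))  # scrambled order: much higher perplexity
```

Lower perplexity means the model finds the text less surprising; the interpolation keeps the scrambled sentence from getting zero probability even though none of its bigrams were seen in training.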
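For the sequence modelling item, the Viterbi algorithm for an HMM tagger can be sketched as follows. The tag set and every start, transition, and emission probability are made up; in practice they would be maximum likelihood estimates from a tagged corpus. The sketch works in log space to avoid numerical underflow on long sentences.

```python
import math

# Toy HMM; every probability here is made up for illustration.
states = ["DET", "NOUN", "VERB"]
start_p = {"DET": 0.6, "NOUN": 0.3, "VERB": 0.1}
trans_p = {
    "DET":  {"DET": 0.05, "NOUN": 0.9, "VERB": 0.05},
    "NOUN": {"DET": 0.1, "NOUN": 0.3, "VERB": 0.6},
    "VERB": {"DET": 0.5, "NOUN": 0.3, "VERB": 0.2},
}
emit_p = {
    "DET":  {"the": 0.9, "a": 0.1},
    "NOUN": {"dog": 0.4, "barks": 0.1, "park": 0.5},
    "VERB": {"barks": 0.8, "park": 0.2},
}

def safe_log(p):
    return math.log(p) if p > 0 else float("-inf")

def viterbi(words):
    # best[t][s]: log-probability of the best tag path ending in tag s at position t
    best = [{s: safe_log(start_p[s]) + safe_log(emit_p[s].get(words[0], 0.0)) for s in states}]
    back = [{}]
    for t in range(1, len(words)):
        best.append({})
        back.append({})
        for s in states:
            # Pick the predecessor tag that maximises the path score into s
            prev = max(states, key=lambda r: best[t - 1][r] + safe_log(trans_p[r][s]))
            best[t][s] = (best[t - 1][prev] + safe_log(trans_p[prev][s])
                          + safe_log(emit_p[s].get(words[t], 0.0)))
            back[t][s] = prev
    # Follow back-pointers from the best final tag
    tag = max(states, key=lambda s: best[-1][s])
    path = [tag]
    for t in range(len(words) - 1, 0, -1):
        tag = back[t][tag]
        path.append(tag)
    return path[::-1]

print(viterbi("the dog barks".split()))  # ['DET', 'NOUN', 'VERB']
```

"barks" could be a noun or a verb here; the transition NOUN to VERB and the high VERB emission probability make the verb reading win.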
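To illustrate how a PCFG lets a statistical parser choose among parse trees, the sketch below scores two hand-built parses of "I saw the man with the telescope" (prepositional phrase attached to the verb phrase vs. to the noun phrase). A tree's probability is the product of the probabilities of the rules it uses. The grammar and its probabilities are made up, and this only ranks given trees; a real parser would search over all trees, for example with probabilistic CKY.

```python
# Made-up PCFG: probabilities for each left-hand side sum to 1
rules = {
    ("S", ("NP", "VP")): 1.0,
    ("VP", ("V", "NP")): 0.6,
    ("VP", ("V", "NP", "PP")): 0.4,
    ("NP", ("Det", "N")): 0.5,
    ("NP", ("NP", "PP")): 0.2,
    ("NP", ("Pron",)): 0.3,
    ("PP", ("P", "NP")): 1.0,
    ("Pron", ("I",)): 1.0,
    ("V", ("saw",)): 1.0,
    ("Det", ("the",)): 1.0,
    ("N", ("man",)): 0.5,
    ("N", ("telescope",)): 0.5,
    ("P", ("with",)): 1.0,
}

def tree_prob(tree):
    """P(tree) = product of the probabilities of every rule used in it.
    A tree is (label, child, ...); a child is a subtree or a word string."""
    label, *children = tree
    rhs = tuple(c if isinstance(c, str) else c[0] for c in children)
    p = rules.get((label, rhs), 0.0)
    for c in children:
        if not isinstance(c, str):
            p *= tree_prob(c)
    return p

subj = ("NP", ("Pron", "I"))
obj = ("NP", ("Det", "the"), ("N", "man"))
pp = ("PP", ("P", "with"), ("NP", ("Det", "the"), ("N", "telescope")))

vp_attach = ("S", subj, ("VP", ("V", "saw"), obj, pp))        # saw ... using the telescope
np_attach = ("S", subj, ("VP", ("V", "saw"), ("NP", obj, pp)))  # the man who has the telescope

best = max([vp_attach, np_attach], key=tree_prob)
print(tree_prob(vp_attach), tree_prob(np_attach))
print(best is vp_attach)  # True
```

With these invented numbers the verb attachment scores 0.0075 against 0.00225 for the noun attachment; different rule probabilities, learned from a treebank, could reverse that preference.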
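The Naive Bayes classifier from the machine learning item can be sketched for text categorisation as follows. It combines a log prior per class with add-one smoothed word likelihoods, treating words as independent given the class (the independence assumption mentioned above). The training data, labels, and function name are hypothetical, and ignoring unseen words is one simple design choice among several.

```python
import math
from collections import Counter, defaultdict

# Hypothetical labelled training data
train = [
    ("great fun film", "pos"),
    ("a great cast", "pos"),
    ("boring and dull", "neg"),
    ("dull plot bad acting", "neg"),
]

class_docs = Counter()             # documents per class
word_counts = defaultdict(Counter)  # word counts per class
vocab = set()
for text, label in train:
    class_docs[label] += 1
    tokens = text.split()
    word_counts[label].update(tokens)
    vocab.update(tokens)

def predict(text):
    scores = {}
    for c in class_docs:
        # Log prior P(c) by relative frequency
        score = math.log(class_docs[c] / len(train))
        total = sum(word_counts[c].values())
        for w in text.split():
            if w not in vocab:
                continue  # ignore words never seen in training
            # Add-one (Laplace) smoothed likelihood P(w | c)
            score += math.log((word_counts[c][w] + 1) / (total + len(vocab)))
        scores[c] = score
    return max(scores, key=scores.get)

print(predict("great film"))   # pos
print(predict("dull acting"))  # neg
```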
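The distributional view of meaning in the semantic analysis item can be made concrete by representing each word as a vector of counts of the words that appear near it and comparing words by cosine similarity. The corpus, the window size of 2, and the helper names are assumptions; real systems usually reweight counts (for example with PMI) or learn dense embeddings instead of using raw counts.

```python
import math
from collections import Counter, defaultdict

# Tiny made-up corpus
corpus = [
    "we drink hot coffee every morning",
    "we drink hot tea every evening",
    "we drive a red car every morning",
    "they drive a fast truck every day",
]
WINDOW = 2  # context = up to 2 words on each side (an assumption)

def context_vectors(sentences, window):
    vectors = defaultdict(Counter)
    for s in sentences:
        toks = s.split()
        for i, w in enumerate(toks):
            lo, hi = max(0, i - window), min(len(toks), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[w][toks[j]] += 1
    return vectors

def cosine(u, v):
    dot = sum(u[k] * v[k] for k in u)
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0

vecs = context_vectors(corpus, WINDOW)
print(cosine(vecs["coffee"], vecs["tea"]))  # 0.75
print(cosine(vecs["coffee"], vecs["car"]))  # 0.5
```

"coffee" and "tea" share the contexts "drink", "hot", and "every", so they come out more similar than "coffee" and "car", even though the model was never told what any word means.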
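As one example of a training technique applied to parallel data, and of the EM algorithm mentioned earlier, the sketch below trains IBM Model 1 word-translation probabilities t(e | f) on a three-sentence German-English toy corpus. Phrase-based pipelines typically start from word alignments of this kind before extracting phrases, but this is only that first building block, simplified further by omitting the NULL word; the corpus and the iteration count are assumptions.

```python
from collections import defaultdict

# Toy parallel corpus (German -> English), made up for illustration
pairs = [
    ("das haus", "the house"),
    ("das buch", "the book"),
    ("ein buch", "a book"),
]
corpus = [(f.split(), e.split()) for f, e in pairs]

# Initialise t(e | f) uniformly over the English vocabulary
e_vocab = {w for _, e in corpus for w in e}
t = defaultdict(lambda: 1.0 / len(e_vocab))

for _ in range(20):  # number of EM iterations chosen arbitrarily
    count = defaultdict(float)  # expected counts c(e, f)
    total = defaultdict(float)  # expected counts c(f)
    # E-step: share each English word among the foreign words that could have produced it
    for f_sent, e_sent in corpus:
        for e in e_sent:
            z = sum(t[(e, f)] for f in f_sent)
            for f in f_sent:
                frac = t[(e, f)] / z
                count[(e, f)] += frac
                total[f] += frac
    # M-step: re-estimate t(e | f) from the expected counts
    t = defaultdict(float, {(e, f): c / total[f] for (e, f), c in count.items()})

for f in ["das", "haus", "buch", "ein"]:
    best_e = max(e_vocab, key=lambda e: t[(e, f)])
    print(f, "->", best_e, round(t[(best_e, f)], 3))
```

No alignments are given in the data; EM recovers them from co-occurrence alone, because "das" appears with "the" in two sentences and that evidence progressively disambiguates "haus", "buch", and "ein".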

Probabilistic approaches require empirical evaluation of system performance, frequently using metrics such as precision and recall.
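A minimal sketch of precision and recall, plus their harmonic mean F1, computed over sets of predicted and gold-standard items. The named-entity spans are invented, and the function name is hypothetical.

```python
def precision_recall_f1(predicted, gold):
    """predicted, gold: sets of items (e.g. entity spans) labelled by the system / annotators."""
    tp = len(predicted & gold)  # true positives: items in both sets
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Hypothetical gold and predicted named-entity spans: (start, end, type)
gold = {(0, 2, "PER"), (5, 6, "LOC"), (9, 11, "ORG")}
pred = {(0, 2, "PER"), (5, 6, "ORG"), (9, 11, "ORG"), (13, 14, "LOC")}

p, r, f = precision_recall_f1(pred, gold)
print(f"precision={p:.3f} recall={r:.3f} f1={f:.3f}")
```

Two of the four predicted spans are correct (precision 0.5) and two of the three gold spans are found (recall about 0.667); the span with the wrong entity type counts as an error on both sides.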

Modern NLP systems commonly use hybrid approaches that combine rule-based or linguistic insights with statistical and machine learning techniques. Building on these statistical foundations, deep learning architectures such as recurrent neural networks (RNNs) and Transformers are used for probabilistic language modelling, sequence labelling, and sequence classification, and they represent the state of the art for many tasks.