What is Data Acquisition?

The process of gathering, preparing, and organizing unprocessed textual data for use in training and assessing natural language processing (NLP) models is known as data acquisition. Since the performance and generalizability of models are strongly impacted by the quality and diversity of data, it is one of the most important elements in any NLP pipeline.

Importance of Data Acquisition in NLP

NLP models use the data they are trained on to identify patterns, relationships, and structures in human language. Building dependable applications such as chatbots, translators, summarizers, and sentiment analyzers therefore requires obtaining the right data in terms of quality, quantity, language variety, and domain relevance.

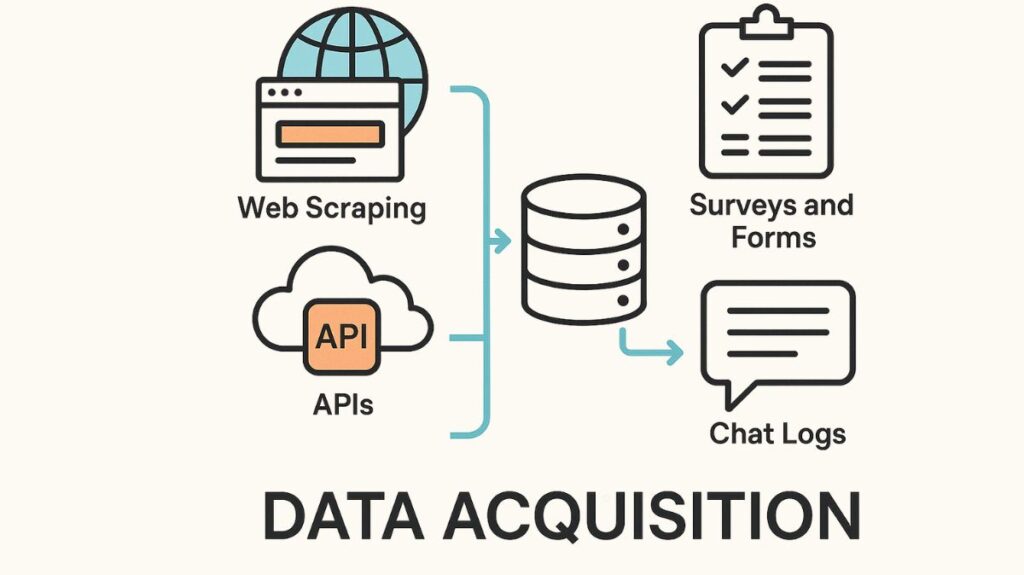

There are several sources of NLP data, including:

- Web scraping: Extracting text from Wikipedia, blogs, news portals, and forums.

- Open datasets: Using Common Crawl, BookCorpus, OpenSubtitles, and LDC corpora.

- APIs: Using Twitter, Reddit, and newspaper APIs to gather both structured and unstructured data.

- Internal or proprietary data: Using emails, service requests, or customer reviews as training data, which frequently requires businesses to adhere to stringent privacy regulations.
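As a rough illustration of the web scraping source, the sketch below pulls visible text out of an HTML page using only Python's standard library. The `TextExtractor` class, the `extract_text` helper, and the sample page are hypothetical names made up for this example, and a real scraper would also need to fetch pages, respect robots.txt, and handle far messier markup.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text and skips the contents of <script> and <style>."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # greater than 0 while inside a skipped tag
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def extract_text(html: str) -> str:
    """Return the page's text chunks joined by single spaces."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical page used only to demonstrate the helper.
sample_page = """
<html><head><title>Demo</title><style>p {color: red;}</style></head>
<body><h1>NLP data</h1><p>Text is <b>everywhere</b>.</p>
<script>console.log("ignored");</script></body></html>
"""
print(extract_text(sample_page))
```

Libraries such as BeautifulSoup or Scrapy cover the same ground with far more robustness; the standard-library version is shown here only to keep the example self-contained.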

Data Acquisition Examples

- Web scraping: Gathering articles, blogs, or reviews from websites such as news sites, Amazon, and Reddit.

- APIs: Collecting structured text data through services such as the Google Books API or the Twitter API.

- Public datasets: Making use of publicly available datasets from sites like Wikipedia, Common Crawl, Kaggle, Hugging Face, or UCI.

- Forms and surveys: Gathering free-text user responses.

- Customer service tickets and chat logs: Used to train conversational AI.

Benefits

- Model training: High-quality data improves the accuracy and performance of NLP models.

- Domain adaptation: Helps customize models for specific industries, such as law, healthcare, or finance.

- Real-world relevance: Ensures that the NLP system handles slang, jargon, and current language usage.

- Diversity and inclusion: Models are more equitable and inclusive when datasets capture a variety of dialects, accents, and cultural backgrounds.

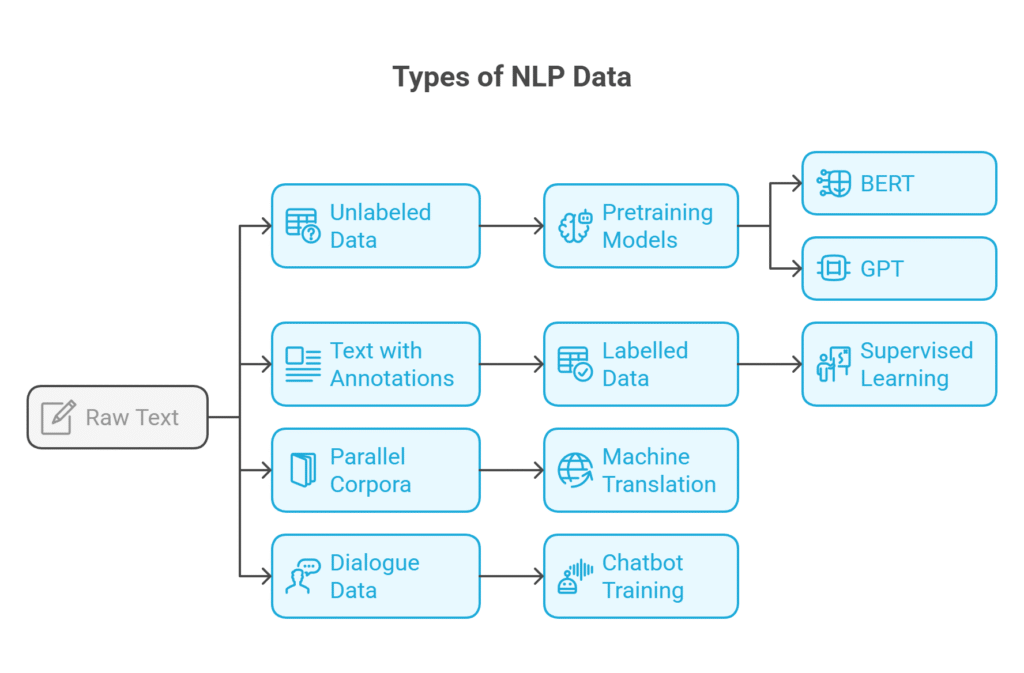

Types of NLP Data

Data acquisition targets different text types depending on the task:

- Unlabeled data: Raw text without annotations, used in unsupervised learning and in pretraining models such as BERT and GPT.

- Labeled data: Text with annotations (such as sentiment tags, named entities, or part-of-speech tags), required for supervised learning.

- Parallel corpora: Collections of aligned text in several languages, used for machine translation.

- Dialogue data: Useful for training chatbots or conversational agents.
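To make the four data types more concrete, here is one possible way to represent them in Python. The class names, field names, and sample sentences are illustrative assumptions, not a standard schema; real datasets usually carry additional fields such as IDs, annotator information, or alignment scores.

```python
from dataclasses import dataclass


@dataclass
class LabeledExample:
    """A text paired with an annotation, as used in supervised learning."""
    text: str
    label: str


@dataclass
class ParallelPair:
    """One aligned sentence pair from a parallel corpus."""
    source: str
    target: str
    source_lang: str
    target_lang: str


@dataclass
class DialogueTurn:
    """A single utterance in a conversation."""
    speaker: str
    utterance: str


# Unlabeled data is just raw text with no annotations attached.
unlabeled = ["The movie starts at nine.", "Rain is expected tomorrow."]

labeled = [
    LabeledExample("I loved this phone.", "positive"),
    LabeledExample("The battery died in a day.", "negative"),
]

parallel = [ParallelPair("Good morning.", "Bonjour.", "en", "fr")]

dialogue = [
    DialogueTurn("user", "Where is my order?"),
    DialogueTurn("agent", "Let me check that for you."),
]
```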

Preparing the Gathered Information

After acquisition, data typically needs to be thoroughly cleaned and preprocessed:

- Text normalization: Lowercasing, punctuation removal, stemming, and lemmatization.

- Tokenization: Splitting text into words or subwords.

- Noise reduction: Removing spam, HTML elements, and advertisements.

- Emoji and special-character handling: Particularly important for social media data.
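The cleaning steps above can be sketched with the standard library alone. This is a minimal sketch under several assumptions: the `preprocess` function and the `<emoji>` placeholder token are made up for the example, the emoji pattern covers only some common Unicode ranges, tokenization is plain whitespace splitting, and stemming and lemmatization are left out because they normally rely on a library such as NLTK or spaCy.

```python
import re
import string
import unicodedata

# Crude HTML tag pattern; a real pipeline would use a proper HTML parser.
TAG_RE = re.compile(r"<[^>]+>")
# Assumption: these ranges cover many common emoji and symbols, not all of them.
EMOJI_RE = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")
# Translation table that deletes ASCII punctuation.
PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def preprocess(text: str) -> list[str]:
    """Strip tags, normalize, remove punctuation, mark emoji, and tokenize."""
    text = TAG_RE.sub(" ", text)                 # noise reduction
    text = unicodedata.normalize("NFKC", text)   # unify Unicode variants
    text = text.lower().translate(PUNCT_TABLE)   # lowercase, drop punctuation
    text = EMOJI_RE.sub(" <emoji> ", text)       # replace emoji with a placeholder
    return text.split()                          # whitespace tokenization


print(preprocess("<p>LOVED it!!! \U0001F60D Best. Phone. Ever.</p>"))
```

Whether emoji should be replaced, removed, or kept as-is depends on the task; for sentiment analysis they often carry useful signal, which is why this sketch keeps a placeholder rather than deleting them.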

Ethical Considerations

Legal and ethical concerns must be taken into consideration when gathering NLP data:

- Data privacy: Ensuring that personally identifiable information (PII) is removed or protected.

- Bias reduction: Avoiding data that can introduce linguistic or societal bias into models.

- Licensing: Making sure that the data used complies with terms of service and copyright law.
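For the data privacy point, a first line of defense is often pattern-based redaction. The sketch below masks email addresses and phone-like numbers with regular expressions. The `redact_pii` function and both patterns are assumptions written for this example; they are deliberately simple, will miss many kinds of PII (names, addresses, IDs), and can produce false positives on other long digit sequences, so they should not be treated as sufficient for compliance on their own.

```python
import re

# Simplified patterns (assumptions): real-world PII detection needs much more.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


print(redact_pii("Contact jane.doe@example.com or call +1 (555) 010-9999."))
```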

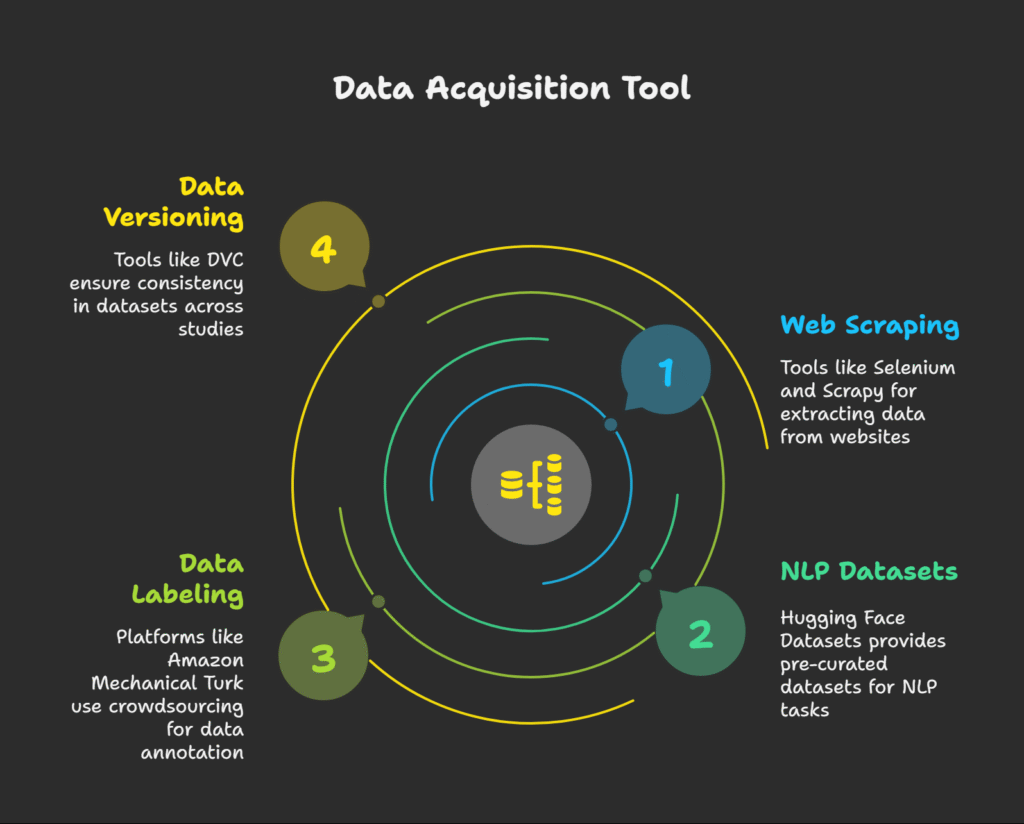

Tools for Data Acquisition

Common platforms and tools used during this stage include:

- Web scraping: Selenium, Scrapy, and BeautifulSoup.

- Pre-curated datasets: Hugging Face Datasets offers ready-to-download NLP datasets.

- Data labeling: Crowdsourcing platforms such as Amazon Mechanical Turk.

- Data versioning: Tools like DVC keep datasets consistent across experiments.
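The idea behind data versioning can be illustrated without any particular tool: compute a content hash of the dataset and record it alongside each experiment, so that any change to the data is detectable. The sketch below is not how DVC is used in practice; `dataset_fingerprint` is a hypothetical helper that only demonstrates content hashing in general.

```python
import hashlib
import json


def dataset_fingerprint(records: list[dict]) -> str:
    """Return a SHA-256 hex digest over the records in their given order."""
    digest = hashlib.sha256()
    for record in records:
        # sort_keys makes the serialization independent of dict key order.
        line = json.dumps(record, sort_keys=True, ensure_ascii=False)
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


v1 = [{"text": "I loved this phone.", "label": "positive"}]
v2 = [{"text": "I loved this phone.", "label": "negative"}]  # label changed

print(dataset_fingerprint(v1) == dataset_fingerprint(v1))  # same data, same hash
print(dataset_fingerprint(v1) == dataset_fingerprint(v2))  # changed data, different hash
```

Storing the fingerprint with each training run makes it possible to tell later whether two results were produced from identical data.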

Challenges in Data Acquisition

- Lack of data for low-resource languages or topics

- High annotation costs for complex tasks like coreference resolution or summarization

- Dataset class imbalance that results in biased model behavior

- Web content that is dynamic and may change or disappear over time
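Class imbalance is one of the easier challenges to measure. A minimal sketch, assuming a simple list of labels: count how often each class occurs and derive inverse-frequency weights that a training loop could use to give rare classes more influence. The `class_weights` helper and the sample labels are made up for this example, and weighting is only one of several possible remedies (resampling and collecting more minority-class data are others).

```python
from collections import Counter


def class_weights(labels):
    """Weight each class by total / (number_of_classes * class_count)."""
    counts = Counter(labels)
    total = len(labels)
    n_classes = len(counts)
    return {label: total / (n_classes * count) for label, count in counts.items()}


# Hypothetical, heavily skewed sentiment labels.
labels = ["positive"] * 8 + ["negative"] * 2
weights = class_weights(labels)
print(Counter(labels))
print(weights)
```

With this formula a perfectly balanced dataset gives every class a weight of 1.0, so values far from 1.0 are a quick signal that the acquired data is skewed.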

Best Practices

- Integrate several sources to increase diversity.

- Verify data quality using automated or manual checks.

- Periodically update datasets to reflect linguistic change in the real world.

- Use metadata (such as language, domain, and date) for better filtering and analysis.
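Two of these practices, combining sources and filtering on metadata, can be sketched together. The `Document` record, the `merge_sources` and `filter_docs` helpers, and the sample data are all hypothetical; deduplication here is a simple exact match on lowercased, whitespace-normalized text, whereas production pipelines often use near-duplicate detection.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Document:
    text: str
    source: str
    language: str
    domain: str
    collected: date


def merge_sources(*sources):
    """Combine several document lists, keeping the first copy of each text."""
    seen = set()
    merged = []
    for source in sources:
        for doc in source:
            key = " ".join(doc.text.lower().split())  # normalized text as key
            if key not in seen:
                seen.add(key)
                merged.append(doc)
    return merged


def filter_docs(docs, language=None, domain=None, since=None):
    """Keep documents matching every metadata criterion that was given."""
    return [
        d for d in docs
        if (language is None or d.language == language)
        and (domain is None or d.domain == domain)
        and (since is None or d.collected >= since)
    ]


news = [Document("Markets rallied today.", "news_site", "en", "finance", date(2024, 5, 1))]
forum = [
    Document("markets  rallied today.", "forum", "en", "finance", date(2024, 5, 2)),
    Document("Der Markt ist stabil.", "forum", "de", "finance", date(2023, 1, 10)),
]

corpus = merge_sources(news, forum)
recent_english = filter_docs(corpus, language="en", since=date(2024, 1, 1))
print(len(corpus), [d.source for d in recent_english])
```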

Conclusion

In NLP, data acquisition is more than just gathering a lot of text. It is a strategic process that calls for attention to ethics, legal compliance, quality, and relevance. A well-gathered dataset is the foundation of successful NLP systems and makes significant progress in understanding and generating human language possible.