A key idea in supervised machine learning, linear classification is frequently used in natural language processing applications such as text categorization.

What is Linear Classification?

Linear classification is a basic machine learning technique for classification tasks and is especially popular in natural language processing. The objective is to assign a given input instance a discrete label from a predetermined set of possible labels. The input instance is usually represented as a vector of features or measurements.

Linear classifiers have hyperplane decision boundaries. This boundary is a line in two-dimensional feature space, a plane in three, and a hyperplane in higher dimensions.

Classification problems come in two main forms:

- Binary classification: assigning one of two possible labels (such as spam or not spam, or a label from {-1, 1}).

- Multi-class classification: assigning one label from a set of more than two options (such as the topic of a news story from “sports,” “technology,” or “politics”).

How it Works

Linear classification is built on a linear scoring function, that is, a linear combination of the input features.

Input Representation

First, the input instance (for example, a text document) must be converted into a numerical feature vector. For text, this crucial stage often uses a Bag of Words or TF-IDF representation.
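As an illustration of this step, here is a minimal Bag of Words sketch. The vocabulary, the whitespace tokenization, and the function name `bag_of_words` are assumptions made for the example; real pipelines usually tokenize more carefully.

```python
from collections import Counter

def bag_of_words(text, vocabulary):
    """Map a text to a vector of word counts over a fixed vocabulary."""
    # Assumption: lowercase and split on whitespace as a stand-in tokenizer.
    counts = Counter(text.lower().split())
    return [counts[word] for word in vocabulary]

# Hypothetical toy vocabulary and document.
vocabulary = ["free", "money", "meeting", "today"]
vector = bag_of_words("Free money free today", vocabulary)
print(vector)  # [2, 1, 0, 1]
```

Word order is discarded: only the count for each vocabulary entry survives.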

Scoring Function

A real-valued scoring function f(x) is learned from the data. For linear classifiers, this function is linear in the features.

- A popular linear scoring function for binary classification is f(x) = wᵀx + b, where x is the input feature vector, w is a vector of weights, and b is a bias term. The range of this function is (-∞, +∞).

- One approach to multi-class classification is to assign a weight vector and bias to each class. More generally, the compatibility, or score, between the input x and a candidate label y is frequently calculated as the inner product θ · f(x, y). Here f(x, y) is a feature function that captures properties of the input x with respect to the candidate output y, and θ is the vector of weights. Each feature’s weight indicates its relative importance.
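The two scoring schemes can be sketched in a few lines. All weights, biases, and labels below are hypothetical values chosen for illustration, not learned parameters.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def binary_score(w, b, x):
    """Binary scoring function f(x) = w^T x + b."""
    return dot(w, x) + b

def multiclass_scores(weights, biases, x):
    """One weight vector and one bias per class; returns a score per label."""
    return {label: dot(weights[label], x) + biases[label] for label in weights}

# Hypothetical parameters and input.
x = [2, 1, 0]
score = binary_score([1.5, -2.0, 0.5], -0.5, x)  # 3.0 - 2.0 + 0.0 - 0.5

weights = {"sports": [1.0, 0.0, 0.5], "politics": [0.0, 1.0, 0.0]}
biases = {"sports": 0.0, "politics": 0.5}
scores = multiclass_scores(weights, biases, x)
print(score, scores)
```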

Decision Rule

- For binary classification, the sign of the scoring function usually determines the label. One example is assigning label +1 if f(x) > 0 and -1 otherwise. In log-linear binary classification, a squashing function such as the sigmoid may be used to map the score to a probability.

- For multi-class classification, the instance is given the label y that produces the highest score. One technique for multi-class problems is the “one-versus-all” strategy, in which each class is given its own binary classifier and the class whose classifier scores highest wins. In log-linear multi-class classification, the softmax function is frequently used to obtain probabilities.
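The decision rules above can be sketched as follows. The function names are illustrative, and the example scores are made up.

```python
import math

def predict_binary(score):
    """Sign rule: +1 if the score is positive, -1 otherwise."""
    return 1 if score > 0 else -1

def sigmoid(score):
    """Squash a real-valued score into (0, 1), written to avoid overflow."""
    if score >= 0:
        return 1.0 / (1.0 + math.exp(-score))
    e = math.exp(score)
    return e / (1.0 + e)

def predict_multiclass(scores):
    """Return the label with the highest score."""
    return max(scores, key=scores.get)

def softmax(scores):
    """Turn per-label scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {label: math.exp(s - top) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}

# Hypothetical scores for one input.
scores = {"sports": 2.0, "politics": 1.5, "technology": -1.0}
label = predict_multiclass(scores)
probabilities = softmax(scores)
print(label, probabilities)
```

Softmax preserves the ranking of the scores, so the most probable label is the same one the highest-score rule selects.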

Learning Process

Linear classifiers are constructed with supervised machine learning. The classifier is trained on a dataset of labelled instances {(x1, y1),…, (xn, yn)} in order to determine good weights and bias. The learning process chooses a classifier from a parameterized family by minimizing an objective function, such as the classification error or a particular loss function (like hinge loss or negative log-likelihood). Gradient descent and other optimization techniques are frequently employed. Performance should be assessed on separate test data. Regularization, which penalizes large weights, also affects performance and can reduce overfitting.
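As a rough sketch of this process, the code below runs batch gradient descent on an L2-regularized negative log-likelihood, with labels in {-1, +1}. The toy data, learning rate, regularization strength, and epoch count are all assumptions chosen for illustration.

```python
import math

def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def score(w, b, x):
    return sum(wj * xj for wj, xj in zip(w, x)) + b

def train_logistic(data, n_features, lr=0.5, l2=0.01, epochs=200):
    """Minimize mean log(1 + exp(-y * f(x))) + (l2 / 2) * ||w||^2."""
    w, b, n = [0.0] * n_features, 0.0, len(data)
    for _ in range(epochs):
        grad_w = [l2 * wj for wj in w]  # gradient of the L2 penalty
        grad_b = 0.0
        for x, y in data:
            # Derivative of log(1 + exp(-y * s)) with respect to the score s.
            g = -y * sigmoid(-y * score(w, b, x)) / n
            for j, xj in enumerate(x):
                grad_w[j] += g * xj
            grad_b += g
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def accuracy(w, b, data):
    hits = sum(1 for x, y in data if (1 if score(w, b, x) > 0 else -1) == y)
    return hits / len(data)

# Hypothetical training set and separate held-out set.
train = [([2.0, 0.0], 1), ([1.0, 0.0], 1), ([0.0, 1.0], -1), ([0.0, 2.0], -1)]
held_out = [([3.0, 0.0], 1), ([0.0, 3.0], -1)]
w, b = train_logistic(train, n_features=2)
print(accuracy(w, b, held_out))
```

Accuracy is reported on `held_out` rather than `train`, mirroring the advice to assess performance on separate test data.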

Features Used

The classifier uses features, which are measurements or attributes of the input. The choice of features has a fundamental impact on classification accuracy. Feature values must be encoded with simple types such as strings, integers, and Booleans. For text classification, the inputs are feature vectors extracted from the text’s content.

The following text feature representations are frequently used with linear classifiers:

- Bag of Words (BoW): Ignores order and represents text as a vector of word counts.

- Bag of N-Grams: Employs n-word or character sequences.

- TF-IDF: Weights words according to their frequency within a document and their inverse frequency across documents.

- Word Embeddings: Dense vector representations of words.

- One-Hot Encoding: A simple vectorisation technique that uses binary vectors to represent each distinct item (like a word).

- Lexical Features: Characteristics of words, like their forms, existence in lists (gazetteers), or the words themselves.

- Syntactic Features: Characteristics derived from the grammatical structure, like dependency arcs.

- Joint Features: Features can be defined more broadly over a pair of input (x) and candidate label (y), and may depend on both. In some settings (such as Maximum Entropy modelling), a feature combines information about the object’s class with its measurements.

A key component of the machine learning pipeline is feature engineering. Features can be as simple as a word’s final letter or as complicated as the results of another classifier.
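To make the TF-IDF weighting concrete, here is a minimal sketch. TF-IDF has several variants; this one assumes raw term counts multiplied by log(N / df), with whitespace tokenization, and the documents are invented for the example.

```python
import math
from collections import Counter

def tf_idf(documents):
    """Return one {word: weight} dict per document, using tf * log(N / df)."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: the number of documents that contain each word.
    df = Counter(word for tokens in tokenized for word in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vectors.append({w: tf[w] * math.log(n_docs / df[w]) for w in tf})
    return vectors

# Hypothetical toy corpus.
docs = ["the match was great", "the election was close", "the match ended"]
vectors = tf_idf(docs)
print(vectors[0])
```

A word such as "the" that occurs in every document gets weight zero, while a word unique to one document gets the largest weight.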

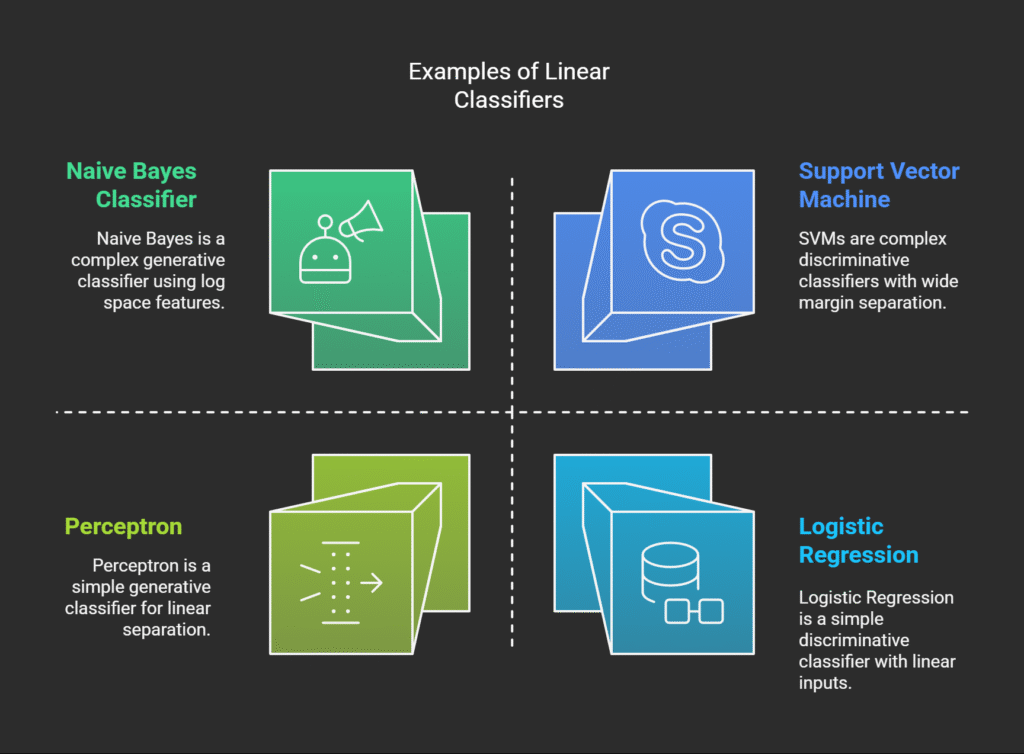

Examples of Linear Classifiers

A number of algorithms can be used as linear classifiers or produce them:

- Naive Bayes Classifier: When the computation is carried out in log space, the predicted class is a linear function of the input features. Naive Bayes is a generative classifier.

- Logistic Regression: Combines the inputs linearly and is trained by minimizing the regularized negative log-likelihood. It belongs to the family of generalized linear models and can be thought of as a log-linear model. Logistic regression is a discriminative classifier.

- Perceptron: A straightforward algorithm for linear classification. The algorithm updates the weights whenever it misclassifies a training example, and it will always find a separator if the data is linearly separable.

- Support Vector Machine (SVM): A popular linear classification technique that relies on finding a large-margin separating hyperplane. Its objective function includes a regularized margin loss. SVMs have been widely chosen for tasks like document relevance classification. Kernel SVMs can produce non-linear boundaries.
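Of the algorithms above, the perceptron is the simplest to write out in full. The sketch below assumes labels in {-1, +1}, treats a score of exactly zero as an error, and uses a made-up, linearly separable toy dataset.

```python
def train_perceptron(data, n_features, max_epochs=100):
    """Mistake-driven training. Returns (w, b, converged)."""
    w = [0.0] * n_features
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in data:
            score = sum(wj * xj for wj, xj in zip(w, x)) + b
            if y * score <= 0:  # wrong side of the boundary, or on it
                # Move the hyperplane toward classifying x correctly.
                w = [wj + y * xj for wj, xj in zip(w, x)]
                b += y
                mistakes += 1
        if mistakes == 0:  # a full pass with no errors: a separator was found
            return w, b, True
    return w, b, False

def predict(w, b, x):
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b > 0 else -1

# Hypothetical linearly separable data.
data = [([2.0, 1.0], 1), ([1.0, 3.0], -1), ([3.0, 1.0], 1), ([0.0, 2.0], -1)]
w, b, converged = train_perceptron(data, n_features=2)
print(w, b, converged)
```

The `max_epochs` cap is a practical safeguard: on data that is not linearly separable, the loop would otherwise never reach an error-free pass.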

A global linear model is a framework for discriminative learning techniques in which an input and a candidate output are jointly mapped to a feature vector, which is then scored with a weight vector. Sequence prediction methods make use of this framework.
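A minimal sketch of this idea follows, using the score θ · f(x, y) and returning the highest-scoring label. The feature function `joint_features`, which pairs each word with the candidate label, and the weights in `theta` are hypothetical choices for illustration. Real sequence models search over structured outputs rather than a short list of labels.

```python
from collections import Counter

def joint_features(x, y):
    """Hypothetical f(x, y): count each (word, candidate label) pair."""
    return Counter((word, y) for word in x.lower().split())

def score(theta, x, y):
    """Inner product theta . f(x, y) over sparse feature dictionaries."""
    features = joint_features(x, y)
    return sum(theta.get(name, 0.0) * value for name, value in features.items())

def predict(theta, x, labels):
    """Return the candidate label with the highest score."""
    return max(labels, key=lambda y: score(theta, x, y))

# Hypothetical weights; features absent from theta contribute zero.
theta = {("goal", "sports"): 1.0, ("team", "sports"): 0.8, ("vote", "politics"): 1.2}
labels = ["sports", "politics"]
print(predict(theta, "The team scored a goal", labels))
print(predict(theta, "Every vote counts", labels))
```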

Advantages and Disadvantages

- Advantages: If the data is linearly separable, linear classifiers such as the perceptron are guaranteed to find a separating solution. They frequently work well for text categorization, especially with high-dimensional feature spaces. Naive Bayes in particular is well known for its effectiveness.

- Disadvantages: Linear classifiers cannot solve problems that are not linearly separable; the exclusive-or (XOR) function is one example. Naive Bayes assumes that features are conditionally independent, which is frequently untrue in natural language. When certain linear classifiers (like Naive Bayes) are combined with techniques like Expectation-Maximization, the kinds of features that can be employed may be limited.
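The XOR limitation can be checked directly with a small perceptron. In the sketch below, training never completes an error-free pass on the four XOR points. Adding the product of the two inputs as a third feature is an illustrative assumption rather than something the text prescribes; it makes the same points linearly separable.

```python
def train_perceptron(data, n_features, max_epochs=1000):
    """Mistake-driven perceptron. Returns (w, b, converged)."""
    w = [0.0] * n_features
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in data:
            score = sum(wj * xj for wj, xj in zip(w, x)) + b
            if y * score <= 0:
                w = [wj + y * xj for wj, xj in zip(w, x)]
                b += y
                mistakes += 1
        if mistakes == 0:
            return w, b, True
    return w, b, False

# XOR with labels in {-1, +1}: positive when exactly one input is 1.
xor = [([0.0, 0.0], -1), ([0.0, 1.0], 1), ([1.0, 0.0], 1), ([1.0, 1.0], -1)]
_, _, xor_converged = train_perceptron(xor, n_features=2)

# Hypothetical engineered feature: append the product x1 * x2.
expanded = [(x + [x[0] * x[1]], y) for x, y in xor]
w, b, expanded_converged = train_perceptron(expanded, n_features=3)

print(xor_converged, expanded_converged)  # False True
```

The classifier remains linear in both runs; only the feature representation changes, which is why feature engineering matters so much for linear models.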

Text classification problems are commonly handled with statistical packages that implement these classification techniques. In several evaluations on such problems, support vector machines (SVMs) have outperformed decision trees, nearest neighbours, and Naive Bayes. Linear classifiers remain essential even with the emergence of deep learning (e.g., CNNs, LSTMs, Transformers).