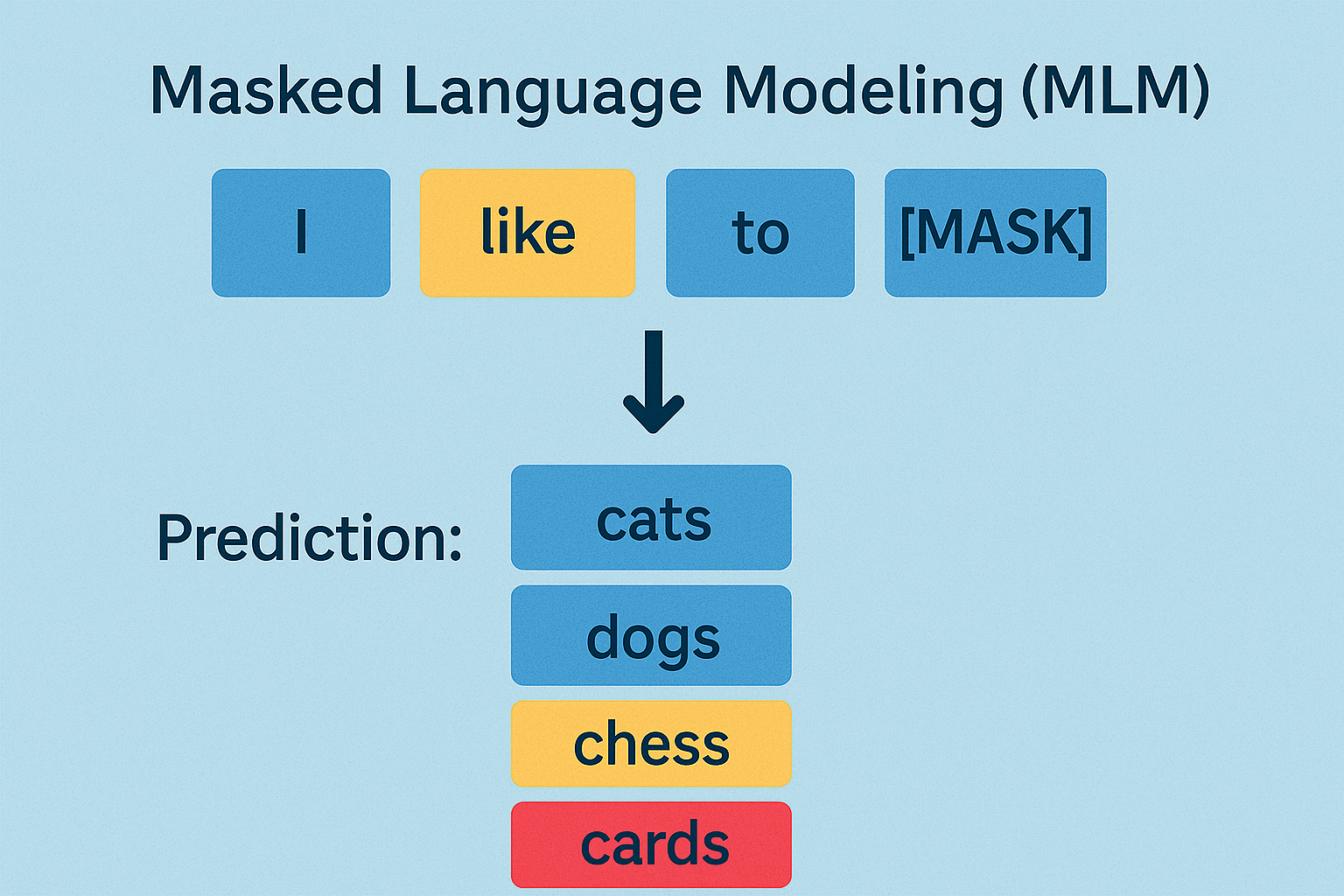

Masked Language Modelling (MLM)

Masked Language Modelling (MLM) is a pre-training objective in which a subset of the input tokens (words or subwords) in a sequence is “masked” (hidden), and the model is asked to predict the original tokens. The model must use the context supplied by the surrounding, unmasked words to infer the missing ones. MLM is one of the main pre-training objectives for models such as BERT, and it is the standard way bidirectional transformer encoders are trained.

Purpose: MLM is a pre-training technique that teaches transformer networks to build rich representations of human language. It is intended for training bidirectional encoders, which can see the complete input text at once, including the context to the left and right of any given token.

The Task: The model learns to solve a cloze task, a “fill-in-the-blank” challenge in which it predicts the tokens that have been masked, or hidden, within the input text.

How Masking Works: During pre-training, a random sample of tokens from the input text is selected for the learning task; typically 15% of the input tokens are selected. Each selected token is then handled in one of three ways:

- It is replaced with the special vocabulary token [MASK] (80% of the selected tokens in BERT).

- It is replaced with another token from the vocabulary, sampled at random according to token unigram probabilities (10%).

- It is left unchanged (the remaining 10%).

Tokenization usually relies on a subword model such as BPE (Byte-Pair Encoding), so masking is applied at the subword-token level, and the total number of selected tokens is limited to 15% of the input sequence.
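The selection and corruption steps can be sketched in a few lines of Python. This is a minimal illustration, not BERT's actual implementation: it assumes the text is already tokenized (a plain whitespace split stands in for a subword tokenizer) and applies BERT's published 80/10/10 split among the three options. The function name `mask_tokens` and its parameters are hypothetical.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, unigram_probs, mask_rate=0.15, rng=None):
    """Select mask_rate of the positions and corrupt them BERT-style.

    Returns (corrupted_tokens, targets), where targets maps each selected
    position to its original token, i.e. the label the model must predict.
    """
    rng = rng or random.Random()
    n_select = max(1, round(mask_rate * len(tokens)))
    positions = rng.sample(range(len(tokens)), n_select)
    corrupted = list(tokens)
    targets = {}
    for pos in positions:
        targets[pos] = tokens[pos]             # keep the original as the label
        r = rng.random()
        if r < 0.8:
            corrupted[pos] = MASK              # 80%: replace with [MASK]
        elif r < 0.9:
            # 10%: replace with a random token drawn by unigram probability
            corrupted[pos] = rng.choices(vocab, weights=unigram_probs, k=1)[0]
        # remaining 10%: leave the token unchanged
    return corrupted, targets

# Usage on a toy 20-token "sentence" (whitespace split, not a real tokenizer).
tokens = ("the cat sat on the mat because it was tired after chasing "
          "the dog around the garden all day long").split()
vocab = sorted(set(tokens))
probs = [tokens.count(w) for w in vocab]   # raw counts work as sampling weights
corrupted, targets = mask_tokens(tokens, vocab, probs, rng=random.Random(0))
```

Only the positions in `targets` contribute to the training loss; every other position is passed through untouched.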

Bidirectional Context: Because masked tokens can appear anywhere in the text, the model must draw on context from both the left and the right of each mask to predict the hidden token; this is why the approach is described as a bidirectional language model. Traditional language models, by contrast, process text only from left to right and predict each token from the tokens before it.
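One way to picture the difference is as an attention mask recording which positions each token may look at. The sketch below, using a hypothetical helper `attention_mask`, contrasts the full (bidirectional) mask of an MLM encoder with the lower-triangular (causal) mask of a left-to-right language model.

```python
def attention_mask(n, causal):
    """Return an n x n matrix where entry [i][j] is 1 if position i may attend to position j."""
    return [[1 if (not causal or j <= i) else 0 for j in range(n)] for i in range(n)]

# MLM-style encoder: every position sees the whole sequence, left and right.
bidirectional = attention_mask(4, causal=False)
# Left-to-right language model: position i sees only positions 0..i.
left_to_right = attention_mask(4, causal=True)

for row_b, row_c in zip(bidirectional, left_to_right):
    print(row_b, row_c)
```

With the bidirectional mask, a token masked at position 1 can still draw on positions 2 and 3; with the causal mask it cannot.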

The Process of Learning:

- The input sequence, with some tokens replaced or hidden, is passed to the model. Its input embeddings combine token and positional embeddings (plus segment embeddings for tasks such as Next Sentence Prediction) and are processed by a stack of bidirectional transformer blocks.

- For each sampled token (masked, substituted, or unchanged), the output vector from the final transformer layer is fed into a classifier.

- The task is to predict which subword token from the vocabulary belongs at that position, so the classifier is multi-class, with one class per vocabulary token.

- The classifier usually ends in a softmax layer, which produces a probability distribution over all vocabulary tokens.

- The model is trained with the standard cross-entropy loss, which maximizes the probability assigned to the correct original token.

- The gradients for the weight update are computed from the average loss over the sampled tokens in a training sequence or batch.
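The classifier and loss steps above can be sketched for a single sequence in plain Python. This assumes the final-layer output vectors are already available as lists of floats and that the classifier is a single linear layer followed by a softmax; `classifier_logits`, `mlm_loss`, and the toy numbers are illustrative, not taken from any library. Only the sampled positions contribute to the loss.

```python
import math

def classifier_logits(h, W, b):
    """Linear layer: one score per vocabulary id. h has length d, W is d x V, b has length V."""
    return [sum(h[k] * W[k][v] for k in range(len(h))) + b[v] for v in range(len(b))]

def log_softmax(logits):
    """Log-probabilities over the vocabulary (max subtracted for numerical stability)."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]

def mlm_loss(hidden, W, b, targets):
    """Mean cross-entropy over the sampled positions only.

    hidden:  list of final-layer output vectors, one per input position
    targets: {position: id of the original token} for the sampled tokens
    """
    losses = []
    for pos, gold_id in targets.items():
        log_probs = log_softmax(classifier_logits(hidden[pos], W, b))
        losses.append(-log_probs[gold_id])      # -log P(original token)
    return sum(losses) / len(losses)            # average over sampled tokens

# Toy example: 3 positions, hidden size d = 2, vocabulary of 4 ids.
hidden = [[0.5, -1.0], [1.0, 0.2], [-0.3, 0.8]]
W = [[0.2, -0.1, 0.4, 0.0],
     [0.3, 0.5, -0.2, 0.1]]
b = [0.0, 0.0, 0.0, 0.0]
loss = mlm_loss(hidden, W, b, targets={0: 2, 2: 1})  # positions 0 and 2 were sampled
```

Subtracting the maximum logit before exponentiating keeps the softmax numerically stable without changing its result.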

Unsupervised Nature: MLM pre-training needs no expert labelling and relies only on large volumes of unannotated text, so it is often described as an unsupervised method (more precisely, self-supervised, since the labels come from the text itself).

Variations: The idea of corrupting the input and asking the model to restore it can be generalized. One variant is span-based masking, in which a span (a contiguous sequence of tokens) is masked rather than individual tokens. The motivation is that many NLP applications involve identifying and classifying phrases and other multi-token units.
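A span-masking variant can be sketched in the same style. Here span lengths are drawn uniformly from 1 to `max_span`, an arbitrary choice made for illustration (published span-masking schemes such as SpanBERT use a different length distribution), and every token in a chosen span is replaced by [MASK]. The helper `span_mask` is hypothetical.

```python
import random

def span_mask(tokens, mask_rate=0.15, max_span=3, rng=None):
    """Mask contiguous spans until mask_rate of the tokens (rounded) are covered.

    Span lengths are drawn uniformly from 1..max_span, an illustrative choice.
    Returns the corrupted tokens and the sorted list of masked positions.
    """
    rng = rng or random.Random()
    budget = max(1, round(mask_rate * len(tokens)))
    masked = set()
    while len(masked) < budget:
        length = min(rng.randint(1, max_span), budget - len(masked))  # never exceed the budget
        start = rng.randrange(0, len(tokens) - length + 1)
        masked.update(range(start, start + length))                   # one contiguous span
    corrupted = ["[MASK]" if i in masked else tok for i, tok in enumerate(tokens)]
    return corrupted, sorted(masked)

tokens = [f"w{i}" for i in range(20)]
corrupted, positions = span_mask(tokens, rng=random.Random(0))
```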

Importance: MLM is central to BERT and related models because it produces rich, context-dependent representations of word meaning. These learned representations make it easier to fine-tune the models for a range of downstream Natural Language Understanding (NLU) tasks.

Next Sentence Prediction (NSP)

Next Sentence Prediction (NSP) is a pre-training method for language models, specifically for bidirectional transformer encoders such as BERT and its descendants. BERT uses NSP as a pre-training task to help the model understand how sentences relate to one another, which benefits applications such as dialogue systems, summarization, and question answering. The goal is to determine whether a given second sentence actually follows the first.

For instance:

- Sentence A: “She opened the door.”

- Sentence B: “She saw her friend standing there.”

Because Sentence B follows Sentence A here, the label is 1 (consecutive). If Sentence B were an unrelated sentence, such as “The sky was blue.”, the pair would be labelled 0 (non-consecutive).
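In BERT, a sentence pair is packed into one input sequence of the form [CLS] A [SEP] B [SEP], with segment ids distinguishing the two sentences. The sketch below builds that input for the example above; it splits on whitespace purely for illustration (BERT itself uses a subword tokenizer), and `build_nsp_input` is a hypothetical name.

```python
def build_nsp_input(sent_a, sent_b):
    """Pack two sentences as [CLS] A [SEP] B [SEP] with matching segment ids.

    Whitespace splitting stands in for a real subword tokenizer.
    """
    a, b = sent_a.split(), sent_b.split()
    tokens = ["[CLS]"] + a + ["[SEP]"] + b + ["[SEP]"]
    # Segment 0: [CLS], sentence A and its [SEP]; segment 1: sentence B and the final [SEP].
    segment_ids = [0] * (len(a) + 2) + [1] * (len(b) + 1)
    return tokens, segment_ids

examples = [
    ("She opened the door.", "She saw her friend standing there.", 1),  # consecutive
    ("She opened the door.", "The sky was blue.", 0),                   # non-consecutive
]
encoded = [(build_nsp_input(a, b), label) for a, b, label in examples]
```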

The aim of the NSP task is to train a model to predict whether a given pair of sentences were originally adjacent in the training corpus or are unrelated. By learning to distinguish true continuations from random ones, the model captures information about the relationships between sentences. This information is useful for downstream applications that require an understanding of sentence-level relationships, such as:

- Paraphrase detection: determining whether two sentences have similar meanings.

- Textual entailment: determining whether the meaning of one sentence implies or contradicts the meaning of another.

- Discourse coherence: determining whether two adjacent sentences form a coherent text.

Next Sentence Prediction using BERT

Here is a more detailed breakdown of how Next Sentence Prediction works in models like BERT:

- During training, the model is given pairs of sentences.

- These pairs are built in two ways:

  - Positive examples: two sentences that actually appear next to each other in the training corpus.

  - Negative examples: a sentence followed by an unrelated sentence chosen at random from the corpus.

- BERT's training data uses a 1:1 ratio of positive to negative pairs: 50% genuinely adjacent pairs and 50% random pairs.

- The two sentences are combined into a single input sequence that begins with a special [CLS] token; a [SEP] token follows each sentence, and segment embeddings indicate which sentence each token belongs to.

- The transformer encoder processes the complete sequence and produces a contextualized embedding for every token, including [CLS].

- Through self-attention, the [CLS] embedding implicitly gathers information from the entire input (both sentences).

- This [CLS] embedding is passed to a binary classifier, which is trained to predict whether the sentence pair is a positive or a negative example.

- During training, an NSP loss (cross-entropy over the two classes) measures how well the model separates true pairs from random pairs.
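The pair-construction step can be sketched as follows. It assumes the corpus is a simple ordered list of sentences and draws negatives from that same list; a real pipeline would typically sample the random sentence from elsewhere in the corpus (for example, a different document). `make_nsp_pairs` is a hypothetical helper, and the labels follow this article's convention (1 = consecutive, 0 = random).

```python
import random

def make_nsp_pairs(sentences, rng=None):
    """Build (sentence_a, sentence_b, label) triples from an ordered list of sentences.

    label 1: B is the sentence that really follows A (positive example)
    label 0: B is a randomly chosen other sentence (negative example)
    Each pair is positive with probability 0.5, giving roughly a 1:1 ratio.
    Needs at least 3 sentences so that a negative candidate always exists.
    """
    rng = rng or random.Random()
    pairs = []
    for i in range(len(sentences) - 1):
        a = sentences[i]
        if rng.random() < 0.5:
            pairs.append((a, sentences[i + 1], 1))
        else:
            # Exclude A itself and its true successor from the random choice.
            candidates = [k for k in range(len(sentences)) if k not in (i, i + 1)]
            pairs.append((a, sentences[rng.choice(candidates)], 0))
    return pairs

corpus = [f"Sentence {n}." for n in range(10)]
pairs = make_nsp_pairs(corpus, rng=random.Random(1))
```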

Because it uses only raw text from a corpus, this pre-training task is “unsupervised” in the sense that it needs no annotations beyond the text's natural sentence order. NSP is usually trained together with another pre-training objective, such as masked language modelling, to produce robust representations that transfer to a variety of downstream NLP tasks.
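The NSP head and the joint objective can be sketched in plain Python. This assumes the final-layer [CLS] vector is available as a list of floats and that the head is a single linear layer producing two logits; in the BERT paper the pre-training loss is the sum of the MLM and NSP losses, which `combined_loss` mirrors. All names and numbers here are illustrative.

```python
import math

def nsp_loss(cls_vec, W, b, label):
    """Cross-entropy of a 2-way classifier on the [CLS] embedding.

    cls_vec: final-layer [CLS] vector (length d)
    W: d x 2 weight matrix, b: length-2 bias; label: 1 = consecutive, 0 = random
    """
    logits = [sum(cls_vec[k] * W[k][c] for k in range(len(cls_vec))) + b[c] for c in range(2)]
    m = max(logits)                                   # for numerical stability
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return -(logits[label] - log_z)                   # -log P(correct label)

def combined_loss(mlm_loss_value, nsp_loss_value):
    # BERT-style joint objective: the two losses are simply added.
    return mlm_loss_value + nsp_loss_value

cls_vec = [0.4, -0.2, 0.9]
W = [[0.5, -0.5], [0.1, 0.3], [-0.2, 0.6]]
b = [0.0, 0.0]
l_nsp = nsp_loss(cls_vec, W, b, label=1)
total = combined_loss(2.3, l_nsp)  # 2.3 stands in for an MLM loss computed elsewhere
```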