What is Semantics in NLP?

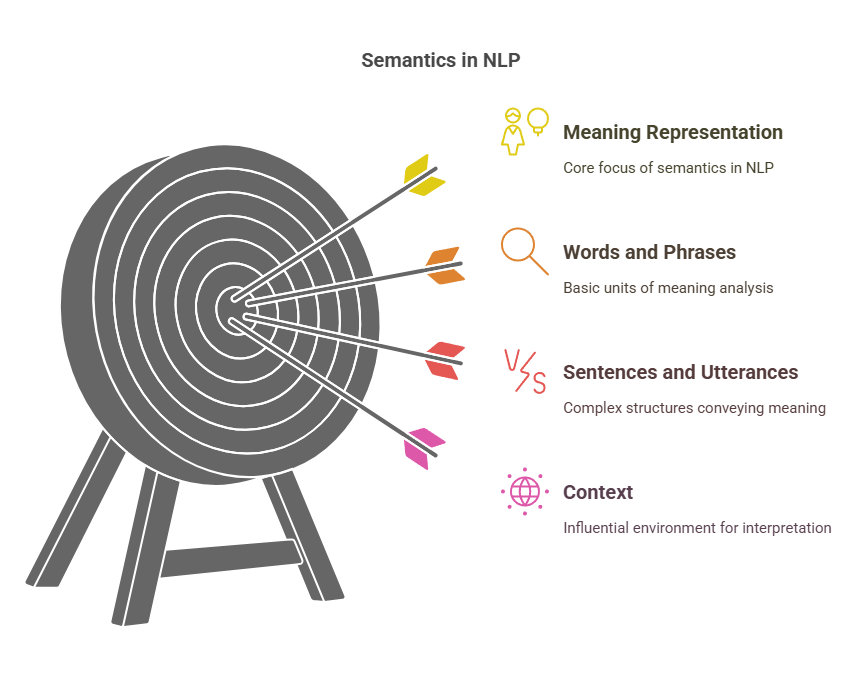

Semantics is the study of the meaning of words, sentences, and constructions. Semantic analysis in NLP focusses on meaning representation: words, fixed phrases, whole sentences, and utterances in context are all analysed for meaning. In this process, expressions in the original language are translated into a semantic metalanguage or a comparable representational system. The analysis takes into account the sentence’s intent, its underlying meaning, and the relationships between its parts.

Lexical semantics and supralexical (combinational, or compositional) semantics are the two primary categories into which semantics is traditionally separated.

Lexical Semantics

- Lexical semantics is concerned with the meanings of individual words and fixed word combinations.

- Like dictionary definitions, it starts by examining the meanings of individual terms.

- Words can be thought of as either a string in text (for example, “delivers”) or as a more abstract object, such as a lexeme or lemma, which is a cover word for a group of strings (for example, DELIVER names the set {delivers, deliver, delivering, delivered}). The citation form is another name for the lemma. Wordforms are particular forms such as “sung” or “mice”.

- Lemmatisation, which maps morphological variants to their lemma, is a fundamental process frequently carried out during lexical analysis. Invariant syntactic and semantic information is attached to the lemma. For instance, “delivers” may be represented by the item DELIVER together with morphosyntactic attributes such as {3rd, Sg, Present}.

- Word senses, or simply senses, are the different meanings that a lemma can have. A word sense is a discrete representation of one aspect of a word’s meaning. Polysemy is the phenomenon of a word having several meanings, and it can make interpretation difficult. Dictionaries typically provide discrete lists of senses. Criteria for treating senses as distinct include different truth conditions, different syntactic behaviour, independent sense relations, and antagonistic meanings. When the senses are unrelated, the phenomenon is known as homonymy.

- Lexical semantics also examines the relationships between word meanings, known as sense relations. Important relations include:

  - Synonymy: two terms with different forms but the same or nearly the same meaning.

  - Antonymy: words with opposite meanings, such as “hot” and “cold”.

  - Hypernymy and hyponymy: “IS-A” or taxonomic relationships. A hypernym (or hyperonym) is the more general term; for example, animal is a hypernym of cat. A hyponym is the more specific term; cat is a hyponym of animal.

  - Meronymy: the relationship between part and whole (for example, tyre is a meronym of automobile). The whole is called the holonym.

- The process of identifying which sense of a polysemous word is being used in a given context is known as word sense disambiguation, or WSD. Dictionary-based approaches as well as supervised, unsupervised, or semi-supervised learning strategies can be used for this. “One sense per discourse” is a popular heuristic.

- Semantic roles, often referred to as thematic roles, are another area of study in lexical semantics. These roles define the connections between a predicate (such as a verb) and its arguments (such as noun phrases). In “The boy ate the apple,” for instance, “boy” may be the AGENT and “apple” the THEME.

- The constraints that a predicate places on the semantic properties of its arguments are known as selectional restrictions. For instance, the verb “eat” requires an edible object as its THEME.

- Multiword expressions (MWEs), which are common phrases whose attributes, particularly semantics, are unpredictable from their component words (e.g., “top dog”), are also granted lexical status.
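The lexical notions above (wordforms, lemmas, senses, and word sense disambiguation) can be sketched in a few lines of Python. This is only a minimal illustration, not a real lexicon: the `LEXICON` and `SENSES` tables, the sense labels, and the helper functions are all invented for this example. The disambiguation step is a simplified variant of the dictionary-based Lesk idea, which picks the sense whose gloss shares the most words with the context.

```python
# Toy lexicon: every entry below is an illustrative assumption, not real dictionary data.

# Wordform -> (lemma, morphosyntactic attributes)
LEXICON = {
    "delivers": ("DELIVER", {"3rd", "Sg", "Present"}),
    "delivered": ("DELIVER", {"Past"}),
    "bank": ("BANK", {"Sg"}),
    "banks": ("BANK", {"Pl"}),
}

# Lemma -> {sense label: gloss}
SENSES = {
    "BANK": {
        "bank/finance": "an institution that accepts deposits and lends money",
        "bank/river": "sloping land beside a river or other body of water",
    },
}

STOPWORDS = {"a", "an", "the", "of", "or", "and", "that", "to", "in", "on", "at", "by"}


def lemmatise(wordform):
    """Map a wordform to (lemma, attributes); unknown forms map to themselves."""
    return LEXICON.get(wordform.lower(), (wordform.upper(), set()))


def content_words(text):
    """Lower-case, split on whitespace, strip punctuation, drop stopwords."""
    words = (w.strip(".,;:!?\"'") for w in text.lower().split())
    return {w for w in words if w and w not in STOPWORDS}


def simplified_lesk(wordform, context):
    """Return the sense whose gloss overlaps most with the context.

    Ties go to the sense listed first; returns None if the lemma has no senses.
    """
    lemma, _ = lemmatise(wordform)
    senses = SENSES.get(lemma)
    if not senses:
        return None
    context_words = content_words(context)
    return max(senses, key=lambda s: len(content_words(senses[s]) & context_words))


print(lemmatise("delivers"))
print(simplified_lesk("bank", "She sat on the bank of the river and watched the water"))
print(simplified_lesk("bank", "The bank lends money to small businesses"))
```

With these toy glosses, the first context selects the river sense and the second the financial sense. A practical system would draw its senses and glosses from a resource such as WordNet and would usually rely on a trained model rather than raw word overlap.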

Compositional Semantics

- Phrasal semantics, also known as compositional semantics, is the study of sentence and phrase meaning.

- The fundamental concept is compositionality: the meaning of a phrase or sentence is built from the meanings of its constituent parts and the way they are syntactically combined.

- The objective is to use formal structures known as meaning representations to capture the meaning of linguistic utterances. Unlike natural language, these representations should be unambiguous.

- In addition, meaning representation techniques need to facilitate computational inference, connect language to outside information, observations, and actions, and be expressive enough to cover a broad variety of topics.

- Logical forms can be used to represent meanings. Predicate logic, in particular first-order logic, is an example of a formal system used for this purpose.

- Event and state structures are frequently captured in representations.

- Semantic parsing is the process of translating sentences into meaning representations, using formal tools such as the lambda calculus.

- Semantic analysis typically comes after syntactic analysis in the NLP pipeline and frequently operates on the underlying syntactic representation of sentences. The interdependence of word-level semantics and grammatical semantics is increasingly acknowledged, with some linguists favouring the term lexicogrammar.
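Compositionality can be illustrated with a small sketch in which word meanings are lambda terms and the only composition rule is function application. Everything here is an assumption made for illustration: the `MEANINGS` table, the tuple encoding of syntax trees, and the string rendering of logical forms are not taken from any particular semantic parser.

```python
# Word meanings as lambda terms (all entries are illustrative assumptions).
# Proper names denote constants; verbs denote curried functions over their arguments.
MEANINGS = {
    "Mary": "mary",
    "John": "john",
    "sleeps": lambda subj: f"sleep({subj})",
    "loves": lambda obj: lambda subj: f"love({subj}, {obj})",
}


def apply(function, argument):
    """Function application: the single composition rule used in this sketch."""
    return function(argument)


def interpret(tree):
    """Compute the meaning of a binary syntax tree bottom-up.

    A tree is either a word (str) or a pair (left, right). At each branch,
    whichever daughter denotes a function is applied to the other daughter.
    """
    if isinstance(tree, str):
        return MEANINGS[tree]
    left, right = (interpret(child) for child in tree)
    if callable(left):
        return apply(left, right)
    return apply(right, left)


# [S [NP John] [VP [V loves] [NP Mary]]]
print(interpret(("John", ("loves", "Mary"))))  # love(john, mary)
print(interpret(("Mary", "sleeps")))           # sleep(mary)
```

The meaning of the whole sentence is determined entirely by the meanings of its words and the shape of the tree, which is the compositionality principle in miniature. The output strings resemble first-order logic atoms; a fuller treatment would also need quantifiers, events, and type checking.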

Resources and Challenges

- The lemma and morphosyntactic information produced by lexical analysis link to the semantic information stored in lexicons, or machine-readable dictionaries.

- WordNet and other lexical relation databases are valuable tools for representing word senses and lexical relations such as synonymy, hypernymy, and meronymy. Although it is a lexical resource, WordNet is occasionally referred to as an ontology, which is a separate notion involving networks of conceptualisations. Ontologies can be used to define meanings in approaches such as Ontological Semantics.

- Semantic roles and predicate-argument structures are described by resources such as FrameNet and PropBank.

- Corpora, that is, large text collections, provide evidence for semantic analysis through usage, collocation, and frequency patterns.

- Semantics faces difficult problems, especially in lexical semantics and in cross-linguistic settings. The complexity and cross-linguistic variability of lexical semantics have been underestimated. There is also less consensus in linguistic semantics than in other subfields of linguistics.

- A key challenge is handling ambiguity at both the word level (word sense ambiguity, or polysemy) and the structural level. Semantic analysis should be able to resolve word ambiguities in context and disambiguate ambiguous sentences. It should also recognise syntactically well-formed but meaningless sentences such as “Colourless green ideas sleep furiously”.

- Integrating linguistic knowledge with cultural conventions and real-world knowledge is important but difficult.
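One way to flag sentences that are grammatical but semantically odd, such as “Colourless green ideas sleep furiously”, is to check a predicate’s selectional restrictions against a taxonomy of hypernymy relations. The sketch below assumes a tiny hand-written taxonomy and restriction table; the concept names and the `violations` helper are hypothetical and are not drawn from WordNet, FrameNet, or PropBank.

```python
# Toy IS-A taxonomy (hyponym -> hypernym); an illustrative assumption, not WordNet data.
HYPERNYM = {
    "apple": "food",
    "food": "physical_object",
    "boy": "person",
    "person": "animate_being",
    "cat": "animal",
    "animal": "animate_being",
    "animate_being": "physical_object",
    "idea": "abstraction",
}

# Predicate -> {semantic role: required class of the filler} (hypothetical entries).
SELECTIONAL_RESTRICTIONS = {
    "eat": {"AGENT": "animate_being", "THEME": "food"},
    "sleep": {"AGENT": "animate_being"},
}


def is_a(concept, ancestor):
    """True if `ancestor` is reachable from `concept` by following hypernym links."""
    while concept is not None:
        if concept == ancestor:
            return True
        concept = HYPERNYM.get(concept)
    return False


def violations(predicate, arguments):
    """List the roles whose fillers break the predicate's selectional restrictions."""
    restrictions = SELECTIONAL_RESTRICTIONS.get(predicate, {})
    return [
        role
        for role, required in restrictions.items()
        if role in arguments and not is_a(arguments[role], required)
    ]


print(violations("eat", {"AGENT": "boy", "THEME": "apple"}))  # []
print(violations("sleep", {"AGENT": "idea"}))                 # ['AGENT']
```

Hard constraints like these are brittle, since metaphor and figurative language routinely violate them, so practical systems tend to treat selectional restrictions as soft preferences estimated from corpora.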

Semantic analysis is essential for the many NLP applications that need a deeper understanding of meaning and context, including machine translation (MT), information extraction, question answering, dialogue systems, and text summarization. In MT, for instance, semantic representations can serve as an interlingua, and lexical semantics can be accessed through the lemma dictionary.