Efficient Problem Solving in DSA

Data structures and algorithms are at the heart of computer science. They describe what computers can do, how they carry out tasks, and where they struggle. Put simply, a data structure is a systematic way to organise and retrieve data, whereas an algorithm is a step-by-step procedure for completing a task in a finite amount of time. The two ideas are complementary and interconnected, and most programs depend on both to work correctly. By learning the strengths and weaknesses of each, programmers can choose better solutions when building new applications. A strong foundation in both also makes it easier to write quality code and to understand new frameworks.

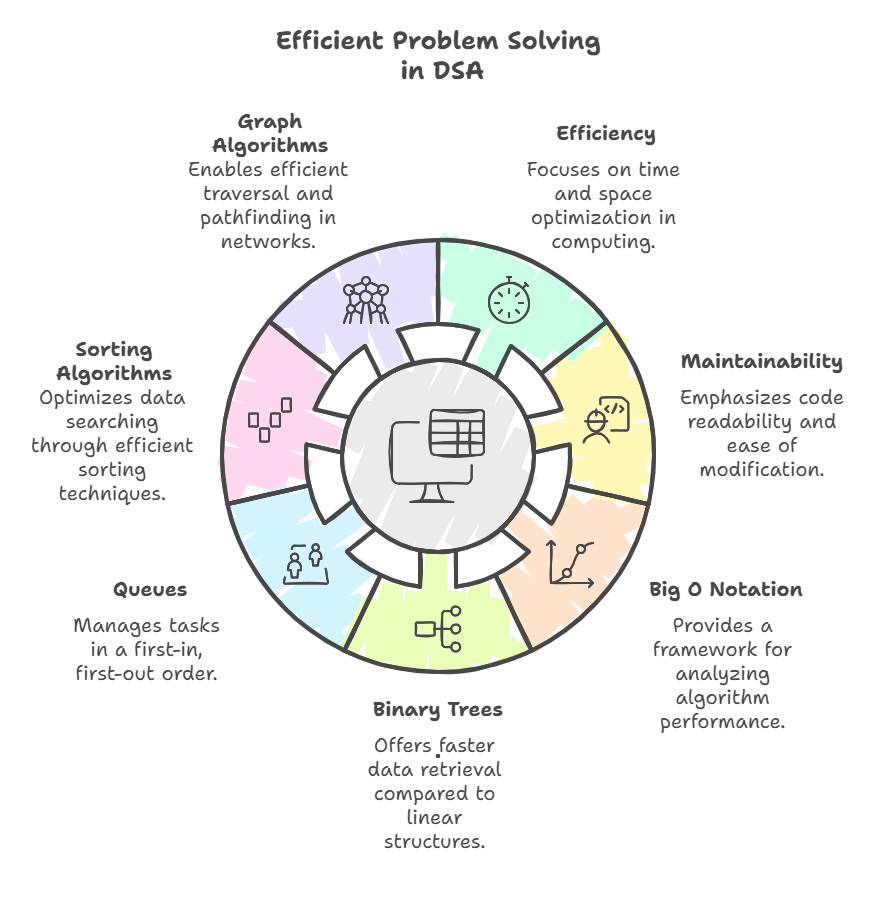

Importance for Efficient Problem Solving

Data structures and algorithms matter chiefly because they directly affect a program's efficiency and maintainability. Choosing the wrong data structure or algorithm can cause serious performance problems, particularly in real-time systems.

Efficiency: Mostly concerns how long a computation takes, but also covers how much space or memory it needs.

Maintainability: How easily other programmers (or you, later) can understand and modify your programs.

Understanding how data is organised, and how that organisation is paired with suitable algorithms, strongly affects what can be computed with a given amount of computing resources. For this reason, computer engineers are expected to be proficient at collecting, organising, and retrieving information efficiently using data structures.

The Role of Big O Notation

To classify algorithms and data structures as “good”, we need precise methods of analysis. Big O notation is the main analytical tool: it gives an abstract measure of performance that is independent of hardware and implementation details, so algorithms can be compared without timing them on a particular machine. It describes how the running time of algorithms and data structure operations grows, and space usage can be described in the same way.

Big O notation describes how an algorithm’s running time grows with the input size n. The following functions appear frequently in algorithm analysis, listed from slowest-growing (fastest algorithms) to fastest-growing (slowest algorithms):

Constant (O(1)): The running time does not depend on the input size. For example, adding a node to the tail of a linked list takes constant time if the list always keeps a pointer to its tail. Ideally, data structure operations should run in constant time.
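To illustrate the tail-pointer example, here is a minimal sketch of a singly linked list whose `append` runs in constant time because it never walks the list. The class names `Node` and `LinkedList` are hypothetical, chosen for illustration only.

```python
class Node:
    """A singly linked list node holding one value (hypothetical helper)."""
    def __init__(self, value):
        self.value = value
        self.next = None


class LinkedList:
    """Singly linked list that always keeps a pointer to its last node."""
    def __init__(self):
        self.head = None
        self.tail = None
        self.size = 0

    def append(self, value):
        # O(1): no traversal is needed because self.tail already
        # points at the last node.
        node = Node(value)
        if self.tail is None:      # empty list: new node is head and tail
            self.head = node
        else:
            self.tail.next = node
        self.tail = node
        self.size += 1

    def to_list(self):
        # O(n): walks every node once; used here only for display.
        items, current = [], self.head
        while current is not None:
            items.append(current.value)
            current = current.next
        return items


lst = LinkedList()
for v in (3, 1, 4):
    lst.append(v)
print(lst.to_list())  # [3, 1, 4]
```

Without the `tail` pointer, each append would have to walk from `head` to the end, making it O(n).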

Logarithmic (O(log n)): As the input size rises, the time increases relatively slowly. For data structure operations, this is ideal.
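Logarithmic growth typically comes from repeatedly halving the problem. A short derivation, assuming each step discards half of the remaining n items, shows why a million items need only about 20 steps:

```latex
\frac{n}{2^k} \le 1 \iff k \ge \log_2 n,
\qquad
2^{19} = 524\,288 < 10^6 \le 1\,048\,576 = 2^{20}
\;\Rightarrow\; \lceil \log_2 10^6 \rceil = 20.
```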

Linear (O(n)): The running time grows in direct proportion to the input size. Linear-time algorithms are generally regarded as efficient.

N-log-N (O(n log n)): Grows somewhat faster than linear but much more slowly than quadratic. Many important algorithms run in this time, including the fastest general-purpose (comparison-based) sorting algorithms.

Quadratic (O(n^2)): The running time grows with the square of the input size, so doubling n roughly quadruples the time. Quadratic algorithms become impractical for large inputs. Bubble sort is an example of an O(n^2) algorithm.
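A minimal sketch of bubble sort shows where the quadratic cost comes from: two nested loops over the list, giving about n(n-1)/2 comparisons in the worst case. The early-exit `swapped` flag is a common optimisation, not something the text above requires.

```python
def bubble_sort(values):
    """Return a sorted copy of values using bubble sort (O(n^2) comparisons)."""
    items = list(values)          # work on a copy, leave the input unchanged
    n = len(items)
    for i in range(n - 1):
        swapped = False
        # The last i items are already in their final positions,
        # so each pass can stop i items earlier.
        for j in range(n - 1 - i):
            if items[j] > items[j + 1]:
                items[j], items[j + 1] = items[j + 1], items[j]
                swapped = True
        if not swapped:           # no swaps in a pass: the list is sorted
            break
    return items


print(bubble_sort([5, 2, 9, 1, 5, 6]))  # [1, 2, 5, 5, 6, 9]
```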

Cubic (O(n^3)): The running time grows with the cube of the input size, so doubling n multiplies the time by about eight. Such algorithms should be used, if at all, only on fairly small inputs.
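A classic cubic-time example, not named in the text above but standard, is naive multiplication of two n x n matrices. The sketch below assumes square matrices stored as Python lists of lists; the function name `matmul` is illustrative.

```python
def matmul(a, b):
    """Multiply two n x n matrices (lists of lists) with the naive triple loop.

    Three nested loops each run n times, so the work is O(n^3).
    """
    n = len(a)
    result = [[0] * n for _ in range(n)]
    for i in range(n):            # each row of a
        for j in range(n):        # each column of b
            total = 0
            for k in range(n):    # dot product of row i and column j
                total += a[i][k] * b[k][j]
            result[i][j] = total
    return result


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```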

Exponential (O(a^n), for a constant a > 1): The running time grows exponentially with the input size. Avoid these algorithms whenever possible, since they are usually impractical for all but the smallest inputs.
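To make these growth rates concrete, the following sketch evaluates each function at a few input sizes, taking a = 2 for the exponential case (an assumption; any constant a > 1 behaves similarly). The numbers are abstract step counts, not measured running times.

```python
import math

# Growth functions in order from slowest-growing to fastest-growing.
GROWTH = [
    ("O(1)", lambda n: 1),
    ("O(log n)", lambda n: math.log2(n)),
    ("O(n)", lambda n: n),
    ("O(n log n)", lambda n: n * math.log2(n)),
    ("O(n^2)", lambda n: n ** 2),
    ("O(n^3)", lambda n: n ** 3),
    ("O(2^n)", lambda n: 2 ** n),
]

for n in (10, 20, 40):
    # Round each value to a whole number of "steps" for display.
    row = ", ".join(f"{name}={f(n):,.0f}" for name, f in GROWTH)
    print(f"n={n}: {row}")
```

Going from n = 20 to n = 40 doubles the linear count but multiplies the exponential count by about a million.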

Comparing these growth rates shows why well-designed algorithms matter. An asymptotically faster algorithm will eventually outperform an asymptotically slower one, even if the faster one has a worse constant factor. No constant-factor hardware speedup can make up for an asymptotically slow algorithm once inputs are large enough. It is therefore important to consider both the running time and the space requirements of the approach you choose.
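The constant-factor argument can be checked with hypothetical cost models: a linear algorithm costing 100·n steps and a quadratic one costing n·n steps. The `speedup` parameter is an assumption that models running the quadratic algorithm on hardware that is that many times faster. A speedup only moves the crossover point; it never removes it.

```python
def crossover(speedup=1, limit=1_000_000):
    """Smallest n at which a 100*n algorithm beats an n*n algorithm.

    `speedup` models running the quadratic algorithm on faster hardware,
    so its cost becomes n*n / speedup. Returns None if no n <= limit works.
    """
    for n in range(1, limit + 1):
        # Compare 100*n < n*n / speedup using integers only.
        if 100 * n * speedup < n * n:
            return n
    return None


print(crossover())            # 101
print(crossover(speedup=10))  # 1001
```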

How Data Structures and Algorithms Enhance Efficiency

Data structures and algorithms are chosen based on an analysis of the problem, taking into account factors such as the kinds of data involved, how often each operation is performed, and how much data there is.

Binary Trees: Compared with linear structures such as array-based lists or linked lists, binary search trees support substantially faster algorithms, which makes them a major advance in data organisation. Finding an item in an unordered array or linked list of one million elements takes about 500,000 comparisons on average (O(n)), whereas a balanced binary search tree of the same size needs only about 20 comparisons (O(log n)). Binary search on a sorted array achieves the same O(log n) bound and is a very effective way to find values in large datasets.
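A minimal sketch of the balanced-tree claim: build a binary search tree from sorted keys by repeatedly choosing the middle key (one simple way to get a balanced tree, assumed here for illustration), then count how many nodes a search visits. `TreeNode`, `build_balanced`, and `search` are hypothetical names. The tree stays balanced only because it is built once; self-balancing trees such as AVL or red-black trees maintain balance under later insertions.

```python
class TreeNode:
    """Binary search tree node (hypothetical helper)."""
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None


def build_balanced(keys, lo=0, hi=None):
    """Build a height-balanced BST from sorted keys[lo:hi] by picking middles."""
    if hi is None:
        hi = len(keys)
    if lo >= hi:
        return None
    mid = (lo + hi) // 2
    node = TreeNode(keys[mid])
    node.left = build_balanced(keys, lo, mid)       # smaller keys
    node.right = build_balanced(keys, mid + 1, hi)  # larger keys
    return node


def search(root, target):
    """Return (found, number_of_nodes_visited)."""
    visited = 0
    node = root
    while node is not None:
        visited += 1
        if target == node.key:
            return True, visited
        node = node.left if target < node.key else node.right
    return False, visited


keys = list(range(1023))    # 2**10 - 1 sorted keys: a perfect tree of height 10
root = build_balanced(keys)
worst = max(search(root, k)[1] for k in keys)
print(worst)  # 10
```

By the same reasoning, a balanced tree holding one million keys has height 20, since 2^20 - 1 = 1,048,575.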

Queues: Queues are basic data structures that operate on the first-in, first-out (FIFO) principle. Their many uses include implementing algorithms such as Breadth-First Search (BFS), scheduling equal-priority tasks in an operating system, handling customer support calls in arrival order, and managing requests to web servers or networked printers.
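The BFS use case can be sketched with Python's `collections.deque` as the FIFO queue; a plain list would make each removal from the front O(n). The graph is assumed to be a dictionary mapping each vertex to a list of its neighbours, and `bfs_order` is an illustrative name.

```python
from collections import deque


def bfs_order(graph, start):
    """Return vertices in the order breadth-first search visits them.

    `graph` maps each vertex to a list of its neighbours (adjacency list).
    """
    visited = {start}
    order = []
    queue = deque([start])            # FIFO queue of discovered vertices
    while queue:
        vertex = queue.popleft()      # O(1) removal from the front
        order.append(vertex)
        for neighbour in graph[vertex]:
            if neighbour not in visited:
                visited.add(neighbour)
                queue.append(neighbour)   # O(1) insertion at the back
    return order


graph = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "D": [],
    "E": [],
}
print(bfs_order(graph, "A"))  # ['A', 'B', 'C', 'D', 'E']
```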

Sorting Algorithms: Sorting matters because sorted data can be searched much faster. The fastest general-purpose (comparison-based) techniques sort n arbitrary values in time proportional to n log n. Understanding sorting algorithms is also worthwhile because their ideas carry over to algorithm design in many other areas of computing. Examples include merge sort (O(n log n)), quick sort (O(n log n) on average, but O(n^2) in the worst case), and bubble sort (inefficient, O(n^2)).
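As an example of an O(n log n) sort, here is a minimal merge sort sketch: the list is halved about log n times, and each level of merging does linear work in total. This is a straightforward top-down version written for clarity, not an optimised implementation.

```python
def merge_sort(values):
    """Return a sorted copy of values using merge sort (O(n log n))."""
    if len(values) <= 1:
        return list(values)
    mid = len(values) // 2
    left = merge_sort(values[:mid])    # sort each half recursively
    right = merge_sort(values[mid:])
    # Merge the two sorted halves in linear time.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:        # <= keeps equal items in order (stable)
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])            # at most one of these is non-empty
    merged.extend(right[j:])
    return merged


print(merge_sort([38, 27, 43, 3, 9, 82, 10]))  # [3, 9, 10, 27, 38, 43, 82]
```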

Graph Algorithms: Algorithms such as Depth-First Search (DFS) for traversal and Dijkstra’s algorithm for shortest paths depend on efficient data structures. For example, when a graph is represented with an adjacency list or adjacency map, the neighbours of a vertex can be visited in time proportional to their number (the vertex’s degree), whereas an adjacency matrix requires scanning a whole row with one entry per vertex. This gives better asymptotic performance for sparse graphs.
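A minimal sketch of Dijkstra’s algorithm over an adjacency map, using Python’s `heapq` module as the priority queue. It assumes non-negative edge weights and vertex labels that can be compared with each other (heap entries with equal distances tie-break on the label). Instead of decreasing keys in place, this common variant pushes duplicate entries and skips stale ones when they are popped.

```python
import heapq


def dijkstra(graph, source):
    """Shortest-path distances from source in a graph with non-negative weights.

    `graph` is an adjacency map: vertex -> list of (neighbour, weight) pairs.
    Vertices with no outgoing edges may be omitted from the map.
    """
    dist = {source: 0}
    heap = [(0, source)]                     # (tentative distance, vertex)
    while heap:
        d, vertex = heapq.heappop(heap)
        if d > dist.get(vertex, float("inf")):
            continue                         # stale entry: a shorter path was found
        # Only this vertex's own neighbours are scanned: O(degree) work.
        for neighbour, weight in graph.get(vertex, []):
            candidate = d + weight
            if candidate < dist.get(neighbour, float("inf")):
                dist[neighbour] = candidate
                heapq.heappush(heap, (candidate, neighbour))
    return dist


graph = {
    "A": [("B", 4), ("C", 1)],
    "C": [("B", 2), ("D", 5)],
    "B": [("D", 1)],
}
print(dijkstra(graph, "A"))  # {'A': 0, 'B': 3, 'C': 1, 'D': 4}
```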

Searching for an Element

These ideas become concrete when we look at how the choice of data structure and algorithm affects efficiency in one specific task: searching for a particular item in a collection of data.

The challenge is to determine whether a target number is present in a list of n numbers.

Approach 1: Sequential Search on an Unsorted List (Array)

The simplest method for finding an element in an unsorted list is to go through each element one at a time until you find a match or reach the end of the list.

- Algorithm: Sequential search, also known as linear search.

- Data Structure: An unsorted array or Python list.

- Efficiency: In the worst case, every element must be examined, giving linear time complexity, O(n). On average, a successful search through a list of one million items makes about 500,000 comparisons.
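A minimal sketch of sequential search that also counts comparisons, so the worst case and the average case described above can be checked directly. The function name `linear_search` and its `(index, comparisons)` return value are illustrative choices.

```python
def linear_search(items, target):
    """Sequential search: return (index or -1, number of comparisons made)."""
    comparisons = 0
    for index, value in enumerate(items):
        comparisons += 1
        if value == target:
            return index, comparisons
    return -1, comparisons


data = [42, 7, 19, 3, 88, 61]      # unsorted
print(linear_search(data, 88))     # (4, 5)
print(linear_search(data, 100))    # (-1, 6): worst case, every item checked

# Average cost of a successful search when each position is equally likely:
n = 1000
items = list(range(n))
avg = sum(linear_search(items, t)[1] for t in items) / n
print(avg)  # 500.5, i.e. about n / 2
```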

Approach 2: Binary Search on a Sorted List (Array)

We can employ a far more efficient search method if the list is sorted.

- Algorithm: Binary search.

- Data Structure: A sorted array or Python list.

- Efficiency: Binary search repeatedly halves the search interval, giving logarithmic time complexity, O(log n). A sorted list of one million items needs at most about 20 comparisons.
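A minimal iterative binary search sketch that counts probes, where each loop iteration inspects one middle element. The name `binary_search` and its `(index, steps)` return value are illustrative. For n items the loop runs at most floor(log2 n) + 1 times, which is 20 for one million items.

```python
def binary_search(items, target):
    """Binary search on a sorted list: return (index or -1, probes made).

    Each loop iteration inspects one middle element and counts as one probe.
    """
    lo, hi = 0, len(items) - 1
    steps = 0
    while lo <= hi:
        mid = (lo + hi) // 2
        steps += 1
        if items[mid] == target:
            return mid, steps
        if items[mid] < target:
            lo = mid + 1          # target can only be in the upper half
        else:
            hi = mid - 1          # target can only be in the lower half
    return -1, steps


data = list(range(1_000_000))          # already sorted
print(binary_search(data, 499_999))    # (499999, 1): the first midpoint
print(binary_search(data, 1_000_000))  # (-1, 20): absent, interval halved 20 times
```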

Comparison

| Feature | Approach 1: Unsorted List (Sequential Search) | Approach 2: Sorted List (Binary Search) |
|---|---|---|
| Description | Checks each element one by one until a match is found or the end of the list is reached. | Repeatedly divides the search interval in half to find the element. |
| Algorithm | Sequential Search (also called Linear Search) | Binary Search |
| Data Structure | An array or Python list (unordered). | A sorted array or list. |
| Worst-Case Time Complexity | O(n) – linear time. | O(log n) – logarithmic time. |
| Example (1,000,000 items) | On average, approximately 500,000 comparisons might be performed. | Only about 20 comparisons would be needed. |

This conceptual example shows how large a performance difference can come from choosing the right algorithm (binary search rather than sequential search) together with the right data structure (a sorted rather than an unsorted list). For very large datasets, an O(log n) search saves a great deal of computation compared with an O(n) one, provided the data is already sorted or is sorted once and then searched many times. A careful study of data structures and algorithms yields exactly this kind of insight into program design and efficiency.
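Binary search only applies to sorted data, so the cost of sorting has to be paid somewhere. Sorting costs O(n log n), more than a single O(n) scan, but each later lookup costs only O(log n), so k searches cost O(n log n + k log n) instead of O(k n). A minimal sketch of this sort-once, search-many pattern using Python’s standard-library `bisect` module; the `contains` helper is a hypothetical name for illustration.

```python
import bisect


def contains(sorted_items, target):
    """O(log n) membership test on a sorted list using bisect."""
    i = bisect.bisect_left(sorted_items, target)   # leftmost insertion point
    return i < len(sorted_items) and sorted_items[i] == target


raw = [42, 7, 19, 3, 88, 61]
sorted_items = sorted(raw)            # one-time O(n log n) cost
queries = [19, 20, 88, 1]
print([contains(sorted_items, q) for q in queries])  # [True, False, True, False]
```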