This article introduces Named Entity Recognition (NER), the common types of named entities, and the main challenges in recognising them.

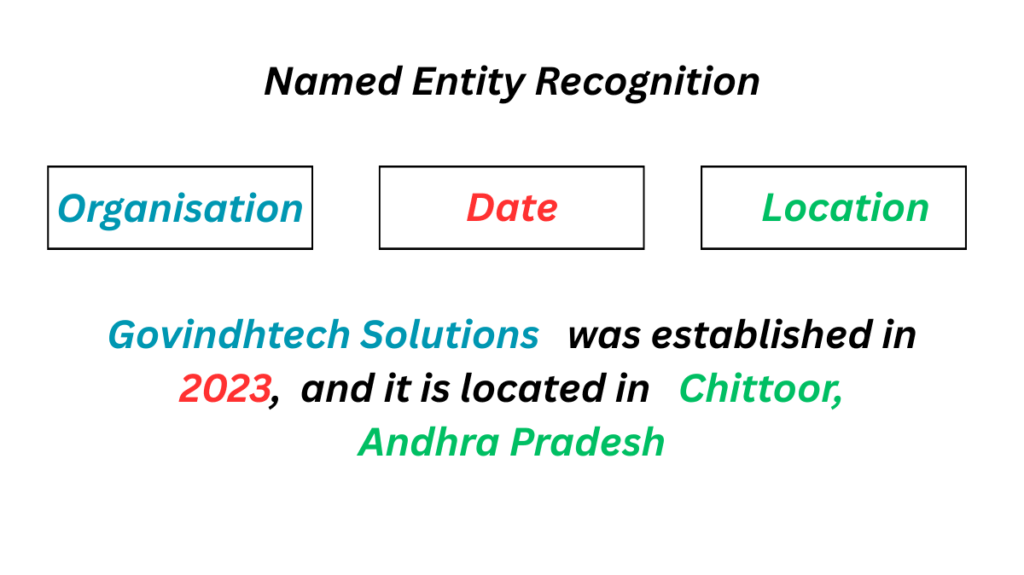

Named Entity Recognition (NER) is a key NLP problem. It is often a crucial stage in NLP pipelines and is regarded as a classic problem in the field of information extraction (IE). NER is also known as entity recognition or entity extraction.

The aim of a NER system is to find named entities mentioned in text and classify them into predefined categories. This involves two primary subtasks: determining the boundaries of each entity mention and identifying its type. Although NER is most often used as a preliminary step to finding relations in information extraction, it is also helpful in other tasks such as Question Answering (QA) and Information Retrieval (IR).
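
As a minimal sketch of these two subtasks, an entity mention can be represented as token boundaries plus a type label. The `Mention` class, the `mention_text` helper, and the sample sentence are illustrative assumptions, not part of any particular NER library.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Mention:
    """One entity mention (hypothetical structure for illustration)."""
    start: int   # index of the first token (inclusive)
    end: int     # index one past the last token (exclusive)
    label: str   # entity type


def mention_text(tokens: List[str], mention: Mention) -> str:
    """Return the surface text covered by a mention."""
    return " ".join(tokens[mention.start:mention.end])


tokens = ["President", "Obama", "visited", "Mount", "Everest", "."]

# Subtask 1 fixes the boundaries (start, end); subtask 2 assigns the type.
mentions = [Mention(0, 2, "PERSON"), Mention(3, 5, "LOCATION")]

for m in mentions:
    print(m.label, "->", mention_text(tokens, m))
```

Using an exclusive end index keeps the span length equal to `end - start` and matches Python slicing.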

Usually, named entities are proper names or definite noun phrases that refer to specific individuals. However, the term is often extended to cover other kinds of expressions as well, such as dates, times, and monetary amounts.

Types of Named Entities

Commonly used types of named entities include:

- PERSON (such as President Obama or Eddy Bonte)

- ORGANIZATION (e.g., WHO, Georgia-Pacific Corp.)

- LOCATION (e.g., Mount Everest, Murray River)

- GPE (Geo-Political Entity, such as the Midlothian region or South East Asia)

- DATE (for instance, June 29, 2008)

- TIME (e.g., 1:30 p.m., two fifty a.m.)

- MONEY (e.g., GBP 10.40, 175 million Canadian dollars)

- PERCENT (for example, 18.75 percent, 20 percent)

- FACILITY (such as Stonehenge and the Washington Monument)

- MISC (Miscellaneous, a catch-all for other names such as gods, illnesses, artwork, and gentilics)

A different, often larger set of entity types may be used in particular datasets or applications. For example, in biomedical text mining (BioNLP), NER targets entities such as genes, proteins, cell lines, cell types, chemicals, tissues, organs, organisms, genotypes, and phenotypes.

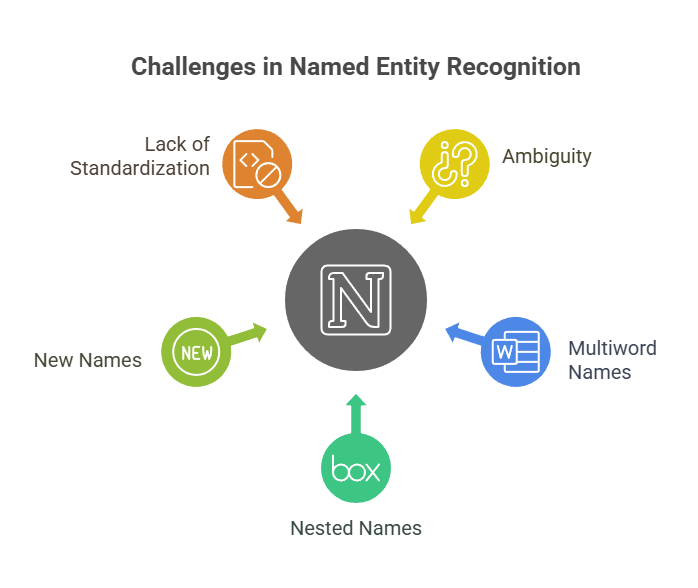

Challenges in Named Entity Recognition

Natural language is inherently complex, which presents challenges for NER:

- Ambiguity: Depending on the context, the same word can refer to several kinds of entities. “Washington” might refer to a person, city, state, or organisation, for instance. “Milan” can refer to a place (a city) or an organisation (a sports team). “Apple” can refer to a fruit or a company.

- Multiword Names: For entities that span several tokens, the system must determine where each entity starts and ends.

- Nested Names: An entity may contain other entities; for example, the organisation name “Bank of England” contains the location “England”.

- New Names: Extensive lists (gazetteers) are insufficient on their own because new people, organisations, products, and so on appear constantly, especially in domains like news or blogs.

- Lack of Standardisation: Authors may not follow accepted naming conventions, as is the case with gene names. There is also no broad agreement on exactly which kinds of names should be recognised.
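
The ambiguity and new-name problems above can be illustrated with a toy gazetteer lookup. This is only a sketch: the `GAZETTEER` contents and the `candidate_types` helper are made up for illustration, and the unseen name in the example is invented.

```python
# Toy gazetteer (hypothetical): lower-cased name -> entity types it may have.
GAZETTEER = {
    "washington": {"PERSON", "GPE", "ORGANIZATION"},
    "milan": {"GPE", "ORGANIZATION"},
    "apple": {"ORGANIZATION"},
}


def candidate_types(token):
    """Return every entity type the gazetteer lists for a token."""
    return GAZETTEER.get(token.lower(), set())


# Ambiguity: the lookup alone cannot choose among several candidate types.
print(sorted(candidate_types("Washington")))

# The fruit sense of "apple" is not an entity at all, yet the list still fires.
print(sorted(candidate_types("apple")))

# New names: a name missing from the list yields no candidates.
print(sorted(candidate_types("Zorblax")))
```

A list lookup therefore over-generates for ambiguous names and under-generates for unseen ones, which is why context-sensitive models are needed on top of gazetteers.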

NER frequently makes use of the output of other NLP tasks and is closely connected to them:

- Tokenization and sentence segmentation are usually performed first to split the text into words and sentences. Tokenization and NER can also be performed jointly, particularly in languages with ambiguous word boundaries.

- Part-of-Speech (POS) tagging assigns a grammatical category to each word. POS tags provide important features for NER systems, so POS tagging is regarded as a foundation for NER.

- Chunking segments and labels multi-token sequences, such as noun phrases, which frequently correspond to named entities.

NER is also connected to Named Entity Disambiguation (NED) and Word Sense Disambiguation (WSD), particularly for ambiguous names, although these are frequently handled as separate tasks or follow-up steps. Entity identification is a prerequisite for tasks such as Relation Extraction and Coreference Resolution, the latter of which seeks to link mentions that refer to the same entity. Entity Linking builds on NER by connecting the recognised mentions to knowledge base entries.

Approaches and Techniques for NER

NER systems may be constructed using the following methods and techniques:

Rule-Based Approaches: Identifying and categorising entities using manually created linguistic rules and patterns based on characteristics like word position, capitalisation, or surrounding context.
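
A rule-based recogniser of this kind might look like the sketch below, which uses Python's standard `re` module. The patterns, the `RULES` table, and the `rule_based_ner` function are illustrative assumptions that cover only a few surface forms; a real rule set would be far larger.

```python
import re

MONTHS = (r"(?:January|February|March|April|May|June|July|August|"
          r"September|October|November|December)")

# Hand-written patterns (hypothetical and deliberately narrow).
RULES = [
    ("DATE", re.compile(r"\b" + MONTHS + r"\s\d{1,2},\s\d{4}\b")),
    ("MONEY", re.compile(r"\bGBP\s\d+(?:\.\d+)?\b")),
    ("PERCENT", re.compile(r"\b\d+(?:\.\d+)?\spercent\b")),
    # Context rule: a title followed by a capitalised word suggests a person.
    ("PERSON", re.compile(r"\b(?:President|Dr\.|Mr\.|Ms\.)\s[A-Z][a-z]+\b")),
]


def rule_based_ner(text):
    """Return (start, end, label, matched_text) tuples sorted by position."""
    found = []
    for label, pattern in RULES:
        for match in pattern.finditer(text):
            found.append((match.start(), match.end(), label, match.group()))
    return sorted(found)


text = ("On June 29, 2008, President Obama said prices rose "
        "18.75 percent to GBP 10.40.")

for start, end, label, surface in rule_based_ner(text):
    print(label, "->", surface)
```

Rules like these are easy to inspect and precise on the forms they anticipate, but each new surface form needs another hand-written pattern.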

Machine Learning (ML) methods: Using annotated datasets to train supervised models. Support Vector Machines (SVMs) and Conditional Random Fields (CRFs) were early techniques. Deep learning models are widely used in more contemporary methods, including:

- Recurrent Neural Networks (RNNs)

- Long Short-Term Memory (LSTM) networks

- Convolutional Neural Networks (CNNs)

- Bidirectional LSTMs (Bi-LSTMs)

- Transformers (e.g., BERT and its variants such as RoBERTa, DistilBERT, and SpanBERT)

Sequence Tagging Approaches

Given that most tagging algorithms work word-by-word and named entities are frequently multi-word phrases, a popular method is to frame NER as a sequence tagging problem using tagging schemes such as:

- BIO (or IOB) Notation: Each tag consists of a prefix indicating whether the token is at the beginning (B-) or inside (I-) of a named entity mention, followed by the entity type (e.g., B-PER, I-LOC); tokens outside any mention are tagged O. This allows entity spans to be recovered unambiguously.

- IO Notation: A simpler variant that merges the B- and I- prefixes into a single I- prefix, so adjacent entities of the same type can no longer be told apart.

- BILOU Notation: Extends BIO with tags for the last token of a multi-token span (L-) and for unit-length, single-token entities (U-).
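
To make the tagging schemes above concrete, the following sketch recovers entity spans from a BIO tag sequence and re-encodes them in BILOU. The function names `bio_to_spans` and `spans_to_bilou` and the short type labels are assumptions chosen for illustration; the decoder is lenient and treats a stray I- tag as the start of a new span.

```python
def bio_to_spans(tags):
    """Recover (start, end, type) spans from BIO tags; end is exclusive."""
    spans = []
    start, etype = None, None
    for i, tag in enumerate(list(tags) + ["O"]):  # sentinel closes a final span
        prefix, _, label = tag.partition("-")
        continues = prefix == "I" and label == etype
        if start is not None and not continues:
            spans.append((start, i, etype))       # close the open span
            start, etype = None, None
        if prefix == "B" or (prefix == "I" and start is None):
            start, etype = i, label               # open a new span
    return spans


def spans_to_bilou(n_tokens, spans):
    """Encode (start, end, type) spans as a BILOU tag sequence."""
    tags = ["O"] * n_tokens
    for start, end, etype in spans:
        if end - start == 1:
            tags[start] = f"U-{etype}"            # unit-length entity
        else:
            tags[start] = f"B-{etype}"
            for i in range(start + 1, end - 1):
                tags[i] = f"I-{etype}"
            tags[end - 1] = f"L-{etype}"          # last token of the span
    return tags


tokens = ["President", "Obama", "visited", "Stonehenge"]
bio = ["B-PER", "I-PER", "O", "B-FAC"]

spans = bio_to_spans(bio)
print(spans)                                # [(0, 2, 'PER'), (3, 4, 'FAC')]
print(spans_to_bilou(len(tokens), spans))   # ['B-PER', 'L-PER', 'O', 'U-FAC']
```

The B- prefix is what lets the decoder split two adjacent entities of the same type; under IO notation the sequence `I-PER I-PER` would always be read as one span.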

Sequence Tagging Features

These sequence tagging models draw on several kinds of features:

- Word-level features: The identity of the current word, its prefixes and suffixes, and its neighbouring words.

- Orthographic features: Word shape (e.g., capitalisation, presence of digits or hyphens).

- Linguistic features: Part-of-speech tags.

- Lexical resources: Whether a word appears in a precompiled list, such as a name list or a gazetteer (for places).

- Distributional features: Word vectors or embeddings that use word co-occurrence patterns to capture syntactic or semantic relationships. Contextualized embeddings from Transformers capture representations that are specific to the context in which a word appears.
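
The hand-crafted feature families listed above can be sketched as a per-token feature dictionary, the kind of input typically fed to a feature-based tagger such as a CRF. The `token_features` function, its feature names, and the hand-supplied POS tags are assumptions for illustration; distributional features are omitted because they require a trained embedding model.

```python
def token_features(tokens, pos_tags, i, gazetteer=frozenset()):
    """Build a feature dictionary for the token at position i."""
    word = tokens[i]
    return {
        # Word-level features
        "word.lower": word.lower(),
        "prefix3": word[:3],
        "suffix3": word[-3:],
        "prev_word": tokens[i - 1].lower() if i > 0 else "<BOS>",
        "next_word": tokens[i + 1].lower() if i < len(tokens) - 1 else "<EOS>",
        # Orthographic features
        "is_capitalized": word[:1].isupper(),
        "has_digit": any(ch.isdigit() for ch in word),
        "has_hyphen": "-" in word,
        # Linguistic feature (POS tags are assumed to come from a tagger)
        "pos": pos_tags[i],
        # Lexical resource
        "in_gazetteer": word.lower() in gazetteer,
    }


tokens = ["Mount", "Everest", "is", "high"]
pos_tags = ["NNP", "NNP", "VBZ", "JJ"]   # supplied by hand for this example
places = {"everest"}                      # toy gazetteer

features = token_features(tokens, pos_tags, 1, gazetteer=places)
for name, value in features.items():
    print(name, "=", value)
```

Neighbouring-word features give the model a small window of context, and the `<BOS>` and `<EOS>` placeholders mark sentence boundaries.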

NER systems are evaluated with metrics such as precision, recall, and F1-score; the unit of response is usually the entity, not the individual word. Training and evaluation are conducted using annotated corpora such as the Penn Treebank and OntoNotes, as well as domain-specific datasets (e.g., for biomedical text).
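
Entity-level scoring can be sketched as follows, assuming strict matching in which a predicted entity counts as correct only if its boundaries and type both match a gold entity exactly. The `entity_prf` function and the example spans are illustrative assumptions.

```python
def entity_prf(gold, predicted):
    """Entity-level precision, recall, and F1 under exact-match scoring.

    Each entity is a (start, end, type) tuple; partial overlaps earn no credit.
    """
    gold, predicted = set(gold), set(predicted)
    true_positives = len(gold & predicted)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


gold = [(0, 2, "PER"), (3, 5, "LOC")]
# The second prediction has the right type but the wrong left boundary.
predicted = [(0, 2, "PER"), (4, 5, "LOC")]

print(entity_prf(gold, predicted))   # (0.5, 0.5, 0.5)
```

Under word-level scoring the second prediction would get partial credit for one correctly tagged token, whereas entity-level scoring counts it as both a false positive and a missed entity.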

Named entity tagging is a useful first step in many natural language processing tasks. In sentiment analysis, it makes it possible to determine how a customer feels about a specific entity. Entities are likewise a useful starting point for question answering and for linking text to information in structured knowledge sources like Wikipedia. Named entity tagging also plays a key role in tasks that build semantic representations, such as extracting events and the relationships between their participants.