The LSM

In computational neuroscience and artificial intelligence, researchers continue to look for models that replicate the brain’s efficient information processing. The Liquid State Machine (LSM) is a reservoir computing system that offers a distinctive approach to processing temporal data. In contrast to conventional neural networks, LSMs convert input signals into intricate, time-dependent states by exploiting the dynamics of randomly connected neurons. This makes them especially useful for sequence-based applications such as motion prediction, speech recognition, and real-time signal processing.

This article examines the basic ideas of Liquid State Machines, as well as their biological inspiration, salient characteristics, benefits, and uses. By the conclusion, you’ll know why LSMs are a fascinating topic for neuroscience and machine learning research.

What is an LSM?

A Liquid State Machine (LSM) is a kind of reservoir computer built on a spiking neural network. It is regarded as a biologically plausible model of a cortical microcircuit. The term “liquid” comes from an analogy with a stone dropped into a body of water: the input (the falling stone) is transformed into a spatiotemporal pattern of liquid displacement (ripples). In the same way, an LSM’s network of neurons converts time-varying inputs into intricate spatiotemporal patterns of activation.

History

In 2002, Maass et al. introduced the Liquid State Machine as a biologically realistic model of a cortical microcircuit. Its creation was motivated by the versatility of the neocortex in the mammalian brain, which uses networks of neurons to handle processes including motor commands, sensory perception, and spatial reasoning. Since its introduction, research has mostly concentrated on training the model and identifying the best learning strategies.

Liquid State Machine Architecture

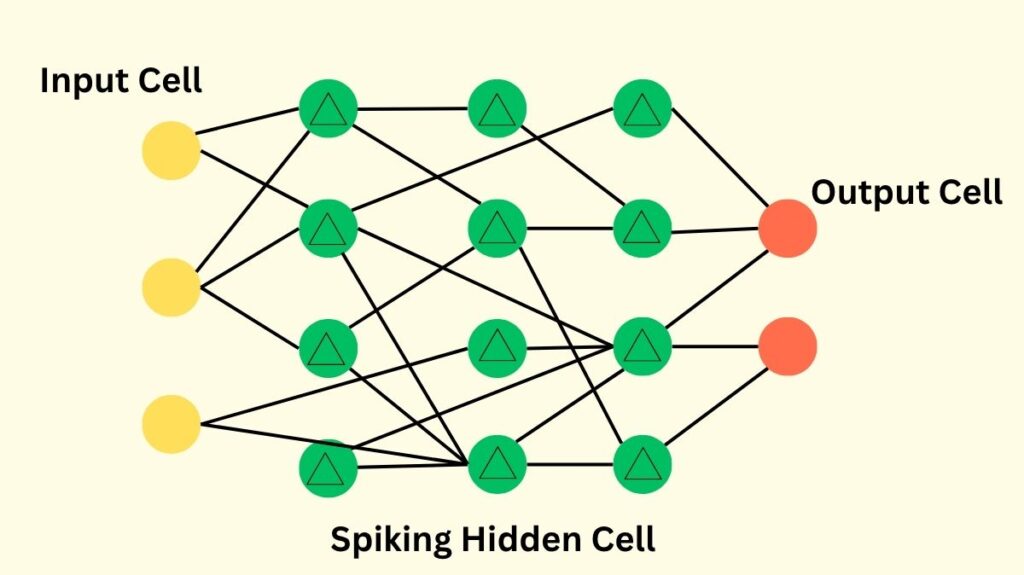

There are three main parts to an LSM:

Input Layer

External sources provide time-varying input to this layer. It has random, sparse connections to the liquid layer, and input-liquid synapses are invariably excitatory.

Liquid (Reservoir) Layer

- Modelled as a random, sparsely connected, recurrent reservoir of spiking neurons, this is the central component of the Liquid State Machine.

- The connections between these neurons are usually fixed within the reservoir, so their weights are not trained once they are created.

- The liquid is a high-dimensional network. Neural dynamics are commonly modelled with conductance-based leaky integrate-and-fire (LIF) neurons.

- Excitatory and inhibitory neurons are kept at a common biological ratio, usually 80% to 20%.

- The topology of the Liquid State Machine is defined by the probability of a synaptic connection between two neurons, which depends on their Euclidean distance.
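The liquid construction described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function name `build_liquid`, the 3 x 3 x 5 grid, and the constants `c` and `lam` are assumptions chosen for the example, and the Gaussian-shaped distance rule `c * exp(-(d / lam)^2)` is one form commonly used in the LSM literature rather than something this article specifies.

```python
import numpy as np

def build_liquid(grid=(3, 3, 5), frac_exc=0.8, c=0.3, lam=2.0, seed=0):
    """Return neuron positions, an excitatory mask, and a boolean connection matrix."""
    rng = np.random.default_rng(seed)
    # Place one neuron on every point of a small 3-D integer grid (assumed layout).
    pos = np.array(list(np.ndindex(*grid)), dtype=float)
    n = len(pos)
    # Mark about 80% of the neurons as excitatory; the rest are inhibitory.
    is_exc = np.zeros(n, dtype=bool)
    is_exc[rng.permutation(n)[: int(round(frac_exc * n))]] = True
    # Pairwise Euclidean distances between all neurons.
    dist = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=-1)
    # Connection probability falls off with distance (assumed Gaussian-shaped rule).
    prob = c * np.exp(-(dist / lam) ** 2)
    conn = rng.random((n, n)) < prob
    np.fill_diagonal(conn, False)  # no self-connections
    return pos, is_exc, conn

pos, is_exc, conn = build_liquid()
print(f"{len(pos)} neurons, {is_exc.mean():.0%} excitatory, "
      f"connection density {conn.mean():.2f}")
```

Because the connections are drawn once and never trained, the matrix `conn` would simply be stored and reused for every input sample.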

Readout/Output Layer

- This layer consists of a classifier trained with supervised learning.

- It typically has no memory and does not remember the dynamical system’s past states.

- To carry out a particular task, the readout unit linearly combines the spatiotemporal patterns produced by the liquid. For example, the spike counts of the excitatory neurons over the course of a sample can be used to train a Logistic Regression classifier.

How a Liquid State Machine Works

A Liquid State Machine works by passing inputs through a fixed, high-dimensional, dynamic reservoir and then through a trainable readout layer.

Transformation of Input: Speech and other time-varying input signals undergo preprocessing (e.g., conversion to cochleograms and subsequently spike trains). A spatiotemporal pattern of activations within the recurrently coupled neurons is then produced by projecting these spike trains onto the liquid.
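As a rough illustration of the last preprocessing step, the sketch below turns normalized channel intensities (for instance, the rows of a cochleogram) into spike trains by rate coding. The article does not say which encoding is used, so the Bernoulli rate-coding scheme, the function name `encode_spikes`, and the parameters `max_rate_hz` and `dt_ms` are all assumptions made for this example.

```python
import numpy as np

def encode_spikes(intensity, max_rate_hz=200.0, dt_ms=1.0, seed=0):
    """Rate-code intensities in [0, 1] into a boolean spike raster of the same shape."""
    rng = np.random.default_rng(seed)
    # Probability of a spike in one time bin = firing rate * bin width.
    p_spike = np.clip(intensity, 0.0, 1.0) * max_rate_hz * dt_ms / 1000.0
    return rng.random(intensity.shape) < p_spike

# Two hypothetical input channels over 1000 time bins: one silent, one at full intensity.
intensity = np.vstack([np.zeros(1000), np.ones(1000)])
spikes = encode_spikes(intensity)
print("spikes per channel:", spikes.sum(axis=1))
```

Each row of `spikes` would then drive the liquid through the sparse, excitatory input synapses.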

Reservoir Dynamics: The liquid converts the lower-dimensional input stream into a higher-dimensional internal state by means of its recurrent and random connections. The “soup” of recurrently connected nodes computes numerous non-linear functions of the input. The conductances of the recurrent neurons change in response to incoming spikes and then decay exponentially.
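To make the conductance dynamics concrete, here is a minimal single-neuron sketch of a conductance-based LIF unit integrated with forward Euler. All parameter values, the function name `simulate_lif`, and the choice to express the synaptic conductance relative to the leak conductance are assumptions for illustration; a real liquid would run many such neurons coupled through the recurrent connections.

```python
import numpy as np

def simulate_lif(spike_times_ms, t_max_ms=100.0, dt=0.1,
                 tau_m=20.0, tau_syn=5.0, w=0.5,
                 e_rest=-65.0, e_exc=0.0, v_thresh=-50.0, v_reset=-65.0):
    """Simulate one conductance-based LIF neuron driven by excitatory input spikes."""
    n_steps = int(round(t_max_ms / dt))
    spike_steps = {int(round(t / dt)) for t in spike_times_ms}
    v, g = e_rest, 0.0          # membrane potential (mV), conductance (relative to leak)
    v_trace = np.empty(n_steps)
    out_spikes = []
    for step in range(n_steps):
        if step in spike_steps:
            g += w              # an incoming spike raises the conductance
        # Leak pulls v toward rest; the conductance pulls v toward e_exc.
        v += dt * (-(v - e_rest) - g * (v - e_exc)) / tau_m
        g -= dt * g / tau_syn   # conductance decays exponentially
        if v >= v_thresh:       # threshold crossing: emit a spike and reset
            out_spikes.append(step * dt)
            v = v_reset
        v_trace[step] = v
    return v_trace, out_spikes

# Hypothetical input: one presynaptic spike every 2 ms for 100 ms.
v_trace, out_spikes = simulate_lif(np.arange(0.0, 100.0, 2.0))
print(f"{len(out_spikes)} output spikes")
```

With these assumed values a single input spike only nudges the membrane potential, while a dense input train drives the neuron over threshold repeatedly.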

Feature Extraction: By recording historical events and converting them into a high-dimensional echoing internal activity state, the liquid functions as a potent spatiotemporal feature extractor.

Readout Classification: The memoryless readout layer is trained to categorise or interpret these high-dimensional internal states of the liquid. Usually, the readout is trained on the excitatory neurons’ spike counts over the course of a sample. The classification decision is made by determining which readout neuron fires most frequently.

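The readout step can be sketched as a multinomial logistic regression trained on per-sample spike counts. The spike counts below are synthetic Poisson draws standing in for real liquid output, and the class count, neuron count, learning rate, and number of iterations are all assumptions for the example. Taking the arg max over the readout outputs plays the role of picking the readout neuron that fires most.

```python
import numpy as np

rng = np.random.default_rng(0)
n_classes, n_exc, n_per_class = 3, 40, 30

# Synthetic stand-in for liquid output: each class has its own mean spike count per neuron.
rates = rng.uniform(2.0, 10.0, size=(n_classes, n_exc))
labels = np.repeat(np.arange(n_classes), n_per_class)
counts = rng.poisson(rates[labels]).astype(float)

# Standardize the spike counts and append a constant bias feature.
x = (counts - counts.mean(axis=0)) / (counts.std(axis=0) + 1e-9)
x = np.hstack([x, np.ones((len(x), 1))])
onehot = np.eye(n_classes)[labels]

# Train only the readout weights with plain gradient descent on the cross-entropy loss.
w = np.zeros((x.shape[1], n_classes))
for _ in range(300):
    logits = x @ w
    logits -= logits.max(axis=1, keepdims=True)   # numerical stability
    p = np.exp(logits)
    p /= p.sum(axis=1, keepdims=True)
    w -= 0.1 * x.T @ (p - onehot) / len(x)

# Decision rule: the readout unit with the largest output wins.
pred = np.argmax(x @ w, axis=1)
accuracy = (pred == labels).mean()
print(f"training accuracy: {accuracy:.2f}")
```

Note that nothing inside the liquid is touched during training; only the matrix `w` is learned.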
Features

The following are important features and biological inspirations found in LSMs and their extensions:

Random, Sparse Reservoir

A network of neurons with sparse connections makes up the liquid.

Fixed Synapses

After formation, synaptic connections within the liquid are usually fixed.

Trainable Readout

To carry out classification or regression tasks, only the output layer is learnt.

Excitatory/Inhibitory (E/I) Balance

This bio-inspired extension keeps excitatory and inhibitory presynaptic currents in balance. E/I balance is associated with the edge of chaos, a dynamical regime between ordered and chaotic network activity, which enhances classification performance and maximises neural coding efficiency.
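One simple way to approximate this balance is to rescale each neuron's incoming inhibitory weights so that their total matches its total incoming excitatory weight. The sketch below does exactly that; it is an illustrative assumption, not the procedure used in the ELSM work, and the function name `balance_ei` and the random test weights are hypothetical. Balancing summed weights is only a proxy for balancing the actual currents, which also depend on firing rates.

```python
import numpy as np

def balance_ei(weights, is_exc):
    """Rescale inhibitory inputs so each neuron receives equal total E and I weight.

    weights[i, j] is the non-negative strength of the synapse from neuron j to neuron i.
    """
    w = weights.astype(float).copy()
    exc_in = w[:, is_exc].sum(axis=1)    # total excitatory input weight per neuron
    inh_in = w[:, ~is_exc].sum(axis=1)   # total inhibitory input weight per neuron
    # Scale factor per postsynaptic neuron; leave neurons without inhibitory input alone.
    scale = np.divide(exc_in, inh_in, out=np.ones_like(inh_in), where=inh_in > 0)
    w[:, ~is_exc] *= scale[:, None]
    return w

rng = np.random.default_rng(0)
n = 10
is_exc = np.arange(n) < 8                               # 8 excitatory, 2 inhibitory
weights = rng.random((n, n)) * (rng.random((n, n)) < 0.5)  # sparse random strengths
balanced = balance_ei(weights, is_exc)
print("E input:", balanced[:, is_exc].sum(axis=1).round(2))
print("I input:", balanced[:, ~is_exc].sum(axis=1).round(2))
```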

Spike-Frequency Adaptation (SFA)

A passive neural mechanism called spike-frequency adaptation (SFA) modifies a neuron’s spiking threshold in response to its prior spiking behaviour. By decreasing firing activity, SFA increases the network’s memory capacity and boosts coding efficiency.
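A minimal sketch of SFA is a leaky integrate-and-fire neuron whose threshold jumps after every spike and then relaxes back to its baseline. The function name `simulate_adaptive_lif` and every parameter value here are assumptions chosen so the effect is easy to see; they are not taken from any particular LSM study.

```python
def simulate_adaptive_lif(n_steps=2000, dt=0.1, drive=20.0, tau_m=20.0,
                          v_rest=0.0, theta0=10.0, delta_theta=2.0, tau_theta=100.0):
    """Return spike times (ms) of a LIF neuron with an adaptive threshold."""
    v, theta = v_rest, theta0
    spikes = []
    for step in range(n_steps):
        v += dt * (-(v - v_rest) + drive) / tau_m      # leaky integration of constant drive
        theta += dt * (theta0 - theta) / tau_theta     # threshold relaxes to baseline
        if v >= theta:
            spikes.append(step * dt)
            v = v_rest
            theta += delta_theta                       # each spike raises the threshold
    return spikes

adapted = simulate_adaptive_lif()
plain = simulate_adaptive_lif(delta_theta=0.0)         # same neuron without adaptation
print(f"with SFA: {len(adapted)} spikes, without SFA: {len(plain)} spikes")
```

Under the same constant drive, the adaptive neuron fires fewer spikes and its inter-spike intervals lengthen over time, which is the reduction in firing activity described above.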

Neuronal Heterogeneity

The dynamical responsiveness of the LSM is enhanced by adding diversity in neuronal characteristics, such as differences in time constants. This increases the variability in temporal feature extraction, resilience, and computing needs.

Types (or Enhanced Models)

Although they are not separate “types” in the conventional sense, LSMs have been improved using a number of methods:

- Baseline LSM: The initial model, including its basic structure.

- Extended Liquid State Machine (ELSM): The main improvement, which combines neuronal heterogeneity (differing time constants), spike-frequency adaptation (SFA), and E/I balance. The ELSM consistently outperforms the baseline LSM and can attain accuracy close to the state of the art among spiking neural networks.

- Resistive Memory-Based Zero-Shot LSM: This hardware-software co-design allows for zero-shot learning of multimodal event data by physically integrating trainable artificial neural network (ANN) projections with a fixed and random LSM SNN encoder.

- Deep Liquid State Machines: Proposed as deeper architectures based on the LSM concept, these have been discussed in relation to video activity recognition.

- Adaptive/Learned Parameters: Although they can add complexity, several studies investigate task-agnostic, data-driven training of recurrent liquid weights, continuous neural adaptation, or evolutionary optimization of LSM parameters.

Advantages

LSMs provide a number of advantages, especially when considering neuromorphic computing and artificial intelligence with biological inspiration:

Biological Plausibility

By simulating elements of cortical microcircuits, LSMs are regarded as one of the most biologically realistic spiking neural networks.

Minimal Training Complexity

Because only the readout layer needs to be trained, they have minimal training complexity and allow for backpropagation-free learning. This streamlines and expedites the training process.

Hardware Compatibility

LSMs are compatible with efficient neuromorphic hardware. The Extended LSM (ELSM) is less computationally demanding and requires less memory because it can operate with 4-bit precision for synaptic weights without appreciable performance degradation.
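To show what 4-bit weights mean in practice, the sketch below maps a weight matrix onto 16 evenly spaced levels. Uniform quantization between the minimum and maximum weight is an assumption made for this example; the article does not state which quantization scheme the ELSM uses, and the function name `quantize_weights` is hypothetical.

```python
import numpy as np

def quantize_weights(w, n_bits=4):
    """Uniformly quantize weights to 2**n_bits levels between w.min() and w.max()."""
    n_steps = 2 ** n_bits - 1            # 15 steps give 16 representable values
    w_min, w_max = w.min(), w.max()
    if w_max == w_min:                   # constant matrix: nothing to quantize
        return w.copy()
    step = (w_max - w_min) / n_steps
    codes = np.round((w - w_min) / step) # integer code in 0..15 for every weight
    return w_min + codes * step

rng = np.random.default_rng(0)
w = rng.normal(size=(50, 50))            # stand-in for a synaptic weight matrix
w_q = quantize_weights(w)
print("distinct values:", len(np.unique(w_q)))
print("max abs error:", float(np.abs(w_q - w).max()))
```

On hardware only the integer codes, plus the offset and step size, would need to be stored, which is where the memory saving comes from.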

Computational Efficiency

LSMs can be implemented with less hardware because they are sparsely connected and show sparse spiking behaviour. For example, it has been demonstrated that the ELSM reduces the number of spikes per neuron by more than 20%.

Spatio-Temporal Feature Extraction

They exhibit outstanding performance on a range of tasks and are adept at extracting spatio-temporal information.

Managing Continuous Time Inputs “Naturally”

LSMs are made to manage continuous time inputs “naturally.”

Multi-scale Computations

Computations on many time scales can be carried out by the same network.

Universal Function Approximation

LSMs have the potential to serve as universal function approximators due to characteristics like input separability and fading memory.

Zero-Shot Learning Capability

Recent developments have demonstrated that LSMs can generalise existing knowledge through zero-shot learning on multimodal event data.

General Purpose Feature Extractor

The ELSM is a general-purpose, high-dimensional spatiotemporal feature extractor.

Preference for Online Learning

Online learning techniques can lower implementation costs and the complications that come with batch learning, and they frequently yield the best results.

Disadvantages/Criticisms

Notwithstanding their benefits, LSMs have several drawbacks and restrictions.

- Limited Brain Explanation: According to critics, LSMs can only partially recreate brain activity and do not provide a complete explanation of how the brain works.

- Lack of Interpretability: A working network cannot simply be dissected to find out how or what computations are being performed.

- Limited Control: The fixed liquid portion of the network offers very little control over the learning process.

- Historical Performance Gap: LSMs have frequently been overlooked because of their reportedly poorer classification performance compared with state-of-the-art spiking neural networks trained with backpropagation through time (BPTT) and surrogate gradients. The ELSM seeks to address this.

- Higher Training Complexity for Enhancements: Although the basic LSM has a low training complexity, certain suggested improvements to boost performance come with higher training complexity and parameter adjustment that is based on data.

- Greater Network Size: LSMs (and ELSMs) typically need more neurons in the liquid to achieve classification performance comparable to BPTT-learned techniques.

Challenges of LSM

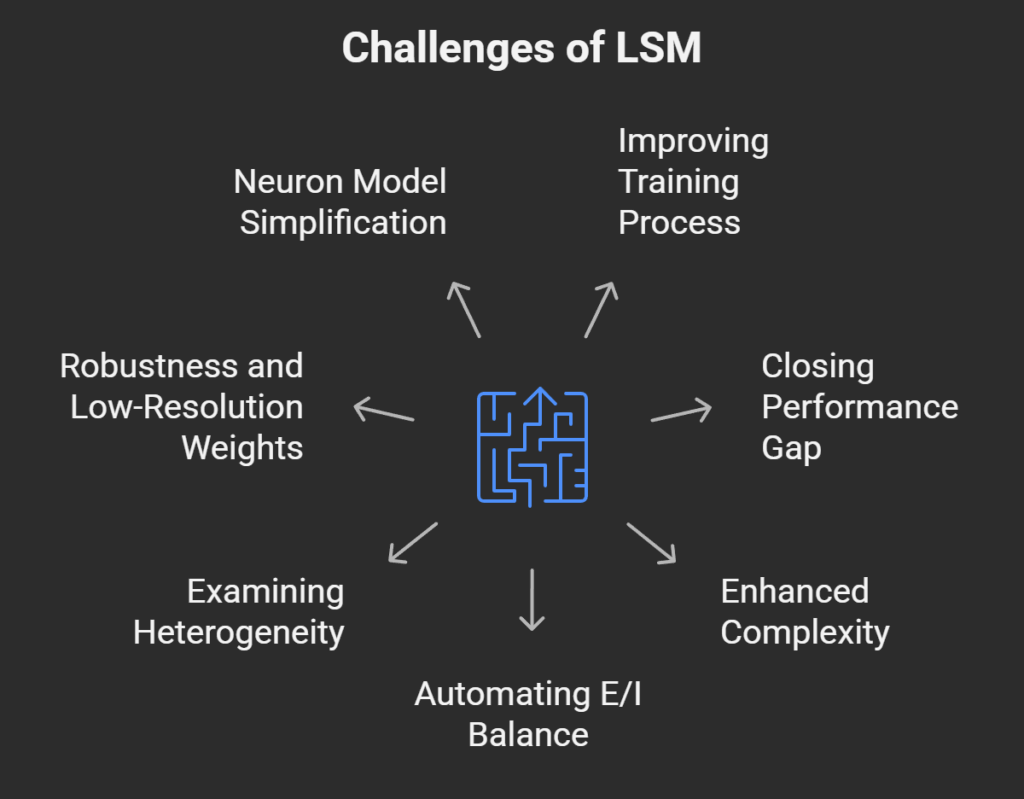

The following are some present and upcoming obstacles in LSM application and research:

Improving the Training Process

Research is still ongoing to determine the ideal weight range for achieving the best results.

Closing the Performance Gap

Traditionally, LSMs have had trouble keeping up with deep neural networks or SNNs that have been trained via backpropagation through time (BPTT). An important step towards bridging this gap without biologically implausible gradient backpropagation is the ELSM.

Enhanced Complexity

Although beneficial, certain performance-enhancing improvements may result in longer training times and data-dependent parameter adjustment, negating some of LSM’s intrinsic benefits.

Automating E/I Balance Optimization

By using astrocyte-modulated plasticity to learn synaptic weights, for example, it may be possible to automate the process of determining the ideal E/I balanced weight distribution.

Examining Additional Heterogeneity

Adding dendrites to neuron models and examining topological heterogeneity (differences in network structure) could enhance learning and further diversify liquid responses.

Robustness and Low-Resolution Weights

Two crucial topics for future research are a thorough robustness analysis and additional research on the usage of low-resolution weights (such as 4-bit) for hardware implementation.

Neuron Model Simplification

Another possible future step is to see if simpler neuron models, as opposed to the conductance-based LIF model employed in some studies, can produce results that are comparable.

Additional Details

Connection to Reservoir Computing: LSMs are one particular kind of reservoir computer. Echo State Networks belong to the same conceptual framework.

Implementation: LSMs are frequently implemented in software using Python and the Brian2 neural simulator.

Applications: Spiking Heidelberg Digits (SHD), TI-Alpha (a 26-word “alphabet set”), Google Speech Commands (GSC), and N-TIDIGITS (spoken digits recorded on neuromorphic hardware) are just a few of the speech data sets that have been used to assess LSMs for speech recognition. They have also been used for emotion recognition using EEG data and gesture recognition using radar.

Scalability: The size of an LSM’s reservoir affects its processing capacity. Scaling to a larger number of neurons can improve results and bring performance on par with SNNs trained using BPTT.

Learning Paradigms: According to research, online learning approaches typically result in the best performance for LSMs because they process data sample by sample and update parameters for every new instance, allowing for quicker learning in situations where data is changing or not yet completely available. This is in contrast to batch (offline) learning, which might lower recognition rates but may reduce complexity by supplying prior information.
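The sample-by-sample update described above can be sketched as a small softmax readout that adjusts its weights each time a new example arrives. The class name `OnlineReadout`, the learning rate, and the synthetic two-class Gaussian features are assumptions for this example; in an LSM the features would be liquid states or spike counts rather than random draws.

```python
import numpy as np

class OnlineReadout:
    """Softmax readout updated one sample at a time (stochastic gradient descent)."""

    def __init__(self, n_features, n_classes, lr=0.05):
        self.w = np.zeros((n_features + 1, n_classes))  # last row holds the bias
        self.lr = lr

    def _probs(self, x):
        z = np.append(x, 1.0) @ self.w
        z -= z.max()                                    # numerical stability
        p = np.exp(z)
        return p / p.sum()

    def predict(self, x):
        return int(np.argmax(self._probs(x)))

    def update(self, x, label):
        grad_out = self._probs(x)
        grad_out[label] -= 1.0                          # gradient of cross-entropy w.r.t. logits
        self.w -= self.lr * np.outer(np.append(x, 1.0), grad_out)

rng = np.random.default_rng(0)

def sample(n):
    """Draw n synthetic feature vectors from two shifted Gaussian classes."""
    labels = rng.integers(0, 2, size=n)
    x = rng.normal(size=(n, 5)) + np.where(labels[:, None] == 1, 1.5, -1.5)
    return x, labels

readout = OnlineReadout(n_features=5, n_classes=2)
x_stream, y_stream = sample(300)
for x, y in zip(x_stream, y_stream):
    readout.update(x, y)        # one update per arriving sample, no stored dataset

x_test, y_test = sample(200)
accuracy = np.mean([readout.predict(x) == y for x, y in zip(x_test, y_test)])
print(f"accuracy on fresh samples: {accuracy:.2f}")
```

A batch learner would instead collect all 300 samples first and fit the weights in one pass over the stored data.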