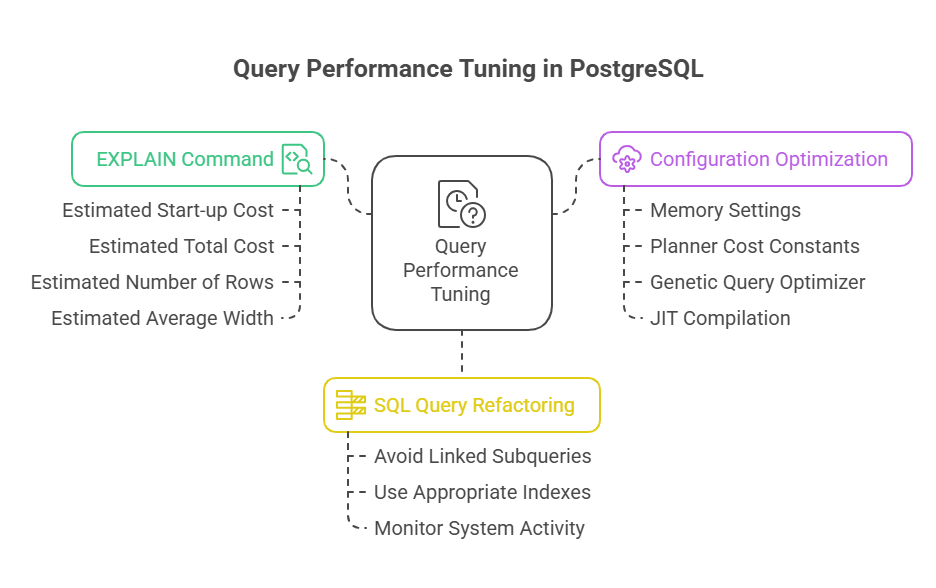

Query Performance Tuning

A multidimensional strategy is used in PostgreSQL query performance optimisation to optimise the database’s processing and execution of SQL statements, guaranteeing effective usage and quicker result retrieval. A cost-based optimiser creates an execution plan with the goal of minimising the estimated cost during the planning and optimisation phase, which is followed by the query executor processing the plan. PostgreSQL’s query processing is fundamentally structured, beginning with a rewrite phase for update, delete, and insert statements.

The EXPLAIN command, which shows the execution plan together with data scan methods (such as index scans or sequential scans), join methods, and estimated rows, is a key tool for comprehending and fine-tuning queries. By running the query and providing the actual run durations and row counts, EXPLAIN ANALYSE enables comparison with estimations to identify performance bottlenecks. EXPLAIN (ANALYSE, BUFFERS) provides additional information by reporting shared buffer hits, which show the amount of data that was previously in memory. Tools like pgAdmin and DBeaver also include EXPLAIN’s graphical results, which facilitate bottleneck visualisation.

Analyzing Query Performance with EXPLAIN

EXPLAIN is the main PostgreSQL query decipher and optimiser. The PostgreSQL planner’s execution plan for a query includes the join technique, expected row numbers, and data scanning methods (index or sequential scan).

Important details that EXPLAIN offers include:

Estimated start-up cost: In PostgreSQL’s query execution plans, the projected start-up cost is the amount of time needed before a plan node’s output phase may start. This figure appears first in the output of the EXPLAIN command, followed by the step’s total projected cost. These expenses, which are frequently dependent on the price of sequential disc page fetches, are quantified in arbitrary units.

Estimated total cost: The planner’s estimate of the overall amount of work needed, expressed in arbitrary units (usually disc page fetches), is known as the estimated total cost. The planner aims to reduce this figure.

Estimated number of rows: The anticipated number of rows that the plan node will produce. This indicates the selectivity of WHERE clauses, so it is not necessarily the number scanned.

Estimated average width: A specific plan node’s average size of tuples (rows), expressed in bytes, is known as the estimated average width in PostgreSQL’s EXPLAIN command output. The EXPLAIN output’s fourth value, usually enclosed in parenthesis, is this one.

For more in-depth understanding, EXPLAIN provides a number of choices.:

EXPLAIN (ANALYZE): Runs the query and returns the row counts and actual run times for every plan node, enabling comparison with estimated values. Additionally, it can display the sorting method (such as quicksort) and the amount of memory used for sorting and hashing.

EXPLAIN (BUFFERS): This option, when used in conjunction with ANALYSE, reports shared buffer usage (such as shared hit), showing the amount of data that was found in memory as opposed to the amount that required disc reads. A high hit_percentage signifies effective caching.

EXPLAIN (VERBOSE): A PostgreSQL EXPLAIN (VERBOSE) command displays a comprehensive query strategy for a SQL statement. This option adds columnar detail to the normal EXPLAIN output. EXPLAIN (ANALYSE, VERBOSE, BUFFERS) can offer column time and output information when paired with other options.

FORMAT {TEXT | XML | JSON | YAML}: The FORMAT {TEXT | XML | JSON | YAML} option enables output in machine-readable formats for additional analytical purposes.

Due to the fact that child steps feed into parent steps, execution plans are usually read from the most to the least indented node.

Code Example:

Let’s give a basic example to demonstrate. Initially, we make a table and add some information to it:

CREATE TABLE test_explain_1 (

id INT PRIMARY KEY,

name TEXT NOT NULL

);

INSERT INTO test_explain_1 SELECT n , md5(random()::text) FROM generate_series(1, 100000) AS foo(n);

ANALYZE test_explain_1;Output:

CREATE TABLE

INSERT 0 100000

ANALYZEThree distinct commands were successfully executed, according to the output from a PostgreSQL database that was supplied. The test_explain_1 table was successfully defined in the database schema, as first confirmed by the CREATE TABLE message. The successful addition of 100,000 new rows to the table is then confirmed by the INSERT 0 100000 line.

The 0 at the start of this output means that there was no data returned by the INSERT command itself, and the 100,000 represents the number of rows that were impacted by the operation. In order for the database’s query planner to produce effective execution plans for next queries, it is essential that the ANALYSE output confirm that statistical information about the data in the test_explain_1 table has been correctly gathered and updated.

Detecting and Optimizing Problems

Several tips can be used to identify issues with query plans:

Mismatch between estimated and actual row counts: Significant differences between the estimated and actual row counts suggest that the planner’s statistics are faulty, which results in a less-than-ideal plan. Particularly when join operations are involved, this can result in notable slowdowns.

In-memory vs. in-disk sort operations: If a sort operation (for ORDER BY, DISTINCT, UNION, EXCEPT) is carried out on disc rather than in memory, it denotes insufficient work_mem and may cause performance to lag.

Low buffer cache hit ratio: Low buffer cache hit ratio: When using EXPLAIN (BUFFERS), low shared hit numbers indicate that data is frequently fetched from disc rather than memory.

To maximise the performance of queries:

Maintain current statistics: Maintain current statistics: PostgreSQL’s query planner uses table content statistics from the ANALYSE and VACUUM ANALYSE commands. Run these commands frequently to guarantee precise cost estimates.

Optimize PostgreSQL configuration:

Memory settings: Depending on system and workload, modify work_mem (memory for sorting/hashing) and shared_buffers (memory for data caches). Indicating the amount of OS cache available for disc caching, effective_cache_size helps the planner.

Planner cost constants: Index scans can be preferred over sequential scans on fast storage, such as SSDs, by adjusting parameters like random_page_cost.

Genetic Query Optimizer (GEQO): GEQO decreases planning time by using a probabilistic search for queries with a lot of joins (default threshold 12 FROM items); however, it may produce less-than-ideal plans than an exhaustive search.

JIT Compilation: PostgreSQL 11 introduced JIT compilation, which uses LLVM to speed up tuple deforming and expression evaluation for lengthy CPU-bound analytical queries.

Refactor SQL queries:

Refactor SQL queries: Avoid frequent errors like linked subqueries and unnecessary DISTINCT or UNION when UNION ALL is enough. For aggregates, FILTER or CASE expressions may work better than subqueries.

Use appropriate indexes: The standard and all-purpose index is the B-tree. Certain data types and situations (such as text search and geospatial data) are better suited for other types, such as GiST and GIN. WHERE predicates benefit greatly from indexes on foreign keys and columns.

Monitor system activity: Understanding database behaviour, identifying performance problems, and maintaining system health all depend on tracking system activity in PostgreSQL. To enable thorough monitoring, PostgreSQL provides a robust suite of built-in tools, system views, and extensions in addition to support for common operating system utilities.

Conclusion

In summary, maximising query speed in PostgreSQL necessitates a thorough strategy that includes precise statistics, thoughtful query design, suitable indexing, and precisely calibrated memory and planner parameter settings. While other parameters like BUFFERS and VERBOSE offer deeper insights into cache and data access patterns, the EXPLAIN and EXPLAIN ANALYSE commands are essential for detecting performance issues since they reveal execution plans, cost estimates, and runtime behaviour.

The planner can make better judgements by choosing indexes that are appropriate for the nature of the workload, keeping up-to-date statistics with ANALYSE, and modifying settings like work_mem, shared_buffers, and random_page_cost. Efficiency is further increased by methods like SQL restructuring, GEQO for complex joins, and JIT compilation. Developers and DBAs may guarantee effective usage, spot bottlenecks early, and achieve consistently quicker query performance by regularly monitoring execution plans and system activities.