Frequency Encoding in Machine Learning

Data preprocessing is crucial in machine learning because it determines how well a model can learn and generalize. A common preprocessing challenge is handling categorical data: variables whose values are labels such as colors, types, or groups. Most machine learning algorithms operate on numbers, so categorical data must be converted to a numerical form first.

Several methods can encode categorical variables, including One-Hot Encoding, Label Encoding, and Frequency Encoding. For high-cardinality features, Frequency Encoding is often a practical choice. This article explains how frequency encoding works and discusses its advantages and disadvantages.

What is Frequency Encoding?

Frequency Encoding converts a categorical variable into numerical values by replacing each category with the frequency at which it occurs in the data. The technique assumes that how often a category appears may carry predictive power, so the model can learn from that statistic.

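In practice, this means counting the occurrences of each distinct category in the data and replacing every instance of a category with its count (or, in a common variant, with its count divided by the number of rows). The result is a numerical column in place of the categorical one.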
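As a small illustration of this definition, the sketch below encodes a made-up column of color labels using only the Python standard library. The data and variable names are assumptions chosen for the example, not part of any particular library.

```python
from collections import Counter

# Hypothetical toy column of color labels (illustrative data only).
colors = ["red", "blue", "red", "green", "red", "blue"]

# Count how often each distinct category occurs.
counts = Counter(colors)

# Count-based encoding: replace each label with its number of occurrences.
count_encoded = [counts[c] for c in colors]

# Relative-frequency variant: divide each count by the number of rows.
freq_encoded = [counts[c] / len(colors) for c in colors]

print(count_encoded)  # [3, 2, 3, 1, 3, 2]
```

With pandas, the same counts are commonly obtained with `Series.value_counts()` and applied with `Series.map()`.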

How Does Frequency Encoding Work?

Frequency encoding involves the following steps:

- Identification of Categorical Variables: First, identify which dataset columns are categorical and need encoding.

- Calculation of Category Frequencies: The frequency (or count) of each category in a categorical column is calculated.

- Replacement with Frequency Values: Once the frequencies are calculated, each occurrence of a category is replaced by that category's frequency, so the original string label becomes a number.

- Modeling with Encoded Data: Machine learning algorithms can use the converted dataset, which now has numerical values for the categorical feature.
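The steps above can be sketched on a tiny, made-up dataset. The rows, the column names (`city`, `plan`, `age`), and the rule "a column is categorical if all its values are strings" are assumptions for illustration; a real dataset may need an explicit list of categorical columns.

```python
from collections import Counter

# Hypothetical dataset: each row is a dict (illustrative values only).
rows = [
    {"city": "Paris", "plan": "basic", "age": 31},
    {"city": "Lyon", "plan": "pro", "age": 45},
    {"city": "Paris", "plan": "basic", "age": 27},
    {"city": "Nice", "plan": "basic", "age": 52},
    {"city": "Paris", "plan": "pro", "age": 38},
]

# Step 1: treat columns whose values are all strings as categorical
# (an assumption that suits this toy data).
categorical_cols = [
    col for col in rows[0] if all(isinstance(r[col], str) for r in rows)
]

# Step 2: count the occurrences of each category, per column.
frequencies = {col: Counter(r[col] for r in rows) for col in categorical_cols}

# Step 3: replace each category with its count; other columns stay unchanged.
encoded_rows = [
    {col: (frequencies[col][val] if col in frequencies else val)
     for col, val in r.items()}
    for r in rows
]

# Step 4: every value is now numeric, so the rows can be passed to a model.
for r in encoded_rows:
    print(r)
```

The first encoded row is `{'city': 3, 'plan': 3, 'age': 31}`, because "Paris" and "basic" each occur three times.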

Why Use Frequency Encoding?

There are several reasons why Frequency Encoding can be a valuable encoding technique:

- Handling High Cardinality: Encoding high-cardinality categorical variables (those with many unique categories) is difficult. With many categories, One-Hot Encoding can cause the feature count to explode, creating a sparse matrix that is computationally expensive. Frequency Encoding keeps the dataset's dimensionality unchanged, which makes it more scalable.

- Capturing Distribution Information: The encoding reflects the frequency distribution of the categories, with more frequent categories receiving higher values. Because frequently occurring categories may influence the target variable differently from rare ones, these values can expose a trend in the data.

- Efficiency and Simplicity: Frequency Encoding is simple and cheap to compute. Unlike One-Hot Encoding, it adds no extra columns, and unlike Target Encoding, it does not need the target variable, so encoding categorical variables is straightforward and resource-efficient.

- Works Well with Certain Models: Decision trees and ensemble approaches like Random Forests and Gradient Boosting Machines can naturally handle numerical representations of categorical data. Since they can recognize category distribution patterns, these models may benefit from encoded frequencies.

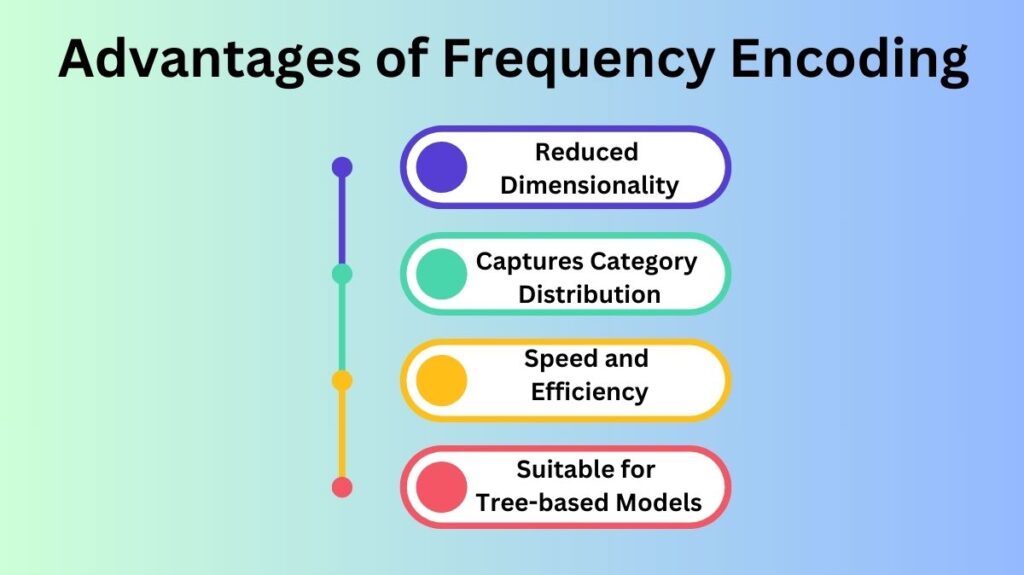

Advantages of Frequency Encoding

- Reduced Dimensionality: Frequency Encoding avoids the dimensionality issue of One-Hot Encoding. With many categories, One-Hot Encoding generates one new feature per category, creating high-dimensional data. Frequency Encoding does not increase dimensionality, so the dataset stays compact and manageable.

- Captures Category Distribution: Frequency Encoding preserves the statistical distribution of the categorical variable. The encoded values show how often each category appears in the dataset, which can reveal its structure. High-frequency categories may have more impact on the target variable.

- Speed and Efficiency: Frequency Encoding is fast to compute: it needs one pass over the column to count categories and one to replace them, and it avoids the many binary columns that One-Hot Encoding creates. This is useful for large datasets or when time and resources are limited.

- Suitable for Tree-based Models: Decision Trees, Random Forests, and Gradient Boosting Machines split on feature thresholds and are generally robust to the scale of input features, so they can use the encoded frequency values directly, without further scaling.
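To make the dimensionality point concrete, the following sketch compares the number of columns produced by a hand-written One-Hot Encoding with the single column produced by Frequency Encoding. The product IDs are synthetic and the one-hot construction is a plain-Python stand-in for what a library encoder would do.

```python
from collections import Counter

# Hypothetical high-cardinality column: 200 product IDs, where ID k
# appears (k % 10 + 1) times, giving 1,100 rows in total.
product_ids = [f"P{k:03d}" for k in range(200) for _ in range(k % 10 + 1)]

categories = sorted(set(product_ids))

# One-Hot Encoding: one binary column per distinct category.
index = {cat: j for j, cat in enumerate(categories)}
one_hot = [
    [1 if index[p] == j else 0 for j in range(len(categories))]
    for p in product_ids
]

# Frequency Encoding: a single numeric column, whatever the cardinality.
counts = Counter(product_ids)
freq_col = [counts[p] for p in product_ids]

print(len(one_hot[0]))  # 200 columns for the one-hot version
print(len(freq_col))    # 1100 values in one column
```

The one-hot matrix grows with every new category, while the frequency-encoded column keeps the same shape.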

Disadvantages of Frequency Encoding

- Risk of Overfitting: Frequency Encoding may contribute to overfitting, especially for rare categories. The frequencies of infrequent categories are estimated from only a few rows, so they can be noisy and unreliable predictors. Overfitting occurs when the model learns noise or irrelevant patterns from the training data, which hurts generalization.

- Loss of Semantic Meaning: Frequency Encoding ignores the meaning of categories and the relationships between them. Some categories have a natural hierarchy or relationship, but Frequency Encoding assigns numerical values based only on frequency. As a result, relationships between categories may be lost, and with them some information.

- Handling of Unseen Categories: Frequency Encoding may struggle if a model encounters a new category in the test set or during deployment. Because the encoding relies on frequencies computed from the training data, a category that was not present during training has no frequency value. Such categories typically need a fallback, for example a default value, before the model can make predictions on new data.

- Uninterpretable Values: Unlike One-Hot Encoding, which assigns each category its own binary vector, Frequency Encoding produces numerical values that may be difficult to interpret. Each value only reflects how often a category occurs in the dataset, which may have no meaning in the problem domain.
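One possible way to deal with the unseen-category problem described above is to learn the counts on the training data only and fall back to a default value for anything else. The `FrequencyEncoder` class below is a hypothetical helper written for this example, and the default of 0 is an assumption; other fallbacks (such as 1 or a missing-value marker) may suit a given model better.

```python
from collections import Counter


class FrequencyEncoder:
    """Minimal sketch: learn counts on training data, reuse them later."""

    def __init__(self, unseen_value=0):
        # Value assigned to categories absent from the training data
        # (0 is an assumption made for this example).
        self.unseen_value = unseen_value
        self.counts_ = {}

    def fit(self, values):
        # Learn the count of each category from the training column.
        self.counts_ = Counter(values)
        return self

    def transform(self, values):
        # Look up each category; unseen ones receive the fallback value.
        return [self.counts_.get(v, self.unseen_value) for v in values]


train = ["cat", "dog", "cat", "bird", "cat", "dog"]
test = ["dog", "fish", "cat"]  # "fish" never appears in train

encoder = FrequencyEncoder().fit(train)
print(encoder.transform(test))  # [2, 0, 3]
```

Fitting on the training split alone also keeps information from the test data out of the encoding.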

When to Use Frequency Encoding?

Frequency Encoding is best suited for the following situations:

- High Cardinality: Frequency Encoding avoids the dimensionality problems that arise with categorical variables that have many unique categories. It is most helpful when the categorical feature contains many distinct, often rare, categories.

- Tree-based Models: Decision-tree-based algorithms such as Random Forests and XGBoost work directly with numerical features and can exploit the frequency information in the encoded values.

- When Frequency is Predictive: Frequency Encoding may be useful if a category's frequency or distribution is expected to affect the target variable, for example when common and rare categories tend to be associated with different outcomes.
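A quick, informal way to check whether frequency looks predictive is to group the target by the encoded value and compare the averages. The sketch below does this on made-up data; the merchant labels and target values are invented for illustration and do not come from a real dataset.

```python
from collections import Counter, defaultdict

# Hypothetical transactions: a merchant label and a binary target per row.
merchants = ["A", "A", "A", "A", "B", "B", "B", "C", "D", "E"]
target = [0, 0, 0, 0, 0, 0, 1, 1, 1, 0]

# Frequency-encode the merchant column.
counts = Counter(merchants)
encoded = [counts[m] for m in merchants]

# Group the target values by encoded frequency.
targets_by_freq = defaultdict(list)
for freq, y in zip(encoded, target):
    targets_by_freq[freq].append(y)

# Mean target per frequency value; C, D, and E each occur once,
# so they share the encoded value 1.
rate_by_freq = {
    freq: sum(ys) / len(ys) for freq, ys in sorted(targets_by_freq.items())
}

for freq, rate in rate_by_freq.items():
    print(f"frequency {freq}: mean target {rate:.2f}")
```

In this invented sample the mean target falls as frequency rises, which is the kind of pattern that would suggest the encoded feature is worth testing in a model. A flat pattern would suggest the opposite.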

Conclusion

Frequency Encoding is a simple way to turn categorical data into numerical values by replacing each category with its frequency. It is useful for high-cardinality features and leaves the dimensionality of the data unchanged. Its drawbacks, namely the risk of overfitting, the loss of semantic meaning, and the handling of unseen categories, must be taken into account. By exposing the distribution of categories in the data, Frequency Encoding can improve model performance, especially with tree-based methods.

Like any data preprocessing method, Frequency Encoding must be tested on the dataset and model at hand. Try alternative encoding methods and compare them by validated model performance to find the best one for your machine learning pipeline.