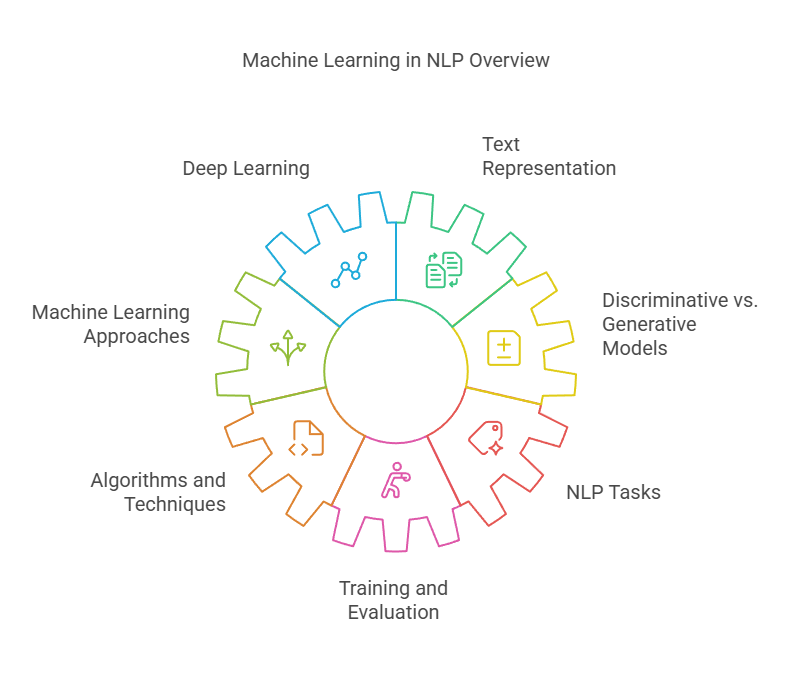

Machine Learning in NLP

Machine learning uses statistical models to uncover hidden patterns in data and to make classifications or predictions without being explicitly programmed for each case. In NLP, text is first converted into numerical features using techniques such as Bag of Words and TF-IDF. Feature engineering can then derive new features from these. Algorithms such as Random Forests, Support Vector Machines (SVM), and Naive Bayes are then trained on the resulting features.
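The Bag of Words and TF-IDF conversion can be illustrated with a minimal pure-Python sketch. It makes two assumptions. First, tokenisation is simple lowercase whitespace splitting. Second, it uses the unsmoothed weighting tf × log(N / df), which is one of several common variants; libraries often use smoothed versions. The three documents are hypothetical.

```python
import math
from collections import Counter

def tokenize(text):
    # Assumption: naive lowercase whitespace tokenisation
    return text.lower().split()

def bag_of_words(docs):
    """Return the sorted vocabulary and one raw-count vector per document."""
    tokenized = [tokenize(d) for d in docs]
    vocab = sorted({tok for toks in tokenized for tok in toks})
    vectors = []
    for toks in tokenized:
        counts = Counter(toks)
        vectors.append([counts[w] for w in vocab])
    return vocab, vectors

def tf_idf(docs):
    """Weight raw counts by inverse document frequency: tf * log(N / df)."""
    vocab, counts = bag_of_words(docs)
    n_docs = len(docs)
    # Document frequency: how many documents contain each vocabulary word
    df = [sum(1 for vec in counts if vec[j] > 0) for j in range(len(vocab))]
    idf = [math.log(n_docs / d) for d in df]
    weighted = [[tf * w for tf, w in zip(vec, idf)] for vec in counts]
    return vocab, weighted

docs = ["the cat sat", "the dog sat", "the cat ran"]
vocab, bow = bag_of_words(docs)
print(vocab)  # ['cat', 'dog', 'ran', 'sat', 'the']
print(bow)    # [[1, 0, 0, 1, 1], [0, 1, 0, 1, 1], [1, 0, 1, 0, 1]]
_, weights = tf_idf(docs)
print(weights)  # 'the' appears in every document, so its weight is 0
```

Because "the" occurs in every document, TF-IDF gives it zero weight. Rarer, more informative words such as "dog" receive the largest weights.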

This approach adapts to a wide variety of NLP applications for several reasons:

- It can handle large volumes of data.
- It can identify underlying relationships between words or phrases.
- It can learn from multiple datasets.
- It can adjust to changing language patterns.

However, its performance depends heavily on the quality and quantity of the training data. Simple, conventional models may also miss intricate correlations.

Machine learning is essential to contemporary Natural Language Processing (NLP).

Below is a summary of machine learning's role and techniques in NLP:

Central Role of Machine Learning in Modern NLP

Modern methods for processing natural language rely mostly on machine learning. It offers a variety of general techniques for building complex programs from examples rather than writing them by hand. The field focuses on learning from data in four main ways:

- information extraction
- pattern recognition
- prediction of missing information
- construction of probabilistic models of the data

Machine learning is largely responsible for the growing importance of the statistical approach to NLP. It is also what allows machines to interpret human language in the form of text data. Much of current NLP research can be regarded as applied machine learning.

Relationship with Deep Learning

Deep learning is a subfield of machine learning, which itself falls under the artificial intelligence umbrella. It enables systems to learn patterns and representations automatically from data. It encompasses specific architectures such as:

- encoder-decoder models
- convolutional neural networks (CNNs)
- long short-term memory networks (LSTMs)
- gated recurrent units (GRUs)
- Transformers (e.g. BERT, GPT, ALBERT)

Neural networks in particular are a powerful class of machine learning models for analysing natural language data. Deep learning techniques are now widely used for POS tagging and many other NLP applications.
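Transformers such as BERT and GPT are built around attention. Their core operation, scaled dot-product attention, can be sketched in pure Python as below. This is a deliberately reduced version: a single head, no masking, and no learned query/key/value projections, all of which real Transformer layers add. The token vectors are hypothetical.

```python
import math

def softmax(scores):
    # Subtract the maximum before exponentiating for numerical stability
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention without masking: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Score every key against this query, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # non-negative and sums to 1
        # The output is the attention-weighted average of the value vectors
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Hypothetical 2-d token vectors used as queries, keys and values (self-attention)
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(scaled_dot_product_attention(tokens, tokens, tokens))
```

Each output vector mixes information from every token. The mix is weighted towards tokens whose keys best match the query.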

Text Representation for Machine Learning

Machines and algorithms cannot process words, phrases, or characters directly; they require numerical input. Feature engineering of text data is the process of transforming raw text into a numerical, machine-readable representation. The choice of representation often has a large effect on how well machine learning and deep learning algorithms perform. Common representations range from sparse count-based vectors, such as Bag of Words and TF-IDF, to dense word embeddings.
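Word embeddings map each token to a dense vector, and related words end up close together. A minimal sketch uses a hypothetical, hand-written 3-dimensional embedding table; real embeddings are learned from large corpora and have far more dimensions. It shows the lookup step and how cosine similarity compares the resulting vectors. The zero vector for unknown words is also an assumption made for illustration.

```python
import math

# Hypothetical toy embeddings (not learned); real ones have hundreds of dimensions
EMBEDDINGS = {
    "king":  [0.8, 0.6, 0.1],
    "queen": [0.7, 0.7, 0.1],
    "apple": [0.1, 0.2, 0.9],
}
UNK = [0.0, 0.0, 0.0]  # assumed fallback vector for out-of-vocabulary words

def embed(tokens):
    """Replace each token with its dense vector, using UNK for unknown words."""
    return [EMBEDDINGS.get(tok, UNK) for tok in tokens]

def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

king, queen, apple = embed(["king", "queen", "apple"])
print(cosine(king, queen), cosine(king, apple))  # king is closer to queen than to apple
```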

Types of Machine Learning Approaches

Machine learning approaches fall into supervised, unsupervised, and semi-supervised categories.

- Supervised Learning: Models are trained on labelled data. POS tagging, text classification, and Named Entity Recognition (NER) are typical supervised tasks in natural language processing. Many other NLP tasks are also framed as supervised learning problems.

- Unsupervised Learning: Models learn from unlabelled data, without direct supervision, to discover representations, patterns, or structure. For example, word sense induction uses an unsupervised technique such as Expectation-Maximization (EM) to group the occurrences of a word into senses.

- Semi-Supervised Learning: Both labelled and unlabelled data are used for training.

Discriminative vs. Generative Models

Learning algorithms can be generative or discriminative.

- Generative models describe the joint distribution of inputs and labels. Naive Bayes is a generative, probabilistic technique that estimates this joint probability distribution.
- Discriminative models learn the decision directly. Support Vector Machines (SVMs) and perceptrons are discriminative, error-driven algorithms whose training objectives are tied directly to the errors made on the training set.

The same distinction applies to sequence labelling tasks such as POS tagging. Hidden Markov Models (HMMs) are generative. Maximum Entropy Markov Models (MEMMs) and Conditional Random Fields (CRFs) are discriminative: they directly model the probability of tags given the input.
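The contrast can be made concrete with a minimal pure-Python sketch of one model of each kind. The first is a multinomial Naive Bayes classifier (generative, with add-alpha smoothing) that scores log P(c) plus the sum of log P(w | c). The second is a binary perceptron (discriminative, error-driven) that changes its weights only after a mistake. The four labelled reviews are hypothetical. Leaving out a bias term in the perceptron is a simplification chosen for brevity.

```python
import math
from collections import Counter, defaultdict

# Hypothetical labelled training data: (tokens, label)
TRAIN = [
    ("great fun film".split(), "pos"),
    ("great acting".split(), "pos"),
    ("boring plot".split(), "neg"),
    ("boring dull film".split(), "neg"),
]

def train_naive_bayes(data, alpha=1.0):
    """Generative: estimate log P(c) and log P(w | c) from counts with add-alpha smoothing."""
    class_counts = Counter(label for _, label in data)
    word_counts = defaultdict(Counter)
    for tokens, label in data:
        word_counts[label].update(tokens)
    vocab = {w for tokens, _ in data for w in tokens}
    priors = {c: math.log(n / len(data)) for c, n in class_counts.items()}
    likelihoods = {}
    for c in class_counts:
        total = sum(word_counts[c].values())
        likelihoods[c] = {
            w: math.log((word_counts[c][w] + alpha) / (total + alpha * len(vocab)))
            for w in vocab
        }
    return priors, likelihoods

def predict_naive_bayes(model, tokens):
    priors, likelihoods = model
    # log P(c) + sum of log P(w | c): the log joint probability under the
    # independence assumption; words unseen in training are skipped
    scores = {
        c: priors[c] + sum(likelihoods[c][w] for w in tokens if w in likelihoods[c])
        for c in priors
    }
    return max(scores, key=scores.get)

def train_perceptron(data, epochs=10):
    """Discriminative, error-driven: weights change only on misclassified examples."""
    weights = Counter()  # feature -> weight; "pos" is +1, "neg" is -1
    for _ in range(epochs):
        for tokens, label in data:
            y = 1 if label == "pos" else -1
            score = sum(weights[w] for w in tokens)
            if y * score <= 0:  # wrong side of (or on) the decision boundary
                for w in tokens:
                    weights[w] += y
    return weights

def predict_perceptron(weights, tokens):
    return "pos" if sum(weights[w] for w in tokens) > 0 else "neg"

nb_model = train_naive_bayes(TRAIN)
perceptron_weights = train_perceptron(TRAIN)
print(predict_naive_bayes(nb_model, "great film".split()))
print(predict_perceptron(perceptron_weights, "dull plot".split()))
```

Naive Bayes needs only one pass of counting. The perceptron loops over the data repeatedly and corrects itself whenever it errs, which is what "error-driven" means in practice.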

Specific Machine Learning Algorithms and Techniques

- Statistical Methods: Natural language processing tasks frequently rely on basic statistical approaches, such as n-gram language models and Maximum Likelihood Estimation (MLE).

- Perceptron: A basic, error-driven linear classifier. It has limitations; for example, it is guaranteed to converge only when the training data is linearly separable. Even so, it is commonly studied as a method for text classification.

- Naive Bayes: Naive Bayes is effective and can combine evidence from many features. For these reasons it is widely used when data is represented as a set of attributes.

- Support Vector Machines (SVM): A discriminative approach that learns a maximum-margin decision boundary between classes.

- Logistic Regression: Another discriminative technique, which models the probability of a class given the input features.

- Hidden Markov Models (HMMs): Simple and very effective for POS tagging; also used in speech recognition.

- Conditional Random Fields (CRFs): Used for NER and other sequence labelling tasks.

- Neural Networks / Deep Learning: The simplest deep neural networks are multi-layer perceptrons. More specialised architectures are used to solve a wide range of NLP problems; these include CNNs, RNNs, LSTMs, GRUs, encoder-decoder models, and Transformers.

- Attention Mechanism: Neural network models use an attention mechanism to focus on the relevant parts of the input sequence. This improves performance on tasks such as machine translation.
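N-gram language modelling with Maximum Likelihood Estimation, mentioned under Statistical Methods, can be sketched as a bigram model. Each probability is simply a ratio of counts: P(w_i | w_{i-1}) = count(w_{i-1}, w_i) / count(w_{i-1}). The toy corpus and the `<s>` / `</s>` sentence-boundary markers are illustrative choices. The sketch uses no smoothing, so any unseen bigram gets probability 0.

```python
from collections import Counter

def train_bigram_mle(sentences):
    """MLE bigram estimates: P(cur | prev) = count(prev, cur) / count(prev)."""
    bigrams = Counter()
    history = Counter()
    for sent in sentences:
        tokens = ["<s>"] + sent.split() + ["</s>"]
        for prev, cur in zip(tokens, tokens[1:]):
            bigrams[(prev, cur)] += 1
            history[prev] += 1
    return {pair: n / history[pair[0]] for pair, n in bigrams.items()}

def sentence_probability(model, sentence):
    """Product of bigram probabilities; unseen bigrams give 0 under plain MLE."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    p = 1.0
    for pair in zip(tokens, tokens[1:]):
        p *= model.get(pair, 0.0)
    return p

corpus = ["I like NLP", "I like cats", "you like NLP"]  # hypothetical corpus
model = train_bigram_mle(corpus)
print(sentence_probability(model, "I like NLP"))   # (2/3) * 1 * (2/3) * 1, about 0.444
print(sentence_probability(model, "cats like I"))  # 0.0: "<s> cats" never occurs
```

The zero probability in the last line is the usual motivation for smoothing techniques in practical n-gram models.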

Machine Learning in NLP Tasks

Machine learning is applied to a wide variety of NLP tasks:

- Text Classification.

- Part-of-Speech (POS) Tagging.

- Named Entity Recognition (NER).

- Syntactic Analysis (Parsing), including dependency parsing. Statistical parsing is a key area.

- Machine Translation. Statistical Machine Translation (SMT) involves finding the most likely translation using probabilistic models. Neural Machine Translation (NMT) uses encoder-decoder architectures and attention mechanisms.

- Word Sense Disambiguation.

- Information Extraction.

- Question Answering.

- Sentiment Analysis.

- Text Summarization.

- Speech Recognition.

- Chatbots.

- Sequence Tagging and Segmentation.

- Text Similarity.
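Several of these tasks, such as POS tagging and other sequence tagging, can be handled with the HMMs described earlier. Tagging a sentence then means finding the most probable tag sequence, which is done with the Viterbi algorithm. The minimal sketch below uses an invented three-tag HMM; all start, transition, and emission probabilities are hypothetical. A real tagger would estimate them from a tagged corpus. The small `floor` value that stands in for zero probabilities is also an assumption, used to keep the log-space arithmetic finite.

```python
import math

TAGS = ["DET", "NOUN", "VERB"]
# Hypothetical HMM parameters
START = {"DET": 0.6, "NOUN": 0.3, "VERB": 0.1}            # P(first tag)
TRANS = {                                                 # P(tag | previous tag)
    "DET":  {"DET": 0.05, "NOUN": 0.9, "VERB": 0.05},
    "NOUN": {"DET": 0.1, "NOUN": 0.3, "VERB": 0.6},
    "VERB": {"DET": 0.5, "NOUN": 0.3, "VERB": 0.2},
}
EMIT = {                                                  # P(word | tag)
    "DET":  {"the": 0.7, "a": 0.3},
    "NOUN": {"dog": 0.4, "cat": 0.4, "runs": 0.2},
    "VERB": {"runs": 0.8, "sees": 0.2},
}

def viterbi(words, floor=1e-12):
    """Return the most probable tag sequence for `words`, computed in log space."""
    def lp(p):
        return math.log(p if p > 0 else floor)  # floor avoids log(0)
    # best[t] = (log prob of the best path ending in tag t, that path)
    best = {t: (lp(START[t]) + lp(EMIT[t].get(words[0], 0.0)), [t]) for t in TAGS}
    for word in words[1:]:
        new_best = {}
        for t in TAGS:
            # Choose the previous tag that maximises path score + transition into t
            prev = max(TAGS, key=lambda s: best[s][0] + lp(TRANS[s][t]))
            score = best[prev][0] + lp(TRANS[prev][t]) + lp(EMIT[t].get(word, 0.0))
            new_best[t] = (score, best[prev][1] + [t])
        best = new_best
    return max(best.values(), key=lambda item: item[0])[1]

print(viterbi("the dog runs".split()))  # ['DET', 'NOUN', 'VERB']
```

Because only the best path into each tag is kept at every step, the cost grows linearly with sentence length rather than exponentially.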

Training and Evaluation

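Machine learning models in NLP must be trained, typically with supervised or unsupervised techniques. Evaluating system performance is an essential component, and it takes two forms:

- Intrinsic evaluation measures a component in isolation, for example tagging accuracy on held-out test data.
- Extrinsic evaluation measures how much the component improves a downstream application.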
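Intrinsic evaluation of a classifier often reports accuracy together with precision, recall, and F1 for a chosen positive class. These are computed by comparing predictions against gold labels on held-out data. A minimal sketch follows; the gold and predicted label lists are hypothetical.

```python
def evaluate(gold, predicted, positive="pos"):
    """Accuracy plus precision, recall and F1 for one positive class."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must have the same length")
    pairs = list(zip(gold, predicted))
    tp = sum(1 for g, p in pairs if p == positive and g == positive)
    fp = sum(1 for g, p in pairs if p == positive and g != positive)
    fn = sum(1 for g, p in pairs if p != positive and g == positive)
    accuracy = sum(1 for g, p in pairs if g == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

gold = ["pos", "pos", "neg", "neg", "pos"]  # hypothetical gold labels
pred = ["pos", "neg", "neg", "pos", "pos"]  # hypothetical system output
print(evaluate(gold, pred))  # accuracy 0.6; precision, recall and F1 all 2/3
```

Precision and recall are reported separately because accuracy alone can look high on imbalanced data even when the positive class is mostly missed.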

In essence, machine learning provides the fundamental algorithms and methodologies for building systems that understand, analyse, and generate human language. The statistical and deep learning approaches in particular do this by identifying patterns in data.