Different Types of Tokenization

Word Tokenization

The most common type of tokenization is word tokenization. Using delimiters (characters such as “,” or “;”), it divides the data into individual words at natural breaks, such as pauses in speech or spaces in text. Although it is the most straightforward way to break speech or text into its constituent parts, this approach has several limitations.

Word tokenization struggles with unknown, or out-of-vocabulary (OOV), words. This is often handled by replacing every unknown word with a single token that marks it as unknown. This is a crude solution, since five “unknown” tokens could stand for the same word repeated five times or for five entirely different words.
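The unknown-token substitution described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production tokenizer: the `<UNK>` placeholder, the tiny vocabulary, and the made-up words “zorble” and “quibble” are all assumptions chosen for the example.

```python
import re

UNK = "<UNK>"  # hypothetical placeholder token for out-of-vocabulary words


def word_tokenize(text):
    """Split text into word tokens and standalone punctuation marks."""
    # \w+ matches a run of word characters; [^\w\s] matches one punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)


def replace_oov(tokens, vocabulary):
    """Map every token that is missing from the vocabulary to UNK."""
    return [tok if tok.lower() in vocabulary else UNK for tok in tokens]


# Toy vocabulary (an assumption for this example).
vocabulary = {"the", "cat", "sat", "on", "mat", "."}

tokens = word_tokenize("The zorble sat on the quibble.")
mapped = replace_oov(tokens, vocabulary)

print(tokens)  # ['The', 'zorble', 'sat', 'on', 'the', 'quibble', '.']
print(mapped)  # ['The', '<UNK>', 'sat', 'on', 'the', '<UNK>', '.']
```

After the mapping, the two different unknown words are indistinguishable, which is exactly the information loss described above.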

The accuracy of word tokenization depends on the vocabulary it is trained with. The amount of vocabulary loaded must strike a balance between accuracy and efficiency. Although adding every word from a dictionary might improve an NLP model’s accuracy, it is often not the most efficient approach. This is particularly true for models trained for specialised applications. Word tokenization works best for languages such as English, which have clear word boundaries. For instance:

Input: "Tokenization is an important NLP task."

Output: ["Tokenization", "is", "an", "important", "NLP", "task", "."]

Character Tokenization

Character tokenization was developed to solve some of the problems associated with word tokenization. Rather than dividing text into words, it breaks text down entirely into characters. This allows the tokenization process to preserve information about OOV words that word tokenization cannot.

Because the size of the “vocabulary” is limited to the number of characters the language requires, character tokenization does not suffer from the same vocabulary problems as word tokenization. A character tokenization vocabulary for English, for instance, would need only around 26 letters (more if case, digits, and punctuation are counted).

Character tokenization resolves OOV problems, but it has drawbacks of its own. Dividing even simple sentences into characters rather than words makes the output much longer. The sentence “what restaurants are nearby,” for example, is divided into four tokens using word tokenization. Character tokenization, in contrast, divides it into 24 tokens (not counting spaces), a sixfold increase in the number of tokens.
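The token counts above can be checked with a short Python sketch. It assumes that whitespace is discarded rather than kept as a token, which is the convention that yields 24 character tokens.

```python
sentence = "what restaurants are nearby"

# Word tokenization: split on whitespace.
word_tokens = sentence.split()

# Character tokenization: one token per character, ignoring spaces
# (an assumption; some tokenizers keep whitespace as a token).
char_tokens = [ch for ch in sentence if not ch.isspace()]

print(len(word_tokens))                     # 4
print(len(char_tokens))                     # 24
print(len(char_tokens) / len(word_tokens))  # 6.0
```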

Character tokenization also adds a further step: understanding the relationship between the characters and the meaning of the words. It can certainly support additional inferences; for example, the sentence above contains five “a” tokens. But this approach moves one step further away from NLP’s goal of interpreting meaning. Because it divides the text into individual characters, it is especially helpful for languages without clear word boundaries and for tasks such as spelling correction that require fine-grained analysis. For example, “NLP” would be tokenized as follows:

["N", "L", "P"]

Subword Tokenization

Similar to word tokenization, subword tokenization uses linguistic rules to break individual words down further. Splitting off affixes is one of its main tools. Because prefixes, suffixes, and infixes change the inherent meaning of a word, they can also help programs understand a word’s function. This is particularly helpful for out-of-vocabulary words, since identifying an affix can give a program insight into how an unknown word functions.

The subword model identifies these subwords and breaks words that contain them into separate parts. The sentence “What is the tallest building?” is broken into “what,” “is,” “the,” “tall,” “est,” “build,” and “ing.” By dividing text into units that are larger than a single character but smaller than a complete word, this strikes a balance between word and character tokenization. For instance, “Chatbots” may be tokenized as:

["Chat", "bots"]

Subword tokenization is particularly helpful for languages that form words by combining smaller units and for handling words that are not in the NLP task’s vocabulary.
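One simple way to produce splits like these is greedy longest-match-first segmentation against a fixed subword vocabulary. The sketch below is illustrative only: the vocabulary is hand-picked for the examples in this section (real subword vocabularies are learned from data), input is assumed to be lowercase, and the `<UNK>` fallback is a hypothetical convention.

```python
def subword_tokenize(word, vocabulary, unk="<UNK>"):
    """Greedily split a word into the longest vocabulary pieces, left to right."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate piece until it matches a vocabulary entry.
        while end > start and word[start:end] not in vocabulary:
            end -= 1
        if end == start:
            # No piece matches at this position; treat the whole word as unknown.
            return [unk]
        pieces.append(word[start:end])
        start = end
    return pieces


# Hand-picked toy vocabulary (an assumption for this example).
vocabulary = {"chat", "bots", "tall", "est", "build", "ing"}

print(subword_tokenize("chatbots", vocabulary))  # ['chat', 'bots']
print(subword_tokenize("tallest", vocabulary))   # ['tall', 'est']
print(subword_tokenize("building", vocabulary))  # ['build', 'ing']
print(subword_tokenize("zebra", vocabulary))     # ['<UNK>']
```

Because the pieces “build” and “ing” are in the vocabulary, a word such as “building” can be represented even if the full word was never seen.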

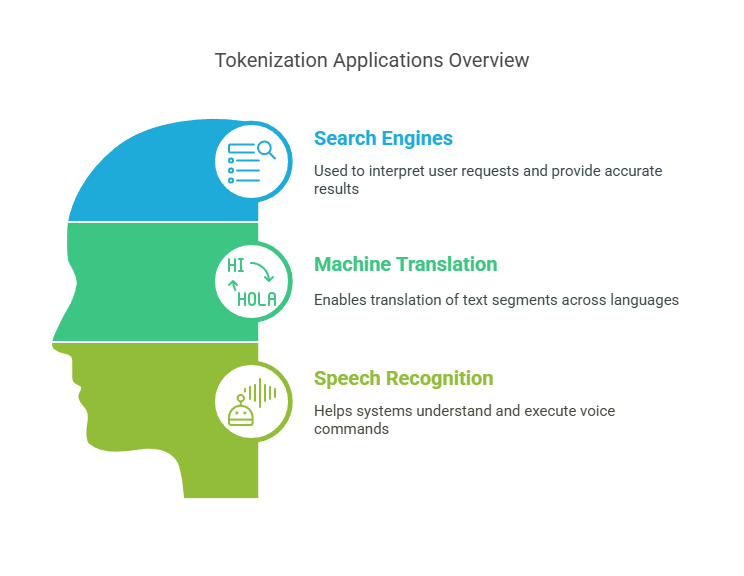

Tokenization Use Cases

There are several applications where tokenization is essential, such as:

Search Engines: Search engines use tokenization to interpret user queries. By decomposing a query into tokens, they can match relevant content more quickly and return accurate results.

Machine Translation: In order to translate sentences across languages, programs such as Google Translate use tokenization. Tokenization allows text segments to be translated and rebuilt in the target language without losing meaning.

Speech Recognition: Assistants such as Siri and Alexa tokenize speech to understand it. When a user speaks a command, it is first converted into text and then tokenized, allowing the system to interpret and correctly carry out the command.
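To make the search-engine use case listed above concrete, here is a minimal sketch of token-based matching with an inverted index. The three documents and the function names `build_index` and `search` are invented for illustration; real search engines add ranking, stemming, and much more.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and extract word tokens."""
    return re.findall(r"\w+", text.lower())


def build_index(documents):
    """Map each token to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index


def search(index, query):
    """Return the ids of documents that contain every token of the query."""
    token_sets = [index.get(tok, set()) for tok in tokenize(query)]
    if not token_sets:
        return set()
    return set.intersection(*token_sets)


# Toy document collection (an assumption for this example).
documents = {
    1: "Italian restaurants nearby",
    2: "Nearby parks and trails",
    3: "Best Italian recipes",
}
index = build_index(documents)

print(search(index, "nearby restaurants"))  # {1}
print(search(index, "italian"))             # {1, 3}
print(search(index, "sushi"))               # set()
```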

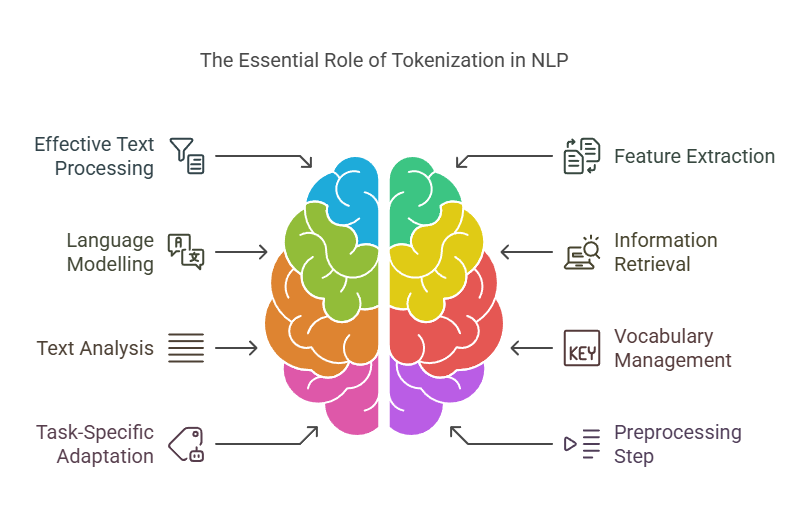

Need for Tokenization

In text processing and natural language processing (NLP), tokenization is an essential step for a number of reasons.

Effective Text Processing: By breaking raw text into smaller units, tokenization makes it easier to handle for processing and analysis.

Feature Extraction: Machine learning models can use tokens as features to represent text data numerically.

Language Modelling: In NLP, tokenization makes it easier to create structured representations of language, which is helpful for tasks like language modelling and text generation.

Information Retrieval: Tokenization is crucial for indexing and searching in systems that store and retrieve information based on words or phrases.

Text Analysis: NLP tasks such as sentiment analysis and named entity recognition rely on tokenization to determine the function and context of individual words in a sentence.

Vocabulary Management: Tokenization aids in vocabulary management by producing a list of unique tokens that represent words in the collection.

Task-Specific Adaptation: Because tokenization can be tailored to the requirements of a specific NLP task, it is well suited to applications like summarization and machine translation.

Preprocessing Step: This crucial preprocessing stage converts raw text into a format suitable for further computational and statistical analysis.
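Two of the points above, vocabulary management and feature extraction, can be illustrated together with a small bag-of-words sketch. The two-sentence corpus and the helper names are assumptions made for the example.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and extract word tokens."""
    return re.findall(r"\w+", text.lower())


def build_vocabulary(corpus):
    """Assign an index to each unique token, in sorted order."""
    unique = sorted({tok for text in corpus for tok in tokenize(text)})
    return {tok: i for i, tok in enumerate(unique)}


def bag_of_words(text, vocabulary):
    """Represent text as a vector of token counts over the vocabulary."""
    counts = Counter(tokenize(text))
    vector = [0] * len(vocabulary)
    for tok, n in counts.items():
        if tok in vocabulary:  # tokens outside the vocabulary are ignored
            vector[vocabulary[tok]] = n
    return vector


# Toy corpus (an assumption for this example).
corpus = ["the cat sat", "the dog sat down"]
vocabulary = build_vocabulary(corpus)

print(vocabulary)                               # {'cat': 0, 'dog': 1, 'down': 2, 'sat': 3, 'the': 4}
print(bag_of_words("the cat sat", vocabulary))  # [1, 0, 0, 1, 1]
print(bag_of_words("the the dog", vocabulary))  # [0, 1, 0, 0, 2]
```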

Relationship with Other NLP Tasks

Tokenization is a prerequisite for many downstream NLP tasks:

Text Normalization: Tokenization is a crucial component of text normalization, which also involves lowercasing, stemming, and lemmatization to transform text into a standard form.

Sentence Segmentation: Sentence segmentation, also known as sentence boundary detection, separates the stream of tokens into sentences and is frequently used in combination with tokenization.

Part-of-Speech (POS) Tagging: One of the most fundamental tasks in NLP is POS tagging, or simply tagging. Each word in a sentence is assigned a tag that indicates its part of speech (noun, verb, adjective, etc.). Because many words can take several POS tags, the tagger’s job is to use the word’s lexical and syntactic context to identify the most likely tag for that particular use of the word in the sentence. POS ambiguity is particularly acute for Chinese, because Chinese words lack the morphological information that is an important signal of syntactic function.

Named Entity Recognition (NER): Tokenization and POS tagging are the first steps in identifying and labelling named entities (people, locations, organizations, etc.). NER is closely tied to tokenization, since it depends on how multiword phrases are handled.

Parsing: Tasks such as statistical parsing commonly assume that the incoming text has already been tokenized and segmented into sentences. A statistical parser’s job is to map natural language sentences to their preferred syntactic representations, either by selecting a single optimal analysis or by providing a ranked list of candidate analyses. Since the former can be regarded as a special case of the latter (a list of length one), we will assume without loss of generality that the output is always a ranked list.

We shall use X for the set of possible inputs and Y for the set of possible syntactic representations, where each input x ∈ X is assumed to be a sequence of tokens x = w1, …, wn. Although many of the methods discussed in this chapter can also be applied when the input is not a string, as in word-lattice parsing for speech recognition, we will not address such cases directly.

Morphological Analysis: Tokenization is closely connected to word structure, including morphemes, especially for compound or agglutinative words.
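As a rough sketch of how tokenization sits alongside the first two tasks in this list, the following Python example chains sentence segmentation, tokenization, and lowercasing. It makes several simplifying assumptions: sentences are split on raw text before tokenizing (one possible ordering), the splitter is naive and would break on abbreviations such as “Dr.”, and normalization is limited to lowercasing.

```python
import re


def split_sentences(text):
    """Naively split after '.', '!' or '?' when followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def tokenize(sentence):
    """Extract word tokens and standalone punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", sentence)


def normalize(tokens):
    """Lowercase every token (a minimal form of text normalization)."""
    return [tok.lower() for tok in tokens]


text = "Tokenization comes first. Tagging and parsing follow!"
processed = [normalize(tokenize(s)) for s in split_sentences(text)]

for sentence_tokens in processed:
    print(sentence_tokens)
# ['tokenization', 'comes', 'first', '.']
# ['tagging', 'and', 'parsing', 'follow', '!']
```

The resulting token lists are the kind of input that later stages such as POS tagging, NER, and parsing typically expect.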

Tokenization is well established and well understood for artificial languages such as programming languages. Artificial languages, however, can be precisely defined to eliminate lexical and structural ambiguities; natural languages offer no such luxury, since a single character can serve several purposes and their syntax is not strictly defined. Many factors can affect the difficulty of tokenizing a particular natural language. A fundamental distinction exists between tokenization methods for space-delimited languages and methods for languages without such boundaries.

In space-delimited languages, including most European languages, some word boundaries are indicated by whitespace. The character sequences delimited in this way are not always the tokens needed for further processing, both because the writing systems are ambiguous and because different applications require different tokenization conventions.
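A short Python comparison shows why whitespace-delimited sequences are not always the tokens an application wants. The sample sentence and the regular expression are assumptions chosen for illustration; the pattern encodes just one possible convention (keep internal hyphens and apostrophes, split off other punctuation), and other applications may reasonably choose differently.

```python
import re

text = "Dr. Smith's co-author didn't reply (yet)."

# Splitting on whitespace leaves punctuation attached to words.
whitespace_tokens = text.split()

# One possible convention: keep hyphens and apostrophes inside words,
# and emit every other punctuation mark as its own token.
regex_tokens = re.findall(r"\w+(?:[-']\w+)*|[^\w\s]", text)

print(whitespace_tokens)
# ['Dr.', "Smith's", 'co-author', "didn't", 'reply', '(yet).']
print(regex_tokens)
# ['Dr', '.', "Smith's", 'co-author', "didn't", 'reply', '(', 'yet', ')', '.']
```

Neither output is correct in general: the second separates “(yet).” cleanly but also splits the abbreviation “Dr.”, which illustrates the ambiguity described above.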