What are N-grams in NLP?

In Natural Language Processing (NLP), an N-gram is a contiguous sequence of n items, usually words or characters, taken from a text. N-grams are a basic concept and a key technique in NLP, often used in text preprocessing, and they capture local word dependencies.

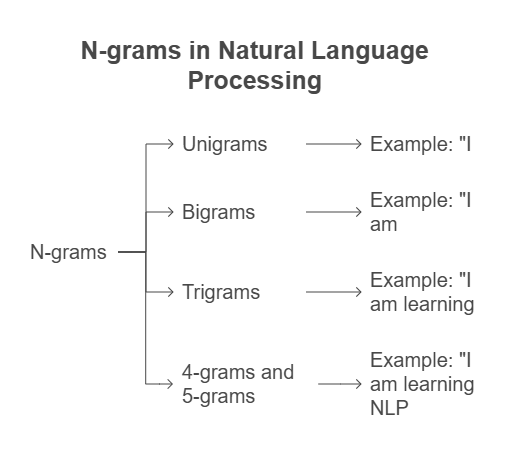

The term “N-gram” describes a sequence of n items. Specific lengths have their own names:

Unigrams (or 1-grams): Single words. Each individual word in a sentence is a unigram.

Bigrams (or 2-grams): Sequences of two words, such as “please turn” or “turn your.”

Trigrams (or 3-grams): Sequences of three words, such as “please turn your.”

For n larger than 3, the terms 4-gram, 5-gram, and so on are used. Character 4-grams and 5-grams are sometimes used, but word N-grams of that length are rare because of sparsity.

Take the sentence “I am learning NLP” as an example:

- These are unigrams: “I,” “am,” “learning,” and “NLP.”

- “I am,” “am learning,” and “learning NLP” are bigrams.

- “I am learning” and “am learning NLP” are trigrams.
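
The breakdown above can be reproduced with a few lines of Python. This is a minimal sketch that assumes whitespace tokenisation; the helper name `ngrams` is made up for illustration and is not taken from any library.

```python
def ngrams(tokens, n):
    """Return every contiguous window of n tokens as a tuple."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # A sequence of length L has L - n + 1 windows (none if L < n).
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

tokens = "I am learning NLP".split()  # naive whitespace tokenisation
unigrams = ngrams(tokens, 1)
bigrams = ngrams(tokens, 2)
trigrams = ngrams(tokens, 3)

print(bigrams)   # [('I', 'am'), ('am', 'learning'), ('learning', 'NLP')]
print(trigrams)  # [('I', 'am', 'learning'), ('am', 'learning', 'NLP')]
```

A sentence with four words yields four unigrams, three bigrams, and two trigrams, because each window slides forward by one word.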

The naming of N-grams has historical roots: the “gram” component comes from Greek. Names such as “unigram” and “bigram” pair Latin numerical prefixes with this Greek root, while “digram” uses a Greek prefix. The general term “n-gram” is the most widely used.

Purpose and Usage

Many NLP applications rely on N-grams because they capture word order and local context.

N-grams are widely used in language models (LMs), which assign probabilities to word sequences. The N-gram model is the simplest language model. It serves two purposes: estimating the probability of the last word of an N-gram given the preceding words, and assigning probabilities to whole sequences.

How N-grams are used in Language Models

An N-gram language model estimates the probability of a word sequence from the probability of each word given the n-1 words before it. A bigram model, for example, estimates the probability of a sentence by multiplying the probabilities of each word given only the preceding word. Padding the beginning and end of the text with special symbols gives the first word a context and lets the model predict where the sentence ends, which makes it easier to compute the probability of a whole sentence.
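
Written out, the bigram approximation looks roughly as follows. The padding symbols $\langle s \rangle$ and $\langle /s \rangle$ and the sentence length $m$ are notation assumed here for illustration; the text above only says that special symbols are used.

$$
P(w_1 \dots w_m) \approx \prod_{k=1}^{m+1} P(w_k \mid w_{k-1}), \qquad w_0 = \langle s \rangle,\quad w_{m+1} = \langle /s \rangle
$$

For the sentence “I am learning NLP” this expands to:

$$
P(\text{I} \mid \langle s \rangle)\, P(\text{am} \mid \text{I})\, P(\text{learning} \mid \text{am})\, P(\text{NLP} \mid \text{learning})\, P(\langle /s \rangle \mid \text{NLP})
$$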

Estimating N-gram Probabilities

Maximum Likelihood Estimation (MLE) is an intuitive way to estimate N-gram probabilities: count N-grams in a corpus (a collection of texts used for training) and normalise the counts. For a bigram, the probability of a word $w_n$ given the preceding word $w_{n-1}$ is the count of the bigram divided by the count of its prefix: $P(w_n \mid w_{n-1}) = C(w_{n-1} w_n) / C(w_{n-1})$. This ratio is called a relative frequency.
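
The relative-frequency estimate can be sketched in Python as below. The three-sentence corpus, the function names, and the `<s>` / `</s>` padding tokens are all assumptions made for this example, not part of any particular toolkit.

```python
from collections import Counter

def train_bigram_mle(sentences):
    """Collect prefix counts C(prev) and bigram counts C(prev, word)."""
    prefix_counts = Counter()
    bigram_counts = Counter()
    for sentence in sentences:
        # Pad so the first word has a context and the end is predicted.
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, word in zip(tokens, tokens[1:]):
            prefix_counts[prev] += 1
            bigram_counts[(prev, word)] += 1
    return prefix_counts, bigram_counts

def bigram_prob(prev, word, prefix_counts, bigram_counts):
    """MLE estimate: C(prev, word) / C(prev); 0.0 if prev was never seen."""
    if prefix_counts[prev] == 0:
        return 0.0
    return bigram_counts[(prev, word)] / prefix_counts[prev]

def sentence_prob(sentence, prefix_counts, bigram_counts):
    """Multiply the bigram probabilities over the padded sentence."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    prob = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        prob *= bigram_prob(prev, word, prefix_counts, bigram_counts)
    return prob

# Toy corpus, invented for illustration only.
corpus = ["I am learning NLP", "I am learning Python", "I like NLP"]
prefix_counts, bigram_counts = train_bigram_mle(corpus)

p_am_given_i = bigram_prob("I", "am", prefix_counts, bigram_counts)
p_seen = sentence_prob("I am learning NLP", prefix_counts, bigram_counts)
p_unseen = sentence_prob("I like Python", prefix_counts, bigram_counts)
```

In this toy corpus “I” occurs three times and is followed by “am” twice, so `p_am_given_i` is 2/3. The sentence “I like Python” gets probability 0.0 because the bigram “like Python” never occurs in the corpus, even though the sentence is perfectly reasonable.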

Challenges and Solutions

Like many statistical models, N-gram models depend on the training corpus. Because every corpus is finite, many perfectly acceptable word sequences never appear in it, so sparsity is a major difficulty. These unseen sequences become “zero probability N-grams,” even though their true probability is non-zero. Higher-order N-grams (larger values of N) reproduce the training corpus more closely, but they make the sparsity problem worse.

Smoothing methods address the sparsity problem. To account for unseen N-grams, they either slightly increase the zero counts or adjust the probabilities, as linear interpolation does by mixing estimates from different N-gram orders. Modified interpolated Kneser-Ney smoothing is a common baseline for N-gram language modelling.
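
Two simple smoothing schemes can be sketched as follows: add-k smoothing, which raises every count by a small constant, and linear interpolation between bigram and unigram estimates. The toy corpus, the function names, and the values `k=1.0` and `lam=0.7` are assumptions for illustration. This is not Kneser-Ney, which is considerably more involved.

```python
from collections import Counter

# Toy corpus, invented for illustration only.
corpus = ["I am learning NLP", "I am learning Python", "I like NLP"]

prefix_counts = Counter()  # C(prev): how often each token is a context
word_counts = Counter()    # counts of tokens that can be predicted
bigram_counts = Counter()  # C(prev, word)
for sentence in corpus:
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    prefix_counts.update(tokens[:-1])
    word_counts.update(tokens[1:])  # "<s>" is never predicted
    bigram_counts.update(zip(tokens, tokens[1:]))

vocab = sorted(word_counts)
total_words = sum(word_counts.values())

def add_k_prob(prev, word, k=1.0):
    """Add-k estimate of P(word | prev); k=1 is add-one smoothing."""
    return (bigram_counts[(prev, word)] + k) / (prefix_counts[prev] + k * len(vocab))

def interpolated_prob(prev, word, lam=0.7):
    """Mix the bigram and unigram MLE estimates with a fixed weight lam."""
    if prefix_counts[prev]:
        bigram = bigram_counts[(prev, word)] / prefix_counts[prev]
    else:
        bigram = 0.0
    unigram = word_counts[word] / total_words
    return lam * bigram + (1 - lam) * unigram

p_add_one = add_k_prob("like", "Python")
p_interp = interpolated_prob("like", "Python")
```

Both functions give the unseen bigram “like Python” a small non-zero probability while the estimates for each context still sum to 1 over the vocabulary. In practice the interpolation weight would be tuned on held-out data rather than fixed by hand.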

Efficiency also matters when building language models from large collections of N-grams. Common techniques include storing words as hash values rather than strings and quantising probabilities so that each one takes only a few bits.
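
The sketch below shows the general idea rather than how any particular toolkit implements it. It assumes a 64-bit hash per word and an 8-bit code for each log probability; the function names, the `MIN_LOGP` floor, and the choice of BLAKE2 as the hash are all illustrative.

```python
import hashlib
import math

def word_hash(word):
    """Map a word to a 64-bit integer instead of storing the string."""
    digest = hashlib.blake2b(word.encode("utf-8"), digest_size=8).digest()
    return int.from_bytes(digest, "big")

MIN_LOGP = -20.0  # assumed floor; smaller log probabilities are clipped
LEVELS = 255      # highest code that fits in 8 bits

def quantise(prob):
    """Encode a probability as an integer code in [0, 255]."""
    if prob <= 0.0:
        return 0
    logp = max(math.log(prob), MIN_LOGP)
    return round((logp - MIN_LOGP) / -MIN_LOGP * LEVELS)

def dequantise(code):
    """Recover an approximate probability from its 8-bit code."""
    return math.exp(MIN_LOGP + code / LEVELS * -MIN_LOGP)

# Compact table: tuple of word hashes -> quantised probability.
table = {}
key = (word_hash("learning"), word_hash("NLP"))
table[key] = quantise(0.5)

approx = dequantise(table[key])  # close to 0.5, not exactly equal
```

The trade-off is precision for memory: the recovered value differs slightly from the original, and two different words could in principle collide on the same hash.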

Other Applications and Tools

N-grams are also useful in NLP beyond language modelling, where many tasks use them as features. Word bigrams and trigrams are considered effective feature combinations because they capture phrases like “not good” or “New York.” A bag-of-bigrams representation can be far more powerful than a bag-of-words one. Architectures such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) can learn N-gram-like features implicitly, but explicit N-gram features remain helpful even with non-linear classifiers like neural networks. For tasks such as Part-of-Speech (POS) tagging, character N-grams such as 2-letter and 3-letter prefixes and suffixes serve as features. N-grams also appear in machine translation.
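
As a rough illustration of N-grams as features, the sketch below builds a bag of unigrams and bigrams for a sentence and extracts 2-letter and 3-letter prefixes and suffixes for a word. The function names and feature keys are hypothetical, and lowercasing with whitespace tokenisation is a simplifying assumption.

```python
from collections import Counter

def bag_of_ngrams(text, max_n=2):
    """Count all word n-grams of length 1..max_n in the text."""
    tokens = text.lower().split()
    features = Counter()
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            features[" ".join(tokens[i:i + n])] += 1
    return features

def affix_features(word, max_len=3):
    """Character prefixes and suffixes of length 2..max_len."""
    features = {}
    for k in range(2, max_len + 1):
        if len(word) > k:  # skip affixes that would cover the whole word
            features[f"prefix{k}"] = word[:k]
            features[f"suffix{k}"] = word[-k:]
    return features

review = bag_of_ngrams("the movie was not good")
affixes = affix_features("running")
```

A bag of words for this review contains “good” but loses the negation, whereas the bag of bigrams also contains “not good.” For “running,” the suffix features are “ng” and “ing,” the kind of cue a POS tagger can use to guess a verb form.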

Many tools and libraries support working with N-grams. The TextBlob package can produce N-grams, and the Natural Language Toolkit (NLTK) provides helpers such as nltk.bigrams. The public toolkits SRILM and KenLM are designed specifically for building N-gram language models.