The Building Blocks Of Language

Sound (spoken language) and writing (text) are the two main modalities that Natural Language Processing (NLP) systems are designed to process and understand. Although they differ in physical form, both represent the same underlying linguistic system and convey meaning. NLP systems frequently handle the two modalities separately.

Sounds in NLP

Human communication occurs primarily through spoken language. Processing speech requires an understanding of the physical characteristics of speech sounds.

- Speech synthesis, which converts text to audio, and speech recognition, which converts audio signals to text, are examples of speech processing tasks in natural language processing.

- Basic Sound Units: Spoken language can be analysed as sequences of smaller sound units. Phonetics studies the physical properties of speech sounds, such as consonants, vowels, and intonation, while phonology studies how a language organises these sounds into a system.

- Sound Production: Articulatory phonetics studies how the vocal organs modify airflow from the lungs to produce speech sounds. In this process, air is expelled through the larynx (voice box) and out through the mouth or nose. Sounds are broadly categorised as consonants, which restrict or block the airflow, and vowels, which obstruct it much less.

- Acoustic Properties: Acoustic phonetics characterizes speech sounds by acoustic properties such as frequency and amplitude, which listeners perceive as pitch and loudness, respectively. Sound waves are produced by changes in air pressure.

- Prosody: Prosody covers the intonational and rhythmic aspects of speech, described by features such as pitch (fundamental frequency, F0), energy (loudness), and duration. It is used to convey affect and meaning, mark discourse structure, signal salience, and manage turn-taking.

- Speech Recognition (Speech-to-Text, STT) produces text from the audio signal. Modern systems are largely statistical. They may use a source-channel (noisy-channel) model, in which the speaker's intended word sequence passes through a noisy channel that produces the observed waveform. Acoustic modelling is an essential component: it describes the statistical properties of sound events and the relationships between basic sound units and larger linguistic units such as words. Many systems use Hidden Markov Models (HMMs), which assume that the sequence of acoustic observations for each sound unit, such as a syllable, is generated by a hidden Markov chain. To improve accuracy, STT systems also rely heavily on language models, which are typically estimated from text data and predict which word sequences are likely.

- Speech Synthesis (Text-to-Speech, TTS) turns text into audio. Typical steps include assigning phonetic transcriptions to words and dividing the text into prosodic units such as phrases.
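
The acoustic and prosodic properties listed above (frequency and pitch, amplitude and energy, duration) can be measured directly from a waveform. The following is a minimal Python sketch using only the standard library, not a production pitch tracker. It assumes a synthetic test signal (0.2 s of silence, 0.5 s of a 200 Hz tone, 0.3 s of silence, sampled at 16 kHz), computes short-time RMS energy over 20 ms frames, measures how long the signal is above an assumed silence threshold, and estimates F0 by searching for the autocorrelation peak. The frame size, threshold, and 75 to 400 Hz search range are illustrative assumptions.

```python
import math

SAMPLE_RATE = 16_000  # samples per second (assumed)
FRAME = 320           # 20 ms analysis frames at 16 kHz (assumed)

def synth_signal(f0=200.0, amp=0.5):
    """Hypothetical test signal: 0.2 s silence, 0.5 s sine tone at f0, 0.3 s silence."""
    def silence(secs):
        return [0.0] * round(secs * SAMPLE_RATE)
    tone = [amp * math.sin(2 * math.pi * f0 * n / SAMPLE_RATE)
            for n in range(round(0.5 * SAMPLE_RATE))]
    return silence(0.2) + tone + silence(0.3)

def frame_rms(signal):
    """Short-time energy: RMS amplitude of each non-overlapping frame (a loudness correlate)."""
    return [math.sqrt(sum(x * x for x in signal[i:i + FRAME]) / FRAME)
            for i in range(0, len(signal) - FRAME + 1, FRAME)]

def active_duration(rms, threshold=0.01):
    """Duration in seconds of frames whose RMS exceeds an assumed silence threshold."""
    return sum(1 for e in rms if e > threshold) * FRAME / SAMPLE_RATE

def estimate_f0(segment, fmin=75.0, fmax=400.0):
    """Crude pitch estimate: the lag with the largest raw autocorrelation in [fmin, fmax]."""
    best_lag, best_r = None, float("-inf")
    for lag in range(int(SAMPLE_RATE / fmax), int(SAMPLE_RATE / fmin) + 1):
        r = sum(segment[n] * segment[n + lag] for n in range(len(segment) - lag))
        if r > best_r:
            best_lag, best_r = lag, r
    return SAMPLE_RATE / best_lag

signal = synth_signal()
rms = frame_rms(signal)
print(f"peak frame RMS: {max(rms):.3f}")
print(f"non-silent duration: {active_duration(rms):.2f} s")
start = round(0.3 * SAMPLE_RATE)  # a point known to lie inside the tone
print(f"F0 estimate: {estimate_f0(signal[start:start + 1280]):.1f} Hz")
```

With this synthetic input the sketch reports a peak frame RMS of about 0.354 (0.5/√2), a non-silent stretch of 0.50 s, and an F0 of 200 Hz. Real speech would need overlapping windowed frames, voicing detection, and a more robust pitch tracker; raw autocorrelation is easily fooled by noise and by multiples of the true period.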
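
The source-channel view of speech recognition described above is commonly written as a decision rule: choose the word sequence $\hat{W}$ that is most probable given the acoustic observations $A$. By Bayes' rule this combines an acoustic model $P(A \mid W)$ with a language model $P(W)$; the denominator $P(A)$ is the same for every candidate and can be dropped:

$$
\hat{W} = \arg\max_{W} P(W \mid A) = \arg\max_{W} P(A \mid W)\, P(W)
$$

Inside an HMM acoustic model, the most likely sequence of hidden states for a series of observations is found with the Viterbi algorithm. The Python sketch below is a toy under stated assumptions: the two hidden states ("C" and "V", loosely standing for consonant-like and vowel-like units), the quantised observations ("lo" and "hi" energy), and every probability are hypothetical values chosen only for illustration.

```python
import math

def viterbi(obs, states, start_p, trans_p, emit_p):
    """Return (best_state_path, log_probability) for an HMM, computed in log space."""
    # best[t][s]: log-probability of the best path that ends in state s at time t
    best = [{s: math.log(start_p[s]) + math.log(emit_p[s][obs[0]]) for s in states}]
    back = [{}]
    for t in range(1, len(obs)):
        best.append({})
        back.append({})
        for s in states:
            # pick the predecessor state that maximises the path score into s
            prev = max(states, key=lambda p: best[t - 1][p] + math.log(trans_p[p][s]))
            best[t][s] = (best[t - 1][prev] + math.log(trans_p[prev][s])
                          + math.log(emit_p[s][obs[t]]))
            back[t][s] = prev
    # trace back from the best final state
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(obs) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path, best[-1][last]

# Hypothetical toy model: all numbers are invented for illustration.
STATES = ("C", "V")
START = {"C": 0.6, "V": 0.4}
TRANS = {"C": {"C": 0.5, "V": 0.5}, "V": {"C": 0.3, "V": 0.7}}
EMIT = {"C": {"lo": 0.8, "hi": 0.2}, "V": {"lo": 0.1, "hi": 0.9}}

path, logp = viterbi(["lo", "hi", "hi", "lo"], STATES, START, TRANS, EMIT)
print(path, math.exp(logp))
```

For the observation sequence lo, hi, hi, lo, the most likely path is C, V, V, C, with a probability of about 0.0327. Working in log space avoids numerical underflow when sequences get long.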
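
The two synthesis steps mentioned above (phonetic transcription and grouping into prosodic units) can be sketched as a tiny text-to-speech front end in Python. This rests on loud assumptions: the five-word lexicon is hypothetical (its transcriptions use ARPAbet symbols in the style of the CMU Pronouncing Dictionary, with digits marking stress), prosodic units are approximated by splitting at punctuation, and unknown words are merely flagged rather than passed to a grapheme-to-phoneme model as a real system would do.

```python
import re

# Hypothetical mini pronunciation lexicon (ARPAbet symbols; digits mark stress).
LEXICON = {
    "hello": ["HH", "AH0", "L", "OW1"],
    "world": ["W", "ER1", "L", "D"],
    "how": ["HH", "AW1"],
    "are": ["AA1", "R"],
    "you": ["Y", "UW1"],
}

def prosodic_units(text):
    """Approximate prosodic phrases by splitting at punctuation marks."""
    return [chunk.strip() for chunk in re.split(r"[,.;:?!]", text) if chunk.strip()]

def transcribe(unit):
    """Look up each word's phonemes; unknown words get a placeholder symbol."""
    phones = []
    for word in re.findall(r"[a-z']+", unit.lower()):
        phones.extend(LEXICON.get(word, ["<UNK>"]))
    return phones

def tts_front_end(text):
    """Return one phoneme sequence per prosodic unit."""
    return [transcribe(unit) for unit in prosodic_units(text)]

for phrase in tts_front_end("Hello, world. How are you?"):
    print(" ".join(phrase))
```

This prints three phrases: `HH AH0 L OW1`, `W ER1 L D`, and `HH AW1 AA1 R Y UW1`. A downstream synthesiser would then assign pitch, duration, and energy targets to each phrase before generating audio.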

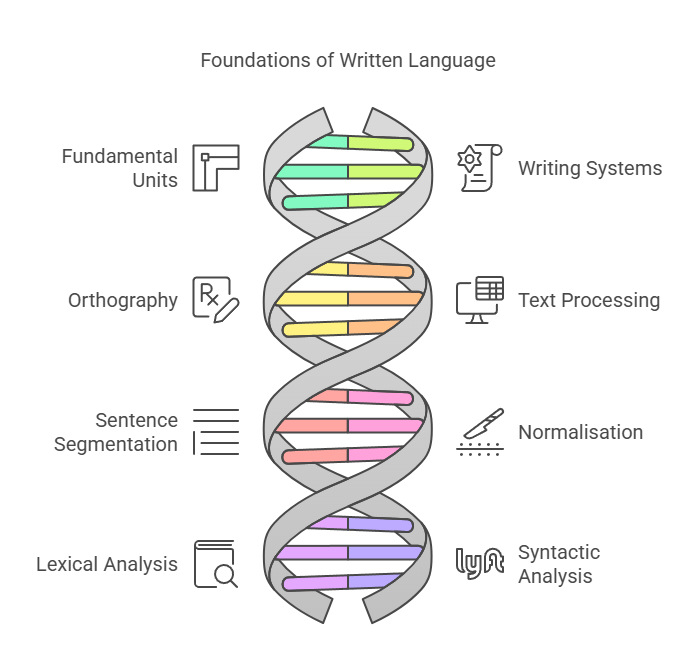

Using NLP on written language

Written language is a symbolic representation of language that usually consists of a linear arrangement of words and punctuation.

- Fundamental Units: Characters, which can be seen as an idealised model of the sound units of speech, are the ultimate building blocks of written text. For most natural-language texts, however, words are treated as the fundamental units.

- Writing Systems: Writing systems can be logographic (symbols for words), syllabic (symbols for syllables), or alphabetic (symbols for individual sounds), and many modern systems combine these types. English is mostly alphabetic but also uses some logographic symbols, such as digits and the signs & and $. The earliest writing systems were logographic; sound-based systems later developed from logo-syllabic ones.

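- Orthography: The term “orthography” refers to the conventions of a writing system, including how linguistic units such as words and sentences are marked. English marks word and sentence boundaries with whitespace and punctuation, although these cues are not always unambiguous (for example, the period in “Dr.” does not end a sentence).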

- Text Processing: For written language, the first step in an NLP system is text preprocessing: dividing raw text into manageable units and normalising it. Important preprocessing steps include:

  - Tokenization: Splitting text into tokens, usually words and punctuation marks.

  - Sentence Segmentation: Detecting sentence boundaries and dividing text into sentences.

  - Normalization: Standardizing text through steps such as case folding, stemming, and lemmatization.

- Lexical Analysis: Lexical analysis scans the character stream and groups characters into lexemes, breaking the text into units such as paragraphs, phrases, and words.

- Syntactic Analysis: Syntactic analysis, also called parsing, determines the grammatical structure of sentences; in many NLP approaches this is a core step in analysing meaning.
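
To make the writing-system distinction concrete, here is a rough Python heuristic (an assumption for illustration, not a standard algorithm) that labels characters by the prefix of their Unicode character name, using the standard `unicodedata` module. Japanese is a convenient test case because it mixes logographic kanji with syllabic kana. The prefix-to-category mapping is a simplification that covers only a few scripts.

```python
import unicodedata
from collections import Counter

# Simplified, assumed mapping from Unicode name prefixes to writing-system types.
PREFIXES = {
    "CJK UNIFIED IDEOGRAPH": "logographic",
    "HIRAGANA": "syllabic",
    "KATAKANA": "syllabic",
    "LATIN": "alphabetic",
    "GREEK": "alphabetic",
    "CYRILLIC": "alphabetic",
}

def writing_type(ch):
    """Classify one character by its Unicode name; unlisted characters are 'other'."""
    name = unicodedata.name(ch, "")
    for prefix, kind in PREFIXES.items():
        if name.startswith(prefix):
            return kind
    return "other"

# "日本語のテキスト" ("Japanese text"): three kanji followed by five kana.
print(Counter(writing_type(ch) for ch in "日本語のテキスト"))
```

The phrase yields three logographic and five syllabic characters. Symbols such as & fall through to "other" in this simplified mapping, even though they function logographically in English.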
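
The orthographic boundary cues and the first preprocessing steps (sentence segmentation, tokenization, and lexical analysis) can be sketched with regular expressions in Python. This is deliberately small: the abbreviation list is a hypothetical stand-in for the much larger lists or trained models that real segmenters use, and the three lexeme classes (`WORD`, `NUMBER`, `PUNCT`) are an assumed, simplified scheme.

```python
import re

# Hypothetical abbreviation list; real segmenters use far larger lists or trained models.
ABBREVIATIONS = {"dr.", "mr.", "mrs.", "e.g.", "i.e.", "etc."}

# Lexeme classes for a simple left-to-right scanner; whitespace is skipped.
TOKEN_PATTERN = re.compile(
    r"(?P<WORD>[A-Za-z]+(?:'[A-Za-z]+)*)"   # words, allowing internal apostrophes
    r"|(?P<NUMBER>\d+(?:\.\d+)?)"           # integers and decimals
    r"|(?P<PUNCT>[^\w\s])"                  # any single punctuation mark
)

def split_sentences(text):
    """Break after ., ! or ? plus whitespace, unless the preceding word is a known abbreviation."""
    sentences, start = [], 0
    for match in re.finditer(r"[.!?]\s+", text):
        last_word = text[start:match.end()].split()[-1].lower()
        if last_word in ABBREVIATIONS:
            continue  # e.g. "Dr." does not end the sentence
        sentences.append(text[start:match.end()].strip())
        start = match.end()
    if text[start:].strip():
        sentences.append(text[start:].strip())
    return sentences

def lex(sentence):
    """Lexical analysis: scan a sentence into (token_type, lexeme) pairs."""
    return [(m.lastgroup, m.group()) for m in TOKEN_PATTERN.finditer(sentence)]

def tokenize(sentence):
    """Tokenization: keep only the lexemes."""
    return [lexeme for _, lexeme in lex(sentence)]

text = "Dr. Smith isn't here. She left at 5 today! Call again tomorrow."
for sentence in split_sentences(text):
    print(tokenize(sentence))
```

The text splits into three sentences, and “Dr.” does not trigger a break. The tokenizer still separates “Dr” from its period; keeping abbreviations as single tokens would require the scanner to consult the same abbreviation list.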
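
Normalization can be illustrated with case folding, a crude suffix-stripping stemmer, and a dictionary-based lemmatizer. Both the suffix rules and the lemma table below are hypothetical and tiny; real systems use established algorithms (such as the Porter stemmer) and proper lexical resources. The sketch shows the usual contrast: stemming chops strings by rule and may produce non-words, whereas lemmatization maps a word to its dictionary form.

```python
# Hypothetical suffix rules (checked in order) and a tiny lemma dictionary.
SUFFIXES = ("ing", "ies", "ed", "es", "s")
LEMMAS = {"running": "run", "ran": "run", "studies": "study",
          "mice": "mouse", "jumped": "jump", "better": "good"}

def case_fold(token):
    """Case folding: map case variants to one form (str.casefold is stricter than lower)."""
    return token.casefold()

def crude_stem(token):
    """Rule-based stemming: strip the first matching suffix, keeping a stem of 3+ characters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[:-len(suffix)]
    return token

def lemmatize(token):
    """Dictionary-based lemmatization: return the dictionary form, or the token unchanged."""
    return LEMMAS.get(token, token)

tokens = [case_fold(t) for t in ["Running", "Studies", "Mice", "jumped"]]
print(tokens)
print([crude_stem(t) for t in tokens])
print([lemmatize(t) for t in tokens])
```

The stems come out as “runn”, “stud”, “mice”, and “jump”, while the lemmas are “run”, “study”, “mouse”, and “jump”. Stems like “runn” are fine for matching related forms, but lemmatization, which needs lexical knowledge, is preferred when real dictionary forms matter.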
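
Parsing can be illustrated with the CKY algorithm, a classic dynamic-programming method for context-free grammars written in Chomsky normal form. The grammar and lexicon below are a hypothetical toy fragment of English, and this sketch is only a recogniser: it records which categories can span each substring and reports whether the whole input can be an `S`, without building a parse tree.

```python
from itertools import product

# Hypothetical toy grammar in Chomsky normal form.
BINARY = {("NP", "VP"): {"S"}, ("Det", "N"): {"NP"}, ("V", "NP"): {"VP"}}
LEXICAL = {"the": {"Det"}, "a": {"Det"}, "dog": {"N"}, "cat": {"N"}, "chased": {"V"}}

def cky(words):
    """CKY recognition: table[i][j] holds every category that can span words[i:j]."""
    n = len(words)
    table = [[set() for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        table[i][i + 1] = set(LEXICAL.get(word, ()))
    for span in range(2, n + 1):            # substring length
        for i in range(n - span + 1):       # start position
            j = i + span
            for k in range(i + 1, j):       # split point
                for left, right in product(table[i][k], table[k][j]):
                    table[i][j] |= BINARY.get((left, right), set())
    return table

def is_sentence(sentence):
    """True if the toy grammar can derive the whole sentence from S."""
    words = sentence.lower().split()
    return bool(words) and "S" in cky(words)[0][len(words)]

print(is_sentence("The dog chased a cat"))
print(is_sentence("Dog the chased cat a"))
```

The first call prints `True` and the second `False`. Keeping backpointers alongside each table entry would turn the recogniser into a parser that returns the tree itself.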

In essence, NLP treats speech and writing as distinct manifestations of the same underlying language, each with its own methods and models, while tasks such as speech-to-text and text-to-speech link the two. Knowledge from one modality can often improve processing in the other; for example, statistical language models built from large text corpora help speech recognisers choose among acoustically similar word sequences.
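
This cross-modal transfer can be made concrete with a language model estimated from text and then used to rank competing speech-recognition hypotheses. In the Python sketch below, the four-sentence training corpus, the two acoustically similar candidate transcripts, and the choice of add-one (Laplace) smoothing are all illustrative assumptions; real systems train on very large corpora and combine the language-model score with the acoustic score rather than using it alone.

```python
import math
from collections import Counter

def train_bigram(sentences):
    """Count bigrams and their left contexts, padding each sentence with boundary markers."""
    contexts, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        contexts.update(tokens[:-1])             # every token that precedes another
        bigrams.update(zip(tokens, tokens[1:]))
    # possible next tokens: all words plus the end marker
    vocab = {w for s in sentences for w in s.lower().split()} | {"</s>"}
    return contexts, bigrams, vocab

def log_prob(sentence, model):
    """Add-one smoothed bigram log-probability of a whole sentence."""
    contexts, bigrams, vocab = model
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    v = len(vocab)
    return sum(math.log((bigrams[(prev, w)] + 1) / (contexts[prev] + v))
               for prev, w in zip(tokens, tokens[1:]))

# Hypothetical training text; it covers every word in both candidates.
corpus = [
    "we recognize speech with a computer",
    "it is hard to recognize speech",
    "they walked on a nice beach",
    "the storm could wreck a boat",
]
model = train_bigram(corpus)
candidates = ["recognize speech", "wreck a nice beach"]
print(max(candidates, key=lambda c: log_prob(c, model)))
```

Here the language model prefers “recognize speech”. In a full recogniser this score would be added to each hypothesis's acoustic log-likelihood, following the noisy-channel decision rule, rather than deciding on its own.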