Defining Syntactic Structure: The Concept of Syntax and Its Role in NLP

In natural language processing, syntax is the study of how sentences are constructed and internally organized. It examines how words combine into phrases and clauses, and the rules that determine whether a word sequence is grammatically well formed. The word “syntax” comes from the Greek sýntaxis, meaning “setting out together” or “arrangement”.

The Role of Syntax in NLP

Traditionally, NLP research has treated language analysis as a pipeline of stages, usually mirroring theoretical distinctions drawn in linguistics. Syntactic analysis is a core stage of this traditional pipeline. It typically follows basic text processing such as tokenization and normalization (including stemming and lemmatization), and it is followed by semantic and pragmatic analysis. In this simple view, syntactic analysis assigns structure to sentences and makes them more amenable to later semantic analysis.
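
The sketch below shows the preprocessing stages that, in this traditional view, run before syntactic analysis. The function names (`tokenize`, `normalize`, `pipeline`) are hypothetical. The suffix-stripping rule is a deliberately crude stand-in for a real stemmer or lemmatizer and is not a recommended algorithm.

```python
import re

def tokenize(text: str) -> list[str]:
    # Split into runs of word characters or single punctuation marks.
    return re.findall(r"\w+|[^\w\s]", text)

def normalize(tokens: list[str]) -> list[str]:
    # Lowercase each token, then strip one suffix if enough of the word remains.
    # This crude rule is a hypothetical placeholder for a real stemmer/lemmatizer.
    out = []
    for tok in tokens:
        tok = tok.lower()
        for suffix in ("ing", "s"):
            if tok.endswith(suffix) and len(tok) - len(suffix) >= 3:
                tok = tok[: -len(suffix)]
                break
        out.append(tok)
    return out

def pipeline(text: str) -> list[str]:
    tokens = normalize(tokenize(text))
    # Syntactic analysis would run on these tokens next,
    # followed by semantic and pragmatic analysis.
    return tokens

print(pipeline("The cats are sleeping."))  # ['the', 'cat', 'are', 'sleep', '.']
```

In this simplified picture, the returned token list is the input that a syntactic analyser would receive.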

The Sentence as the Basic Unit

A prevalent idea in NLP is that the sentence is the fundamental unit of meaning analysis. A sentence is thought to express a proposition, idea, or thought about the world, so one of the central problems is working out what a sentence means. Sentences are more than a sequence of words, however: it is widely acknowledged that extracting meaning requires analyzing a sentence to determine its structure.

Syntactic Analysis (Parsing)

Syntactic analysis, often called parsing, is the process of determining the syntactic (grammatical) structure of a sentence. The goal of syntactic parsing is to find the appropriate structure for a given sentence according to a particular formalism or grammar. This includes checking for grammatical errors, verifying that words are arranged in a valid order, and representing the relationships between words. For example, a syntactic analyser of English would reject a sentence such as “The school goes to boy”. The result of the analysis, which goes beyond identifying parts of speech, can be represented as a parse tree. In short, syntactic parsing maps a string of words to its parse tree.
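
One way to represent a parse tree in Python is as nested tuples. The first element of each tuple is a node label, and the leaves are plain word strings. This representation, the category labels (`S`, `NP`, `VP`, `PP`, `Det`, `N`, `V`, `P`), and the helper names are assumptions chosen for illustration. The bracketed output resembles the notation used by the Penn Treebank.

```python
def leaves(tree):
    """Return the words at the leaves of a tree, left to right."""
    if isinstance(tree, str):
        return [tree]
    words = []
    for child in tree[1:]:
        words.extend(leaves(child))
    return words

def bracketed(tree):
    """Render a tree in labelled-bracket notation, e.g. (NP (Det the) (N boy))."""
    if isinstance(tree, str):
        return tree
    return "(" + tree[0] + " " + " ".join(bracketed(c) for c in tree[1:]) + ")"

# Hypothetical parse of "the boy goes to the school".
tree = ("S",
        ("NP", ("Det", "the"), ("N", "boy")),
        ("VP", ("V", "goes"),
               ("PP", ("P", "to"), ("NP", ("Det", "the"), ("N", "school")))))

print(" ".join(leaves(tree)))
print(bracketed(tree))
```

Reading the leaves back recovers the original word string, which reflects the idea that parsing pairs a string with a tree.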

Defining Syntactic Structure

In NLP, syntactic structure is represented using two main formalisms:

Phrase Structure Grammar (PSG): Also called constituency grammar, this approach breaks a sentence down into its constituent phrases, such as noun phrases (NP), verb phrases (VP), and prepositional phrases (PP). These constituents are in turn decomposed recursively into smaller constituents. Phrase structure represents constituent boundaries explicitly. The structure is usually drawn as a rooted tree whose leaves are the words of the sentence and whose internal nodes each span a contiguous sequence of words. The rules for building these structures are commonly specified with a context-free grammar (CFG). However, writing down every syntactic rule of a natural language as a CFG by hand would be far too complex.

Dependency Grammar (DG): Here, syntactic structure consists of lexical items (words) linked by binary, asymmetric relations called dependencies. Dependency grammar focuses on the grammatical relations between individual words. Instead of PSG's recursive constituent structure, DG proposes a network of relations between words. Dependency structures explicitly represent head-dependent relations (directed arcs), functional categories (arc labels), and possibly structural categories (POS tags). The main verb of a sentence is usually the dominant word and the root of the dependency tree. Every other word depends on it directly or indirectly, and each word normally has exactly one head. Dependency structure can be drawn as a directed graph.

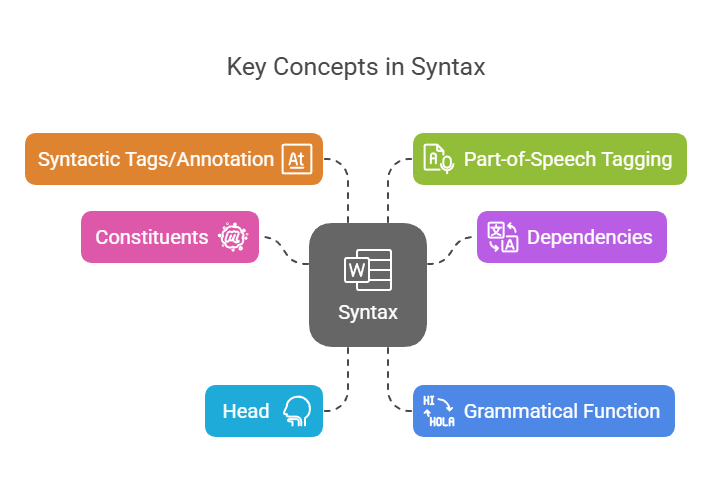
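
The sketch below compares the two formalisms on the same sentence, “the boy saw a dog”, and rests on several assumptions:

- The constituency tree is stored as nested tuples, with the label first and words as plain-string leaves.
- `GRAMMAR` is a hand-written toy CFG.
- The dependency analysis is stored as a head array in the spirit of the CoNLL-U format, where 0 stands for an artificial root.
- Arc labels follow Universal Dependencies conventions.
- All function names are hypothetical.

`constituents` shows that each internal node covers a contiguous span of words. `licensed` checks each local tree against a CFG rule. `is_tree` checks that the dependency structure has a single root and no cycles. Storing one head index per word enforces the single-head property by construction.

```python
# --- Constituency (phrase structure) view ---
# Toy CFG as a set of (lhs, rhs) productions; hypothetical, for illustration only.
GRAMMAR = {
    ("S", ("NP", "VP")),
    ("NP", ("Det", "N")),
    ("VP", ("V", "NP")),
    ("Det", ("the",)), ("Det", ("a",)),
    ("N", ("boy",)), ("N", ("dog",)),
    ("V", ("saw",)),
}

def constituents(tree, start=0):
    """Return (spans, end): spans lists (label, start, end) for every internal
    node, with end exclusive; leaves are word strings."""
    if isinstance(tree, str):
        return [], start + 1
    spans, pos = [], start
    for child in tree[1:]:
        child_spans, pos = constituents(child, pos)
        spans.extend(child_spans)
    spans.append((tree[0], start, pos))
    return spans, pos

def licensed(tree, grammar):
    """True if every local tree (a node plus its children) is a grammar rule."""
    if isinstance(tree, str):
        return True
    rhs = tuple(c if isinstance(c, str) else c[0] for c in tree[1:])
    return (tree[0], rhs) in grammar and all(licensed(c, grammar) for c in tree[1:])

TREE = ("S", ("NP", ("Det", "the"), ("N", "boy")),
             ("VP", ("V", "saw"), ("NP", ("Det", "a"), ("N", "dog"))))

# --- Dependency view of the same sentence ---
WORDS = ["the", "boy", "saw", "a", "dog"]
HEADS = [2, 3, 0, 5, 3]  # 1-based head index of each word; 0 = ROOT
LABELS = ["det", "nsubj", "root", "det", "obj"]

def arcs(words, heads, labels):
    """List directed arcs as (head_word, label, dependent_word)."""
    result = []
    for i, (h, label) in enumerate(zip(heads, labels)):
        head_word = "ROOT" if h == 0 else words[h - 1]
        result.append((head_word, label, words[i]))
    return result

def is_tree(heads):
    """True if exactly one word attaches to ROOT and following head links
    from every word reaches ROOT without revisiting a word (no cycles)."""
    if heads.count(0) != 1:
        return False
    for start in range(1, len(heads) + 1):
        seen, node = set(), start
        while node != 0:
            if node in seen:
                return False
            seen.add(node)
            node = heads[node - 1]
    return True

spans, _ = constituents(TREE)
print([s for s in spans if s[2] - s[1] > 1])  # phrase-level constituents only
print(licensed(TREE, GRAMMAR))
print(arcs(WORDS, HEADS, LABELS))
print(is_tree(HEADS))
```

Spans use Python's half-open convention, so ("NP", 0, 2) covers “the boy”. Even this tiny grammar needs a rule for every word it covers. That hints at why writing a complete CFG for a language by hand does not scale.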

Concepts in Syntax

Constituents: A group of words that functions as a single unit within a given grammar. Identifying and representing constituents is the foundation of phrase structure grammar.

Dependencies: Dependency grammar uses binary, asymmetric relations to show how one word depends on another. These relations may carry labels that identify the type of grammatical relation.

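Head: The central word of a phrase, which determines the phrase's syntactic category (for example, the noun in a noun phrase or the verb in a verb phrase). In dependency grammar, every dependent word is linked to its head word.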

Grammatical Function: The role a word or constituent plays in a sentence, such as subject, complement, or auxiliary. Grammatical functions are closely tied to the distinction between arguments and adjuncts. Subcategorization is a lexical property of a verb (or other head) that specifies how many arguments it takes, and of what kinds.

Syntactic Tags/Annotation: During analysis, words or constituents are annotated with syntactic information. Examples of syntactic tags include dependency relations, grammatical functions, and constituent boundaries.

Part-of-Speech (POS) Tagging: Assigning words to grammatical categories such as noun, verb, and adjective. POS tagging is a fundamental syntactic task and is often the first stage of analysis. POS tags are morphosyntactic categories: they reflect both the internal structure of words (such as their suffixes) and how words pattern together in sentences.
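
The sketch below is a rough illustration of POS tagging in which suffixes act as morphosyntactic cues. It tries a lexicon lookup first, then suffix rules, then falls back to a default guess. The lexicon, the suffix rules, and the tag names are assumptions; the tag names are borrowed from the Universal Dependencies UPOS tag set. Practical taggers are trained on annotated corpora and use the surrounding context, which this toy ignores.

```python
# Hypothetical toy lexicon of known words.
LEXICON = {"the": "DET", "a": "DET", "dog": "NOUN", "cat": "NOUN", "is": "AUX"}

# Illustrative suffix rules, checked in order; word-internal structure
# hints at the category of unknown words.
SUFFIX_RULES = [("ly", "ADV"), ("ing", "VERB"), ("ed", "VERB"),
                ("ous", "ADJ"), ("ness", "NOUN")]

def pos_tag(tokens):
    """Return (token, tag) pairs: lexicon lookup, then suffix rules, then a default."""
    tagged = []
    for tok in tokens:
        word = tok.lower()
        if word in LEXICON:
            tagged.append((tok, LEXICON[word]))
            continue
        for suffix, tag in SUFFIX_RULES:
            if word.endswith(suffix):
                tagged.append((tok, tag))
                break
        else:
            tagged.append((tok, "NOUN"))  # default guess for unknown words
    return tagged

print(pos_tag(["The", "nervous", "dog", "barked", "loudly"]))
```

Rules this crude misfire easily. For instance, they would tag “red” as a verb because it ends in “ed”.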

Data-Driven Approaches: Treebanks

Data-driven methods based on treebanks are a major approach to syntactic analysis. They are particularly valuable because they sidestep the difficulty of listing every grammar rule by hand. A treebank is a corpus of sentences in which every sentence has been annotated with a full syntactic analysis, usually the one a human expert judged most plausible. Rather than relying solely on handwritten rules, parsers can learn syntactic structure from these annotated examples. Well-known examples include the Penn Treebank and the Arabic Treebank.
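
Rule counts are one way a treebank supplies knowledge to a parser. The sketch below reads the productions off a tiny hand-made treebank. Nested tuples stand in for real treebank files, which this code does not parse. It then estimates rule probabilities by relative frequency, P(A → β) = count(A → β) / count(A). The two-tree treebank and the function names are hypothetical.

```python
from collections import Counter

def productions(tree):
    """Yield (lhs, rhs) for every internal node; leaves are word strings."""
    if isinstance(tree, str):
        return
    rhs = tuple(c if isinstance(c, str) else c[0] for c in tree[1:])
    yield (tree[0], rhs)
    for child in tree[1:]:
        yield from productions(child)

def estimate_pcfg(treebank):
    """Relative-frequency estimate: P(A -> beta) = count(A -> beta) / count(A)."""
    rule_counts = Counter()
    for tree in treebank:
        rule_counts.update(productions(tree))
    lhs_counts = Counter()
    for (lhs, _), n in rule_counts.items():
        lhs_counts[lhs] += n
    return {rule: n / lhs_counts[rule[0]] for rule, n in rule_counts.items()}

# Hypothetical two-sentence "treebank".
treebank = [
    ("S", ("NP", ("N", "dogs")), ("VP", ("V", "bark"))),
    ("S", ("NP", ("N", "dogs")), ("VP", ("V", "chase"), ("NP", ("N", "cats")))),
]

probs = estimate_pcfg(treebank)
for (lhs, rhs), p in sorted(probs.items()):
    print(f"{lhs} -> {' '.join(rhs)}: {p:.2f}")
```

In this sample, VP expands to V alone once and to V NP once, so each rule gets probability 0.5. Probabilities estimated this way are the kind of parameters a probabilistic context-free grammar uses.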

Parsing Algorithms and Ambiguity

Methods for syntactic parsing include recursive descent parsing, shift-reduce parsing, chart parsing (such as CYK parsing), and statistical parsing. Structural ambiguity is pervasive in natural language: a single word sequence can have more than one valid syntactic structure. Choosing the correct structure, known as syntactic disambiguation, is therefore a central parsing task. Probabilistic context-free grammars (PCFGs) are one approach to resolving it.
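
The sketch below illustrates chart parsing and structural ambiguity together. It implements a CYK-style recognizer that counts parse trees instead of just accepting or rejecting a sentence. It assumes a toy grammar in Chomsky normal form (binary rules plus word-level rules), and the grammar, lexicon, and `cyk_count` are hypothetical. The test sentence is the classic prepositional-phrase attachment example, which has two parses: “with the telescope” can attach to the verb phrase or to the noun phrase “the man”.

```python
from collections import defaultdict

# Toy grammar in Chomsky normal form (hypothetical, for illustration only).
BINARY = [  # (parent, left child, right child)
    ("S", "NP", "VP"),
    ("NP", "Det", "N"),
    ("NP", "NP", "PP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),
    ("PP", "P", "NP"),
]
LEXICAL = {  # word -> categories that can produce it
    "i": ["NP"], "saw": ["V"], "the": ["Det"],
    "man": ["N"], "telescope": ["N"], "with": ["P"],
}

def cyk_count(words, start="S"):
    """Count distinct parse trees of `words` rooted in `start` (0 if none)."""
    n = len(words)
    # chart[(i, j)][A] = number of ways category A can derive words[i:j]
    chart = defaultdict(lambda: defaultdict(int))
    for i, w in enumerate(words):
        for cat in LEXICAL.get(w.lower(), []):
            chart[(i, i + 1)][cat] += 1
    for length in range(2, n + 1):          # span length
        for i in range(n - length + 1):     # span start
            j = i + length
            for k in range(i + 1, j):       # split point
                left, right = chart[(i, k)], chart[(k, j)]
                for parent, b, c in BINARY:
                    if left[b] and right[c]:
                        chart[(i, j)][parent] += left[b] * right[c]
    return chart[(0, n)][start]

print(cyk_count("I saw the man with the telescope".split()))  # 2
print(cyk_count("I saw the man".split()))                      # 1
print(cyk_count("saw I the man".split()))                      # 0
```

A PCFG-based parser would attach probabilities to these rules. Instead of summing counts, it would keep the most probable analysis for each span and category, which is one way of performing the disambiguation described above.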

Connection to Other NLP Areas

Other layers of language analysis are strongly related to syntax:

Morphology: The study of word structure and morphemes. Lexical analysis, which studies word structure and lemmas, includes producing the morphosyntactic representations that syntactic analysis relies on. Morphosyntactic annotation combines morphological and syntactic information.

Semantics: The study of meaning. Syntax provides the structural foundation on which semantic analysis can be built, because knowing a sentence's structure makes the systematic aspects of its meaning easier to capture. The principle of compositional semantics is that the meaning of a phrase is determined by the meanings of its constituents and the way they are syntactically combined.

Pragmatics and Discourse: Pragmatics and discourse analysis deal with meaning in context and across multiple sentences, whereas syntax and semantics focus mostly on individual sentences.
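
The compositional principle from the Semantics paragraph can be sketched by computing a sentence's meaning bottom-up over its parse tree. In this toy example, names denote constants. The transitive verb denotes a curried function that takes its object first and its subject second. The lexicon `LEX`, the string-based meaning representation, and the `meaning` function are assumptions for illustration, not a standard semantic formalism.

```python
# Hypothetical lexicon: each word's meaning is a constant or a curried function.
LEX = {
    "john": "john",
    "mary": "mary",
    "loves": lambda obj: lambda subj: f"loves({subj}, {obj})",
}

def meaning(tree):
    """Compute a tree's meaning from its children's meanings.
    Leaves are words; a unary node passes its child's meaning up; at a binary
    node, whichever child's meaning is a function is applied to the other's."""
    if isinstance(tree, str):
        return LEX[tree.lower()]
    children = [meaning(c) for c in tree[1:]]
    if len(children) == 1:
        return children[0]
    left, right = children
    return left(right) if callable(left) else right(left)

tree = ("S", ("NP", "John"), ("VP", ("V", "loves"), ("NP", "Mary")))
print(meaning(tree))  # loves(john, mary)
```

Swapping the two noun phrases changes the tree and therefore the result, even though the words are the same.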

As a foundational area of NLP, syntax provides the structural analysis needed for deeper understanding and processing of natural language.