Raft in Blockchain

Raft is a consensus algorithm for distributed systems. Its goal is to guarantee that several nodes can agree on shared data even in the event of failures. Diego Ongaro and John Ousterhout introduced it in 2013 as a more understandable alternative to Paxos. Its main objective is to make consensus more accessible so that better consensus-based systems can be built.

Core Purpose and Fault Tolerance

Raft is a Crash Fault-Tolerant (CFT) consensus algorithm: it continues to work when machines crash or network connections are lost, provided that a majority of the servers remain up and able to communicate with one another. It is designed specifically to deal with node crashes, which are simpler to handle than Byzantine faults. Its goal is to get several replicated servers to agree on the same sequence of log entries so that every node keeps the same state. In permissioned blockchain networks, Raft is frequently used to preserve the consistency and integrity of the distributed ledger.
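The majority requirement can be made concrete with a little arithmetic. The following is a minimal sketch; the function names `majority` and `tolerated_crashes` are illustrative and do not come from any Raft library.

```python
def majority(cluster_size: int) -> int:
    """Smallest number of servers that forms a majority (quorum)."""
    return cluster_size // 2 + 1


def tolerated_crashes(cluster_size: int) -> int:
    """Number of servers that can crash while a majority is still available."""
    return cluster_size - majority(cluster_size)


if __name__ == "__main__":
    for n in (3, 4, 5, 7):
        print(f"cluster={n} majority={majority(n)} tolerated crashes={tolerated_crashes(n)}")
```

Note that a four-node cluster tolerates no more crashes than a three-node one, which is why Raft clusters are usually deployed with an odd number of servers.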

Key Components (Node States/Roles)

There are three possible states for every server in a Raft system:

- Leader: The node in charge of handling client requests, managing the log, and sending log entries to followers.

- Follower: A passive node that responds to requests from the leader and from candidates and replicates the leader’s log. It expects a regular heartbeat from the leader.

- Candidate: A node that is standing for election and may become the new leader.
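The three roles and the moves between them can be sketched as a small transition table. This is an illustrative model only; the event names (`election_timeout`, `won_majority`, `saw_current_leader`, `saw_higher_term`) are assumptions made for this example, not identifiers from a real implementation.

```python
from enum import Enum


class Role(Enum):
    FOLLOWER = "follower"
    CANDIDATE = "candidate"
    LEADER = "leader"


# (current role, event) -> next role. Event names are hypothetical.
TRANSITIONS = {
    (Role.FOLLOWER, "election_timeout"): Role.CANDIDATE,   # no heartbeat arrived in time
    (Role.CANDIDATE, "election_timeout"): Role.CANDIDATE,  # split vote: start a new election
    (Role.CANDIDATE, "won_majority"): Role.LEADER,
    (Role.CANDIDATE, "saw_current_leader"): Role.FOLLOWER,
    (Role.CANDIDATE, "saw_higher_term"): Role.FOLLOWER,
    (Role.LEADER, "saw_higher_term"): Role.FOLLOWER,
}


def next_role(role: Role, event: str) -> Role:
    """Return the role after an event; events not in the table leave the role unchanged."""
    return TRANSITIONS.get((role, event), role)
```

For example, `next_role(Role.FOLLOWER, "election_timeout")` yields `Role.CANDIDATE`, while a follower that sees a higher term simply stays a follower.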

How Raft Works (The Raft Process)

Raft breaks down the consensus problem into two primary, stand-alone subproblems: log replication and leader election.

Leader Election

All nodes start as Followers when the system first boots. Randomised election timeouts determine which node stands for election first.

- Initiation: A Follower that does not receive a heartbeat from the leader within its election timeout (typically a random value in the 150–300 ms range) becomes a Candidate and starts an election.

- Voting: The candidate increments its term counter, which identifies the current election round, votes for itself, and sends messages to the other servers asking for their votes. Each server casts at most one vote per term, on a first-come, first-served basis.

- Winning: A candidate that receives votes from a majority of the servers in the cluster becomes the new Leader.

- Failure Resolution:

  - A candidate’s election is unsuccessful if it receives a message from another server claiming to be leader with a term number at least as large as its own. In this case, the candidate recognises the new leader and reverts to Follower status.

  - If there is a split vote, meaning no candidate wins a majority, the candidates time out, a new term begins and a new election is held. Because election timeouts are randomised, it is unlikely that several servers become candidates at the same time, so split votes are rare and are resolved quickly.

Raft guarantees election safety, allowing for the election of no more than one leader in a single term.
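The voting rules above can be sketched as follows. This is a simplified illustration, not a full implementation: the `Voter` class and its method names are hypothetical, and the check that the candidate’s log is sufficiently up to date is deliberately omitted here.

```python
import random
from dataclasses import dataclass
from typing import Optional


def random_election_timeout_ms(low_ms: float = 150.0, high_ms: float = 300.0) -> float:
    """Pick a randomised election timeout so that servers rarely time out together."""
    return random.uniform(low_ms, high_ms)


@dataclass
class Voter:
    current_term: int = 0
    voted_for: Optional[str] = None

    def handle_request_vote(self, candidate_id: str, candidate_term: int) -> bool:
        """Grant at most one vote per term, first come, first served."""
        if candidate_term < self.current_term:
            return False  # stale candidate from an older term
        if candidate_term > self.current_term:
            # A newer term starts: adopt it and forget the previous vote.
            self.current_term = candidate_term
            self.voted_for = None
        if self.voted_for in (None, candidate_id):
            self.voted_for = candidate_id
            return True
        return False  # already voted for someone else in this term
```

Because every server grants only one vote per term and a candidate needs a majority, two candidates cannot both win the same term.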

Log Replication

The elected leader is solely responsible for managing log replication to the followers.

- Client Requests: Each client request contains a command to be executed by the cluster’s replicated state machines.

- Appending to Log: The leader first appends the command as a new entry to its own durable local log.

- Replication to Followers: The leader then sends the new entries to the followers in AppendEntries messages. If a follower is unavailable, the leader retries indefinitely until that follower has stored the entry.

- Commitment: An entry is considered committed once it has been stored on a majority of the servers, counting the leader itself. The leader then applies the entry to its local state machine and replies to the client. Committing an entry also commits all earlier entries in the leader’s log.

- Follower Application: A follower applies a log entry to its local state machine after learning that it has been committed (usually through the leader’s heartbeats or AppendEntries messages). This procedure keeps the logs consistent across all servers.

- Inconsistency Handling: If a leader crashes, logs across the cluster may become inconsistent. The new leader resolves this by forcing the followers’ logs to duplicate its own. For each follower, it finds the last entry on which the two logs agree, deletes every entry after that point from the follower’s log, and sends the follower its own entries from that point onwards.
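The follower-side consistency check and the majority rule for commitment can be sketched as below. This is a minimal in-memory illustration under stated assumptions: the `Entry` type and the function names are hypothetical, log indices are 1-based, and the additional rule that a leader only commits entries from its own current term by counting replicas is left out for brevity.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Entry:
    term: int
    command: str


def append_entries(log: List[Entry], prev_index: int, prev_term: int,
                   entries: List[Entry]) -> bool:
    """Follower side: accept entries only if the log matches the leader at prev_index."""
    if prev_index > len(log):
        return False  # follower is missing entries before prev_index
    if prev_index > 0 and log[prev_index - 1].term != prev_term:
        return False  # conflicting entry at prev_index; leader will retry further back
    for offset, entry in enumerate(entries):
        index = prev_index + 1 + offset  # 1-based position of this entry
        if index <= len(log):
            if log[index - 1].term != entry.term:
                del log[index - 1:]  # drop the conflicting entry and everything after it
                log.append(entry)
        else:
            log.append(entry)
    return True


def commit_index(match_index: List[int]) -> int:
    """Highest log index stored on a majority; match_index includes the leader itself."""
    ranked = sorted(match_index, reverse=True)
    return ranked[len(match_index) // 2]
```

With five servers whose last replicated indices are `[5, 5, 4, 2, 1]`, `commit_index` returns 4, because three of the five servers hold every entry up to index 4.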

Safety Properties

Raft ensures a number of safety features:

- Election safety: At most one leader can be elected in a given term.

- Leader append-only: A leader never removes or overwrites entries in its own log; it only appends new ones.

- Log matching: If two logs contain an entry with the same index and term, the logs are identical in all entries up to and including that index.

- Leader completeness: If a log entry is committed in a given term, it will be present in the logs of the leaders of all subsequent terms.

- State machine safety: If a server has applied a log entry at a given index to its state machine, no other server will ever apply a different entry for that index. This is ensured by the election restriction: a candidate can only win an election if its log contains all committed entries, so voters refuse to vote for candidates whose logs are less up to date than their own.
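The "up to date" comparison behind the election restriction can be sketched in a few lines. The function and parameter names are illustrative assumptions; the rule itself compares the term of the last log entry first and the log length second.

```python
def candidate_log_is_up_to_date(candidate_last_term: int, candidate_last_index: int,
                                voter_last_term: int, voter_last_index: int) -> bool:
    """Return True if the candidate's log is at least as up to date as the voter's.

    The log whose last entry has the higher term wins; if the last terms are
    equal, the longer log wins. Tuple comparison expresses exactly that order.
    """
    return (candidate_last_term, candidate_last_index) >= (voter_last_term, voter_last_index)
```

A voter grants its vote only when this check passes, in addition to the one-vote-per-term rule, so a candidate that is missing committed entries cannot gather a majority.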

Key Characteristics and Advantages

Raft provides a number of advantages:

- Simplicity: Compared to Paxos, it is intended to be simpler to comprehend and use. It solves real-world system requirements in a tidy and well-organised manner.

- Leader-centric Design: By relying on a single strong leader, Raft streamlines the consensus process and reduces the number of participants involved in each decision.

- Efficiency and Performance: Because of its leader-centric architecture, it may be quicker than Paxos in practice. It needs little processing power and involves no proof-of-work style cryptographic puzzles. In a blockchain setting it can create blocks on demand and achieve short block times.

- Immediate Finality: Once an entry is committed on a majority of nodes it is never rolled back, so blocks ordered through Raft are final as soon as they are committed and the committed chain does not fork.

- Robustness: It is resilient to crash faults and can tolerate node failures.

- Dynamic Membership Changes: Raft includes a mechanism for adding and removing cluster members, so the topology of a distributed system can change without taking the cluster offline.

- Strong Consistency: Raft provides strong consistency guarantees, such as linearizability for single-key operations.

Disadvantages of Raft

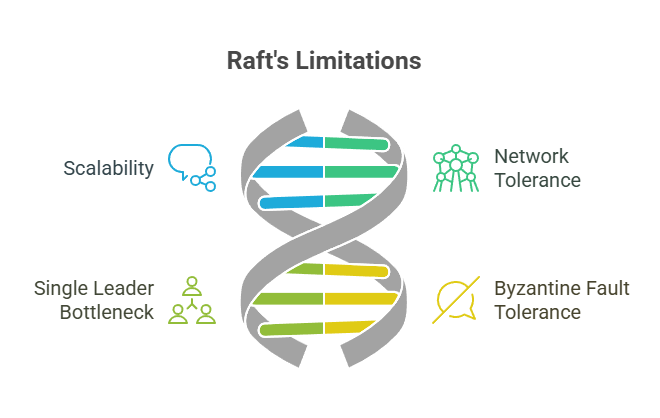

Despite its advantages, Raft has some limitations:

- Scalability: Every decision requires a majority of the nodes to be online and to communicate with the leader, so Raft may not scale well to large clusters.

- Network Sensitivity: Leadership and consistency depend on heartbeats and timeouts, which makes Raft sensitive to network failures and delays. A slow or partitioned network can trigger unnecessary elections and stall progress.

- Single Leader Bottleneck: One leader coordinates the cluster at any given time. If the leader runs short of CPU, disk or network capacity, the performance of the whole system suffers.

- Not Byzantine Fault-Tolerant: Raft is a CFT algorithm, meaning it cannot handle malicious nodes, unlike Byzantine Fault-Tolerant (BFT) algorithms like PBFT.

Implementations and Use Cases

Raft is extensively utilised in a variety of distributed systems, particularly those that favour easy comprehension and simpler implementation. It has become a top choice for creating distributed systems that are robust and highly consistent.

Among the notable implementations and use cases are:

- etcd: A distributed key-value store used mainly for configuration management.

- Consul: A tool for service discovery and configuration.

- HashiCorp Vault: A system for managing secrets.

- Hyperledger Fabric: Raft is the more recent option for the ordering service. It follows a leader and follower model and is considered simpler to work with than the Apache Kafka-based ordering service.

- R3 Corda and ConsenSys Quorum: Platforms that use Raft.

- Sawtooth: Supports Raft as one of its consensus options.

- CockroachDB: Uses Raft in its replication layer.

- Hazelcast: Uses Raft for its CP Subsystem, a strongly consistent layer for distributed data structures.

- MongoDB: Uses a variant of Raft in its replica sets.

- Neo4j: Uses Raft to ensure consistency and safety.

- RabbitMQ: Uses Raft to implement durable, replicated FIFO queues.

- ScyllaDB: Is moving away from Paxos for the majority of its components and adding Raft (as part of Project Circe) for transactional schema and topology changes, in order to improve manageability and consistency.

- Splunk Enterprise: Uses Raft in its Search Head Cluster (SHC).

- TiDB: Uses Raft in its TiKV storage engine.

- YugabyteDB: Applies Raft at the individual shard level for both data replication and leader election.

- ClickHouse: Uses Raft to provide an internal ZooKeeper-like service.

- Redpanda: Replicates data via Raft.

- Apache Kafka: Uses Raft (KRaft) to manage metadata.

- NATS Messaging: Uses Raft to manage JetStream clusters and replicate data.

- Camunda: Uses Raft to replicate data.

Raft is widely regarded as the de-facto standard for attaining consistency in contemporary distributed systems, with over 100 open-source implementations.
