What is Mutual Information in Machine Learning?

Mutual information is a fundamental idea in information theory that is becoming more and more important in machine learning. The core intuition is simple: knowing something about one variable can tell us something about another. This simple idea turns out to be very useful for finding patterns in data, picking out important features, and building smart systems that learn.

In machine learning, the goal is to find patterns in large amounts of complex and noisy data. Mutual information is a mathematically sound and flexible way to measure how two variables are related, even if the connection isn’t obvious or doesn’t follow a straight line. This article covers what mutual information is, why it’s important, how it’s used, and the challenges that come with it.

Understanding the Concept of Mutual Information

Imagine you’re trying to guess whether someone will like a movie. If you already know what they’ve watched before, that helps you. Mutual information measures how much a piece of information like that really helps: it is a number that tells you how much knowing one variable reduces your uncertainty about another.
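For readers who want the idea in symbols, the standard textbook definition for two discrete variables can be written as follows. The names X, Y, p, and H (entropy) are notation introduced here, not taken from the article.

```latex
\[
I(X;Y) \;=\; \sum_{x}\sum_{y} p(x,y)\,\log\frac{p(x,y)}{p(x)\,p(y)}
\;=\; H(X) - H(X \mid Y)
\]
```

The right-hand form matches the intuition above: it is the uncertainty about X before seeing Y, minus the uncertainty that remains afterwards. The value is zero exactly when the two variables are independent.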

Mutual information differs from simpler statistical tools like correlation because it captures all kinds of dependence, not just straight-line trends. Two variables can be strongly related without that relationship being linear, and mutual information will still detect it. In the real world, where data doesn’t always behave in neat, predictable ways, this makes it very useful for machine learning tasks.
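A small sketch can make the contrast with correlation concrete. It uses made-up toy data in which y is the square of x, and it treats the 21 listed points as the whole distribution rather than as a noisy sample. The helper names `pearson` and `mutual_information` are illustrative, not from any library.

```python
import math
from collections import Counter

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

def mutual_information(xs, ys):
    """Mutual information in bits, computed from frequency counts."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    return sum(
        (c / n) * math.log2(c * n / (px[x] * py[y]))
        for (x, y), c in joint.items()
    )

# Toy data: y is fully determined by x, but the relationship is a parabola.
xs = list(range(-10, 11))
ys = [x * x for x in xs]

r = pearson(xs, ys)
mi = mutual_information(xs, ys)
print(f"Pearson r = {r:.3f}")
print(f"Mutual information = {mi:.3f} bits")
```

The correlation comes out at essentially zero because the parabola is symmetric around x = 0, while the mutual information is well above zero because knowing x pins down y completely.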

Why Mutual Information Analysis is Important in Machine Learning

Mutual information matters in machine learning because it applies in many different situations. Its main strengths are the following:

- It Detects Non-linear Dependencies

A lot of real-world data doesn’t follow simple patterns. Traditional measures like correlation may miss variables that are linked in complex ways. Mutual information is more reliable on large, complicated datasets because it doesn’t assume any particular form of relationship.

- It’s Model-Agnostic

Mutual information isn’t tied to any one model or algorithm. It is simply a way to measure how much information one variable gives you about another. That means it can be used with many types of machine learning, such as neural networks, decision trees, and more.

- It Has Deep Theoretical Foundations

Mutual information comes from information theory, a well-established field that covers everything from data compression to cryptography. Because it rests on solid theory, mutual information is not just a heuristic or a quick trick. It is a principled way to understand data.

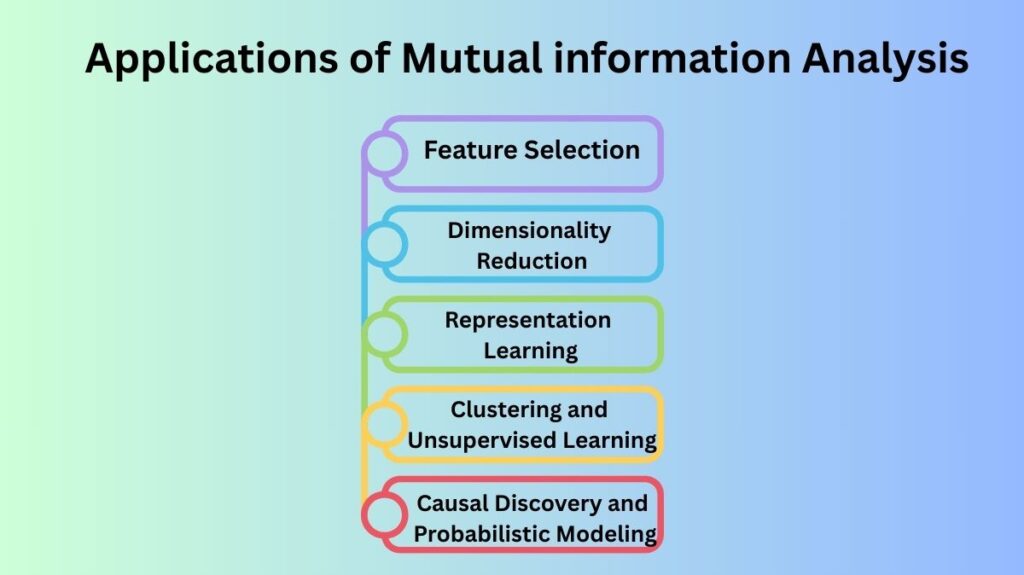

Key Applications in Machine Learning

In machine learning, mutual information can be used in a lot of different ways. I want to talk about a few of the most important ones.

- Feature Selection

When you use supervised learning for tasks like regression and classification, not all of the features you provide are useful. Some are noisy, redundant, or irrelevant. Mutual information helps figure out which features really tell us something about the target variable.

If you want to predict how likely a customer is to leave, mutual information can help you rank your features, such as account age, payment history, or customer service calls, by how much each one tells you about whether a customer will leave. By keeping only the features with the highest mutual information, you can make your models simpler and often more accurate.

There are also more sophisticated approaches that choose features which are informative on their own but not redundant with each other. This keeps you from selecting several features that carry nearly the same information.
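The ranking step described above can be sketched in a few lines. The twelve customer records are invented purely for illustration, the feature names follow the churn example in the text, and `mutual_information` is a hypothetical helper that works from frequency counts on categorical values.

```python
import math
from collections import Counter

def mutual_information(xs, ys):
    """Mutual information in bits, computed from frequency counts."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    return sum(
        (c / n) * math.log2(c * n / (px[x] * py[y]))
        for (x, y), c in joint.items()
    )

# Invented toy records: (customer_service_calls, payment_history, account_age, churned)
rows = [
    ("many", "late", "new", 1),
    ("many", "late", "old", 1),
    ("many", "on_time", "new", 1),
    ("many", "on_time", "old", 1),
    ("many", "late", "new", 1),
    ("many", "on_time", "old", 0),
    ("few", "on_time", "new", 0),
    ("few", "on_time", "old", 0),
    ("few", "late", "new", 0),
    ("few", "on_time", "old", 0),
    ("few", "on_time", "new", 0),
    ("few", "late", "old", 1),
]

features = ["customer_service_calls", "payment_history", "account_age"]
target = [row[-1] for row in rows]

# Score each feature by how much it tells us about the churn label.
scores = {
    name: mutual_information([row[i] for row in rows], target)
    for i, name in enumerate(features)
}

# Highest mutual information first.
ranking = sorted(scores, key=scores.get, reverse=True)
for name in ranking:
    print(f"{name}: {scores[name]:.3f} bits")
```

In this toy data the number of service calls ranks first and account age carries no information about churn at all, so it would be the first candidate to drop. Note that this simple ranking scores each feature independently and does not address redundancy between features.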

- Dimensionality Reduction

Mutual information can also help cut down the number of variables in your data without losing too much useful information. This is especially helpful for high-dimensional datasets, where many features may be irrelevant or redundant.

Some dimensionality reduction methods try to pack as much information as possible from the original data into a smaller set of new variables. Mutual information can guide this process by prioritizing the variables that carry the most information about the outcome or about the structure of the data.

- Representation Learning

A central goal of deep learning, especially unsupervised or self-supervised learning, is to learn useful representations of data. These are compact forms of the input that still retain the parts that matter for downstream tasks.

Some recent algorithms, such as Deep InfoMax and Contrastive Predictive Coding, are built around maximizing the mutual information between different parts of the data or between the input and its learned representation. For example, they might train a model to encode an image so that the encoding retains the details needed to later reconstruct or classify it. The goal is for the internal representation to hold as much useful information as possible.
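As a rough illustration of how such an objective connects to mutual information, Contrastive Predictive Coding trains with a contrastive loss, commonly called InfoNCE, whose value gives a lower bound. The notation is an assumption made here for the sketch: x is a data sample, c is its context representation, f is a learned positive scoring function, and X is a set of N samples containing one true match and N - 1 negatives.

```latex
\[
\mathcal{L}_N \;=\; -\,\mathbb{E}\!\left[\log\frac{f(x, c)}{\sum_{x_j \in X} f(x_j, c)}\right],
\qquad
I(x; c) \;\ge\; \log N - \mathcal{L}_N
\]
```

Lowering the loss raises the bound, which is the sense in which training the model pushes up the information shared between the representation and the data.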

- Clustering and Unsupervised Learning

If you have ground truth labels for a clustering task, you can use mutual information to see how well a clustering algorithm is doing. It measures how well the clustering results match the data’s real groups.

In a broader sense, mutual information can help unsupervised learning by revealing structure in the data. Because it doesn’t depend on labels, it’s well suited to finding patterns in raw data and seeing how different variables are linked.
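The clustering check can be sketched as follows. The label lists are made up, and dividing by the mean of the two entropies is one common normalization among several, chosen here as an assumption so that a perfect match scores 1.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy in bits of a sequence of discrete labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def mutual_information(a, b):
    """Mutual information in bits, computed from frequency counts."""
    n = len(a)
    joint = Counter(zip(a, b))
    pa, pb = Counter(a), Counter(b)
    return sum(
        (c / n) * math.log2(c * n / (pa[x] * pb[y]))
        for (x, y), c in joint.items()
    )

def normalized_mi(a, b):
    """Mutual information divided by the mean entropy of the two labelings."""
    h = (entropy(a) + entropy(b)) / 2
    # h == 0 means both labelings put everything in a single group.
    return mutual_information(a, b) / h if h > 0 else 1.0

true_groups = [0, 0, 0, 1, 1, 1, 2, 2, 2]
renamed     = [2, 2, 2, 0, 0, 0, 1, 1, 1]  # same grouping, different cluster ids
noisy       = [0, 0, 1, 1, 1, 2, 2, 2, 0]  # three points assigned to the wrong group

score_renamed = normalized_mi(true_groups, renamed)
score_noisy = normalized_mi(true_groups, noisy)
print(f"renamed clusters: {score_renamed:.3f}")
print(f"noisy clusters:   {score_noisy:.3f}")
```

The score depends only on which points are grouped together, not on the ids a clustering algorithm happens to assign, which is why the renamed labeling still scores as a perfect match.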

- Causal Discovery and Probabilistic Modeling

In more advanced settings, mutual information is used to find candidate causal links between variables. In a medical dataset, for instance, mutual information could help show that a certain gene is strongly associated with a disease outcome.

It’s also used in probabilistic graphical models to decide which variables should be connected in a network. This helps build models, such as Bayesian networks or Markov random fields, that reflect how the data is really structured.
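One classic way to pick network edges from pairwise mutual information is the Chow-Liu procedure, which is named here as an example and is not something the article itself specifies: score every pair of variables, then keep a maximum spanning tree. The sketch uses simulated binary data from a chain A to B to C with 10% noise per step; the variable names, sample size, and noise level are all assumptions.

```python
import math
import random
from collections import Counter
from itertools import combinations

def mutual_information(xs, ys):
    """Mutual information in bits, estimated from frequency counts."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    return sum(
        (c / n) * math.log2(c * n / (px[x] * py[y]))
        for (x, y), c in joint.items()
    )

def flip(bit, prob, rng):
    """Return the bit, inverted with probability prob."""
    return bit ^ int(rng.random() < prob)

# Simulated chain: A influences B, and B influences C.
rng = random.Random(0)
n = 5000
a = [int(rng.random() < 0.5) for _ in range(n)]
b = [flip(x, 0.1, rng) for x in a]
c = [flip(x, 0.1, rng) for x in b]
data = {"A": a, "B": b, "C": c}

# Score every pair of variables by mutual information.
pair_mi = {
    (u, v): mutual_information(data[u], data[v])
    for u, v in combinations(data, 2)
}

# Kruskal-style maximum spanning tree: take the strongest pairs first
# and skip any pair that would close a cycle.
component = {name: name for name in data}

def find(name):
    while component[name] != name:
        name = component[name]
    return name

edges = []
for (u, v), score in sorted(pair_mi.items(), key=lambda kv: kv[1], reverse=True):
    root_u, root_v = find(u), find(v)
    if root_u != root_v:
        component[root_u] = root_v
        edges.append((u, v))

print(edges)
```

The tree keeps the A-B and B-C links and drops A-C, since A and C are related only through B. The resulting edges are undirected, so the tree says which variables to connect, not which one influences the other.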

How to Estimate Mutual Information from Data?

It’s easy to understand what mutual information means, but it’s harder to estimate it from data, especially when you’re working with continuous variables or high-dimensional spaces.

- Discrete Variables

If your variables are categorical or can be binned into discrete values, you can estimate mutual information from frequency counts. This method is simple and fast, but it doesn’t work well for continuous data.

- Continuous Data

Estimating probability densities for continuous variables is hard. Kernel density estimation and nearest-neighbor methods are two popular approaches. They can be accurate, but they need a lot of data and careful tuning to work well.

- Neural Network-Based Estimators

More recently, deep learning has opened up new ways to estimate mutual information. These techniques train a neural network to approximate the dependence between variables, which makes it possible to estimate mutual information even in very complex or high-dimensional data. Methods such as MINE (Mutual Information Neural Estimation) are popular among researchers.
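To give a sense of what such a network is trained to do, MINE maximizes a lower bound on mutual information known as the Donsker-Varadhan bound. In the sketch below, T with parameters θ stands for the neural network's scalar output; the symbols are notation introduced here.

```latex
\[
I(X;Y) \;\ge\; \sup_{\theta}\;
\mathbb{E}_{p(x,y)}\big[T_\theta(x,y)\big]
\;-\; \log \mathbb{E}_{p(x)\,p(y)}\big[e^{T_\theta(x,y)}\big]
\]
```

The first expectation is taken over real paired samples and the second over pairs in which the two variables have been shuffled independently, so the network is rewarded for telling genuine pairs apart from mismatched ones.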

Challenges and Limitations

Mutual information has many benefits, but it also has some important drawbacks:

- Hard to Estimate Accurately

Estimating mutual information accurately can be hard, especially when there are many variables. When the estimates are wrong, the results can be misleading.

- Symmetric but Not Directional

Mutual information tells you that two variables share information, but not which one influences the other. It has no direction, so on its own it’s not enough to draw conclusions about cause and effect.

- Computational Complexity

Estimating mutual information can take a lot of time and resources, especially when neural methods are used. This can be an obstacle for real-time or large-scale applications.

- Interpretation Challenges

Mutual information tells you how much information is shared, but not what that information is. Deep models trained by maximizing mutual information can learn a great deal, but it’s still not always clear what exactly they have learned.
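The estimation difficulty noted above shows up even in the simplest setting. The sketch below draws two variables that are independent by construction, so their true mutual information is zero, and estimates it from frequency counts at two sample sizes. The sample sizes, the four categories per variable, and the seed are arbitrary choices for illustration.

```python
import math
import random
from collections import Counter

def mutual_information(xs, ys):
    """Mutual information in bits, estimated from frequency counts."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    return sum(
        (c / n) * math.log2(c * n / (px[x] * py[y]))
        for (x, y), c in joint.items()
    )

def estimate_for_independent_sample(n, rng, levels=4):
    """Draw two independent categorical variables and estimate their MI."""
    xs = [int(rng.random() * levels) for _ in range(n)]
    ys = [int(rng.random() * levels) for _ in range(n)]
    return mutual_information(xs, ys)

rng = random.Random(42)
small = estimate_for_independent_sample(50, rng)
large = estimate_for_independent_sample(50_000, rng)

# The true value is 0 bits in both cases.
print(f"estimate from 50 samples:     {small:.4f} bits")
print(f"estimate from 50,000 samples: {large:.4f} bits")
```

With few samples, chance co-occurrences look like dependence and the estimate lands noticeably above zero. It shrinks toward the true value only as the sample grows, and the problem gets worse as the number of categories or dimensions increases.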

The Future of Mutual Information in Machine Learning

As machine learning advances, mutual information becomes more and more important. It’s at the heart of many of the most interesting research areas, from self-supervised learning to interpretable AI and causality.

What we can expect to see is:

- Better estimators that work faster and with less data.

- Models that combine probabilistic and deep learning methods with mutual information.

- New tools that make it easier to interpret what mutual information reveals about neural networks and complex data.

- More widespread use in fields like genomics, healthcare, and banking, where understanding the connections between variables is very important.

Conclusion

Mutual information is one of the few machine learning tools that combines rigorous theory with a wide range of practical uses. It lets us look past simple trends and find the real structure of data, which helps us choose features, learn representations, and build better models.

As the field takes on more difficult problems, mutual information offers a strong way to measure and control the flow of information through our models. Despite its limitations, it remains an important idea that everyone who works with machine learning should understand and have in their toolbox.