What is Bulk in MongoDB?

With MongoDB, bulk operations let you efficiently carry out many write operations, such as inserts, updates, and deletes, on a single collection. Rather than sending a separate request to the server for each operation, a bulk operation combines many operations into a single command. This avoids the per-request overhead of sending each write separately while iterating through a large dataset, which greatly improves performance.

Understanding Bulk Operations

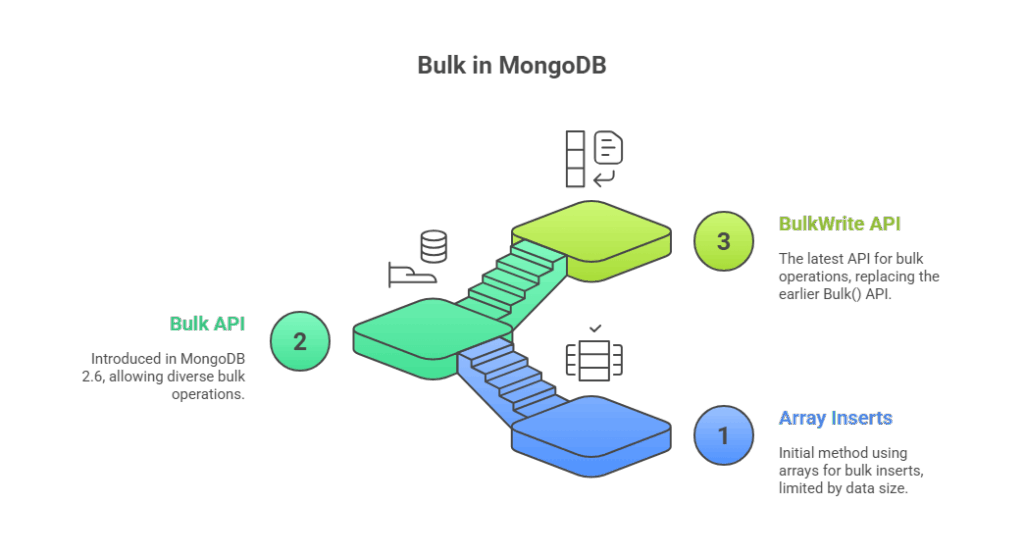

MongoDB offers several ways to carry out bulk write operations, depending on the version:

- db.collection.insert() with an Array: The db.collection.insert() method accepts an array of documents for bulk inserts. The whole array needs only one network round trip, making it far faster than inserting documents individually. The amount of data that can be sent in a single batch is limited, however (current versions allow up to 48 MB). Drivers may automatically split larger batches into smaller ones.

- Bulk API (MongoDB versions ≥ 2.6 and < 3.2): This API executes several write operations, such as inserts, updates, and removals, in bulk. You initialise a list of operations with db.collection.initializeUnorderedBulkOp() or db.collection.initializeOrderedBulkOp().

- Unordered Operations: MongoDB can execute the operations in parallel. If one operation fails, MongoDB continues processing the remaining ones.

- Ordered Operations: MongoDB executes the operations sequentially. If an error occurs, MongoDB stops and does not process the remaining operations in the list. Because each operation must wait for the preceding one to finish, ordered operations are typically slower on sharded collections.

- Operations are added to the bulk object with Bulk.insert() and with Bulk.find() chained to an update or remove method such as updateOne(), and are then sent to the server with Bulk.execute().

- bulkWrite() API (MongoDB versions ≥ 3.2): This newer API replaced the earlier Bulk() API for bulk operations. Write commands have traditionally been limited to 1000 operations per batch (the limit was raised to 100,000 in MongoDB 3.6). For larger sets, this means grouping operations into batches and re-initialising the array each time the limit is reached.
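The difference between ordered and unordered execution described in the list above can be illustrated with a toy model. This is only a sketch in plain JavaScript, not MongoDB code: `runBulk` and the sample operations are hypothetical stand-ins, and the model runs everything sequentially, whereas a real server may run unordered operations in parallel.

```javascript
// Toy model of ordered vs. unordered bulk execution (hypothetical, no server involved).
// Each "operation" is a function that either returns or throws.
function runBulk(ops, ordered) {
  const result = { succeeded: [], failed: [], skipped: [] };
  for (let i = 0; i < ops.length; i++) {
    try {
      ops[i]();
      result.succeeded.push(i);
    } catch (err) {
      result.failed.push(i);
      if (ordered) {
        // Ordered: stop at the first error; the remaining operations never run.
        for (let j = i + 1; j < ops.length; j++) {
          result.skipped.push(j);
        }
        break;
      }
      // Unordered: record the failure and carry on with the rest.
    }
  }
  return result;
}

const sampleOps = [
  () => "insert ok",
  () => { throw new Error("duplicate key"); },
  () => "update ok",
];

const orderedResult = runBulk(sampleOps, true);
const unorderedResult = runBulk(sampleOps, false);

console.log(orderedResult);   // operation 2 is skipped
console.log(unorderedResult); // operation 2 still runs
```

In both modes the operation that succeeded before the error stays applied; only the handling of the operations after the error differs.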

Converting a Field to Another Type and Updating an Entire Collection in Bulk

Changing a field’s data type across a collection is a typical bulk operation, for instance converting a numeric string such as "57871" to the integer 57871, or a date string such as "1986-08-21" to ISODate("1986-08-21T00:00:00.000Z").
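The two conversions can be tried in isolation before they are applied to a whole collection. The sketch below uses plain JavaScript; `toInteger` and `toDate` are hypothetical helper names, and `Date` stands in for the shell’s ISODate() so the snippet runs outside the mongo shell.

```javascript
// Hypothetical helpers for the two conversions; no database is needed.
function toInteger(value) {
  // Accept only plain decimal integers, so "12abc" is rejected rather than truncated.
  if (!/^-?\d+$/.test(value)) {
    throw new TypeError("not an integer string: " + value);
  }
  return parseInt(value, 10);
}

function toDate(value) {
  // Date-only ISO strings such as "1986-08-21" are parsed as UTC midnight.
  const d = new Date(value);
  if (Number.isNaN(d.getTime())) {
    throw new TypeError("not a date string: " + value);
  }
  return d;
}

const salary = toInteger("57871");
const dob = toDate("1986-08-21");

console.log(salary);            // 57871
console.log(dob.toISOString()); // 1986-08-21T00:00:00.000Z
```

Validating before converting matters in a bulk run: a single malformed value would otherwise be written as NaN or an invalid date across the affected documents.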

Inefficient Approach (for relatively small data): For smaller collections, one might use a cursor’s forEach() method to update each document.

    db.test.find({
        "salary": { "$exists": true, "$type": 2 }, // Type 2 is string
        "dob": { "$exists": true, "$type": 2 }
    }).snapshot().forEach(function(doc){
        var newSalary = parseInt(doc.salary, 10); // explicit radix: always parse as decimal
        var newDob = new ISODate(doc.dob);
        db.test.updateOne(
            { "_id": doc._id },
            { "$set": { "salary": newSalary, "dob": newDob } }
        );
    });

This code does the job, but it performs poorly on large collections because it loops through the whole dataset and sends each update as a separate server request.

Efficient Approach using Bulk() API (MongoDB versions ≥ 2.6 and < 3.2): The Bulk() API improves performance significantly by sending write operations to the server in batches, here one batch per 1000 operations, instead of one request per document. In this example, initializeUnorderedBulkOp() lets the server execute the batched writes in parallel and in a nondeterministic order.

    var bulk = db.test.initializeUnorderedBulkOp(),
        counter = 0; // counter to keep track of the batch update size

    db.test.find({
        "salary": { "$exists": true, "$type": 2 },
        "dob": { "$exists": true, "$type": 2 }
    }).snapshot().forEach(function(doc){
        var newSalary = parseInt(doc.salary, 10);
        var newDob = new ISODate(doc.dob);
        bulk.find({ "_id": doc._id }).updateOne({
            "$set": { "salary": newSalary, "dob": newDob }
        });

        counter++; // increment counter
        if (counter % 1000 == 0) {
            bulk.execute(); // Execute per 1000 operations and re-initialise
            bulk = db.test.initializeUnorderedBulkOp();
        }
    });

    // Execute any remaining operations after the loop
    if (counter % 1000 != 0) {
        bulk.execute();
    }

Efficient Approach using bulkWrite() API (MongoDB versions ≥ 3.2): From MongoDB 3.2 onward, bulkWrite() supersedes the Bulk() API. This approach builds an array of write operations and passes it to db.test.bulkWrite(). The operations should be batched into groups of no more than 1000, and the array reset each time the limit is reached.

    var cursor = db.test.find({
            "salary": { "$exists": true, "$type": 2 },
            "dob": { "$exists": true, "$type": 2 }
        }),
        bulkUpdateOps = [];

    cursor.snapshot().forEach(function(doc){
        var newSalary = parseInt(doc.salary, 10);
        var newDob = new ISODate(doc.dob);
        bulkUpdateOps.push({
            "updateOne": {
                "filter": { "_id": doc._id },
                "update": { "$set": { "salary": newSalary, "dob": newDob } }
            }
        });

        if (bulkUpdateOps.length === 1000) {
            db.test.bulkWrite(bulkUpdateOps);
            bulkUpdateOps = [];
        }
    });

    // Execute any remaining operations after the loop
    if (bulkUpdateOps.length > 0) {
        db.test.bulkWrite(bulkUpdateOps);
    }

In these examples, the snapshot() cursor method guards against problems with concurrent updates: it ensures that the cursor does not return the same document more than once, even if a write moves the document while the cursor is iterating. Note that snapshot() was removed in MongoDB 4.0, so on newer versions the call must be omitted.
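The batch-and-flush pattern used in the examples above can be factored into a small helper and exercised without a server. This is a minimal sketch under stated assumptions: `toUpdateOp` and `writeInBatches` are hypothetical names, `send` is a callback supplied by the caller, and `Date` stands in for ISODate() so the code runs in plain JavaScript.

```javascript
// Build one updateOne operation per document, in the shape bulkWrite() expects.
function toUpdateOp(doc) {
  return {
    updateOne: {
      filter: { _id: doc._id },
      update: {
        $set: { salary: parseInt(doc.salary, 10), dob: new Date(doc.dob) },
      },
    },
  };
}

// Send operations in batches of at most batchSize and flush the remainder.
// `send` is a hypothetical callback; in the shell it could be
// function (ops) { db.test.bulkWrite(ops); }
function writeInBatches(docs, batchSize, send) {
  let batch = [];
  let batchesSent = 0;
  for (const doc of docs) {
    batch.push(toUpdateOp(doc));
    if (batch.length === batchSize) {
      send(batch);
      batchesSent++;
      batch = []; // start a fresh array for the next batch
    }
  }
  if (batch.length > 0) {
    send(batch); // remaining operations after the loop
    batchesSent++;
  }
  return batchesSent;
}

// Stubbed run: 2500 fake documents and a send() that only records batch sizes.
const fakeDocs = [];
for (let i = 0; i < 2500; i++) {
  fakeDocs.push({ _id: i, salary: String(50000 + i), dob: "1986-08-21" });
}
const sentSizes = [];
const batchCount = writeInBatches(fakeDocs, 1000, (ops) => sentSizes.push(ops.length));

console.log(batchCount, sentSizes); // 3 [ 1000, 1000, 500 ]
```

Passing the sender in as a callback keeps the batching logic testable on its own and lets the same helper be reused for any collection.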

Performance Considerations

- Reduced Network Roundtrips: Bulk operations cut the number of round trips between the client and the server. Sending fewer, larger requests reduces both overhead and latency.

- Server-Side Optimisation: MongoDB can optimise how writes are applied to disk by internally grouping, and possibly reordering, unordered bulk operations.

- Atomicity: Each individual operation in a bulk write is atomic at the single-document level, but the bulk operation as a whole is not atomic across documents. If an error occurs during an unordered bulk operation, some documents may be updated while others are not. An ordered operation stops at the first error, but the operations that already succeeded are not rolled back.

- Indexing Overhead: For every write operation (insert, update, or delete), MongoDB must update all affected indexes in addition to the data itself, so write overhead grows with the number of indexes on a collection. Full-text indexes are especially “heavyweight” because they require string tokenisation and stemming, which slows writes further.

- Sharded Clusters: In sharded environments, mongos routes write operations to the appropriate shards. If the shard key’s values increase or decrease monotonically, all inserts may target a single shard, which limits performance. Multi-update operations are typically broadcast to all shards if the query does not fully specify the shard key. For large initial data loads, it is advisable to pre-split the collection and insert through several mongos instances in parallel, so that data is distributed evenly from the start and bottlenecks are avoided. Chunk migrations, which rebalance data among shards, can also affect performance.

- Oplog Size: Multi-document operations, such as bulk deletions or multi-updates, are “exploded” into a separate oplog entry for each affected document. This can quickly fill the oplog, the operation log used for replication, and cause secondaries to lag behind the primary. A larger oplog may be required when running many multi-updates.
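The round-trip saving from the first point in the list above is simple arithmetic, sketched below in plain JavaScript. The function names are hypothetical, and the 1 ms round-trip time is an assumed figure for illustration only, not a measurement.

```javascript
// Number of client-server requests needed for a given batch size.
function roundTrips(operationCount, batchSize) {
  return Math.ceil(operationCount / batchSize);
}

// Rough network-only cost, given an assumed round-trip time per request.
function networkTimeMs(operationCount, batchSize, roundTripMs) {
  return roundTrips(operationCount, batchSize) * roundTripMs;
}

const totalOps = 250000;
const assumedRoundTripMs = 1; // assumption for illustration

const oneByOne = roundTrips(totalOps, 1);
const batched = roundTrips(totalOps, 1000);

console.log(oneByOne); // 250000
console.log(batched);  // 250
console.log(networkTimeMs(totalOps, 1, assumedRoundTripMs));    // 250000
console.log(networkTimeMs(totalOps, 1000, assumedRoundTripMs)); // 250
```

The estimate covers network waiting time only; server-side work such as index updates and oplog writes is the same regardless of how the operations are batched.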