Gated Recurrent Unit (GRU)

A Gated Recurrent Unit (GRU) is a type of Recurrent Neural Network (RNN) architecture designed to process sequential data such as speech, time series, and natural language. It simplifies the structure of the Long Short-Term Memory (LSTM) network, which usually makes it faster to train. GRUs use gating mechanisms to regulate the flow of information over time. This lets the network selectively update its hidden state at each time step, retaining important information and discarding irrelevant data.

History of GRU

The GRU was introduced by Kyunghyun Cho et al. in 2014. It was designed to handle long sequences in which related pieces of context may be far apart. For example, in the sentence “My mom gave me a bicycle on my birthday because she knew that I wanted to go biking with my friends,” the words “bicycle” and “go biking” are related even though many words separate them.

How GRU Works

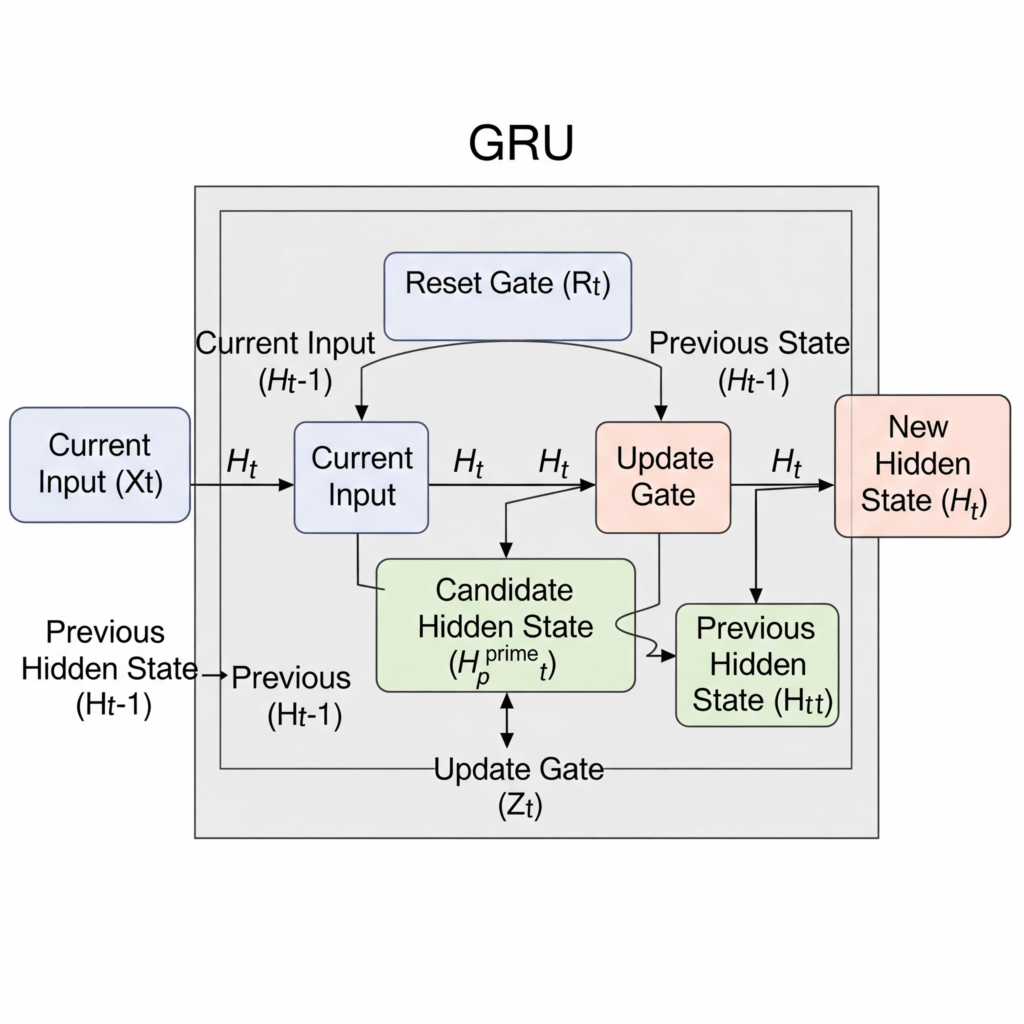

The core component of a GRU network is the GRU cell. The network processes sequential data by passing the hidden state from one time step to the next. At each step, it decides which past information is relevant to the current step and which can be ignored. A GRU cell takes two inputs: the input at the current time step (x_t) and the previous hidden state (h_{t-1}).
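For reference, the computations performed by a GRU cell can be written as follows. Here x_t is the current input, h_{t-1} the previous hidden state, σ the logistic sigmoid, ⊙ element-wise multiplication, and W, U, and b learned weight matrices and bias vectors. The subsections below describe each term. This is one common formulation, not the only one. Cho et al.'s original paper and PyTorch's `nn.GRU` swap the roles of z_t and 1 - z_t. PyTorch also applies the reset gate after multiplying the previous hidden state by its weight matrix, rather than before.

```latex
\begin{aligned}
r_t &= \sigma\left(W_r x_t + U_r h_{t-1} + b_r\right) \\
z_t &= \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right) \\
\tilde{h}_t &= \tanh\left(W_h x_t + U_h \left(r_t \odot h_{t-1}\right) + b_h\right) \\
h_t &= \left(1 - z_t\right) \odot h_{t-1} + z_t \odot \tilde{h}_t
\end{aligned}
```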

Reset Gate

At each time step, the reset gate (r_t) determines how much of the previous hidden state to ignore when forming the new candidate hidden state. In essence, it controls how much of the past is forgotten. The gate applies a sigmoid function to a linear transformation of the current input and the previous hidden state, so each output value lies between 0 and 1. Values near 0 discard the corresponding parts of the previous state, and values near 1 keep them.
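The reset-gate computation can be sketched in plain Python. This is a minimal, dependency-free illustration rather than a library implementation. The helper names (`sigmoid`, `matvec`, `reset_gate`) and the toy weights are hypothetical, chosen only to show the shape of the calculation: a sigmoid applied to W_r x_t + U_r h_{t-1} + b_r.

```python
import math

def sigmoid(v):
    """Logistic sigmoid: maps any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))

def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v (a list)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def reset_gate(x_t, h_prev, W_r, U_r, b_r):
    """r_t = sigmoid(W_r x_t + U_r h_prev + b_r), one value per hidden unit."""
    pre = [a + b + c for a, b, c in zip(matvec(W_r, x_t), matvec(U_r, h_prev), b_r)]
    return [sigmoid(v) for v in pre]

# Hypothetical toy sizes: 2 input features, 3 hidden units.
W_r = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
U_r = [[0.2, 0.0, -0.1], [0.0, 0.3, 0.1], [0.1, -0.2, 0.0]]
b_r = [0.0, 0.1, -0.1]

x_t = [1.0, 0.5]
h_prev = [0.2, -0.4, 0.6]
r_t = reset_gate(x_t, h_prev, W_r, U_r, b_r)
print([round(v, 3) for v in r_t])  # each value lies strictly between 0 and 1
```

The update gate (z_t) uses exactly the same form, but with its own weights W_z, U_z, and b_z.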

Update Gate

The update gate (z_t) determines how much of the current cell's information is carried forward to the next time step, which helps the network retain the most important details. It balances the candidate activation against the previous activation. In essence, it controls what proportion of the newly computed information is used to update the hidden state. Like the reset gate, it uses a sigmoid function, so its output ranges from 0 to 1.
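One way to see how the update gate controls memory is to assume the convention h_t = (1 - z_t) ⊙ h_{t-1} + z_t ⊙ h̃_t, in which z_t weights the new information. Unrolling that recurrence shows that the share of an earlier state h_0 still present after n steps is the product of (1 - z_t) over those steps. The sketch below computes that product for a single hidden unit. The gate values are made-up inputs, not outputs of a trained model, and `retained_fraction` is a hypothetical helper.

```python
def retained_fraction(z_values):
    """Coefficient of h_0 in h_n when h_t = (1 - z_t) * h_{t-1} + z_t * candidate_t.

    Each step keeps a (1 - z_t) share of the previous state, so the
    contribution of the initial state is the product of those shares.
    """
    fraction = 1.0
    for z in z_values:
        fraction *= (1.0 - z)
    return fraction

# Update gate mostly closed (z close to 0): the old state survives many steps.
print(retained_fraction([0.05] * 20))
# Update gate mostly open (z close to 1): the old state is overwritten quickly.
print(retained_fraction([0.9] * 3))
```

The same (1 - z_t) factors appear on the direct gradient path from h_t back to h_{t-1}. Informally, this is why a GRU whose update gate stays near 0 can carry both information and gradients across many time steps.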

Candidate Hidden State

The candidate hidden state (h̃_t) is computed from the reset gate's output and represents the new memory content that the cell proposes for the current time step. Its computation combines the current input with a “reset” version of the previous hidden state, obtained by multiplying the previous hidden state element-wise by r_t. The result is passed through a tanh function, so its values lie between -1 and 1.
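The sketch below shows the candidate-state calculation in plain Python, assuming the formulation h̃_t = tanh(W_h x_t + U_h (r_t ⊙ h_{t-1}) + b_h). The weights and helper names are hypothetical. Running it with the reset gate fully open and then fully closed shows how r_t decides how much of the past enters the candidate.

```python
import math

def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v (a list)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def candidate_state(x_t, h_prev, r_t, W_h, U_h, b_h):
    """h~_t = tanh(W_h x_t + U_h (r_t * h_prev) + b_h).

    The reset gate r_t scales each component of h_prev before it is mixed
    with the current input; r_t near 0 makes the candidate ignore that
    component of the past.
    """
    reset_h = [r * h for r, h in zip(r_t, h_prev)]  # element-wise r_t ⊙ h_prev
    wx = matvec(W_h, x_t)
    uh = matvec(U_h, reset_h)
    return [math.tanh(a + b + c) for a, b, c in zip(wx, uh, b_h)]

# Hypothetical toy weights: 2 input features, 2 hidden units.
W_h = [[0.4, -0.1], [0.2, 0.3]]
U_h = [[0.5, -0.3], [0.1, 0.6]]
b_h = [0.0, 0.0]
x_t = [1.0, -1.0]
h_prev = [0.8, -0.5]

# Reset gate fully open: the candidate uses the whole previous state.
print(candidate_state(x_t, h_prev, [1.0, 1.0], W_h, U_h, b_h))
# Reset gate closed: the candidate depends on the current input only.
print(candidate_state(x_t, h_prev, [0.0, 0.0], W_h, U_h, b_h))
```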

Hidden State

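The new hidden state (h_t) is the GRU cell's final output at the current time step. It is a weighted average of the candidate hidden state and the previous hidden state, with the weights set by the update gate. Where z_t is close to 1, the new state is mostly the candidate. Where z_t is close to 0, the previous state is largely carried forward unchanged.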
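The pieces above can be combined into a complete GRU step and run over a short sequence. This is a minimal sketch in plain Python, assuming the convention h_t = (1 - z_t) ⊙ h_{t-1} + z_t ⊙ h̃_t. The random initialiser and all helper names are hypothetical and are not the scheme any particular library uses. A real model would use a framework such as Keras, TensorFlow, or PyTorch.

```python
import math
import random

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def affine(W, U, b, x, h):
    """Element-wise W x + U h + b."""
    return [a + c + d for a, c, d in zip(matvec(W, x), matvec(U, h), b)]

def gru_step(x_t, h_prev, p):
    """One GRU time step using the convention h_t = (1 - z) * h_prev + z * candidate."""
    r = [sigmoid(v) for v in affine(p["W_r"], p["U_r"], p["b_r"], x_t, h_prev)]
    z = [sigmoid(v) for v in affine(p["W_z"], p["U_z"], p["b_z"], x_t, h_prev)]
    reset_h = [ri * hi for ri, hi in zip(r, h_prev)]
    cand = [math.tanh(v) for v in affine(p["W_h"], p["U_h"], p["b_h"], x_t, reset_h)]
    # Weighted average of old state and candidate, weights set by the update gate.
    return [(1.0 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h_prev, cand)]

def init_params(n_in, n_hid, seed=0):
    """Hypothetical initialiser: small uniform random weights, zero biases."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    p = {}
    for g in ("r", "z", "h"):
        p["W_" + g] = mat(n_hid, n_in)   # input-to-hidden weights
        p["U_" + g] = mat(n_hid, n_hid)  # hidden-to-hidden weights
        p["b_" + g] = [0.0] * n_hid
    return p

def gru_forward(xs, n_hid, p):
    """Run the cell over a sequence, passing the hidden state from step to step."""
    h = [0.0] * n_hid
    states = []
    for x_t in xs:
        h = gru_step(x_t, h, p)
        states.append(h)
    return states

params = init_params(n_in=2, n_hid=4)
sequence = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.5]]
states = gru_forward(sequence, n_hid=4, p=params)
print(len(states), [round(v, 3) for v in states[-1]])
```

Setting the update-gate bias b_z to a large negative value drives z_t toward 0. The cell then mostly copies h_{t-1} forward, which is the "remembering" behaviour described above.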

Backpropagation

During training, the GRU's prediction is compared with the true label to compute an error (loss), and that error is propagated backward through the network. Backpropagation computes how the loss changes with respect to the input, gate, and hidden-layer weights and biases. Gradient descent then uses these gradients (slopes) to adjust the parameters in the direction that lowers the loss. This iterative process continues until the loss stops decreasing meaningfully and the model's predictions improve. Because a GRU applies the same weights at every time step, gradients are propagated backward through the whole sequence (backpropagation through time). The update to the shared weights therefore reflects the error from every time step, including error that flows back from later steps.
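The training loop described above (compute the loss, compute gradients, step against them, repeat) can be sketched for a GRU with a single hidden unit. To keep the example short and dependency-free, gradients are estimated with central finite differences instead of true backpropagation through time. A framework such as PyTorch or TensorFlow would compute the same gradients analytically with automatic differentiation. The sequence, target, learning rate, and step count are arbitrary illustrative values.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def scalar_gru_final_state(params, xs):
    """Run a GRU with one hidden unit over inputs xs and return the final state h_T."""
    w_r, u_r, b_r, w_z, u_z, b_z, w_h, u_h, b_h = params
    h = 0.0
    for x in xs:
        r = sigmoid(w_r * x + u_r * h + b_r)
        z = sigmoid(w_z * x + u_z * h + b_z)
        cand = math.tanh(w_h * x + u_h * (r * h) + b_h)
        h = (1.0 - z) * h + z * cand
    return h

def loss(params, xs, target):
    """Squared error between the final hidden state and the label."""
    return (scalar_gru_final_state(params, xs) - target) ** 2

def numerical_grad(params, xs, target, eps=1e-6):
    """Central finite differences: a stand-in for backpropagation through time."""
    grad = []
    for i in range(len(params)):
        up = list(params)
        up[i] += eps
        down = list(params)
        down[i] -= eps
        grad.append((loss(up, xs, target) - loss(down, xs, target)) / (2 * eps))
    return grad

def train(params, xs, target, lr=0.5, steps=100):
    """Plain gradient descent: repeatedly move each parameter against its gradient."""
    params = list(params)
    for _ in range(steps):
        g = numerical_grad(params, xs, target)
        params = [p - lr * gi for p, gi in zip(params, g)]
    return params

xs = [0.5, -0.3, 0.8, 0.1]
target = 0.6
start = [0.1] * 9
trained = train(start, xs, target)
print(loss(start, xs, target), loss(trained, xs, target))
```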

Features of GRU

When compared to Long Short-Term Memory (LSTM) networks, GRUs are distinguished by their more straightforward construction. Important characteristics include:

- Fewer Parameters: A GRU cell generally has fewer parameters than an LSTM cell. It uses three sets of weight matrices (for the reset gate, the update gate, and the candidate state) rather than four. This is because, unlike an LSTM, it has no separate cell state or output gate.

- Two Main Gates: A GRU cell is built around two gates, an update gate and a reset gate. An LSTM has three: the input, forget, and output gates.

- Direct Hidden State Storage: GRUs have a simpler structure than LSTMs because they store information directly in the hidden state rather than maintaining a distinct internal cell state.

- Mitigates the Vanishing Gradient Problem: Like LSTMs, GRUs were designed to address the vanishing gradient problem that conventional RNNs frequently face. Their gates control how information and gradients flow through time, so important information is retained and gradients do not shrink as quickly over long sequences.
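The parameter difference listed above can be made concrete with a quick count. This sketch assumes each gate or candidate block has an input matrix, a recurrent matrix, and one bias vector. Some frameworks keep two bias vectors per block; PyTorch's `nn.GRU`, for example, has separate `bias_ih` and `bias_hh`. Their counts are therefore slightly higher, such as 3 × (20·10 + 20·20 + 2·20) = 1920 for the first GRU case below. The function names are hypothetical.

```python
def rnn_param_count(n_in, n_hid, n_blocks):
    """Each block has an input matrix (n_hid x n_in), a recurrent matrix
    (n_hid x n_hid) and one bias vector (n_hid)."""
    return n_blocks * (n_hid * n_in + n_hid * n_hid + n_hid)

def gru_param_count(n_in, n_hid):
    return rnn_param_count(n_in, n_hid, n_blocks=3)  # reset, update, candidate

def lstm_param_count(n_in, n_hid):
    return rnn_param_count(n_in, n_hid, n_blocks=4)  # input, forget, output, cell candidate

for n_in, n_hid in [(10, 20), (128, 256)]:
    g, l = gru_param_count(n_in, n_hid), lstm_param_count(n_in, n_hid)
    print(f"in={n_in} hid={n_hid}: GRU={g} LSTM={l} ratio={g / l:.2f}")
```

Under this counting, a GRU always has three quarters of the parameters of an LSTM with the same input and hidden sizes.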

Gated Recurrent Unit Types

- Fully Gated Unit: This is the standard form described above, in which both gates depend on the previous hidden state and the current input. Other variants of the fully gated unit are produced by changing how the update (z_t) and reset (r_t) gates are computed. For example, the gates can be made to depend only on the previous hidden state, or only on the bias.

- Minimal Gated Unit (MGU): The MGU is similar to the fully gated unit, but it merges the update and reset gate vectors into a single forget gate. Reducing the number of gates to one simplifies the architecture further.

- Light Gated Recurrent Unit (LiGRU): This variant removes the reset gate entirely, replaces the tanh activation function with ReLU, and adds batch normalization (BN). Analysing LiGRU from a Bayesian perspective produced a further variant, the Light Bayesian Recurrent Unit (LiBRU).
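The single-gate idea behind the MGU can be sketched for one hidden unit. The sketch assumes the formulation commonly given for the MGU: f_t = σ(w_f x_t + u_f h_{t-1} + b_f), h̃_t = tanh(w_h x_t + u_h (f_t h_{t-1}) + b_h), and h_t = (1 - f_t) h_{t-1} + f_t h̃_t. The parameter values and names are hypothetical, and a real layer would use vectors and matrices rather than scalars.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def mgu_step(x_t, h_prev, p):
    """One Minimal Gated Unit step for a single hidden unit.

    The single forget gate f plays both roles of the GRU's gates:
    it scales h_prev inside the candidate (reset role) and blends
    the old state with the candidate (update role).
    """
    f = sigmoid(p["w_f"] * x_t + p["u_f"] * h_prev + p["b_f"])
    cand = math.tanh(p["w_h"] * x_t + p["u_h"] * (f * h_prev) + p["b_h"])
    return (1.0 - f) * h_prev + f * cand

# Hypothetical scalar parameters: two weight blocks (gate and candidate)
# instead of the GRU's three.
params = {"w_f": 0.6, "u_f": -0.4, "b_f": 0.0, "w_h": 0.9, "u_h": 0.5, "b_h": 0.1}

h = 0.0
for x in [0.5, -1.0, 0.3, 0.8]:
    h = mgu_step(x, h, params)
print(round(h, 4))
```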

Advantages of GRU

- Efficiency: Their simplified design and fewer parameters make GRUs computationally cheaper and faster to train than LSTMs. This helps when large datasets must be processed quickly.

- Long-Term Dependencies: Like LSTMs, GRUs handle long-term dependencies in sequential data well. Their gating mechanisms let them selectively remember or forget information, which makes them useful for tasks such as forecasting and machine translation.

- Less Prone to Gradient Issues: Standard RNNs are susceptible to vanishing and exploding gradients. The gating mechanisms help alleviate the vanishing gradient problem in particular, resulting in more stable training and better learning over long sequences.

- Simplicity: GRUs merge the LSTM's input and forget gates into a single update gate, which simplifies the design.

Disadvantages of GRU

- Less Expressive Gating: GRUs are effective, but their two-gate mechanism is simpler than the LSTM's three gates and separate cell state. On some tasks, this may make it harder for them to capture very long-term dependencies or complex relationships.

- Overfitting: Like other neural networks, GRUs can overfit, especially on smaller datasets, so careful hyperparameter optimisation is needed.

- Limited Interpretability: The internal workings of a GRU, and how it arrives at its forecasts and decisions, are complex and hard to interpret.

Challenges of GRU

The primary difficulties with GRUs are frequently related to the intricacy of deep learning models and their comparison to LSTMs:

- Efficiency versus Accuracy Trade-off: Although the GRU's simplified structure makes it computationally efficient, neither GRUs nor LSTMs consistently outperform the other. Speed-critical applications often favour GRUs, while LSTMs may be chosen for tasks where accuracy is crucial. As a result, selecting the best architecture for a particular application and dataset can be difficult and usually requires experimentation.

- Modelling Complex Systems: Compared with LSTMs, the simpler structure of GRUs may limit how well they can model highly complex systems.

- Hyperparameter Tuning: As with many neural networks, good performance requires thorough hyperparameter tuning to avoid problems such as overfitting.

Uses for Gated Recurrent Units

Because GRUs can analyse sequential data, they are widely used in many different fields:

- Natural Language Processing (NLP):

  - Machine Translation: Producing fluent translations into another language by analysing context in the source text.

  - Text Summarization: Extracting the important information from text passages to create concise summaries.

  - Chatbots: Capturing the context of a conversation and responding naturally.

  - Sentiment Analysis: Examining word sequences to determine the overall sentiment.

- Speech Recognition: Converting audio into text while coping with variations in speech.

- Time Series Forecasting: Analysing past data (such as sales figures and stock prices) to forecast future patterns, using GRUs' ability to capture long-term dependencies.

- Anomaly Detection: Finding unusual patterns in data sequences, for example to detect fraud or network intrusions.

- Music Generation: Creating new musical compositions in a variety of styles by analysing chord and note patterns.

- Biomedical Applications: Used in fields such as ECG arrhythmia classification and brain tumour segmentation of MRI images.

Requirements for GRU Study

The following background is recommended for understanding Gated Recurrent Units:

- A basic understanding of deep learning and neural networks.

- Knowledge of ideas like backpropagation and gradient descent.

- Knowledge of the vanishing gradient issue that recurrent neural networks (RNNs) may encounter.

- The fundamentals of linear algebra, especially matrix operations.

- Familiarity with Python programming and related libraries, such as PyTorch, Keras, and TensorFlow.

LSTM vs GRU

| Feature | LSTM (Long Short-Term Memory) | GRU (Gated Recurrent Unit) |
|---|---|---|
| Structure | More complex, with three gates (input, forget, and output) | Simpler, with two gates (update and reset) |
| Parameters | More parameters (four sets of weight matrices) | Fewer parameters (three sets of weight matrices) |
| Cell State | Yes, a separate cell state (C_t) | No, information is stored directly in the hidden state |
| Training Speed | Usually slower to train because of its complexity | Usually faster to train because of its simpler architecture |
| Computational Load | Higher, due to more gates and parameters | Lower, due to fewer gates and parameters |
| Memory Resources | May require more memory | Tends to use less memory |
| Performance | Performs well on many tasks | Often performs similarly to LSTM, and sometimes better |
| Specific Advantages | Often preferred for natural language understanding and machine translation tasks | Better suited to large datasets or sequences where speed is critical |

Implementation in Python

GRUs can be implemented with Python deep learning libraries such as Keras and TensorFlow. A typical time-series implementation follows these steps:

- Import the required libraries, for example NumPy, pandas, and scikit-learn's MinMaxScaler.

- Load and preprocess the dataset (for example, time-series data), including scaling the values.

- Reshape the data into the 3D format the GRU model expects: [samples, time steps, features].

- Build the GRU model with the chosen number of units and layers.

- Train the model using model.fit().

- Make predictions and inverse-transform them back to the original scale.
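The preprocessing steps above (scaling, windowing into [samples, time steps, features], and inverse scaling) can be sketched without any third-party packages. The functions below are hypothetical, dependency-free stand-ins for what scikit-learn's `MinMaxScaler` (default range 0 to 1) and a NumPy reshape would do. The price series is made-up data.

```python
def fit_min_max(values):
    """Return (min, max) of the training series; assumes max > min."""
    return min(values), max(values)

def scale(values, lo, hi):
    """Map values into [0, 1] using the fitted range."""
    return [(v - lo) / (hi - lo) for v in values]

def inverse_scale(scaled, lo, hi):
    """Undo scale(): map [0, 1] values back to the original units."""
    return [s * (hi - lo) + lo for s in scaled]

def make_windows(series, time_steps):
    """Build [samples, time steps, features] inputs and next-value targets.

    Each sample is `time_steps` consecutive values (one feature each);
    the target is the value that follows the window.
    """
    X, y = [], []
    for start in range(len(series) - time_steps):
        window = series[start:start + time_steps]
        X.append([[v] for v in window])  # one feature per time step
        y.append(series[start + time_steps])
    return X, y

prices = [10.0, 11.0, 12.5, 12.0, 13.0, 14.5, 15.0, 14.0]  # made-up values
lo, hi = fit_min_max(prices)
scaled = scale(prices, lo, hi)
X, y = make_windows(scaled, time_steps=3)
print(len(X), len(X[0]), len(X[0][0]))  # samples, time steps, features: 5 3 1
```

Converting `X` with `numpy.array(X)` gives an array of shape (5, 3, 1), the (batch, time steps, features) layout a Keras `GRU` layer expects as input. In a real project, the scaling range should be fitted on the training portion of the data only, so that information from the test period does not leak into training.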