Discover how feedforward neural networks are applied across industries, and why their simple design still delivers efficient, accurate solutions.

What is a FeedForward Neural Network?

A feedforward neural network is one of the simplest kinds of artificial neural network. Information flows in a single direction, forward: from the input nodes, through the hidden nodes (if any), to the output nodes. The network contains no loops or cycles. Feedforward networks were the first kind of artificial neural network to be devised, and they are simpler than their convolutional and recurrent cousins.

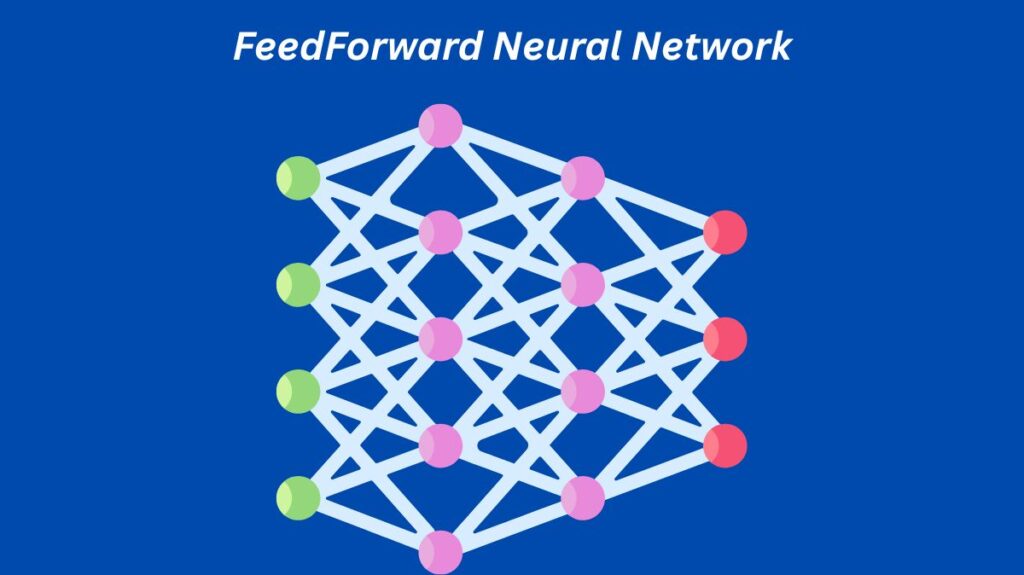

Feedforward Neural Network Architecture

Three different kinds of layers make up a feedforward neural network’s architecture: input, hidden, and output layers. The layers are connected by weights, and each layer is composed of units called neurons.

- Input Layer: This layer consists of neurons that accept the input signals and forward them to the following layer. The dimensions of the input data dictate how many neurons the input layer has.

- Hidden Layers: These layers sit between the input and output layers and are not directly exposed to either, so they can be thought of as the network's computational engine. Each neuron in a hidden layer computes a weighted sum of the outputs from the preceding layer, applies an activation function to it, and passes the result on to the subsequent layer. A network may have zero or more hidden layers.

- Output Layer: This is the last layer, which generates the output for the supplied inputs. The number of outputs the network is intended to produce determines how many neurons the output layer has.

The network is fully connected: every neuron in one layer is connected to every neuron in the next layer. Weights indicate how strongly neurons are connected, and learning in a neural network means adjusting these weights in response to the error in the output.
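As a rough illustration of the layer structure described above, the sketch below stores a fully connected network as one weight matrix and one bias vector per pair of adjacent layers. The layer sizes (4 inputs, 5 hidden neurons, 3 outputs) and the initialisation scale are arbitrary assumptions chosen for the example, not values from this article.

```python
import numpy as np

# Hypothetical layer sizes: 4 input features, one hidden layer of 5 neurons, 3 outputs.
layer_sizes = [4, 5, 3]

rng = np.random.default_rng(0)

# One weight matrix and one bias vector per pair of adjacent layers.
# weights[i] has shape (neurons in layer i, neurons in layer i + 1), so every
# neuron in one layer is connected to every neuron in the next layer.
weights = [
    rng.normal(0.0, 0.1, size=(n_in, n_out))
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
]
biases = [np.zeros(n_out) for n_out in layer_sizes[1:]]

weight_shapes = [w.shape for w in weights]
n_parameters = sum(w.size for w in weights) + sum(b.size for b in biases)

print(weight_shapes)   # [(4, 5), (5, 3)]
print(n_parameters)    # 4*5 + 5 + 5*3 + 3 = 43
```

Adding a hidden layer only means adding another entry to `layer_sizes`; the input and output sizes stay tied to the data and to the number of desired outputs.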

How Does a Feedforward Neural Network Work?

There are two stages in the operation of a feedforward neural network: the feedforward phase and the backpropagation phase.

- Feedforward Phase: During this stage, the network receives input data and propagates it forward. At each hidden layer, the weighted sum of the inputs is computed and passed through an activation function, which is what makes the model non-linear. This continues until the output layer is reached and a prediction is produced.

- Backpropagation Phase: After a prediction has been made, the error is calculated, that is, the discrepancy between the expected and the actual output. This error is then propagated back through the network, and the weights are adjusted to minimise it. The weight adjustment is usually performed with a gradient descent optimisation method.
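The two phases above can be sketched for a single training example as follows. This is a minimal illustration under stated assumptions, not a reference implementation: the input, target, layer sizes, sigmoid activations, squared-error loss and learning rate are all made up for the example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)
x = np.array([[0.5, -1.0, 2.0]])   # one example with 3 features (made up)
y = np.array([[1.0]])              # its expected output (made up)

# Hypothetical network: 3 inputs -> 4 hidden neurons -> 1 output.
W1 = rng.normal(0.0, 0.5, size=(3, 4))
b1 = np.zeros(4)
W2 = rng.normal(0.0, 0.5, size=(4, 1))
b2 = np.zeros(1)

def forward(x):
    h = sigmoid(x @ W1 + b1)       # hidden layer: weighted sum, then activation
    out = sigmoid(h @ W2 + b2)     # output layer
    return h, out

# Feedforward phase: propagate the input forward to get a prediction.
h, out = forward(x)
loss_before = 0.5 * np.sum((out - y) ** 2)

# Backpropagation phase: apply the chain rule from the output back to the input.
delta2 = (out - y) * out * (1.0 - out)      # error signal at the output layer
grad_W2 = h.T @ delta2
grad_b2 = delta2.sum(axis=0)
delta1 = (delta2 @ W2.T) * h * (1.0 - h)    # error signal at the hidden layer
grad_W1 = x.T @ delta1
grad_b1 = delta1.sum(axis=0)

# Gradient descent step: move each parameter against its gradient.
learning_rate = 0.5                          # assumed value
W2 -= learning_rate * grad_W2
b2 -= learning_rate * grad_b2
W1 -= learning_rate * grad_W1
b1 -= learning_rate * grad_b1

_, out_after = forward(x)
loss_after = 0.5 * np.sum((out_after - y) ** 2)
print(loss_before, loss_after)               # the loss should shrink after the step
```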

Activation functions

Activation functions are an essential component of feedforward neural networks. They give the network its non-linear character, which enables the model to learn increasingly intricate patterns. Common activation functions include sigmoid, tanh, and ReLU (Rectified Linear Unit).
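For concreteness, the three activation functions named above can be written in a few lines of NumPy. The sample input values are arbitrary.

```python
import numpy as np

def sigmoid(z):
    """Squashes any real value into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def tanh(z):
    """Squashes any real value into the range (-1, 1)."""
    return np.tanh(z)

def relu(z):
    """Keeps positive values unchanged and replaces negative values with 0."""
    return np.maximum(0.0, z)

z = np.array([-2.0, 0.0, 2.0])   # arbitrary sample inputs
print(sigmoid(z))                # middle value is exactly 0.5
print(tanh(z))                   # middle value is exactly 0.0
print(relu(z))                   # [0. 0. 2.]
```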

Feedforward Neural Network Training

Training a feedforward neural network means adjusting the weights of the connections between neurons using a dataset. The dataset is passed through the network many times, and after each pass the weights are adjusted to lower the prediction error. This iterative procedure, typically driven by gradient descent, continues until the network performs well on the training set.
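The iterative process described above can be sketched as a short training loop. The example below is only an illustration under assumptions: it uses the XOR problem as a toy dataset, a hidden layer of 8 tanh neurons, a sigmoid output, mean squared error, and a learning rate of 0.5, none of which come from this article.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(42)

# Toy dataset (XOR): four examples with two features each.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
Y = np.array([[0], [1], [1], [0]], dtype=float)

# Hypothetical network: 2 inputs -> 8 hidden neurons -> 1 output.
W1 = rng.normal(0.0, 1.0, size=(2, 8))
b1 = np.zeros(8)
W2 = rng.normal(0.0, 1.0, size=(8, 1))
b2 = np.zeros(1)

learning_rate = 0.5   # assumed value
losses = []

for epoch in range(5000):
    # Forward pass over the whole dataset.
    H = np.tanh(X @ W1 + b1)
    P = sigmoid(H @ W2 + b2)
    losses.append(float(np.mean((P - Y) ** 2)))

    # Backward pass: gradients of the mean squared error.
    dZ2 = 2.0 * (P - Y) * P * (1.0 - P) / len(X)
    dW2 = H.T @ dZ2
    db2 = dZ2.sum(axis=0)
    dZ1 = (dZ2 @ W2.T) * (1.0 - H ** 2)
    dW1 = X.T @ dZ1
    db1 = dZ1.sum(axis=0)

    # Weight update: one gradient descent step per pass through the data.
    W1 -= learning_rate * dW1
    b1 -= learning_rate * db1
    W2 -= learning_rate * dW2
    b2 -= learning_rate * db2

print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

Each pass through the loop repeats the same three steps (forward pass, backward pass, update), and the recorded `losses` show whether the prediction error is actually falling.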

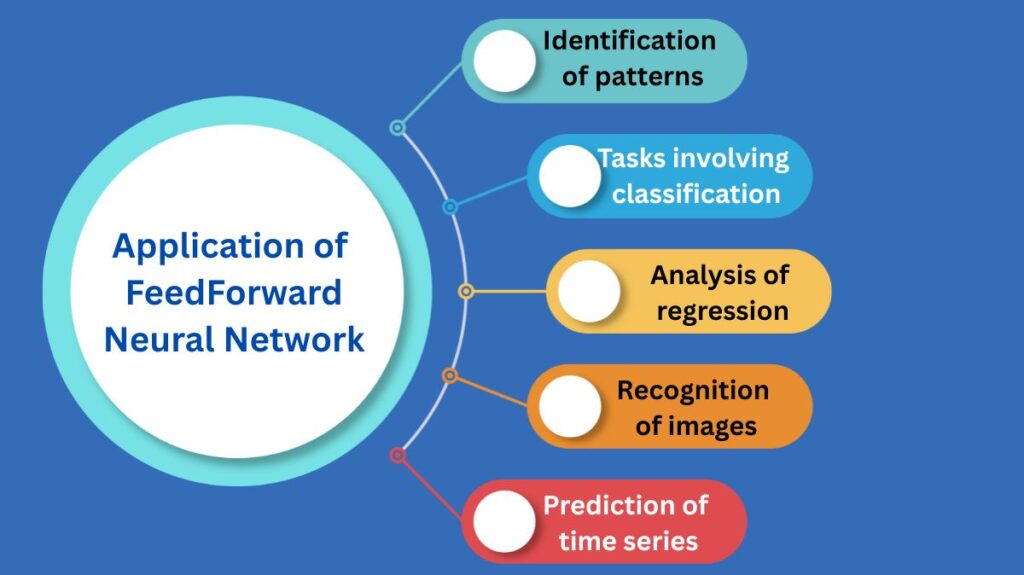

Applications of Feedforward Neural Networks

Feedforward neural networks are used in many machine learning tasks, such as:

- Pattern recognition

- Classification

- Regression analysis

- Image recognition

- Time series prediction

Despite their simplicity, feedforward neural networks can model complex relationships in data and have served as the basis for more elaborate neural network architectures.

Challenges and Limitations

Despite their strengths, feedforward neural networks have drawbacks and limitations. Choosing the number of hidden layers and the number of neurons in each layer is a major challenge, because both choices affect network performance. Overfitting is another: a network that learns the training data too well, noise included, performs poorly on new data.

Feedforward neural networks underpin deep learning. They offer a simple method for modelling data and generating predictions, and they have cleared the path for the more sophisticated architectures used in contemporary artificial intelligence applications.

FeedForward Neural Network Diagram

The Feedforward neural network Function

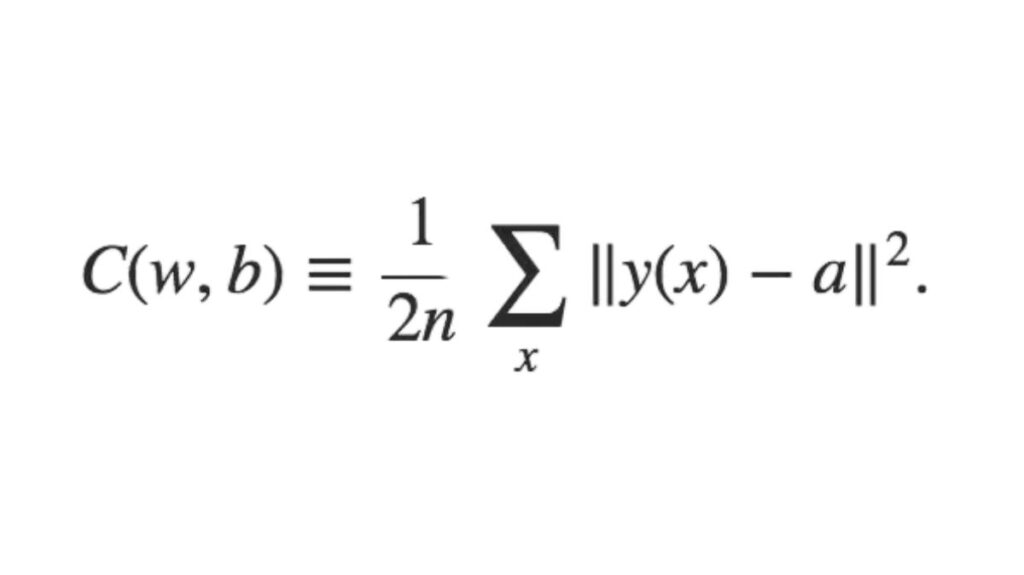

Cost function

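The cost function is crucial to a feedforward neural network. In general, small changes to the weights and biases have little effect on how individual data points are classified, so the number of correctly classified points gives little guidance on how to adjust them.

A smooth cost function, by contrast, changes gradually as the weights and biases change, so it can be used to find small modifications that improve performance.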

The mean square error cost function is defined as follows:

Where,

w = the collection of all weights in the network

b = the biases

n = the number of training inputs

a = the vector of outputs produced by the network for input x

x = an input

‖v‖ = the usual (Euclidean) length of a vector v
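For reference, a cost function consistent with the symbols listed above is the quadratic (mean squared error) cost in its common textbook form. This is a reconstruction under assumptions and may differ from the exact expression in the original figure: y(x) denotes the desired output for input x, a symbol the list above does not define, and the factor 1/2 is a convention that simplifies the derivative.

```latex
C(w, b) = \frac{1}{2n} \sum_{x} \lVert y(x) - a \rVert^{2}
```

The sum runs over all n training inputs x, and the cost approaches zero when the network output a is close to y(x) for every input.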

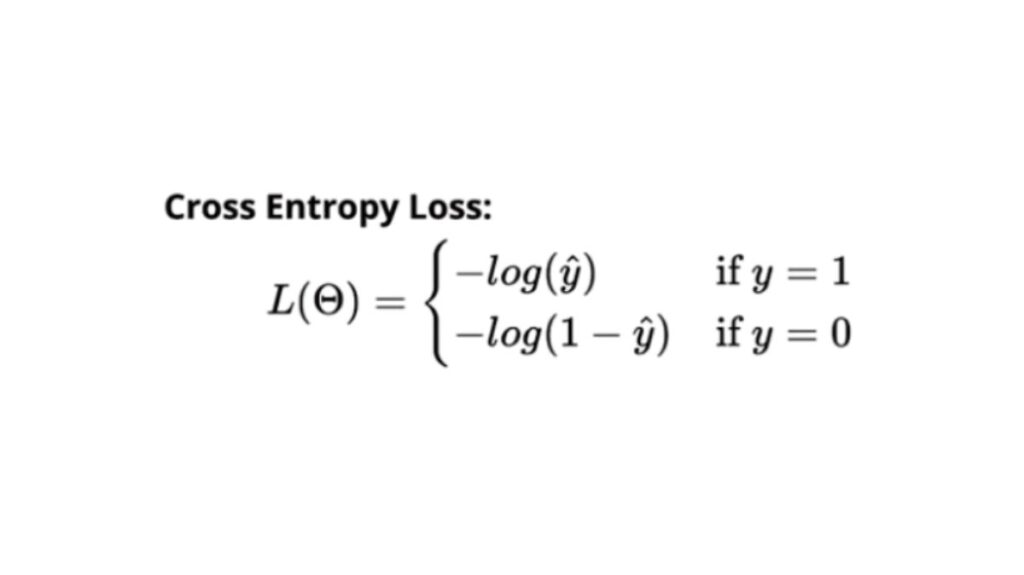

Loss function

A neural network's loss function measures how far its predictions are from the targets, and so indicates whether the learning process needs to be adjusted.

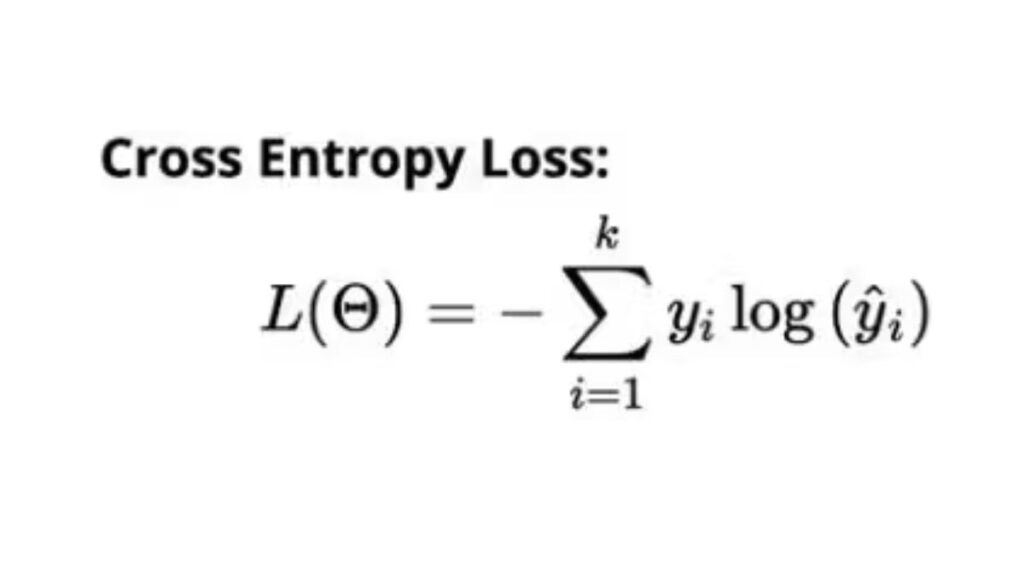

Cross-entropy loss measures the difference between the actual and the predicted probability distributions. For binary classification, it compares a single predicted probability with a 0/1 label.

For multiclass classification, the number of neurons in the output layer equals the number of classes, and the loss is summed over those classes.
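The two cross-entropy losses are commonly written as shown in the sketch below. The notation is an assumption introduced here, not taken from this article: y is the actual label (0 or 1, or a one-hot vector with components y_k), ŷ is the predicted probability, and K is the number of classes.

```latex
% Binary classification: y \in \{0, 1\}, \hat{y} = predicted probability of class 1
L_{\text{binary}} = -\bigl[\, y \log \hat{y} + (1 - y) \log (1 - \hat{y}) \,\bigr]

% Multiclass classification: one-hot target y_k, predicted probabilities \hat{y}_k
L_{\text{multiclass}} = -\sum_{k=1}^{K} y_k \log \hat{y}_k
```

With K = 2 the multiclass form reduces to the binary one, and in both cases the loss is zero only when the predicted probability of the correct class is 1.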

Gradient learning algorithm

In gradient descent, the next point is found by scaling the gradient at the current position by a learning rate and subtracting the result from the current position.

The value is subtracted because the goal is to decrease the function (adding it would increase the function instead). This procedure can be written as:

$$p_{n+1} = p_n - \eta \nabla f(p_n)$$

The parameter η scales the gradient and therefore determines the step size. In machine learning it is called the learning rate, and it has a big impact on performance.
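To make the role of the learning rate concrete, here is a minimal sketch of gradient descent on a one-dimensional function. The function f(p) = (p - 3)², the starting point, and the two learning rates are assumptions chosen for illustration, and `gradient_descent` is a hypothetical helper name.

```python
def gradient_descent(grad, start, learning_rate, n_steps):
    """Repeatedly apply p <- p - learning_rate * grad(p) and record every point."""
    p = start
    path = [p]
    for _ in range(n_steps):
        p = p - learning_rate * grad(p)
        path.append(p)
    return path

# Example function f(p) = (p - 3)^2, whose gradient is 2 * (p - 3).
# Its minimum is at p = 3.
def grad_f(p):
    return 2.0 * (p - 3.0)

# A small learning rate moves steadily towards the minimum.
path = gradient_descent(grad_f, start=0.0, learning_rate=0.1, n_steps=50)

# A learning rate that is too large overshoots further on every step and diverges.
diverging = gradient_descent(grad_f, start=0.0, learning_rate=1.1, n_steps=50)

print(path[1])        # first step: 0 - 0.1 * (-6), i.e. about 0.6
print(path[-1])       # very close to 3
print(diverging[-1])  # far away from 3
```

The same update rule produces convergence or divergence depending only on the learning rate, which is why it needs to be chosen with care.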

Output units

Output units are the units in the output layer that produce the network's final output or prediction, completing the task the network was built for.

The choice of output units is closely tied to the choice of cost function. In a neural network, any unit that can function as a hidden unit can also function as an output unit.
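The link between output units and cost functions can be illustrated with three conventional pairings: a linear output with mean squared error for regression, a sigmoid output with binary cross-entropy, and a softmax output with multiclass cross-entropy. These pairings are common practice rather than something stated in this article, and the function names and input values below are made up for the sketch.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    # Subtracting the row maximum avoids overflow and does not change the result.
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def mean_squared_error(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))

def binary_cross_entropy(y_true, p, eps=1e-12):
    p = np.clip(p, eps, 1.0 - eps)   # keep log() away from 0
    return float(-np.mean(y_true * np.log(p) + (1.0 - y_true) * np.log(1.0 - p)))

def categorical_cross_entropy(y_onehot, probs, eps=1e-12):
    probs = np.clip(probs, eps, 1.0)
    return float(-np.mean(np.sum(y_onehot * np.log(probs), axis=-1)))

# Made-up pre-activation values from a hypothetical final layer.
z_regression = np.array([[2.3]])
z_binary = np.array([[0.0]])
z_multiclass = np.array([[1.0, 1.0, 1.0]])

# Regression: linear (identity) output unit paired with mean squared error.
mse = mean_squared_error(np.array([[2.0]]), z_regression)

# Binary classification: sigmoid output unit paired with binary cross-entropy.
bce = binary_cross_entropy(np.array([[1.0]]), sigmoid(z_binary))

# Multiclass classification: softmax output units paired with cross-entropy.
probs = softmax(z_multiclass)
cce = categorical_cross_entropy(np.array([[0.0, 1.0, 0.0]]), probs)

print(mse)   # (2.0 - 2.3)^2, about 0.09
print(bce)   # -log(0.5) = ln 2
print(cce)   # -log(1/3) = ln 3
```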

Advantages of FeedForward Neural Networks

- Their simple architecture makes feedforward neural networks a practical starting point in machine learning.

- Multiple feedforward networks can operate independently of one another, coordinated by a moderating intermediary.

- Complex tasks require multiple neurons in the network.

- Neural networks can handle and process nonlinear data with ease, whereas doing so with individual perceptrons or sigmoid neurons is complicated.

- A neural network can handle the complicated problem of finding decision boundaries.

- The architecture of a neural network can vary with the data. For instance, convolutional neural networks (CNNs) are very good at processing images, whereas recurrent neural networks (RNNs) are good at processing text and voice.