Neural Turing Machines (NTMs)

Neural Turing Machines (NTMs) are a class of neural network models designed to overcome limitations of conventional recurrent neural networks (RNNs) by giving the network access to an external memory.

Core Concept and Architecture:

- NTMs are neural networks augmented with an external memory that persists across time steps. This memory is distinct from the network’s temporary hidden states.

- The architecture consists of a controller and an external memory matrix. The controller is often a recurrent neural network such as an LSTM, although a feedforward network can also serve as the controller.

- The controller interacts with memory through read and write heads. Each head outputs a weighting, a normalised distribution over memory locations, that determines how strongly each location is read from or written to.

- Because NTM memory operations (reads and writes) are differentiable, the whole system can be trained end to end with gradient-based techniques such as backpropagation.
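
To make the read and write heads concrete, here is a minimal pure-Python sketch of the read operation and the erase-then-add write operation described in the original NTM paper. The function names `read` and `write` and the list-of-lists memory layout are illustrative assumptions. A practical implementation would use a tensor library so that gradients can flow through these operations.

```python
# Minimal sketch of NTM-style differentiable read and write (pure Python).
# Assumptions: memory is an N x M list of lists, and a weighting is a
# length-N list of non-negative values summing to 1, as emitted by a head.


def read(memory, w):
    """Return r[j] = sum_i w[i] * memory[i][j], a weighted blend of rows."""
    width = len(memory[0])
    return [sum(w[i] * memory[i][j] for i in range(len(memory))) for j in range(width)]


def write(memory, w, erase, add):
    """Erase then add: M[i][j] <- M[i][j] * (1 - w[i] * e[j]) + w[i] * a[j].

    Erase entries are assumed to lie in [0, 1]. Returns a new memory.
    """
    return [
        [row[j] * (1.0 - w[i] * erase[j]) + w[i] * add[j] for j in range(len(row))]
        for i, row in enumerate(memory)
    ]


memory = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
focus = [0.0, 1.0, 0.0]  # sharp weighting on location 1
memory = write(memory, focus, erase=[1.0, 1.0], add=[0.5, 2.0])
print(read(memory, focus))            # [0.5, 2.0]
print(read(memory, [0.5, 0.5, 0.0]))  # [0.25, 1.0]
```

Every step is a weighted sum or product. Both operations are therefore differentiable with respect to the weighting, the memory, and the erase and add vectors, and this is what allows end-to-end training.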

Motivation and Advantages over RNNs:

- Conventional recurrent networks such as LSTMs entangle computation and data storage in their hidden states, which act as a temporary memory that is hard to control precisely. By explicitly separating memory from computation, NTMs aim to make memory access more controllable.

- RNNs are theoretically Turing complete: given enough resources, they can in principle simulate any algorithm. In practice, however, they often struggle to learn such algorithms and to generalise to tasks involving long sequences. NTMs are also Turing complete in theory, but their structured memory access gives them practical advantages.

- Because memory is explicitly separated and accessed in a controlled way, NTMs can carry out computations that more closely resemble how human programmers work with data structures. This may lead to better generalisation, particularly on data that differ from the training data, for example sequences longer than those seen during training.

- The structured way in which NTMs manage memory updates can also be viewed as a form of regularisation.

Relation to Memory Networks and Attention:

- NTMs are regarded as a particular kind of memory network.

- NTMs’ selective memory access can be viewed as a form of attention applied to memory: each head assigns a weight to every memory location, and those weights determine what is read or written.
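
One concrete form of this attention is the NTM’s content-based addressing. A head emits a key vector and a key strength β, and the weighting is a softmax over β times the cosine similarity between the key and each memory row. The sketch below assumes that formulation. `content_weighting` and the example memory are hypothetical names and values chosen for illustration.

```python
import math


def cosine_similarity(u, v, eps=1e-8):
    """Cosine of the angle between u and v; eps avoids division by zero."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + eps)


def content_weighting(memory, key, beta):
    """w[i] = softmax over i of beta * cos(key, memory[i])."""
    scores = [beta * cosine_similarity(key, row) for row in memory]
    top = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


memory = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
key = [1.0, 0.0]
print([round(x, 3) for x in content_weighting(memory, key, beta=1.0)])   # diffuse
print([round(x, 3) for x in content_weighting(memory, key, beta=20.0)])  # sharp, mostly row 0
```

A larger `beta` concentrates the weighting on the most similar row, while a small `beta` spreads it across rows. The head can therefore move smoothly between sharp and diffuse reads.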

Training and Learning:

- NTMs are trained with backpropagation and continuous (gradient-based) optimisation. This is possible only because the memory operations are differentiable.

- Because the steps of an algorithm are expressed as differentiable memory operations, continuous optimisation may allow NTMs to learn to imitate general classes of algorithms.

Variants:

- The Differentiable Neural Computer (DNC) is regarded as an improvement on NTMs. It adds mechanisms for tracking the temporal order in which memory locations are written and for managing memory allocation.
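
As an illustration of the allocation mechanism, the DNC computes an allocation weighting from a per-location usage vector. Locations are sorted from least to most used, and each one receives (1 - usage) times the product of the usages of all less-used locations. The sketch below covers only that formula. How usage itself is updated is omitted, and the function name is hypothetical.

```python
def allocation_weighting(usage):
    """DNC-style allocation weighting over memory locations.

    usage[i] in [0, 1] says how much location i is in use (assumed given).
    Locations are visited from least to most used (the "free list"); each
    gets (1 - usage) times the product of the usages of all freer locations.
    """
    free_list = sorted(range(len(usage)), key=lambda i: usage[i])
    alloc = [0.0] * len(usage)
    product_so_far = 1.0
    for i in free_list:
        alloc[i] = (1.0 - usage[i]) * product_so_far
        product_so_far *= usage[i]
    return alloc


print(allocation_weighting([0.9, 0.0, 0.5]))  # [0.0, 1.0, 0.0]
```

In the example, location 1 is completely unused, so it receives all of the allocation weight. The running product then becomes zero, so no weight goes to the partly used locations.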

Applications and Tasks:

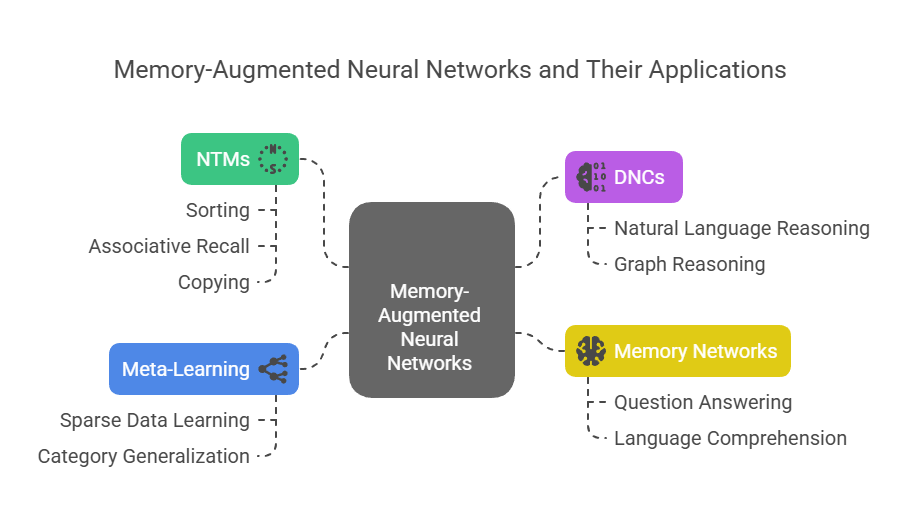

- NTMs have been tested on relatively simple algorithmic tasks such as copying, associative recall, and sorting.

- DNCs and other related models have been applied to more difficult tasks, including reasoning over natural language and graphs.

- Memory networks, a loosely related architecture, have been applied to tasks such as question answering and language comprehension.

- NTMs have also been investigated for meta-learning, such as learning new categories from only a few examples.

In conclusion, Neural Turing Machines represent a step toward neural network models that make better use of external memory. They offer practical learning and generalisation benefits over standard RNNs, especially on tasks involving structured or long-term memory dependencies.

Neural Turing Machines Examples

Copying Input:

NTMs can learn to copy an input sequence, a task that conventional recurrent networks find remarkably difficult.
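
The original NTM paper describes the procedure learned on the copy task roughly as follows. The network writes each input to the current location and advances the head by one. It then returns the head to the start and reads the locations back in order. The sketch below hand-codes that procedure (nothing is learned) using the NTM’s location-based addressing steps: interpolation with the previous weighting via a gate `g`, a circular-convolution shift, and sharpening with exponent `gamma`. The function names, the fixed one-slot shift, and the hard reset to slot 0 for the read phase are simplifying assumptions.

```python
def interpolate(w_content, w_prev, g):
    """Gate g in [0, 1] blends the content weighting with the previous one."""
    return [g * c + (1.0 - g) * p for c, p in zip(w_content, w_prev)]


def shift(w, s):
    """Circular convolution: out[i] = sum_j w[j] * s[(i - j) mod N]."""
    n = len(w)
    return [sum(w[j] * s[(i - j) % n] for j in range(n)) for i in range(n)]


def sharpen(w, gamma):
    """Raise weights to gamma >= 1 and renormalise to counter blurring."""
    powered = [x ** gamma for x in w]
    total = sum(powered)
    return [x / total for x in powered]


def address(w_prev, w_content, g, s, gamma):
    """Location-based addressing: interpolate, then shift, then sharpen."""
    return sharpen(shift(interpolate(w_content, w_prev, g), s), gamma)


def copy_sequence(seq, n_slots):
    """Hand-coded copy: write inputs to successive slots, then read them back."""
    if not seq:
        return []
    if n_slots < max(2, len(seq)):
        raise ValueError("need at least 2 slots and one slot per input vector")
    width = len(seq[0])
    memory = [[0.0] * width for _ in range(n_slots)]
    forward = [0.0] * n_slots
    forward[1] = 1.0                       # shift distribution: +1 slot
    ignore_content = [0.0] * n_slots       # unused when g = 0
    start = [1.0] + [0.0] * (n_slots - 1)  # one-hot focus on slot 0

    # Write phase: erase the focused slot fully, add the input, advance.
    w = start
    for x in seq:
        memory = [[row[j] * (1.0 - w[i]) + w[i] * x[j] for j in range(width)]
                  for i, row in enumerate(memory)]
        w = address(w, ignore_content, g=0.0, s=forward, gamma=1.0)

    # Read phase: reset the head to slot 0 (a simplification), then advance.
    w = start
    output = []
    for _ in seq:
        output.append([sum(w[i] * memory[i][j] for i in range(n_slots))
                       for j in range(width)])
        w = address(w, ignore_content, g=0.0, s=forward, gamma=1.0)
    return output


print(copy_sequence([[1, 0, 1], [0, 1, 1]], n_slots=4))
# [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
```

With one-hot weightings the head behaves like a pointer. In a trained NTM the shift distribution is usually soft, which blurs the weighting at each step. Sharpening with `gamma > 1` counteracts that blur.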

Algorithm Extrapolation:

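NTMs can learn algorithms that map input to output, rather than memorising individual examples, and can extrapolate from the learnt algorithm. This allows them to produce output of varying length, for example for inputs longer than those seen during training.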

Language Modeling (Autocomplete):

NTMs have been reported to perform well on datasets designed to assess general cognitive abilities, and to be particularly effective at language-modelling tasks such as autocomplete.

Balanced Parentheses Recognition:

Recognising balanced brackets requires a stack-like memory structure. The ability of NTMs to learn this task therefore suggests that they can learn and execute algorithms that depend on such a structure.
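
For reference, the conventional algorithm for this task keeps a stack of open brackets and pops one for each closing bracket. The sketch below is that classical stack algorithm, not an NTM. It illustrates the push/pop memory behaviour an NTM would have to emulate with its read and write heads. Support for three bracket types is an assumption made for illustration.

```python
# Classical stack-based check (not an NTM): each closing bracket must
# match the most recently opened bracket that is still unclosed.
CLOSE_TO_OPEN = {")": "(", "]": "[", "}": "{"}
OPENERS = set(CLOSE_TO_OPEN.values())


def is_balanced(text):
    stack = []
    for ch in text:
        if ch in OPENERS:
            stack.append(ch)  # push the opener
        elif ch in CLOSE_TO_OPEN:
            # pop must yield the matching opener; an empty stack is a failure
            if not stack or stack.pop() != CLOSE_TO_OPEN[ch]:
                return False
        # any other character is ignored
    return not stack  # unclosed openers mean the text is unbalanced


for sample in ["(a[b]{c})", "(]", "(("]:
    print(sample, is_balanced(sample))
```

For example, `is_balanced("(a[b]{c})")` returns `True`, while `is_balanced("(]")` and `is_balanced("((")` return `False`.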
