This article covers the definition of text preprocessing in natural language processing (NLP), its main steps and techniques, and the challenges it involves.

What is text preprocessing?

Text preprocessing is a fundamental first stage in Natural Language Processing (NLP) systems. It converts unstructured, noisy, and inconsistent raw text into a clean, consistent format that is easier to work with and can be used in further analysis. In short, its main task is to get raw text data ready for further processing.

Early NLP systems, which worked with small, manually prepared, monolingual texts that followed consistent orthographic conventions, sometimes ignored or oversimplified the text preprocessing problem. Today, however, fully automated preprocessing is required because of massive corpora collected from sources such as the Internet, which can produce billions of words per day. As the “garbage in, garbage out” principle suggests, algorithms trained on noisy data without preprocessing are unlikely to be useful.

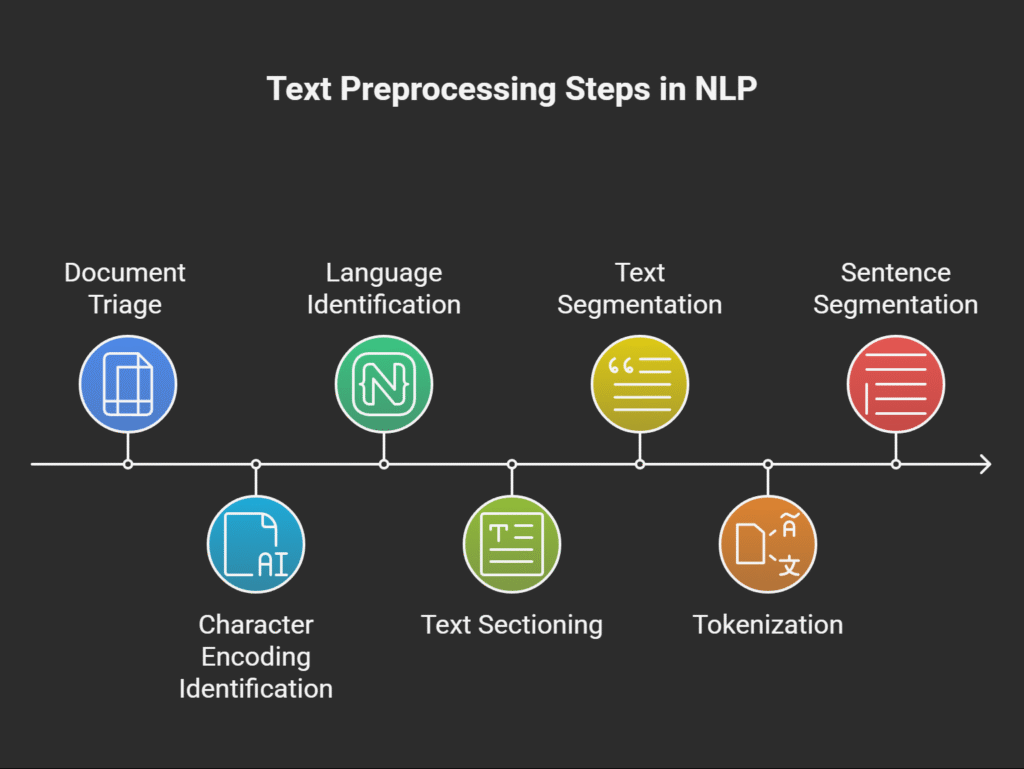

Text preprocessing steps in NLP

Text preprocessing can generally be divided into two steps:

Document Triage:

Document triage is the process of converting a collection of digital files into distinct text documents. For modern, large corpora this process must be fully automated.

Among the steps in document triage are:

- Character encoding identification: Detecting a file’s character encoding and, if necessary, converting it to another encoding so that the document is machine readable.

- Language identification: Identifying a document’s natural language so that language-specific algorithms can be applied.

- Text sectioning: Identifying the actual content of a file while removing extraneous elements such as graphics, tables, headers, links, browser scripts, SEO keywords, and HTML styling. This is especially important for text taken from the Internet. The result of this step is a well-defined text corpus.
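The first two triage steps can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the candidate encodings are a short hypothetical list tried in order, and language identification uses tiny hypothetical stop-word profiles. Production systems typically use statistical encoding detectors (for example, the chardet library) and character n-gram language identifiers instead.

```python
import re

# Hypothetical candidate encodings, tried in order. "utf-8-sig" also strips a
# UTF-8 byte-order mark; "latin-1" accepts any byte, so it acts as a last resort.
CANDIDATE_ENCODINGS = ("utf-8-sig", "cp1252", "latin-1")

# Hypothetical, deliberately tiny stop-word profiles per language code.
STOPWORD_PROFILES = {
    "en": {"the", "and", "is", "of", "to", "in", "a"},
    "de": {"der", "die", "und", "ist", "nicht", "das", "ein"},
    "fr": {"le", "la", "et", "est", "les", "des", "un"},
}


def decode_document(raw):
    """Return (text, encoding) for the first candidate that decodes without error."""
    for encoding in CANDIDATE_ENCODINGS:
        try:
            return raw.decode(encoding), encoding
        except UnicodeDecodeError:
            continue
    raise ValueError("no candidate encoding could decode the document")


def identify_language(text):
    """Guess a language code by counting stop-word hits; 'unknown' if none match."""
    words = re.findall(r"\w+", text.lower())
    scores = {
        lang: sum(word in stops for word in words)
        for lang, stops in STOPWORD_PROFILES.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


if __name__ == "__main__":
    text, encoding = decode_document("café au lait".encode("cp1252"))
    print(encoding, text)  # cp1252 café au lait
    print(identify_language("Die Katze ist nicht hier und das Haus ist groß."))  # de
```

Trying UTF-8 first is a reasonable default because byte sequences in legacy single-byte encodings rarely form valid UTF-8 by accident, as the cp1252 example shows.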

Text Segmentation:

Text segmentation breaks the text into a sequence of linguistically meaningful units.

The main tasks are:

- Tokenization: Splitting a string into recognizable linguistic units such as words. Tokenization is an essential step that must be completed before later language processing stages. The conventional approach frequently relies on deterministic algorithms based on regular expressions compiled into finite-state automata. NLTK includes tokenizers, and regular expressions can also be used directly. Tokenization output sometimes depends on later processing steps, as the different ways English possessives are handled in various corpora show. Chinese word segmentation, where words are not separated by spaces, is one illustration of the difficulties in this area.

- Sentence segmentation: Finding the boundaries between sentences, for example deciding whether a period ends a sentence or only an abbreviation such as “Dr.”
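A minimal regular-expression sketch of both segmentation tasks is shown below. The token pattern and abbreviation list are assumptions made for illustration: the pattern splits off the possessive “'s” as a separate token (one of several corpus conventions) and keeps decimal numbers together, and the sentence splitter treats “.”, “!”, or “?” followed by whitespace and a capital letter as a boundary unless the preceding word is a known abbreviation. NLTK’s tokenizers handle many more cases.

```python
import re

# Assumed token pattern: possessive/clitic "'s", then words (keeping
# decimals such as 3.5 together), then any single non-space symbol.
TOKEN_RE = re.compile(r"'s\b|\w+(?:[.,]\d+)*|[^\w\s]")

# Hypothetical abbreviation list; real segmenters use larger lists or learn them.
ABBREVIATIONS = {"dr.", "mr.", "mrs.", "ms.", "e.g.", "i.e.", "etc."}

# Candidate boundary: terminal punctuation followed by whitespace and a capital letter.
BOUNDARY_RE = re.compile(r"[.!?]+(?=\s+[A-Z])")


def tokenize(text):
    """Split a string into word, number, clitic, and punctuation tokens."""
    return TOKEN_RE.findall(text)


def split_sentences(text):
    """Split text at candidate boundaries that do not follow a known abbreviation."""
    sentences, start = [], 0
    for match in BOUNDARY_RE.finditer(text):
        end = match.end()
        last_word = text[start:end].split()[-1].lower()
        if last_word in ABBREVIATIONS:
            continue  # e.g. "Dr." does not end the sentence
        sentences.append(text[start:end].strip())
        start = end
    tail = text[start:].strip()
    if tail:
        sentences.append(tail)
    return sentences


if __name__ == "__main__":
    text = "Dr. Smith paid 3.5 dollars. John's dog barked! Did it stop?"
    for sentence in split_sentences(text):
        print(tokenize(sentence))
    # ['Dr', '.', 'Smith', 'paid', '3.5', 'dollars', '.']
    # ['John', "'s", 'dog', 'barked', '!']
    # ['Did', 'it', 'stop', '?']
```

Because the boundary rule requires a following capital letter, this sketch misses sentences that start with a digit or lowercase word, one reason rule-based segmenters grow complicated quickly.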

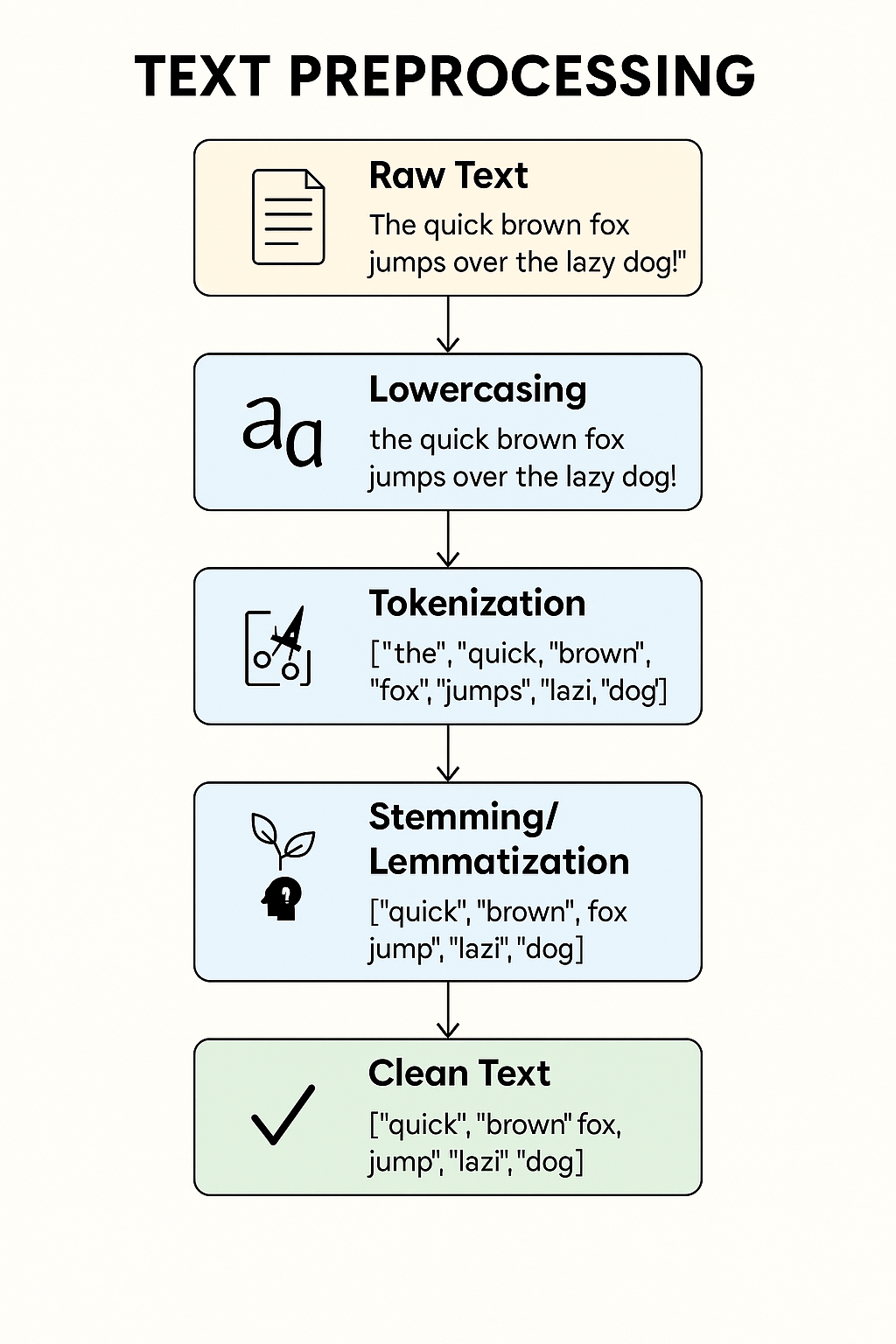

Text preprocessing techniques in NLP

Several other standard preprocessing techniques are commonly used:

- Text extraction and cleanup: This covers tasks such as Unicode normalization, HTML parsing and cleanup, and the removal of unnecessary symbols.

- Lowercasing: Converting text to lowercase ensures that “The” and “the” are treated as the same word. It is straightforward to implement, for example with Python’s `str.lower()` method.

- Punctuation removal: Stripping punctuation marks from the text.

- Stop word removal: Filtering out very frequent words, such as “is,” “and,” “the,” and “a,” before analysis.

- Spelling correction: Detecting and fixing spelling mistakes.

- Stemming: Removing affixes from words to reduce them to a base or root form. The resulting stem is not always a meaningful word. Overly aggressive stemming can cause over-stemming, where words with different meanings are reduced to the same stem, which can harm downstream results.

- Lemmatization: Transforming words into their dictionary or canonical form (lemma), typically based on their part of speech. Unlike a stem, the resulting lemma is a real, recognizable word. Lemmatization can reduce problems caused by morphological inflection.

- Text normalization: Standardizing text by converting symbols, variants, or abbreviations into their full forms or written words; in text-to-speech (TTS) systems, for example, numerals and money amounts are expanded into words. Another aspect is mapping out-of-vocabulary (OOV) words to a special unknown-word token, commonly written `<unk>`.

- Whitespace removal: Removing unnecessary spaces, tabs, or newline characters.

- Special character and HTML tag removal: Deleting special characters and leftover HTML tags.

- Entity recognition and masking: Identifying named entities and, where needed, masking them.
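Several of the cleanup steps above (HTML tag removal, Unicode normalization, lowercasing, punctuation removal, and whitespace removal) can be chained using only the Python standard library. This is a minimal sketch; the choice of NFKC normalization and the decision to discard everything inside `<script>` and `<style>` tags are assumptions, and `string.punctuation` covers ASCII punctuation only.

```python
import re
import string
import unicodedata
from html.parser import HTMLParser

SKIPPED_TAGS = {"script", "style"}  # assumed: contents of these tags are never body text
PUNCT_TABLE = str.maketrans("", "", string.punctuation)  # ASCII punctuation only


class TextExtractor(HTMLParser):
    """Collect text nodes from HTML, ignoring anything inside skipped tags."""

    def __init__(self):
        super().__init__()  # convert_charrefs=True, so &nbsp; etc. become characters
        self.parts = []
        self.skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in SKIPPED_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIPPED_TAGS and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.parts.append(data)


def strip_html(html):
    """Return the visible text of an HTML string, with text nodes joined by spaces."""
    extractor = TextExtractor()
    extractor.feed(html)
    extractor.close()
    return " ".join(extractor.parts)


def clean_text(raw_html):
    """Strip HTML, normalize Unicode, lowercase, drop punctuation, collapse whitespace."""
    text = strip_html(raw_html)
    text = unicodedata.normalize("NFKC", text)  # e.g. no-break space -> space, "ﬁ" -> "fi"
    text = text.lower()
    text = text.translate(PUNCT_TABLE)
    return re.sub(r"\s+", " ", text).strip()


if __name__ == "__main__":
    print(clean_text("<p>The Caf\u00e9&nbsp;is <b>OPEN</b>!</p><script>var x = 1;</script>"))
    # the café is open
```

The order matters: normalizing Unicode before lowercasing and punctuation removal ensures that compatibility characters such as ligatures and no-break spaces are handled by the later steps.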
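The difference between stop word removal, stemming, and lemmatization can be illustrated with a deliberately crude sketch. The stop-word set, suffix list, and lemma table below are small hypothetical stand-ins, not the Porter stemmer or a WordNet-based lemmatizer (NLTK provides both). The toy stemmer reduces “studies” to the non-word “stud” and over-stems “news” to “new”, while the part-of-speech-keyed lookup returns real words such as “mouse” and “good”.

```python
# Hypothetical stop-word set; real lists (such as NLTK's) are much longer.
STOP_WORDS = {"a", "an", "and", "are", "in", "is", "of", "the", "to", "was"}

# Hypothetical suffixes, checked longest first; far cruder than Porter or Snowball.
SUFFIXES = ("ization", "ational", "ness", "ing", "ies", "ed", "es", "s")

# Hypothetical lemma table keyed by (word, part of speech).
LEMMAS = {
    ("studies", "NOUN"): "study",
    ("studies", "VERB"): "study",
    ("mice", "NOUN"): "mouse",
    ("better", "ADJ"): "good",
    ("was", "VERB"): "be",
}


def remove_stop_words(tokens):
    """Drop tokens that appear in the stop-word set."""
    return [tok for tok in tokens if tok not in STOP_WORDS]


def crude_stem(word, min_stem=3):
    """Strip the first matching suffix if at least `min_stem` characters remain."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)]
    return word


def lemmatize(word, pos):
    """Look up the lemma for (word, pos); fall back to the word itself."""
    return LEMMAS.get((word, pos), word)


if __name__ == "__main__":
    tokens = remove_stop_words(["the", "mice", "are", "in", "the", "news"])
    print(tokens)  # ['mice', 'news']
    print([crude_stem(t) for t in tokens])  # ['mice', 'new']
    print([lemmatize(t, "NOUN") for t in tokens])  # ['mouse', 'news']
```

The stemmer needs no dictionary but cannot map irregular forms such as “mice”; the lemmatizer handles them but only as well as its lexicon and part-of-speech information allow.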
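One simple approach to spelling correction is to generate every string within one or two edits of a misspelled word and pick the most frequent candidate found in a vocabulary, in the style of Peter Norvig’s well-known toy corrector. The sketch below follows that idea; the word counts are hypothetical, whereas a real system would estimate them from a large corpus and would usually take context into account.

```python
import string
from collections import Counter

# Hypothetical word frequencies; a real corrector would count a large corpus.
WORD_COUNTS = Counter({"text": 60, "language": 50, "processing": 40, "natural": 30, "test": 20})
LETTERS = string.ascii_lowercase


def edits1(word):
    """All strings one deletion, transposition, replacement, or insertion away."""
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = [left + right[1:] for left, right in splits if right]
    transposes = [left + right[1] + right[0] + right[2:] for left, right in splits if len(right) > 1]
    replaces = [left + c + right[1:] for left, right in splits if right for c in LETTERS]
    inserts = [left + c + right for left, right in splits for c in LETTERS]
    return set(deletes + transposes + replaces + inserts)


def correct(word):
    """Return the most frequent known word within two edits, else the word itself."""
    if word in WORD_COUNTS:
        return word
    candidates = {w for w in edits1(word) if w in WORD_COUNTS}
    if not candidates:  # fall back to candidates two edits away
        candidates = {w2 for w1 in edits1(word) for w2 in edits1(w1) if w2 in WORD_COUNTS}
    return max(candidates, key=WORD_COUNTS.__getitem__) if candidates else word


if __name__ == "__main__":
    print([correct(w) for w in ["langauge", "procesing", "tezt"]])
    # ['language', 'processing', 'text']
```

For “tezt”, both “text” and “test” are one edit away; the frequency table breaks the tie, which is exactly where a context-aware model would do better.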

Challenges of text preprocessing in NLP

Text preprocessing comes with many difficulties, including irregularities in online text, the handling of contractions, and writing systems that mix symbol types (logographic, syllabic, and alphabetic); Japanese, for example, combines kanji, kana, and Latin letters. The optimal strategy often depends on the type of writing system, the language, the character set, the application the NLP system is intended for, and the properties of the corpus being processed. Keep in mind that errors made during preprocessing can affect every later result in the NLP pipeline.
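Contractions show why the right strategy depends on the application. The sketch below expands a small hypothetical table of English contractions with a regular expression. It deliberately leaves forms such as “John's” untouched, because “'s” is ambiguous between a possessive and a contracted “is”; it also inserts expansions in lowercase and does not handle curly apostrophes.

```python
import re

# Hypothetical, deliberately small contraction table (lowercase keys).
CONTRACTIONS = {
    "can't": "cannot",
    "won't": "will not",
    "don't": "do not",
    "it's": "it is",
    "i'm": "i am",
    "they're": "they are",
}

# Match any table entry as a whole word, ignoring case.
CONTRACTION_RE = re.compile(
    r"\b(?:" + "|".join(map(re.escape, CONTRACTIONS)) + r")\b", re.IGNORECASE
)


def expand_contractions(text):
    """Replace known contractions with their expansions (expansions are lowercase)."""
    return CONTRACTION_RE.sub(lambda m: CONTRACTIONS[m.group(0).lower()], text)


if __name__ == "__main__":
    print(expand_contractions("It's late, they're tired, and John's dog won't sleep."))
    # it is late, they are tired, and John's dog will not sleep.
```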

Many NLP tasks and model-building processes require preprocessing, including morphological analysis, part-of-speech (POS) tagging, syntactic parsing, semantic analysis, information retrieval, machine translation, and feeding data into machine learning models. Operations such as lemmatization or POS tagging are sometimes applied to the corpus before word embeddings are generated for neural networks. For language models, an additional preprocessing step is building a vocabulary and converting words to integer IDs. Building an end-to-end preprocessing pipeline is another way to streamline this process. In Automatic Speech Recognition (ASR) systems, audio preprocessing is a comparable first stage: it processes the audio signal before it is converted into text.
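Vocabulary creation and conversion to integer IDs, chained into a small end-to-end pipeline, might look like the following sketch. The `<pad>` and `<unk>` token names, the frequency cutoff `min_count`, and the ID ordering are assumptions for illustration; toolkits differ in these details.

```python
from collections import Counter

PAD, UNK = "<pad>", "<unk>"  # assumed special-token spellings


def make_pipeline(*steps):
    """Compose preprocessing functions so that each step's output feeds the next."""
    def run(value):
        for step in steps:
            value = step(value)
        return value
    return run


def build_vocab(tokenized_docs, min_count=2):
    """Map tokens seen at least `min_count` times to integer IDs; 0 and 1 are reserved."""
    counts = Counter(tok for doc in tokenized_docs for tok in doc)
    kept = sorted(
        (tok for tok, c in counts.items() if c >= min_count),
        key=lambda tok: (-counts[tok], tok),  # most frequent first, ties alphabetical
    )
    return {tok: idx for idx, tok in enumerate([PAD, UNK] + kept)}


def encode(tokens, vocab):
    """Convert tokens to IDs, mapping out-of-vocabulary tokens to the <unk> ID."""
    unk_id = vocab[UNK]
    return [vocab.get(tok, unk_id) for tok in tokens]


if __name__ == "__main__":
    preprocess = make_pipeline(str.lower, str.split)
    corpus = ["The cat sat", "The dog sat", "The cat ran"]
    vocab = build_vocab([preprocess(doc) for doc in corpus])
    print(vocab)  # {'<pad>': 0, '<unk>': 1, 'the': 2, 'cat': 3, 'sat': 4}
    print(encode(preprocess("The bird sat"), vocab))  # [2, 1, 4]
```

Any of the earlier steps (cleanup, tokenization, stop word removal) could be passed to `make_pipeline` in place of the simple `str.lower` and `str.split` used here.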