This article gives an overview of topic modelling: its models and methods, use cases, and disadvantages.

Topic Modeling

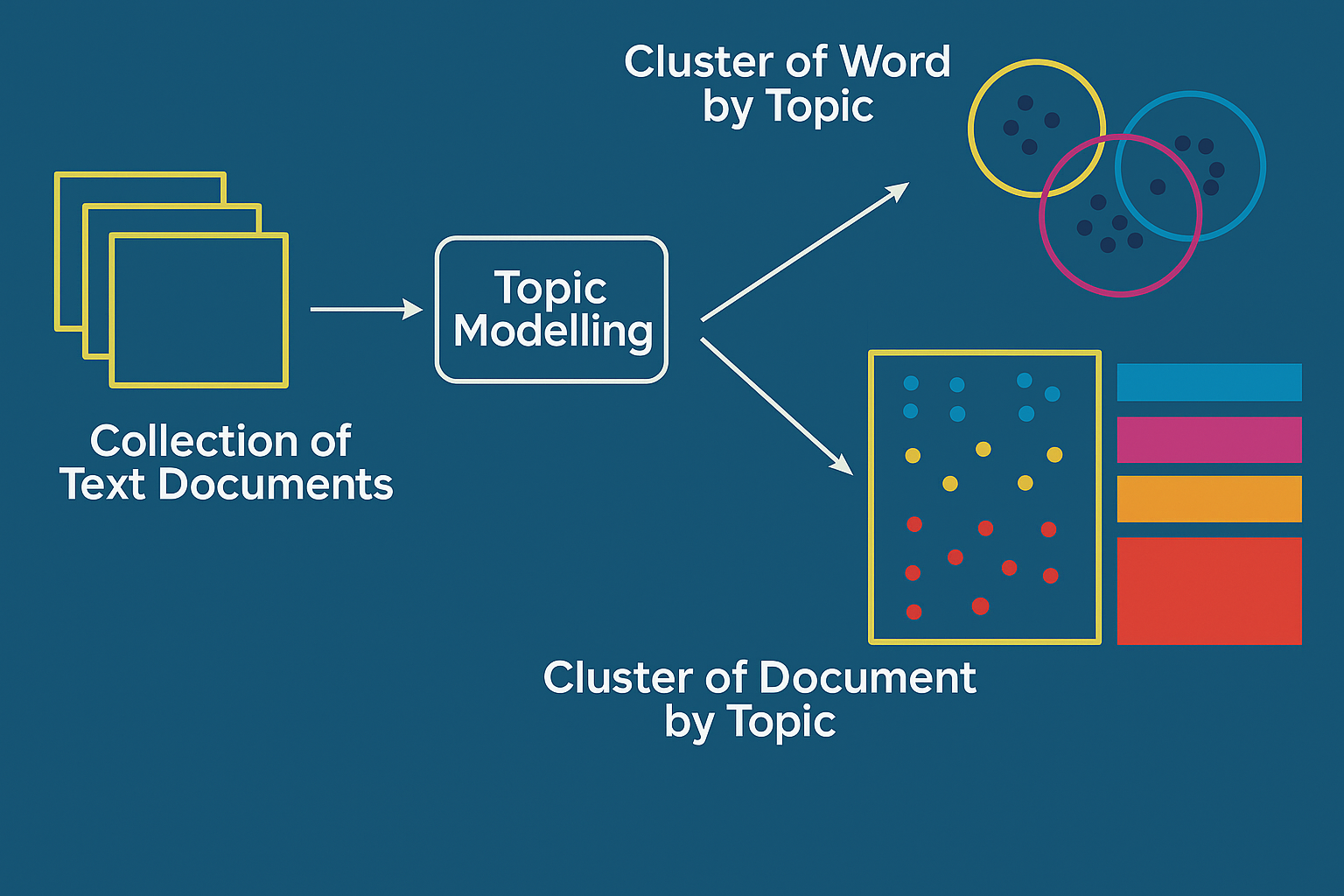

In Natural Language Processing (NLP), topic modelling is a method for discovering or extracting topics from a collection of documents or other text data. It is regarded as a form of dimensionality reduction that is used specifically with textual data.

The main objective of topic modelling is to find abstract “topics” in large amounts of text. By combining qualitative and quantitative evaluations of text data, it lets users obtain insights. As a technique for document tagging and clustering, topic modelling groups documents according to the topics they contain. Topic models and semantic fields are regarded as helpful resources for identifying topical organization in documents.

Conceptually, topic modelling usually represents documents as mixtures of topics and topics as collections of words. To learn word associations and form topics, techniques typically examine patterns of word co-occurrence: words that are frequently used together in similar contexts are regarded as related and may form a topic. The probabilistic models used in topic modelling assign each document a probability distribution over topics and each topic a probability distribution over words.
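The relationship between the two kinds of distributions can be illustrated with a small calculation. The sketch below assumes a hypothetical two-topic model over a four-word vocabulary; all numbers and names are made up for illustration. It shows how a document's topic mixture and the topics' word distributions combine into the probability of each word appearing in that document.

```python
# Hypothetical toy model: 2 topics over a 4-word vocabulary.
vocab = ["goal", "match", "vote", "party"]

# Each topic is a probability distribution over words (each row sums to 1).
topic_word = [
    [0.50, 0.40, 0.05, 0.05],  # topic 0: sports-like words
    [0.05, 0.05, 0.50, 0.40],  # topic 1: politics-like words
]

# Each document is a probability distribution over topics (sums to 1).
doc_topic = [0.8, 0.2]

# P(word | doc) = sum over topics of P(topic | doc) * P(word | topic)
word_probs = [
    sum(doc_topic[k] * topic_word[k][w] for k in range(len(doc_topic)))
    for w in range(len(vocab))
]

for word, p in zip(vocab, word_probs):
    print(f"{word}: {p:.2f}")
```

A document that is mostly about topic 0 ends up giving most of its probability mass to that topic's words, while still leaving some room for words from the other topic.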

Topic Modeling Models and Methods

For topic modelling, a variety of models and methods are employed:

- Latent Dirichlet Allocation (LDA) is a popular probabilistic model for topic modelling. It identifies topics and assigns each document a probability distribution over them. An LDA model can be built with the gensim package.

- Latent Semantic Analysis (LSA) is another method for modelling topics in documents and for calculating similarity based on topic distributions. LSA uses word co-occurrence statistics to extract semantic information.

- Non-negative matrix factorization (NMF) is another method for topic modelling.

- Probabilistic Latent Semantic Analysis (PLSA) is an early topic model.

- Restricted Boltzmann machines (RBMs) can be used for topic modelling. Here, the probability that a hidden unit is active given the words in a text corresponds to the strength of a topic in the document.

- The “topic-sentiment mixture,” a clustering-based probabilistic model, is another technique, used to identify topics or aspects in reviews.
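To make the LDA entry above more concrete, here is a minimal from-scratch sketch. It does not use gensim; instead it assumes a toy collapsed Gibbs sampler written with the standard library only, which is one common way to fit LDA but not necessarily what any particular package does. The function name `lda_gibbs`, the hyperparameter values, and the tiny corpus are all hypothetical.

```python
import random

def lda_gibbs(docs, num_topics, iterations=200, alpha=0.1, beta=0.01, seed=0):
    """Toy collapsed Gibbs sampler for LDA on tokenized documents."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    vocab_size = len(vocab)

    doc_topic_counts = [[0] * num_topics for _ in docs]
    topic_word_counts = [[0] * vocab_size for _ in range(num_topics)]
    topic_totals = [0] * num_topics
    assignments = []

    # Start from a random topic assignment for every token.
    for d, doc in enumerate(docs):
        doc_assignments = []
        for w in doc:
            k = rng.randrange(num_topics)
            doc_assignments.append(k)
            doc_topic_counts[d][k] += 1
            topic_word_counts[k][word_id[w]] += 1
            topic_totals[k] += 1
        assignments.append(doc_assignments)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                wid = word_id[w]
                k = assignments[d][i]
                # Remove the token's current assignment from the counts.
                doc_topic_counts[d][k] -= 1
                topic_word_counts[k][wid] -= 1
                topic_totals[k] -= 1
                # Resample its topic from the conditional distribution.
                weights = [
                    (doc_topic_counts[d][t] + alpha)
                    * (topic_word_counts[t][wid] + beta)
                    / (topic_totals[t] + vocab_size * beta)
                    for t in range(num_topics)
                ]
                k = rng.choices(range(num_topics), weights=weights)[0]
                assignments[d][i] = k
                doc_topic_counts[d][k] += 1
                topic_word_counts[k][wid] += 1
                topic_totals[k] += 1

    # Smoothed estimates: per-document topic and per-topic word distributions.
    doc_topic = [
        [(doc_topic_counts[d][t] + alpha) / (len(doc) + num_topics * alpha)
         for t in range(num_topics)]
        for d, doc in enumerate(docs)
    ]
    topic_word = [
        [(topic_word_counts[t][w] + beta) / (topic_totals[t] + vocab_size * beta)
         for w in range(vocab_size)]
        for t in range(num_topics)
    ]
    return doc_topic, topic_word, vocab

# Hypothetical toy corpus, already tokenized.
docs = [
    ["goal", "match", "team", "goal"],
    ["team", "match", "win"],
    ["vote", "party", "election"],
    ["election", "vote", "party", "vote"],
]
doc_topic, topic_word, vocab = lda_gibbs(docs, num_topics=2)
for row in doc_topic:
    print([round(p, 2) for p in row])
```

Each printed row is one document's estimated distribution over the two topics. On a corpus this small the output mainly illustrates the shape of the result; for real work a maintained library such as gensim is the more sensible choice.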

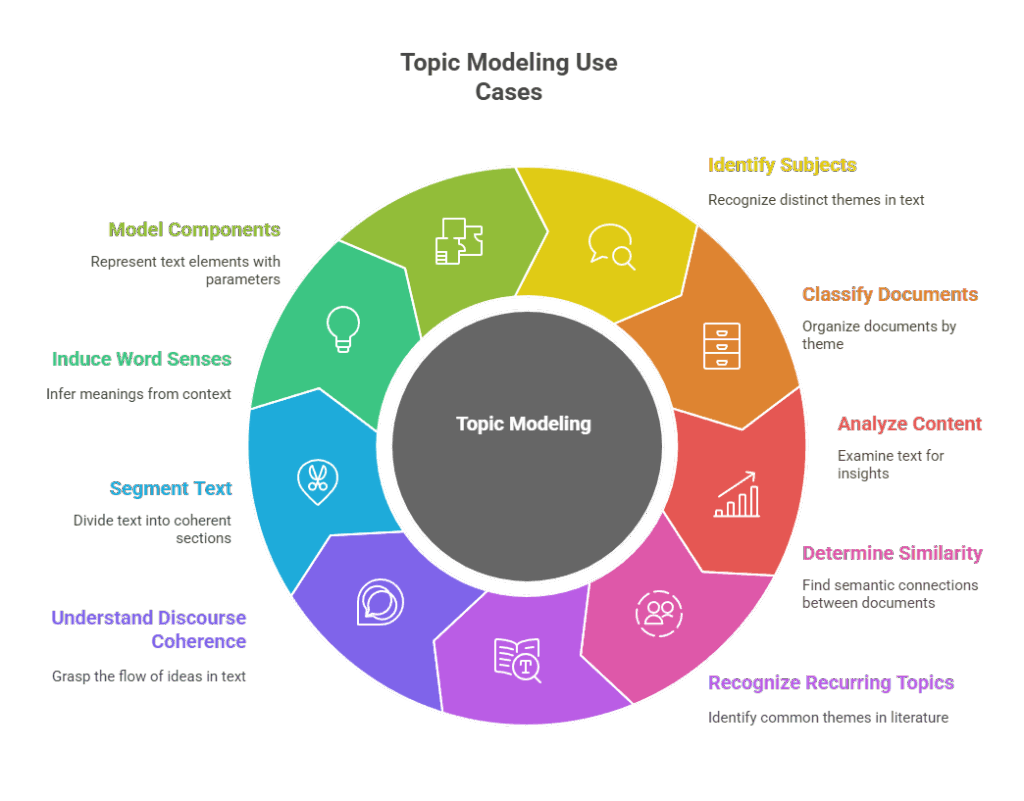

Topic Modeling Use Cases

Among the many uses for topic modelling are:

- Identifying distinct topics within a collection of documents.

- Recognizing topics in written materials.

- Document classification and management.

- Content analysis.

- Determining semantic similarity at the document level.

- Identifying recurring topics in literature, such as gene-related papers.

- Describing the semantics of words particular to a given topic.

- Understanding discourse coherence by recognizing topical coherence.

- Dividing lengthy documents into smaller, thematically coherent chunks for information retrieval, so that only the portions relevant to a query are returned.

- Text summarization.

- Topic segmentation, a related task: identifying where a topic begins and ends in a speech or text sequence, and therefore the points at which topics shift. A typical example of topic segmentation based on lexical coherence is the TextTiling technique, which calculates the smoothed cosine similarity between neighbouring text blocks. Probabilistic models may also be developed for topic segmentation.

- Word Sense Induction, which is connected to topic modelling: clusters based on the context of neighbouring words may correspond to word senses.

- Multi-component modelling for classification, in which the components within a class are modelled independently according to varying language use, and a latent component may parametrise them.
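The TextTiling idea mentioned in the list above can be sketched in a few lines. This is a simplified illustration, not the full technique: it assumes whitespace tokenization, uses raw word counts, skips the smoothing step, and simply picks the gap with the lowest similarity. The function names and the example sentences are hypothetical.

```python
import math
from collections import Counter

def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def block_similarities(sentences, block_size=2):
    """Similarity between the text blocks on either side of each sentence gap."""
    tokens = [s.lower().split() for s in sentences]
    sims = []
    for gap in range(1, len(tokens)):
        left = Counter(w for s in tokens[max(0, gap - block_size):gap] for w in s)
        right = Counter(w for s in tokens[gap:gap + block_size] for w in s)
        sims.append(cosine(left, right))
    return sims

def likely_boundary(sentences, block_size=2):
    """Index of the first sentence after the least lexically coherent gap."""
    sims = block_similarities(sentences, block_size)
    return sims.index(min(sims)) + 1

sentences = [
    "team scored late goal",
    "goal won match team",
    "team celebrated match win",
    "parliament held budget vote",
    "budget vote split parliament",
]
print(block_similarities(sentences))
print(likely_boundary(sentences))  # the topic shifts before sentence index 3
```

In this toy input the blocks around the third gap share no words, so their similarity is zero and the boundary falls between the sports sentences and the politics sentences. Real text needs stop-word handling and smoothing before the similarity curve becomes reliable.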

Running topic models on small sample data serves mainly for familiarization; meaningful results and insights usually come from large datasets.

Topic Modeling Disadvantages

Topic modelling and related methods have a few disadvantages:

Difficulty with documents of varying lengths: Some sophisticated Restricted Boltzmann Machine (RBM) models that use a Poisson distribution for topic modelling struggle to handle documents of different lengths, which is a major issue.

Computational expense: Topic modelling is a form of dimensionality reduction specific to text data. Related dimensionality reduction approaches based on low-rank approximation, such as Singular Value Decomposition (SVD), can be computationally costly; for example, the cost of the SVD algorithm is cubic in the vocabulary size. Specialized topic models such as Probabilistic Latent Semantic Analysis (PLSA) differ from SVD (for example, they require non-negative basis vectors and use altered representations), but dimensionality reduction over large text corpora can still impose enormous computing demands.
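A quick calculation shows what cubic growth means in practice. The sketch below only compares relative costs under the assumption that cost scales as the cube of the vocabulary size; it ignores constant factors and the sparse or truncated solvers that are often used in practice. The function name and the vocabulary sizes are hypothetical.

```python
def relative_cubic_cost(vocab_size, baseline_vocab_size):
    """How many times more work a cubic-cost algorithm needs than at the baseline."""
    return (vocab_size / baseline_vocab_size) ** 3

baseline = 10_000
for vocab_size in (10_000, 20_000, 100_000):
    factor = relative_cubic_cost(vocab_size, baseline)
    print(f"vocabulary {vocab_size}: {factor:.0f}x the baseline cost")
```

Doubling the vocabulary multiplies the work by 8, and a tenfold increase multiplies it by 1,000.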

Lack of interpretability: The generated topics can be hard to understand and are sometimes not immediately interpretable. This is a frequent problem with dimensionality reduction techniques applied to natural language, and it is also cited as a disadvantage of Word2vec and low-rank approximation, which are related approaches for learning word representations from text.

Conflation of word senses: Word2vec and low-rank approximation combine all of a word’s senses into a single numerical representation. For instance, the word “bank” gets only one representation, whether it refers to a riverbank or a financial organization. The sources do not specifically mention this as a disadvantage of topic modelling, but topic models frequently rely on word co-occurrence patterns to form topics. Because the different usages of a polysemous word are merged, this conflation, as observed in related text representation approaches, may affect the accuracy or quality of the detected topics.
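The effect is easy to see with plain co-occurrence counts. The sketch below is an illustration only: it assumes a hypothetical four-sentence corpus, a hand-picked stop-word list, and sentence-level co-occurrence, and it builds a single context vector for “bank” without using Word2vec or any topic model.

```python
from collections import Counter

# Hypothetical corpus: two financial uses and two riverside uses of "bank".
sentences = [
    "the bank approved the loan",
    "she deposited money at the bank",
    "we walked along the river bank",
    "the bank of the river flooded",
]

def context_counts(target, sentences, stopwords=("the", "at", "of", "we", "she")):
    """Count the words that share a sentence with the target word."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        if target in words:
            counts.update(
                w for w in words if w != target and w not in stopwords
            )
    return counts

bank_vector = context_counts("bank", sentences)
print(bank_vector.most_common())
```

The single vector mixes financial context words such as "loan" and "money" with riverside words such as "river" and "flooded", so any method built on these counts sees one blended meaning.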