Transformers Explained for NLP

Transformer networks are a deep learning architecture that has taken center stage in Natural Language Processing (NLP). In contrast to conventional recurrent neural networks (RNNs), which process input sequentially, transformers are non-recurrent networks that rely mostly on self-attention. Because they are non-recurrent, they parallelize far better than LSTMs and are therefore more practical to train and deploy at scale. Self-attention also lets transformers handle long-range dependencies efficiently, since any token can attend directly to any other token in the sequence. The transformer architecture usually serves as the foundation for large language models (LLMs): AI models trained on large quantities of text data to comprehend and produce human-like text.

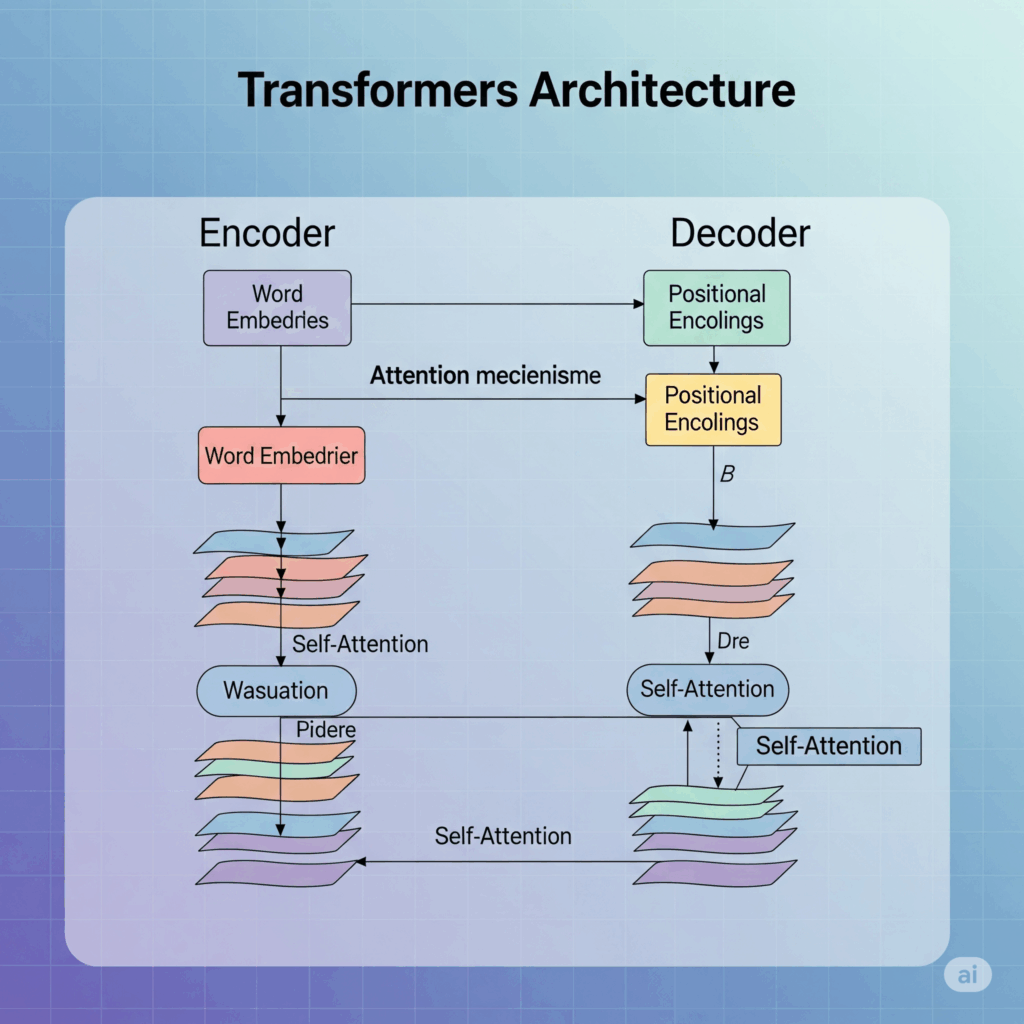

Transformers Architecture

The original Transformer is built on an encoder-decoder design, which is frequently employed for sequence-to-sequence applications like machine translation. This architecture has two parts:

Encoder: Creates a sequence of embedding vectors from an input sequence of tokens; this is sometimes referred to as the hidden state or context. The primary function of the encoder stack is to “update” the input embeddings in order to generate representations that encode the sequence’s contextual information.

Decoder: Generates an output sequence of tokens sequentially, one token at a time, using the encoder’s hidden state and previously created tokens.

The encoder and decoder blocks were first created for sequence-to-sequence operations, but they were quickly modified to function as stand-alone models. The majority of contemporary transformer models fall into one of three categories:

Encoder-only: For tasks involving Natural Language Understanding (NLU), such as named entity recognition (NER) or text categorization, these models transform an input sequence into a rich numerical representation. A token’s representation uses bidirectional attention and is dependent on both left and right contexts.

Decoder-only: These models auto-complete text by repeatedly predicting the most likely next token. Text generation tasks are their forte. Because they use causal (autoregressive) attention, a token’s representation depends only on the left context.

Encoder-decoder: These are employed for intricate sequence mappings, making them appropriate for summarisation and machine translation applications.
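
The difference between bidirectional attention (encoder-only) and causal attention (decoder-only) described above can be pictured as a mask over token positions. The following is a minimal NumPy sketch, not taken from any particular library; the helper name `attention_mask` is hypothetical.

```python
import numpy as np

def attention_mask(seq_len: int, causal: bool) -> np.ndarray:
    """Return a (seq_len, seq_len) boolean mask; True means 'may attend'.

    Row i describes which positions token i is allowed to look at.
    """
    if causal:
        # Lower triangle: token i sees positions 0..i only (left context).
        return np.tril(np.ones((seq_len, seq_len), dtype=bool))
    # Bidirectional: every token sees every position (left and right context).
    return np.ones((seq_len, seq_len), dtype=bool)

bidirectional = attention_mask(4, causal=False)
causal = attention_mask(4, causal=True)
print(causal.astype(int))
```

In a real model, positions where the mask is False would typically have their attention scores set to a large negative value before the softmax, so that they receive a weight of effectively zero.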

Encoders Branch

Several encoder layers are placed on top of one another to form the encoder. The following sublayers are present in each encoder layer:

- A multi-head self-attention layer.

- A fully connected feed-forward layer.

Additionally, each sublayer employs layer normalization and skip connections, which are common methods for efficiently training deep neural networks. The encoder stack’s primary function is to “update” the input embeddings in order to generate representations that encode the sequence’s contextual information.
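
As a rough illustration of how a skip connection and layer normalization wrap a sublayer, here is a minimal NumPy sketch. It assumes the post-normalization arrangement of the original Transformer, `LayerNorm(x + Sublayer(x))`, and omits the learnable scale and bias of layer normalization for brevity. All names and sizes (`residual_sublayer`, `d_model`, `d_ff`) are illustrative assumptions.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    # Normalize each token vector to zero mean and unit variance.
    # (Learnable scale and bias are omitted in this sketch.)
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def feed_forward(x, w1, b1, w2, b2):
    # Position-wise feed-forward network: linear -> ReLU -> linear.
    return np.maximum(0.0, x @ w1 + b1) @ w2 + b2

def residual_sublayer(x, sublayer):
    # Skip connection followed by layer normalization.
    return layer_norm(x + sublayer(x))

rng = np.random.default_rng(0)
seq_len, d_model, d_ff = 5, 8, 32   # illustrative sizes
x = rng.normal(size=(seq_len, d_model))
w1 = rng.normal(size=(d_model, d_ff)) * 0.1
b1 = np.zeros(d_ff)
w2 = rng.normal(size=(d_ff, d_model)) * 0.1
b2 = np.zeros(d_model)

out = residual_sublayer(x, lambda h: feed_forward(h, w1, b1, w2, b2))
print(out.shape)
```

The same wrapper would be applied around the self-attention sublayer, so the input embeddings are updated step by step while the skip connection keeps the original signal available.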

Self-Attention Mechanism: One essential component of Transformer networks is the self-attention layer. It enables the model to determine the relative relevance of various words in a phrase by considering their context. Compared to conventional recurrent or convolutional architectures, this allows the model to better capture dependencies and long-range interactions between words.

For every word, the self-attention layer employs three vectors to create output embeddings: a value vector (v), a key vector (k), and a query vector (q). The input vector is projected into these vectors. A weighted average of the value vectors of every word in the input text is used to calculate the output embedding for a given word. By comparing the current word’s query vector to the key vectors of every other word (including itself), the weights for this average are established.
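
The query, key, and value computation described above can be sketched in a few lines of NumPy. This is a minimal single-head version under stated assumptions: the projection matrices `w_q`, `w_k`, and `w_v` are random placeholders rather than trained weights, and the scores are divided by the square root of the key dimension, as in the original Transformer's scaled dot-product attention.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    # Project each input vector into query, key, and value vectors.
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    # Compare every query with every key (including its own).
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Each row of weights sums to 1 and defines a weighted average.
    weights = softmax(scores, axis=-1)
    # Output embedding = weighted average of the value vectors.
    return weights @ v, weights

rng = np.random.default_rng(0)
seq_len, d_model = 4, 8             # illustrative sizes
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

output, weights = self_attention(x, w_q, w_k, w_v)
print(output.shape, weights.shape)
```

Row `i` of `weights` shows how strongly token `i` attends to each token in the sequence.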

A major limitation of the self-attention mechanism is that its time and memory complexity are quadratic in the length of the input sequence. This makes processing extremely long documents computationally costly, so most models restrict the input length. Research on improving the efficiency of self-attention is ongoing; one approach is to make the attention pattern sparse, as demonstrated in BigBird. Transformer layers also employ multi-head attention, which lets the model attend concurrently to information from different representation subspaces at different positions.
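
To make both points concrete, here is a minimal NumPy sketch of multi-head attention. The weights are random placeholders and the names (`multi_head_attention`, `w_o`) are illustrative assumptions. Note the shape of the score tensor, `(num_heads, seq_len, seq_len)`: doubling the sequence length quadruples its size, which is where the quadratic cost comes from.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split_heads(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    # One (seq_len, seq_len) score matrix per head: quadratic in seq_len.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)
    weights = softmax(scores, axis=-1)
    heads = weights @ v                                  # (num_heads, seq_len, d_head)
    # Concatenate the heads and mix them with an output projection.
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o, weights

rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 6, 16, 4   # illustrative sizes
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))

out, weights = multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(out.shape, weights.shape)
```

Each head works in its own `d_head`-dimensional subspace, so different heads can learn to focus on different relationships between tokens.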

Decoders Branch

Like the encoder, the decoder is made up of stacked layers. The significant difference is that each decoder layer adds a cross-attention layer to the self-attention and feed-forward layers. Cross-attention, also known as encoder-decoder attention, lets the decoder access information from all of the encoder’s hidden states. The decoder generates the output sequence one token at a time, using the encoder’s output and the previously generated tokens.
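
Cross-attention differs from self-attention only in where the vectors come from: queries are computed from the decoder states, while keys and values are computed from the encoder's hidden states. The NumPy sketch below illustrates this with random placeholder weights; the function and variable names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(decoder_states, encoder_states, w_q, w_k, w_v):
    q = decoder_states @ w_q      # queries come from the decoder
    k = encoder_states @ w_k      # keys come from the encoder
    v = encoder_states @ w_v      # values come from the encoder
    scores = q @ k.T / np.sqrt(k.shape[-1])
    weights = softmax(scores, axis=-1)   # (target_len, source_len)
    return weights @ v, weights

rng = np.random.default_rng(0)
source_len, target_len, d_model = 7, 3, 8   # illustrative sizes
encoder_states = rng.normal(size=(source_len, d_model))
decoder_states = rng.normal(size=(target_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

context, weights = cross_attention(decoder_states, encoder_states, w_q, w_k, w_v)
print(context.shape, weights.shape)
```

Every decoder position gets a weight for every encoder position, which is how each output token can draw on the whole input sequence.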

Tokenization: Instead of using whole words, transformer networks usually function with subword units. Byte Pair Encoding (BPE) and other techniques are frequently used to automatically produce these subword units. By dividing unknown and low-frequency words into more common subword components, this technique offers a sophisticated method of handling them while preserving frequently recurring words as stand-alone tokens.
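
The merge procedure behind BPE can be illustrated with a toy implementation. The sketch below is a simplification for illustration only: it works on a tiny hand-made word-frequency table, ignores end-of-word markers and byte-level details used by production tokenizers, and all function names are hypothetical.

```python
from collections import Counter

def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)

def learn_bpe(word_freqs, num_merges):
    """Learn a list of merges from a {word: count} table."""
    # Start with every word split into single characters.
    vocab = {tuple(word): count for word, count in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, count in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += count
        if not pair_counts:
            break
        # Merge the most frequent adjacent pair (first seen wins ties).
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        vocab = {merge_pair(s, best): c for s, c in vocab.items()}
    return merges

def segment(word, merges):
    """Split a word into subwords by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)

word_freqs = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
merges = learn_bpe(word_freqs, num_merges=4)
print(merges)
print(segment("lowest", merges))   # ['low', 'est']
```

The word "lowest" never appears in the toy corpus, yet it is split into two subword units that do, which is the behaviour the paragraph above describes for unknown and low-frequency words.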

Methods of Training in Transformers

Training a Transformer network typically involves two stages: pre-training and fine-tuning. This is an example of transfer learning, in which knowledge gained during pre-training is applied to downstream tasks.

Pre-training: This stage is self-supervised: it needs no manually labelled data, because the training signal comes from the text itself. Masked Language Modelling (MLM) is a typical objective for models such as BERT. In MLM, tokens in the input text are randomly masked, and the model is trained to predict the original tokens from the surrounding context (both left and right). BERT also uses Next Sentence Prediction (NSP) as an objective. Pre-training on large datasets lets the model learn rich linguistic representations.
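
A minimal sketch of how MLM training inputs could be built is shown below. It is deliberately simplified: BERT masks roughly 15% of tokens and sometimes substitutes a random token or keeps the original instead of always inserting `[MASK]`, whereas this version always inserts `[MASK]`. The function name and the seed parameter are illustrative assumptions.

```python
import random

def mask_tokens(tokens, mask_prob=0.15, mask_token="[MASK]", seed=0):
    """Return (masked_tokens, labels) for masked language modelling.

    labels[i] holds the original token where a mask was applied and
    None elsewhere (positions the loss would ignore).
    """
    rng = random.Random(seed)
    masked, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            masked.append(mask_token)
            labels.append(token)    # the model must recover this token
        else:
            masked.append(token)
            labels.append(None)     # not a prediction target
    return masked, labels

tokens = "the cat sat on the mat because it was tired".split()
masked, labels = mask_tokens(tokens, mask_prob=0.3, seed=1)
print(masked)
print(labels)
```

Because the labels are derived from the text itself, no manual annotation is required.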

Fine-tuning: Following pre-training, the model is adapted to specific downstream NLP tasks, such as text classification, question answering, and named entity tagging. This means training the pre-trained network further, frequently after adding lightweight, task-specific classifier layers on top of the transformer’s output. Many tasks can be formulated as classification problems over the contextualized representations generated by the transformer (e.g., using the output vector of the special [CLS] token for sequence classification). Because fine-tuning builds on the knowledge gained during large-scale pre-training, it can reach high performance on smaller, task-specific datasets.
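
The idea of a lightweight classifier on top of the [CLS] output vector can be sketched as a single linear layer followed by a softmax. The example below uses random numbers in place of real transformer outputs and assumes, as in BERT-style inputs, that [CLS] is the first token; the names and sizes are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def classify_from_cls(hidden_states, w, b):
    """Sequence classification from the [CLS] vector.

    hidden_states: (seq_len, hidden_size) transformer outputs,
                   with [CLS] assumed to be at position 0.
    w, b:          weights of the task-specific linear layer.
    """
    cls_vector = hidden_states[0]
    logits = cls_vector @ w + b
    return softmax(logits)

rng = np.random.default_rng(0)
seq_len, hidden_size, num_labels = 10, 768, 3   # illustrative sizes
hidden_states = rng.normal(size=(seq_len, hidden_size))   # stand-in for model output
w = rng.normal(size=(hidden_size, num_labels)) * 0.02
b = np.zeros(num_labels)

probs = classify_from_cls(hidden_states, w, b)
print(probs)
```

During fine-tuning, `w` and `b` would be trained on the labelled task data, usually together with the transformer weights themselves.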

Transformer-based Models

There are several transformer models, most of which fit within one of the three primary architectural categories. Among the well-known models are:

- Bidirectional Encoder Representations from Transformers (BERT):

  - An encoder-only model.

  - Serves as the basis for many later models.

  - Trained with Masked Language Modelling (MLM) and Next Sentence Prediction (NSP) objectives. MLM uses both left and right context to predict masked tokens.

  - Used for NLU tasks such as question answering, NER, and text classification.

  - The original BERT (base) had 12 transformer layers with 12 attention heads each, a hidden size of 768, and a subword vocabulary of about 30,000 WordPiece tokens.

  - BERT embeddings can benefit many NLP tasks.

  - Variations and related models include XLNet, BART, ELECTRA, multilingual BERT models, SpanBERT, XLM-RoBERTa, ALBERT, DeBERTa, DistilBERT, and RoBERTa.

- Generative Pre-trained Transformer (GPT):

  - A decoder-only model.

  - Adept at predicting the next word in a sequence.

  - Mostly employed for text generation tasks.

  - Uses causal (autoregressive) attention, so it relies solely on the left context.

  - GPT-Neo and GPT-J-6B are GPT-like models that attempt to replicate the GPT-3-scale models of the GPT family.

- A Lite BERT (ALBERT):

  - A BERT variant.

  - An encoder-only model.

  - Reported state-of-the-art performance on the SQuAD 2.0 QA benchmark, despite being computationally demanding.
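
To give a feel for what the BERT configuration listed above (12 layers, hidden size 768) means in terms of model size, the following sketch counts parameters for a BERT-style encoder. Several values are assumptions not stated in the text above: a feed-forward size of 3072, 512 position embeddings, 2 segment types, a final pooling layer, and the 30,522-token vocabulary of the published uncased base checkpoint. The function name is hypothetical.

```python
def bert_param_count(vocab_size=30522, hidden=768, layers=12,
                     ffn=3072, max_positions=512, type_vocab=2):
    """Approximate parameter count of a BERT-style encoder (assumed layout)."""
    layer_norm = 2 * hidden                       # scale and bias
    # Token, position, and segment embeddings plus one layer norm.
    embeddings = (vocab_size + max_positions + type_vocab) * hidden + layer_norm
    # Query, key, value, and output projections (weights + biases).
    attention = 4 * (hidden * hidden + hidden)
    # Two linear layers of the feed-forward block (weights + biases).
    feed_forward = hidden * ffn + ffn + ffn * hidden + hidden
    per_layer = attention + feed_forward + 2 * layer_norm
    # Pooling layer applied to the [CLS] output.
    pooler = hidden * hidden + hidden
    return embeddings + layers * per_layer + pooler

total = bert_param_count()
print(f"{total:,}")   # 109,482,240
```

Under these assumptions the total comes to roughly 110 million parameters. The number of attention heads does not appear in the count, because the heads only partition the hidden dimension.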

Two more noteworthy Transformer-based models are T5 (Text-to-Text Transfer Transformer), an encoder-decoder model trained on a variety of NLP problems framed as text-to-text tasks, and BART, which combines the pre-training approaches of BERT and GPT within an encoder-decoder architecture.

The Hugging Face transformers library is commonly used to work with these models; it offers pre-trained models and fine-tuning tools.

Transformers are also expanding beyond text, with applications such as Visual Question Answering (VQA) and scanned document analysis (for example, LayoutLM). Such multimodal models frequently combine an encoder-only design with inputs from other modalities.

Transformers are now widely used in NLP applications, such as:

- Language Modelling

- Masked Language Modelling

- Text Generation

- Language Understanding

- Machine Translation

- Text Classification

- Sequence Labelling (such as Named Entity Recognition and Part-of-Speech tagging)

- Question Answering (QA)

- Summarization

Large language models (LLMs): A large language model is an AI model trained on enormous volumes of text data to comprehend and produce human-like text. The term “large” refers to the enormous datasets LLMs are trained on; the models themselves are usually Transformer-based. Gemini and GPT-4 are two examples of LLMs.

Libraries: The Hugging Face transformers library is commonly used to work with these models. It offers pre-trained models and resources for fine-tuning them on downstream tasks.
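
As a small, hedged illustration of the library in use, the sketch below wraps the `pipeline` helper from transformers in a function that asks a pre-trained BERT model to fill in a masked token. It assumes the `transformers` package and a backend such as PyTorch are installed, and that the `bert-base-uncased` checkpoint can be downloaded on first use. The wrapper name `fill_mask_demo` and the example sentence are illustrative.

```python
def fill_mask_demo(text="Transformers rely on [MASK] attention.",
                   model_name="bert-base-uncased"):
    """Return (token, score) pairs predicted for the [MASK] position.

    The import is done lazily so that defining this function does not
    require the library; calling it does, and may download the model.
    """
    from transformers import pipeline

    unmasker = pipeline("fill-mask", model=model_name)
    predictions = unmasker(text)
    return [(p["token_str"], p["score"]) for p in predictions]
```

Calling `fill_mask_demo()` returns the model's top candidate tokens with their scores. This is the masked language modelling objective from pre-training, used directly at inference time.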