Matrix factorization

In Natural Language Processing (NLP), matrix factorization techniques are used for feature extraction, dimensionality reduction, and the discovery of latent structure in text. They decompose a matrix that records associations between words and contexts (such as documents) into a product of smaller matrices. The aim is to capture the most significant underlying patterns in a lower-dimensional space.

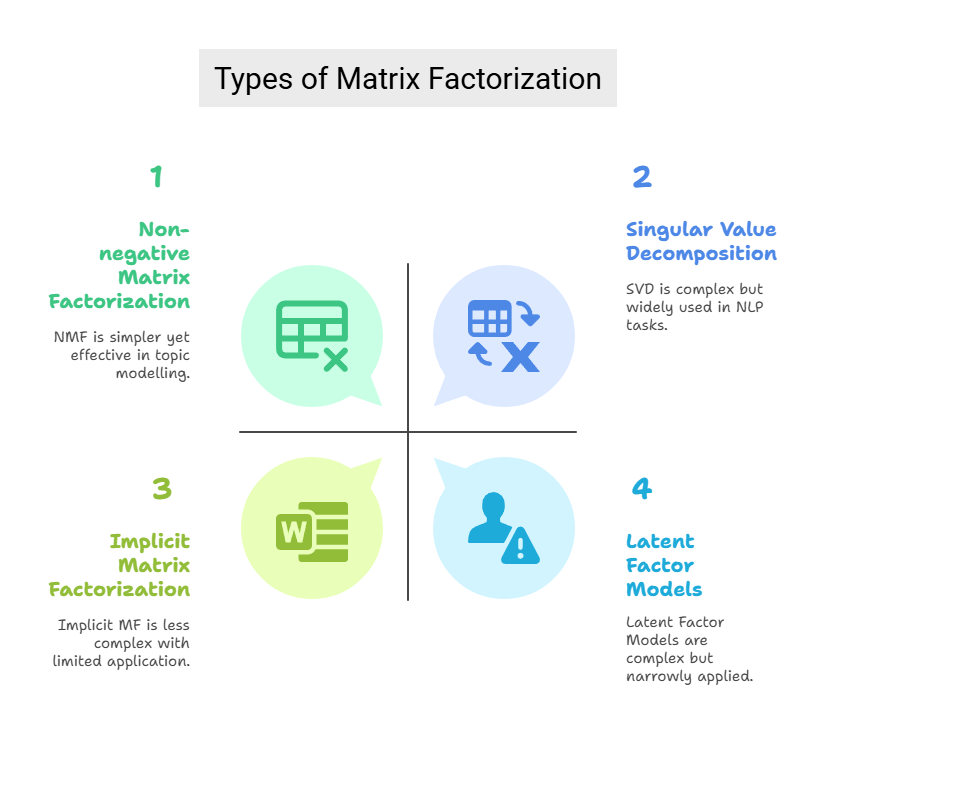

Types of Matrix Factorization

- Singular Value Decomposition (SVD): A widely used method that factors a matrix into three smaller matrices. It underlies latent semantic analysis (LSA) and dimensionality reduction in natural language processing. Because it yields compact representations of text and exposes hidden patterns, SVD is especially useful for large text datasets.

- Non-negative Matrix Factorization (NMF): A technique that factors a non-negative matrix into smaller factor matrices whose entries are all constrained to be non-negative, which tends to make the factors easier to interpret. It is frequently used in topic modelling, where it helps identify themes within a collection of documents.

- Implicit Matrix Factorization: Association measures such as PMI (pointwise mutual information), PPMI (positive PMI), and Shifted PMI are used to weight word-context matrices built from large corpora. Factorizing these matrices, explicitly or implicitly, produces dense word representations that capture relationships between words.

- Latent Factor Models: Frequently used in collaborative filtering, these models represent users and items with latent factors and make predictions from user preferences and item characteristics. A matrix factorization model is one kind of latent factor model: it decomposes a rating matrix into user and item matrices, then multiplies them to predict missing ratings.
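To make the PMI-based weighting from the list above concrete, here is a hedged sketch that computes PPMI (and, optionally, shifted PPMI) from a small co-occurrence matrix and then factors the result with a truncated SVD. The function name `ppmi`, the `shift` parameter, and the counts are hypothetical, chosen only for illustration.

```python
import numpy as np

def ppmi(counts, shift=1.0):
    """Return max(PMI - log(shift), 0) for a word-context count matrix.

    shift=1.0 gives plain PPMI; shift=k > 1 gives shifted PPMI.
    """
    counts = np.asarray(counts, dtype=float)
    total = counts.sum()
    p_wc = counts / total                              # joint probabilities
    p_w = counts.sum(axis=1, keepdims=True) / total    # word marginals
    p_c = counts.sum(axis=0, keepdims=True) / total    # context marginals
    with np.errstate(divide="ignore", invalid="ignore"):
        pmi = np.log(p_wc / (p_w * p_c))
    # Unseen pairs get PMI of -inf so that they are clipped to zero below.
    pmi = np.where(counts > 0, pmi, -np.inf)
    return np.maximum(pmi - np.log(shift), 0.0)

# Hypothetical co-occurrence counts: rows are words, columns are context words.
counts = np.array([
    [10, 0, 2],
    [0, 8, 2],
    [2, 2, 0],
])
M = ppmi(counts)

# Factor the PPMI matrix with a rank-2 truncated SVD to get dense word vectors.
U, s, Vt = np.linalg.svd(M)
word_vecs = U[:, :2] * s[:2]
print(np.round(M, 3))
print(word_vecs.shape)
```

The PPMI matrix itself is still sparse and as wide as the vocabulary of contexts; the dense vectors only appear after the factorization step.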


Matrix Factorization Techniques

The following summarizes the main matrix factorization techniques:

- Core Idea: The basic idea is to represent words or documents as vectors in a space that captures associations (such as co-occurrence). First a matrix is built, such as a word-context matrix (rows are words, columns are contexts, and entries quantify association) or a term-document matrix (rows are words, columns are documents, and entries are counts or weights). These matrices, which are often large and sparse, are then decomposed into products of smaller matrices. The result is a set of lower-dimensional vector representations, frequently called embeddings, for the original matrix’s rows (such as words) and columns (such as documents or contexts).

- Motivation (Resolving Sparsity): Representing words by explicit counts of the contexts in which they appear leads to data sparsity. Matrix factorization mitigates this by finding a low-rank representation that reduces dimensionality while retaining the most important information.

- Specific Matrix Factorization Methods:

- Singular Value Decomposition (SVD): A common algebraic method, used in Latent Semantic Analysis (LSA). SVD factors a matrix into three matrices: U, Σ (a diagonal matrix of singular values), and V^T. For dimensionality reduction, a truncated SVD retains only the largest singular values. The dense, lower-dimensional representations (embeddings) are the rows of U and of V (the columns of V^T), optionally scaled by the singular values. The columns of U and V are orthonormal. Principal Component Analysis (PCA) is equivalent to SVD applied to a mean-centered matrix.

- PLSA (Probabilistic Latent Semantic Analysis), also known as PLSI (Probabilistic Latent Semantic Indexing): An alternative matrix factorization model and early topic model that followed SVD-based LSA. In contrast to SVD, the basis vectors in PLSA need not be orthogonal, and both the basis vectors and the transformed representations must be non-negative.

- Non-negative Matrix Factorization (NMF): NMF is also regarded as an alternative matrix factorization model and early topic model. Like PLSA, it requires the basis vectors and the resulting representations (such as document-topic weights) to be non-negative. Semantically, this non-negativity makes it natural to read a weight as the strength of a topic. NMF and PLSA are closely related.

- Latent Dirichlet Allocation (LDA): An early topic model and latent variable model, listed alongside PLSI and NMF as an alternative that emerged after the original SVD-based LSA work.

- Logistic Matrix Factorization: A more elaborate matrix factorization technique suited to binary data, such as implicit feedback in recommender systems. Notably, the Skip-Gram with Negative Sampling (SGNS) variant of Word2vec can be viewed as performing logistic matrix factorization on a word-context matrix.

- Multinomial Matrix Factorization: With a softmax output layer, the “vanilla” Skip-Gram model (without negative sampling) can be recast as a multinomial matrix factorization model.

- Relation to Neural Networks (Autoencoders): Autoencoders are basic neural network architectures that can be used for matrix factorization and dimensionality reduction.

- Traditional matrix factorization techniques like SVD can be simulated with a straightforward single-layer autoencoder.

- By changing the activation functions or adding layers, neural network architectures offer a flexible way to implement variants of matrix factorization, such as logistic matrix factorization, probabilistic latent semantic analysis (PLSA), and non-negative matrix factorization (NMF).

- Multi-layer autoencoders with non-linear activation functions can perform nonlinear dimensionality reduction and matrix factorization.

- The link arises from viewing the hidden layer of the autoencoder as producing the reduced representation (one factor matrix) and the weights of the network as defining the basis vectors (the other factor matrix).
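The autoencoder connection can be sketched with a small linear autoencoder trained by plain gradient descent and compared against the truncated SVD of the same matrix. This is an illustrative sketch under stated assumptions: the random data, the learning rate, the step count, and all variable names are hypothetical, and NumPy is used instead of a deep learning framework to keep the example short.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.random((20, 6))        # hypothetical data: 20 rows, 6 features
X = X - X.mean(axis=0)         # mean-center the columns
k = 2                          # size of the hidden layer

# Linear autoencoder: hidden = X @ W_enc, reconstruction = hidden @ W_dec.
W_enc = 0.1 * rng.standard_normal((6, k))
W_dec = 0.1 * rng.standard_normal((k, 6))

def loss(W_enc, W_dec):
    """Squared reconstruction error of the autoencoder."""
    return np.sum((X @ W_enc @ W_dec - X) ** 2)

initial_loss = loss(W_enc, W_dec)
lr = 0.01                      # assumed step size for this toy problem
for _ in range(5000):
    H = X @ W_enc              # hidden layer: reduced representation (one factor)
    R = H @ W_dec - X          # reconstruction residual
    grad_dec = 2 * H.T @ R
    grad_enc = 2 * X.T @ R @ W_dec.T
    W_enc -= lr * grad_enc
    W_dec -= lr * grad_dec
final_loss = loss(W_enc, W_dec)

# Best possible rank-k squared error, given by the discarded singular values.
s = np.linalg.svd(X, compute_uv=False)
svd_loss = np.sum(s[k:] ** 2)

print("autoencoder loss:", final_loss)
print("truncated SVD loss:", svd_loss)
```

Here `X @ W_enc` plays the role of one factor matrix and `W_dec` the other. No rank-`k` reconstruction can beat the truncated SVD error, so the autoencoder loss can at best approach `svd_loss`. The learned factors generally differ from U and V by an invertible transformation, because the autoencoder is not constrained to produce orthonormal basis vectors.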


Matrix factorization approaches are essentially a collection of methods for decomposing association matrices to find lower-dimensional representations of text data. Conventional approaches such as SVD, NMF, and PLSA rely on particular mathematical constraints (orthogonality, non-negativity), whereas neural network architectures, especially autoencoders, provide a flexible framework to simulate, generalise, and explore variations of these factorization techniques.
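The two kinds of constraint can be checked numerically. The sketch below factors the same non-negative matrix with a truncated SVD and with NMF, using the standard multiplicative update rules for squared error. The random matrix, the iteration count, and the variable names are assumptions made for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.random((6, 5))         # hypothetical non-negative term-document weights
k = 2

# SVD: basis vectors are orthonormal, but entries may be negative.
U, s, Vt = np.linalg.svd(X, full_matrices=False)
U_k = U[:, :k]
svd_error = np.linalg.norm(X - (U_k * s[:k]) @ Vt[:k])

# NMF via multiplicative updates: factors stay non-negative, not orthogonal.
W = rng.random((6, k)) + 0.1
H = rng.random((k, 5)) + 0.1
eps = 1e-9                     # avoids division by zero
for _ in range(200):
    H *= (W.T @ X) / (W.T @ W @ H + eps)
    W *= (X @ H.T) / (W @ H @ H.T + eps)
nmf_error = np.linalg.norm(X - W @ H)

print("SVD basis orthonormal:", np.allclose(U_k.T @ U_k, np.eye(k)))
print("NMF factors non-negative:", bool((W >= 0).all() and (H >= 0).all()))
print("SVD error:", svd_error)
print("NMF error:", nmf_error)
```

The NMF error cannot fall below the SVD error at the same rank; the extra constraint trades some reconstruction accuracy for factors that can be read as additive topic strengths.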