Text summarization

Discover the main types of text summarization in NLP and why this AI-driven technique is essential in today’s fast-paced digital world.

Text summarization is the process of creating a concise and coherent summary of a longer text while retaining its key information and main points. It is considered a task within information retrieval and document analysis, helping users quickly understand the essential content without reading the entire original text. Research on text summarization has advanced considerably over the last 10 years.

Why is text summarization important?

- Summaries reduce reading time.

- They make the selection process easier when researching documents.

- Automatic summarization can improve the effectiveness of indexing.

- Automatic summarization algorithms can be less biased than human summarizers, although they may still reflect biases in their training data or design.

- Personalized summaries are useful in question-answering systems as they can provide tailored information.

- Using automatic or semi-automatic systems allows commercial abstract services to increase the number of text documents they can process.

- Summaries make information more accessible to a broader audience, including those with limited time, attention span, or reading abilities.

- Automatic summarization can process text in multiple languages, enabling cross-language summarization.

- Summarization aids decision-making by presenting important information in a condensed format.

- Summaries allow readers to skim through the main ideas and concepts without needing to read every detail.

- Summaries act as a quick reference point for finding relevant information within documents.

- Summarization is also a motivating application for syntactic parsing. Multi-document summarization condenses several documents on the same topic, and sentence compression trims individual sentences so that only the relevant portions remain.

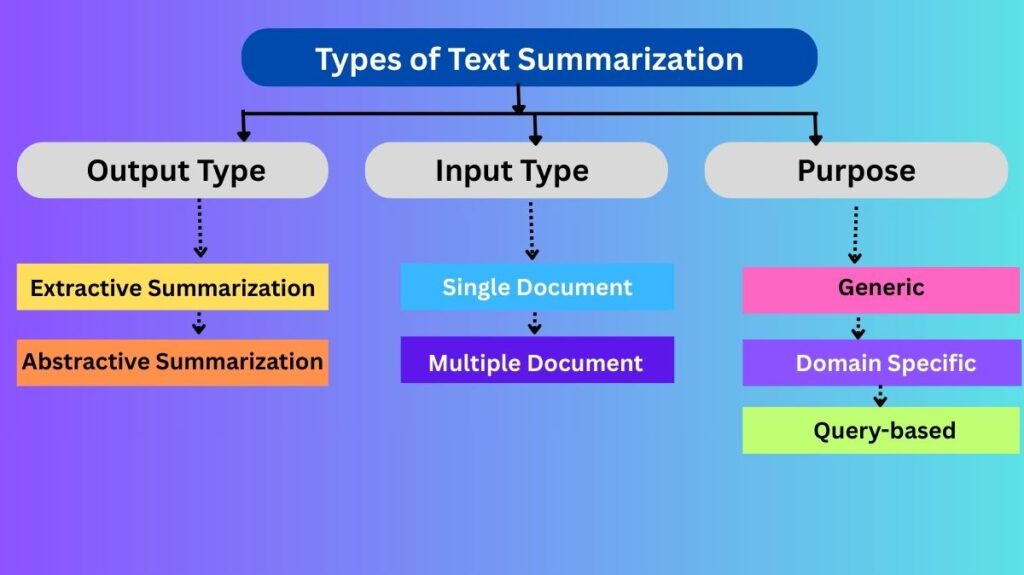

Types of Text Summarization

Based on Output Type:

Extractive Summarization: This involves selecting and combining important sentences or phrases directly from the original text to create a summary. It does not generate new sentences but rather extracts existing ones.

Abstractive Summarization: This involves understanding the text’s meaning and creating new sentences that convey the main ideas. It often uses natural language generation techniques. Neural abstractive summarization can be approached using encoder-decoder architectures.
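To make the extractive approach concrete, here is a minimal sketch that scores each sentence by the total frequency of its words and returns the top sentences unchanged. The function names, the tiny stopword list, and the regex-based sentence splitter are illustrative assumptions, not part of any particular library.

```python
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption; real systems use larger lists).
STOPWORDS = {"the", "a", "an", "of", "and", "to", "in", "is", "are",
             "it", "on", "that", "this", "for", "with", "as", "by"}

def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p.strip() for p in parts if p.strip()]

def tokenize(sentence):
    """Lowercase word tokens with stopwords removed."""
    return [w for w in re.findall(r"[a-z']+", sentence.lower())
            if w not in STOPWORDS]

def summarize_by_frequency(text, n_sentences=2):
    """Return the n highest-scoring sentences, kept in their original order."""
    sentences = split_sentences(text)
    freq = Counter(w for s in sentences for w in tokenize(s))
    # Score = sum of document-level frequencies of the words in the sentence.
    scores = [sum(freq[w] for w in tokenize(s)) for s in sentences]
    top = sorted(range(len(sentences)), key=lambda i: scores[i],
                 reverse=True)[:n_sentences]
    return [sentences[i] for i in sorted(top)]

if __name__ == "__main__":
    demo = "Cats sleep a lot. Cats chase mice and cats climb trees. Dogs bark."
    print(summarize_by_frequency(demo, n_sentences=1))
```

Because the score is a plain sum, longer sentences tend to win. Dividing by sentence length is a common adjustment, but it is left out here to keep the sketch short.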

Based on Input Type:

Single-Document Summarization: The summary is produced from one source text.

Multi-Document Summarization: The goal is to produce a summary that covers the content of several documents. Sentence fusion can be used here to combine related sentences into one.

Based on the Purpose:

- Generic

- Domain Specific

- Query-based

Techniques for Text Summarization

Extractive Summarization Techniques:

- Graph-based Methods: Represent the text as a graph, typically with sentences as nodes and similarity scores as edges, and rank the nodes with an algorithm such as TextRank, which adapts PageRank to text. The highest-ranked sentences form the summary. Older releases of the Gensim Python package (before version 4.0) provided a summarize function based on TextRank; the summarization module was removed in Gensim 4.0.

- Machine Learning Models: Supervised or unsupervised machine learning algorithms (e.g., Support Vector Machines, Clustering) can rank sentences based on features like sentence length, word frequency, and semantic similarity. One could develop a simple extractive summarization tool that prints the sentences with the highest total word frequency.

- Feature-based text summarization

- LexRank: Builds a sentence graph from TF-IDF cosine similarity and ranks sentences by their centrality in that graph.

- The TextTiling algorithm searches for parts of a text where the subtopic vocabulary shifts. Segmentation points are identified at local minima in smoothed similarities between adjacent blocks of text.

- Discourse structure can be exploited in summarization. In many discourse relations, one phrase depends on the other (nucleus and satellite). The subsidiary phrases (satellites) might be dropped in a summary, as the main phrase (nucleus) is more critical. Discourse depth and nuclearity can be incorporated into extractive summarization using constrained optimisation.
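The graph-based idea from the list above can be sketched in a few lines of plain Python. This is a simplified, TextRank-style ranker, not the implementation of any specific package. It uses the overlap similarity described for TextRank (shared words divided by the sum of log sentence lengths), but treats each sentence as a set of unique words. The function names and default parameters are assumptions made for illustration.

```python
import math
import re

def tokenize(sentence):
    """Set of lowercase word tokens in a sentence."""
    return set(re.findall(r"[a-z']+", sentence.lower()))

def similarity(a, b):
    """Shared words, normalised by the log sizes of both token sets."""
    if len(a) < 2 or len(b) < 2:
        return 0.0  # guards against log(0) and a zero denominator
    return len(a & b) / (math.log(len(a)) + math.log(len(b)))

def textrank(sentences, damping=0.85, iterations=50):
    """Score sentences with weighted PageRank over a similarity graph."""
    tokens = [tokenize(s) for s in sentences]
    n = len(sentences)
    # Weighted, undirected graph stored as an adjacency matrix.
    w = [[similarity(tokens[i], tokens[j]) if i != j else 0.0
          for j in range(n)] for i in range(n)]
    out = [sum(row) for row in w]  # total edge weight leaving each node
    scores = [1.0] * n
    for _ in range(iterations):
        scores = [
            (1 - damping) + damping * sum(
                w[j][i] / out[j] * scores[j]
                for j in range(n) if out[j] > 0)
            for i in range(n)
        ]
    return scores

def summarize(sentences, k=2):
    """Return the k best-ranked sentences in their original order."""
    scores = textrank(sentences)
    top = sorted(range(len(sentences)), key=lambda i: scores[i],
                 reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]

if __name__ == "__main__":
    docs = [
        "The cat sat on the mat.",
        "The cat chased the mouse across the mat.",
        "A mouse ran under the table.",
        "Stock prices fell sharply today.",
    ]
    print(summarize(docs, k=2))
```

A sentence that shares no words with the others receives only the baseline score of 1 - damping, so off-topic sentences sink to the bottom of the ranking.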

Abstractive Summarization Techniques

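Deep Learning Models: Recurrent Neural Networks (RNNs) and transformer models can generate summaries by modelling the context, semantics, and relationships within the text. This usually involves an encoder-decoder model, such as BART or T5, or a decoder-only model such as GPT. Encoder-only models like BERT do not generate text on their own and are more commonly used as the encoder of such a system or to score sentences for extractive summarization. Pointer-generator networks are also used for summarization: they can either generate a word from the vocabulary or copy one from the source text. Sentence summarization can be treated as a machine translation problem using an attentional encoder-decoder model.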
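The copy mechanism in a pointer-generator network comes down to one mixing step at each decoding position: the final probability of a word is p_gen times its vocabulary probability, plus (1 - p_gen) times the attention mass on every source position holding that word. The sketch below shows only that step. In a real model the decoder produces p_gen, the vocabulary distribution, and the attention weights; here they are made-up numbers, and the function name is hypothetical.

```python
from collections import defaultdict

def final_distribution(p_gen, vocab_dist, attention, source_tokens):
    """Mix generation and copy probabilities for one decoding step.

    p_gen         -- probability of generating from the vocabulary (0..1)
    vocab_dist    -- dict mapping vocabulary words to probabilities
    attention     -- attention weights, one per source token (sum to 1)
    source_tokens -- the source words aligned with `attention`
    """
    final = defaultdict(float)
    for word, prob in vocab_dist.items():
        final[word] += p_gen * prob
    # Copy distribution: attention mass is added to the word at each position,
    # so a source word that appears twice accumulates both weights.
    for token, weight in zip(source_tokens, attention):
        final[token] += (1.0 - p_gen) * weight
    return dict(final)

if __name__ == "__main__":
    # Illustrative values only; "arsenal" is out of vocabulary but copyable.
    dist = final_distribution(
        p_gen=0.5,
        vocab_dist={"the": 0.5, "team": 0.3, "won": 0.2},
        attention=[0.5, 0.25, 0.25],
        source_tokens=["arsenal", "won", "arsenal"],
    )
    for word, prob in sorted(dist.items(), key=lambda kv: -kv[1]):
        print(f"{word}: {prob:.3f}")
```

The example shows why the mechanism helps with rare names: a source word missing from the vocabulary can still receive probability through the copy term.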

Examples and Applications

- Generating summaries of news articles.

- Summarizing product reviews to understand customer sentiment. A summary might show positive and negative opinions on different features.

- Creating summaries of large time-series data sets where graphical displays are impractical. Projects like SumTime and BT-45 have investigated this. SumTime-Turbine generates short summaries of large turbine sensor datasets, while BT-45 summarises physiological signals and events in a neonatal intensive care unit, even inferring temporal and causal relationships.

- Generating textual summaries to orient users in information visualisation before they interact with it. Systems like SIGHT generate text summarising the main communicative goal of bar charts for visually impaired users.

- Summarization is an application of Natural Language Generation (NLG). Lexicalization, the mapping of semantic units onto words, plays a prominent role in NLG for summarization, especially in report domains.

- Condensing information from multiple documents about the same topic.

- Compressing individual sentences so that only the relevant portions remain.

- Text summarization is relevant to recommender systems for textual data.

In the context of discourse coherence, the nuclei of a text are presumed to express more important information than the satellites, which might be dropped in a summary. Discourse depth and nuclearity can be incorporated into extractive summarization using constrained optimisation. Semantic analysis and ontologies can also play a role in text summarization.