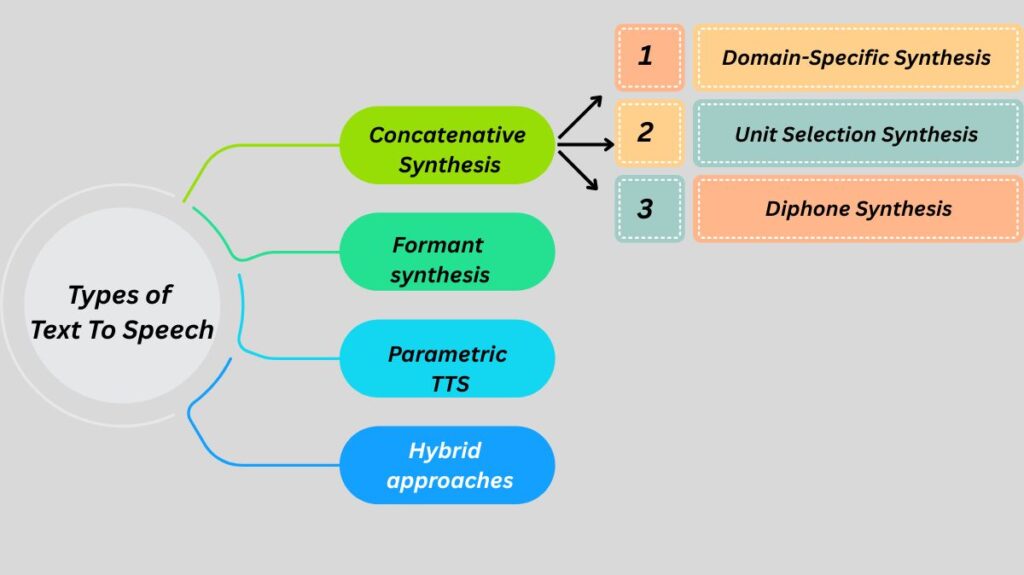

Types of Text To Speech

- Concatenative Synthesis: Concatenative TTS builds speech by joining high-quality recorded audio clips. To build the database, voice actors are recorded uttering a range of speech units, from entire sentences down to syllables, and the recordings are then labelled and segmented into linguistic units such as phones, phrases, and sentences. To produce an audio file, the Text-to-Speech engine searches this database for speech units that match the input text. Concatenative synthesis has three main subtypes:

- Domain-Specific Synthesis: Domain-specific synthesis concatenates prerecorded words and phrases to form complete utterances. It is used where the range of texts the system may produce is restricted to a specific domain, such as weather reports or transit schedule announcements. The technology is very easy to implement and has long been used commercially in devices such as talking clocks and calculators.

- Unit Selection Synthesis: Unit selection synthesis draws on large databases of recorded speech. During database creation, each recorded utterance is segmented into some or all of the following: individual phones, diphones, half-phones, syllables, morphemes, words, phrases, and sentences. The segmentation is typically done with a specially modified speech recogniser set to a forced alignment mode, followed by some manual correction using visual representations such as waveforms and spectrograms. An index of the units in the speech database is then built from the segmentation and from acoustic properties such as fundamental frequency (pitch), duration, position in the syllable, and neighbouring phones. At runtime, the intended target utterance is constructed by selecting the best chain of candidate units from the database; this process is what is called unit selection. It is usually accomplished with a specially weighted decision tree.

- Diphone Synthesis: Diphone synthesis uses a minimal speech database containing every diphone (sound-to-sound transition) that occurs in a language. The number of diphones depends on the phonotactics of the language: Spanish has roughly 800 diphones, while German has about 2500. The database holds only one example of each diphone. At runtime, the target prosody of a sentence is superimposed on these minimal units using digital signal processing techniques such as linear predictive coding, PSOLA, or MBROLA.

- Formant Synthesis: Formant synthesis is a rule-based TTS technology. It produces speech segments by applying a set of predefined rules to generate artificial signals that imitate the formant structure and other spectral characteristics of natural speech. The synthesised speech is created using additive synthesis and an acoustic model whose parameters, such as fundamental frequency, voicing, and noise levels, vary over time. Because formant-based systems control every aspect of the output speech, they can, with suitable prosodic and intonation modelling, convey a wide range of moods and tones of voice.

- Parametric TTS: A more statistical approach was developed to overcome the drawbacks of concatenative TTS. It rests on the idea that if we can approximate the parameters that make up speech, we can train a model to produce any kind of speech. The parametric method generates speech by combining parameters such as fundamental frequency and magnitude spectrum. First, the text is processed to extract linguistic features such as phonemes and duration. Second, vocoder features are extracted, such as cepstra, spectrogram, and fundamental frequency, which are used in audio processing and capture inherent characteristics of human speech. These hand-engineered features, together with the linguistic features, are fed into a mathematical model called a vocoder. While generating the waveform, the vocoder estimates and transforms speech properties such as phase, speech rate, and intonation. The method uses hidden semi-Markov models: transitions between states remain Markov, but the time spent within each state is described by an explicit duration model that is not Markov.

- Hybrid (Deep Learning) approaches: The DNN (Deep Neural Network) based approach is another variant of statistical synthesis. It addresses the inefficiency of the decision trees used in HMM-based systems to model complex context dependencies. Hand-engineered features reflect human understanding of speech, which may or may not be accurate, so letting machines design features without human intervention was a step forward and ultimately a breakthrough. In DNN-based methods, a deep neural network models the relationship between input texts and their acoustic realisations. The acoustic features are generated using maximum likelihood parameter generation with trajectory smoothing. Features learned through deep learning are not human-readable, but they are machine-readable and serve as the data a model needs.
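
To make the unit selection step from the list above more concrete, here is a minimal sketch of choosing the best chain of candidate units at runtime. It is an illustration under stated assumptions, not how any particular engine works: it uses a Viterbi-style dynamic-programming search over a target cost and a join cost rather than the weighted decision tree mentioned above, and the `Unit` fields, cost weights, and tiny database are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Unit:
    """One recorded segment in a (hypothetical) speech database."""
    phone: str          # phone label this recording realises
    pitch_hz: float     # average fundamental frequency of the recording
    duration_ms: float  # length of the recording


def target_cost(unit, want_pitch, want_dur):
    # Distance between a candidate and the prosody we want.
    # The divisors are illustrative weights, not tuned values.
    return abs(unit.pitch_hz - want_pitch) / 50.0 + abs(unit.duration_ms - want_dur) / 100.0


def join_cost(prev, nxt):
    # Penalise pitch jumps at the concatenation point.
    return abs(prev.pitch_hz - nxt.pitch_hz) / 50.0


def select_units(targets, database):
    """targets: list of (phone, pitch_hz, duration_ms). Returns the cheapest chain of Units."""
    if not targets:
        return []

    # Collect the candidate recordings for each target position.
    candidates = []
    for phone, _, _ in targets:
        cands = [u for u in database if u.phone == phone]
        if not cands:
            raise ValueError(f"no recorded unit for phone {phone!r}")
        candidates.append(cands)

    # best[i][j] = (cheapest total cost ending in candidate j at position i, back-pointer)
    _, pitch, dur = targets[0]
    best = [[(target_cost(u, pitch, dur), None) for u in candidates[0]]]
    for i in range(1, len(targets)):
        _, pitch, dur = targets[i]
        row = []
        for u in candidates[i]:
            cost, back = min(
                (best[i - 1][k][0] + join_cost(p, u), k)
                for k, p in enumerate(candidates[i - 1])
            )
            row.append((cost + target_cost(u, pitch, dur), back))
        best.append(row)

    # Trace back from the cheapest final candidate.
    j = min(range(len(best[-1])), key=lambda k: best[-1][k][0])
    chain = []
    for i in range(len(targets) - 1, -1, -1):
        chain.append(candidates[i][j])
        j = best[i][j][1]
    chain.reverse()
    return chain


# Hypothetical toy database: two recordings of each phone.
DATABASE = [
    Unit("h", 120.0, 60.0),
    Unit("h", 180.0, 70.0),
    Unit("ai", 125.0, 150.0),
    Unit("ai", 200.0, 140.0),
]
TARGETS = [("h", 120.0, 60.0), ("ai", 130.0, 150.0)]

chain = select_units(TARGETS, DATABASE)
print([(u.phone, u.pitch_hz) for u in chain])
```

The join cost is what distinguishes this from picking the closest unit for each position independently: a recording that matches its target well can still lose if it would create an audible pitch jump against its neighbours.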
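
The formant synthesis idea from the list above can also be sketched in a few lines. The example below is a simplified illustration, not a production synthesiser: it builds a steady vowel by additive synthesis, summing harmonics of the fundamental frequency and weighting each one by a simple resonance curve around each formant. The function names, the shape of the resonance curve, and the formant values (rough figures for an "ah"-like vowel) are assumptions, and every parameter is held fixed, whereas the acoustic model described above varies its parameters over time.

```python
import math

# Assumed (centre frequency in Hz, bandwidth in Hz) pairs for an "ah"-like vowel.
VOWEL_A_FORMANTS = [(730.0, 90.0), (1090.0, 110.0), (2440.0, 170.0)]


def formant_gain(freq_hz, formants):
    # Sum of simple resonance bumps, one per formant; peaks at each centre frequency.
    return sum(
        1.0 / (1.0 + ((freq_hz - centre) / (bandwidth / 2.0)) ** 2)
        for centre, bandwidth in formants
    )


def synthesize_vowel(f0_hz, formants, duration_s=0.3, sample_rate=16000):
    """Return a list of samples in [-1, 1] for a steady voiced sound."""
    n_samples = round(duration_s * sample_rate)
    # Only keep harmonics at or below the Nyquist frequency.
    n_harmonics = int((sample_rate / 2) // f0_hz)
    gains = [formant_gain(k * f0_hz, formants) for k in range(1, n_harmonics + 1)]

    samples = []
    for n in range(n_samples):
        t = n / sample_rate
        # Additive synthesis: one sine wave per harmonic, scaled by its formant gain.
        samples.append(
            sum(g * math.sin(2.0 * math.pi * k * f0_hz * t)
                for k, g in enumerate(gains, start=1))
        )

    # Normalise so the loudest sample has magnitude 1.
    peak = max(abs(s) for s in samples) or 1.0
    return [s / peak for s in samples]


samples = synthesize_vowel(120.0, VOWEL_A_FORMANTS)
print(len(samples))
```

Changing `f0_hz` alters the pitch without moving the formants, which is the kind of direct control over the output that rule-based formant systems rely on. The samples could be written to a WAV file with Python's standard `wave` module to listen to the result.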

Conclusion

Text-to-speech synthesis is a rapidly developing field that is having a significant effect on how people interact with computers. The synthesis process comprises many distinct tasks and procedures.
