This article gives an overview of Recurrent Neural Networks (RNNs) in NLP, covering their architecture, an example, applications, types, and related variants.

Recurrent Neural Networks

Recurrent Neural Networks (RNNs) are a basic family of neural network architectures that are especially well suited to processing sequential input, such as text or time series. Unlike feed-forward networks, which usually handle fixed-size inputs or ignore the order of items in variable-length inputs, RNNs are designed to handle the sequential nature of language directly.

The following are some salient features of RNNs:

Architecture

- An RNN has a cycle in its network connections, so the value of a unit may be influenced by its own earlier outputs. This lets the network process a sequence one element at a time.

- The hidden layer from the previous time step provides a form of memory, or context, that encodes earlier processing and informs the decisions made at the current time step.

- The basic design consists of an input vector (x_t), a hidden state (h_t), and an output (y_t) at time t. The hidden state at time t (h_t) is computed from the current input (x_t) and the previous hidden state (h_{t-1}). The output (y_t) is then computed from the current hidden state (h_t).

- All time steps share the same weight matrices: W links the input to the hidden layer, U links the previous hidden layer to the current hidden layer, and V links the hidden layer to the output layer. This weight sharing lets the network process variable-length inputs with a fixed number of parameters.

- An RNN processing a finite sequence can be viewed as an unrolled, very deep feed-forward network whose layers all share the same parameters.
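The recurrence described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the tanh hidden activation, the softmax output, the dimension names (`d_in`, `d_h`, `d_out`) and the random weights are all assumptions chosen for the example. W, U, and V follow the roles given in the list above.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over the last axis."""
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def rnn_forward(xs, W, U, V, h0=None):
    """Run a simple RNN over a sequence.

    xs: array of shape (T, d_in), one input vector per time step
    W:  (d_h, d_in)   input -> hidden
    U:  (d_h, d_h)    previous hidden -> current hidden
    V:  (d_out, d_h)  hidden -> output
    """
    h = np.zeros(U.shape[0]) if h0 is None else h0
    hs, ys = [], []
    for x in xs:                      # one element at a time
        h = np.tanh(U @ h + W @ x)    # h_t from h_{t-1} and x_t (tanh is an assumption)
        ys.append(softmax(V @ h))     # y_t from h_t (softmax is an assumption)
        hs.append(h)
    return np.stack(hs), np.stack(ys)

# Hypothetical sizes and random, untrained weights for illustration only.
rng = np.random.default_rng(0)
d_in, d_h, d_out = 4, 8, 3
W = rng.normal(scale=0.1, size=(d_h, d_in))
U = rng.normal(scale=0.1, size=(d_h, d_h))
V = rng.normal(scale=0.1, size=(d_out, d_h))

# The same three matrices handle sequences of different lengths.
for T in (2, 5):
    hs, ys = rnn_forward(rng.normal(size=(T, d_in)), W, U, V)
    print(T, hs.shape, ys.shape)
```

The loop body is the whole model: the number of parameters stays the same whether the sequence has two elements or five, which is what weight sharing across time steps means in practice.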

Purpose

- RNNs are designed to pick up on subtle regularities and patterns in sequences. They can condition on the entire history of a sequence, which goes beyond the Markov assumption that was a mainstay of classical NLP for many years.

- They can represent sequential inputs of arbitrary length as fixed-size vectors while remaining sensitive to the structured properties of the input.

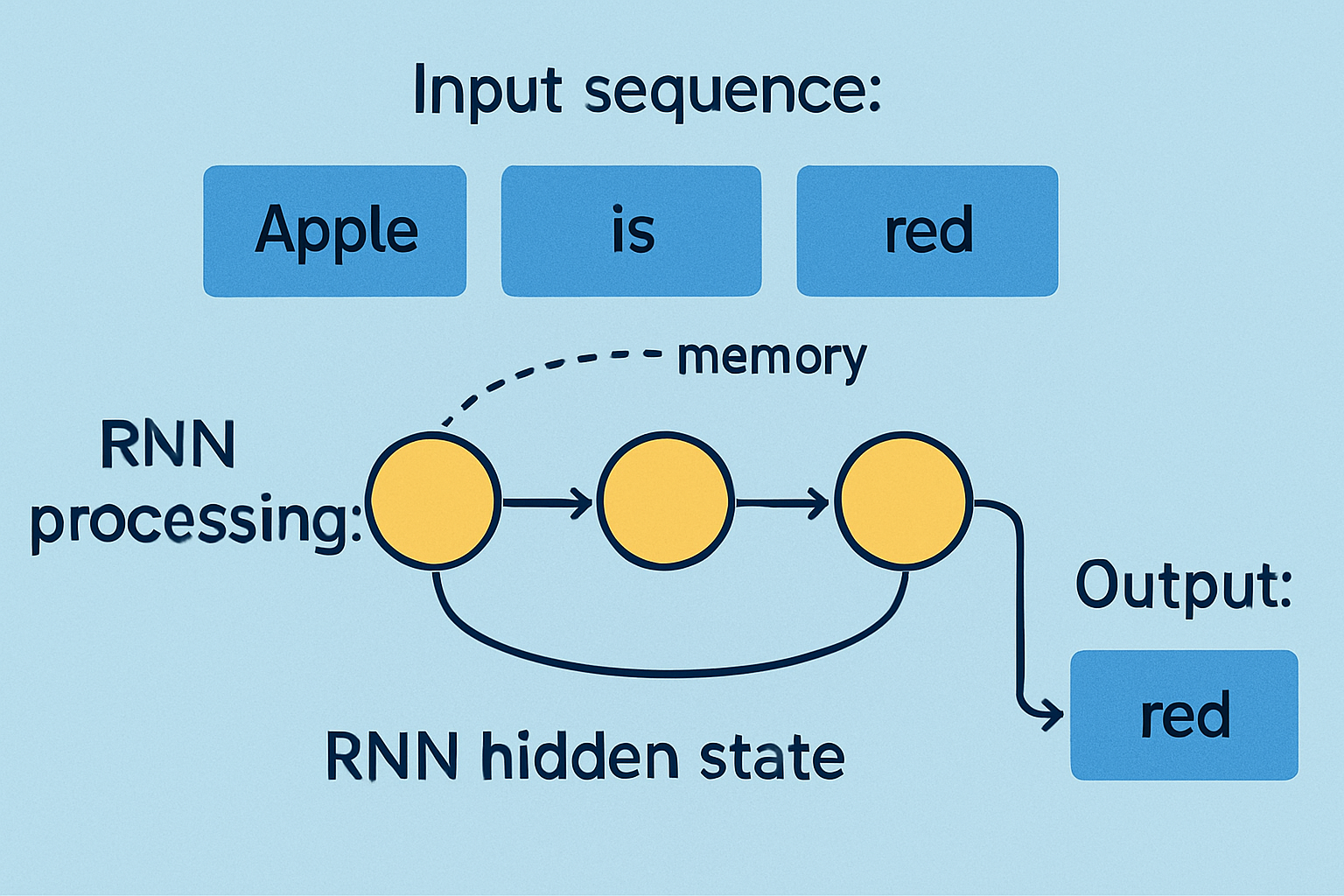

RNN NLP Example

Input Sequence: Imagine the input sequence is “Apple is red.”

RNN Processing: The RNN processes each word one by one.

- When it sees “Apple,” it encodes this information in its “memory” (hidden state).

- When it sees “is,” it recalls “Apple” from memory, allowing it to understand the context of the sentence.

- Finally, having read “Apple is,” it uses the context held in memory to predict the next word.

Output: A trained RNN assigns “red” a higher probability than other words because it has learned the association between “Apple,” “is,” and “red” from its training data.

Applications of Recurrent Neural Networks

Typical uses include:

- Language Modeling: Predicting the next word from the preceding words in a sequence.

- Sequence Tagging: Assigning a label to every element in a sequence, as in Named Entity Recognition or Part-of-Speech (POS) tagging.

- Sequence Classification: Categorizing a whole sequence, as in spam detection or sentiment analysis. This frequently involves using the final hidden state as a summary of the sequence.

- Sequence-to-Sequence Learning: Mapping an input sequence to an output sequence, as in machine translation. This typically uses an encoder RNN and a decoder RNN.

- Other applications: Speech recognition, handwriting recognition, image captioning (in conjunction with CNNs), dialogue state tracking, and stack representation.

Training

- RNNs are trained using gradient-based optimisation, just like other neural networks.

- The typical training algorithm is backpropagation through time (BPTT). This entails unrolling the network over time, calculating the loss, and then backpropagating the error gradients across the unrolled computational graph.

- For very long sequences, truncated BPTT is used: the sequence is split into segments, and gradients are backpropagated and weights updated over each segment rather than over the full length.
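The three BPTT steps (unroll, compute the loss, backpropagate through the unrolled graph) can be sketched as follows. This is an illustrative sketch under stated assumptions: a tanh hidden layer, a linear output, a squared-error loss summed over time steps, and small random weights. None of these choices come from the text above. The finite-difference comparison at the end is only a sanity check on one gradient entry.

```python
import numpy as np

def forward(xs, targets, W, U, V):
    """Unroll the RNN over the sequence; return all hidden states and the total loss."""
    h = np.zeros(U.shape[0])
    hs = [h]                                  # hs[0] is the initial state h_0
    loss = 0.0
    for x, t in zip(xs, targets):
        h = np.tanh(U @ h + W @ x)
        hs.append(h)
        y = V @ h                             # linear output (assumption)
        loss += 0.5 * np.sum((y - t) ** 2)    # squared error (assumption)
    return hs, loss

def bptt(xs, targets, W, U, V):
    """Backpropagate through the unrolled graph; return the loss and gradients."""
    hs, loss = forward(xs, targets, W, U, V)
    dW, dU, dV = np.zeros_like(W), np.zeros_like(U), np.zeros_like(V)
    dh_next = np.zeros(U.shape[0])            # gradient arriving from step t+1
    for t in reversed(range(len(xs))):
        h, h_prev = hs[t + 1], hs[t]
        dy = V @ h - targets[t]               # dLoss/dy_t
        dV += np.outer(dy, h)
        dh = V.T @ dy + dh_next               # from this step's output and from the future
        da = dh * (1.0 - h ** 2)              # back through tanh
        dW += np.outer(da, xs[t])
        dU += np.outer(da, h_prev)
        dh_next = U.T @ da                    # pass the error back to step t-1
    return loss, dW, dU, dV

# Hypothetical sizes and random data for illustration only.
rng = np.random.default_rng(0)
T, d_in, d_h, d_out = 5, 3, 4, 2
xs = rng.normal(size=(T, d_in))
targets = rng.normal(size=(T, d_out))
W = rng.normal(scale=0.5, size=(d_h, d_in))
U = rng.normal(scale=0.5, size=(d_h, d_h))
V = rng.normal(scale=0.5, size=(d_out, d_h))

loss, dW, dU, dV = bptt(xs, targets, W, U, V)

# Sanity check: compare one entry of dU with a central finite-difference estimate.
eps = 1e-6
U_plus, U_minus = U.copy(), U.copy()
U_plus[0, 1] += eps
U_minus[0, 1] -= eps
numeric = (forward(xs, targets, W, U_plus, V)[1]
           - forward(xs, targets, W, U_minus, V)[1]) / (2 * eps)
print("analytic:", dU[0, 1], "numeric:", numeric)
```

Because W, U, and V are shared across time steps, their gradients are accumulated (`+=`) over every step of the backward loop. Truncated BPTT would run the same backward loop over a shorter window of steps.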

Challenges

- The main obstacle is the vanishing and exploding gradient problems. When error signals are backpropagated across many time steps, they can shrink towards zero (vanishing) or grow uncontrollably large (exploding), which makes it hard for the network to learn long-range dependencies. This is especially problematic for RNNs, which behave like very deep networks when unrolled over long sequences.

- Because each time step depends on the result of the previous one, RNNs are hard to parallelise across a sequence.

- In practice, they can become “fuzzy far away”: they have trouble remembering details over long spans of a sequence.
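The vanishing and exploding behaviour can be illustrated with a deliberately simplified calculation. The sketch below assumes a recurrent matrix that is just a scaled identity and ignores the activation's derivative (treating it as 1), so each backward step multiplies the error signal by the transpose of the recurrent matrix. Real networks are messier, but the geometric shrinkage or growth is the same effect.

```python
import numpy as np

def backprop_norms(U, steps):
    """Norm of an error signal after being passed back through `steps` time steps.

    Simplification: the activation derivative is ignored (treated as 1), so
    each backward step just multiplies the gradient by U transposed.
    """
    g = np.ones(U.shape[0]) / np.sqrt(U.shape[0])   # unit-norm error signal
    norms = []
    for _ in range(steps):
        g = U.T @ g
        norms.append(np.linalg.norm(g))
    return norms

# Hypothetical recurrent matrices: scaled identities, chosen for clarity.
small = backprop_norms(0.5 * np.eye(4), 30)   # each step halves the signal
large = backprop_norms(1.5 * np.eye(4), 30)   # each step grows it by 50%

print(f"after 30 steps: {small[-1]:.2e} (vanishing), {large[-1]:.2e} (exploding)")
```

With a scale factor below 1 the signal from 30 steps back is effectively zero, so early inputs contribute almost nothing to the weight update; with a factor above 1 it grows by several orders of magnitude.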

Different types of RNN

RNNs may be divided into four categories according to the number of inputs and outputs of the network:

One-to-One RNN: This is the most basic design, with only one input and one output. It is applied to simple problems that do not involve sequential data, such as binary classification.

One-to-Many RNN: A One-to-Many RNN uses a single input to generate several outputs over time. This suits tasks where a single input sets off a series of predictions. In image captioning, for instance, one image is the input and the output is a sequence of words forming a caption.

Many-to-One RNN: A Many-to-One RNN produces a single output after receiving a sequence of inputs. This type is helpful when a single prediction requires the entire context of the input sequence. For example, a sentiment analysis model reads a sequence of words, such as a sentence, and produces a single output such as positive, negative, or neutral.

Many-to-Many RNN: A Many-to-Many RNN processes a sequence of inputs and produces a sequence of outputs. For example, a language translation task takes a sequence of words in one language as input and produces a corresponding sequence in another language as output.
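The difference between the Many-to-One and Many-to-Many cases comes down to which hidden states are passed to the output layer. The sketch below is an illustration under assumptions: it uses a tanh RNN with random, untrained weights, hypothetical sizes, and the simple aligned form of Many-to-Many with one output per input. Translation systems, where input and output lengths differ, typically use an encoder and a decoder instead.

```python
import numpy as np

def hidden_states(xs, W, U):
    """Return the hidden state after each input, shape (T, d_h)."""
    h = np.zeros(U.shape[0])
    out = []
    for x in xs:
        h = np.tanh(U @ h + W @ x)
        out.append(h)
    return np.stack(out)

# Hypothetical sizes and random weights for illustration only.
rng = np.random.default_rng(1)
T, d_in, d_h, n_classes = 6, 5, 7, 3
W = rng.normal(scale=0.3, size=(d_h, d_in))
U = rng.normal(scale=0.3, size=(d_h, d_h))
V = rng.normal(scale=0.3, size=(n_classes, d_h))
xs = rng.normal(size=(T, d_in))          # e.g. six word vectors

hs = hidden_states(xs, W, U)

many_to_one = V @ hs[-1]                 # one score vector for the whole sequence
many_to_many = hs @ V.T                  # one score vector per time step

print(many_to_one.shape, many_to_many.shape)
```

The recurrent part is identical in both cases. Only the read-out changes: the last hidden state alone for a sentence-level label, or every hidden state for a per-token label.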

Alternatives and Remedies

- Gated Architectures: Designed to address the vanishing gradient problem. They use differentiable “gates” to regulate the flow of information.

- Long Short-Term Memory (LSTM): This popular gated variant has explicit memory cells along with input, forget, and output gates, which control information flow and help preserve gradients. LSTMs update the cell state additively, which mitigates vanishing gradients.

- Gated Recurrent Unit (GRU): With fewer gates and no separate memory cell, this gated architecture is simpler than the LSTM but shares the core idea of controlling information flow to deal with vanishing gradients.

- Bidirectional RNNs (biRNN): Use two separate RNNs to process the input sequence forward and backward. At each time step, the two outputs are usually concatenated to provide both past and future context. This is advantageous in tasks where future context matters.

- Stacked RNNs: Consist of several RNN layers, where the output of each layer is the input to the layer above it. Stacked RNNs frequently outperform single-layer networks.

- Recursive Neural Networks (RecNN): Extend RNNs from linear sequences to tree structures. They build a vector for each tree node from the representations of its children.

- Echo-State Networks: Simplified RNNs in which the hidden-to-hidden weights are set at random and only the output weights are trained. They are useful for initialization and time-series forecasting.

- Neural Turing Machines: Add an external memory component to an RNN, which serves as a “controller”, to enable more controlled data access and possibly better generalization on intricate algorithmic tasks. Even though RNNs are Turing complete in theory, NTMs offer practical advantages in processing long sequences and generalizing to varying input sizes.
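To make the LSTM entry in the list above more concrete, here is a minimal sketch of a single LSTM time step in NumPy. It follows the commonly used formulation with input, forget, and output gates plus a candidate vector. The parameter names (`Wi`, `bf`, and so on), the choice to concatenate the input with the previous hidden state, and the random weights are assumptions made for this example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step.

    params holds, for each of the gates i (input), f (forget), o (output)
    and the candidate g, a weight matrix of shape (d_h, d_in + d_h) and a bias.
    """
    z = np.concatenate([x, h_prev])
    i = sigmoid(params["Wi"] @ z + params["bi"])   # how much new content to write
    f = sigmoid(params["Wf"] @ z + params["bf"])   # how much old content to keep
    o = sigmoid(params["Wo"] @ z + params["bo"])   # how much of the cell to expose
    g = np.tanh(params["Wg"] @ z + params["bg"])   # candidate content
    c = f * c_prev + i * g                         # additive cell-state update
    h = o * np.tanh(c)
    return h, c

# Hypothetical sizes and random, untrained weights for illustration only.
rng = np.random.default_rng(0)
d_in, d_h = 3, 5
params = {}
for name in "ifog":
    params["W" + name] = rng.normal(scale=0.1, size=(d_h, d_in + d_h))
    params["b" + name] = np.zeros(d_h)

h, c = np.zeros(d_h), np.zeros(d_h)
for x in rng.normal(size=(4, d_in)):
    h, c = lstm_step(x, h, c, params)
print(h.shape, c.shape)
```

The line `c = f * c_prev + i * g` is the additive update: when the forget gate is close to 1 and the input gate close to 0, the cell state is carried forward almost unchanged, which gives gradients a path through time that is not repeatedly squashed.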

Common Usage Patterns

- Acceptor: The supervision signal is applied only at the end of the sequence, that is, to the final output vector. This is typical for sequence classification tasks.

- Encoder: The final output vector serves as a fixed-size encoding, or summary, of the complete input sequence, which another network can then use.

- Transducer: A supervision signal or prediction is applied at every time step in the sequence. This is typical for sequence labelling tasks.

- Generator: The network produces sequences (such as text) by feeding the output of one time step back in as the input to the next. This is the basis of autoregressive generation.
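The generator pattern can be sketched as a short loop in which each predicted token becomes the next input. This is an illustrative sketch only: the toy vocabulary, the embedding table `E`, the greedy argmax choice, and the random, untrained weights are all assumptions, so the generated words are arbitrary.

```python
import numpy as np

def generate(start_id, steps, E, W, U, V):
    """Greedy autoregressive generation with a simple tanh RNN.

    E: (vocab, d_in) embedding table; row k is the input vector for token k.
    At each step the highest-scoring token is chosen and fed back as the next input.
    """
    h = np.zeros(U.shape[0])
    token = start_id
    out = [token]
    for _ in range(steps):
        h = np.tanh(U @ h + W @ E[token])
        scores = V @ h                     # one score per vocabulary item
        token = int(np.argmax(scores))     # greedy choice (sampling is also common)
        out.append(token)
    return out

# Hypothetical toy vocabulary and random weights for illustration only.
vocab = ["<s>", "apple", "is", "red", "."]
rng = np.random.default_rng(0)
d_in, d_h = 4, 6
E = rng.normal(size=(len(vocab), d_in))
W = rng.normal(size=(d_h, d_in))
U = rng.normal(size=(d_h, d_h))
V = rng.normal(size=(len(vocab), d_h))

ids = generate(start_id=0, steps=4, E=E, W=W, U=U, V=V)
print([vocab[i] for i in ids])   # untrained weights, so the words are arbitrary
```

A trained language model would use the same loop. The only differences are that the weights come from training and that the next token is often sampled from the output distribution rather than taken greedily.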

Historical Context

- Early work includes Elman networks (Elman, 1990) and the development of BPTT (Werbos, 1974, 1990; Rumelhart et al., 1986).

- Hochreiter and Schmidhuber first presented LSTMs in 1997.

- Graves (2008) and Schuster and Paliwal (1997) both suggested bidirectional RNNs.

- Around 2010, algorithmic advances, larger datasets, and better hardware made RNNs both popular and effective in deep learning. Until the introduction of Transformer networks in the late 2010s, they were widely used in NLP applications that required sequence modelling.