This article covers the Text-to-Text Transfer Transformer (T5): its core concept, architecture, how it works, how it is trained, and its applications.

T5 (Text-to-Text Transfer Transformer) is a well-known language model architecture designed to handle a broad range of Natural Language Processing (NLP) tasks within a single text-to-text framework. Every task it performs is framed as taking text as input and generating text as output.

Core Concept

- T5 unifies NLP tasks, covering both Natural Language Understanding (NLU) and Natural Language Generation (NLG), by converting them all into a text-to-text format.

- In text classification, for instance, the model receives the input text and is trained to produce the class label as ordinary text.

- To distinguish between tasks, T5 prepends a task-specific prefix to each input (such as “translate English to German:”). This prefix specifies the task the model is expected to perform.
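
The prefix idea can be sketched in a few lines of Python. This is only an illustration of the data format, not T5's actual preprocessing code: the helper `to_text_to_text` and the `TASK_PREFIXES` table are hypothetical names, and the prefix strings are modelled on the examples in the T5 paper.

```python
# Hypothetical helper that casts (task, input, target) triples into
# plain-text pairs. The prefix strings are illustrative assumptions.
TASK_PREFIXES = {
    "translate_en_de": "translate English to German: ",
    "summarize": "summarize: ",
    "cola": "cola sentence: ",
}


def to_text_to_text(task, source_text, target_text):
    """Return (model_input, model_target), both as ordinary strings."""
    prefix = TASK_PREFIXES[task]
    return prefix + source_text, target_text


examples = [
    # Translation: the target is the translated sentence.
    to_text_to_text("translate_en_de", "That is good.", "Das ist gut."),
    # Classification: the class label is emitted as regular text.
    to_text_to_text("cola", "The course is jumping well.", "unacceptable"),
]

for model_input, model_target in examples:
    print(repr(model_input), "->", repr(model_target))
```

Both a generation task and a classification task end up as the same kind of object, a pair of strings, which is what lets one model and one loss function cover them.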

Architecture

- T5 uses an encoder-decoder design.

- It closely follows the original Transformer architecture.

- One significant architectural difference between T5 and the “vanilla” Transformer is that T5 uses relative position embeddings instead of absolute position embeddings. Rather than encoding a token’s exact location within a sequence, relative position embeddings encode the offset between pairs of tokens. Each offset value is mapped to a learned scalar parameter that is added to the attention score between the query and the key, so the mechanism is built directly into self-attention. This makes intuitive sense for the many NLP tasks in which relative position is more informative than absolute position.
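
As a rough illustration of how a learned scalar per offset enters self-attention, here is a minimal pure-Python sketch. It makes several simplifying assumptions: the values in `bias_table` are made up rather than trained, offsets are simply clipped to a small range (the real model groups offsets into buckets), and there is a single attention head.

```python
import math

MAX_DISTANCE = 2  # assumption: offsets beyond +/-2 share one parameter

# One "learned" scalar per clipped offset. These values are invented for
# illustration; in a trained model they would be learned parameters.
bias_table = {-2: -0.4, -1: -0.1, 0: 0.3, 1: -0.1, 2: -0.4}


def relative_bias(query_pos, key_pos):
    """Look up the scalar for the (clipped) offset between key and query."""
    offset = key_pos - query_pos
    clipped = max(-MAX_DISTANCE, min(MAX_DISTANCE, offset))
    return bias_table[clipped]


def attention_weights(scores):
    """scores[i][j] is the raw query-key score for query i and key j."""
    weights = []
    for i, row in enumerate(scores):
        # The position bias is added to the score before the softmax.
        logits = [s + relative_bias(i, j) for j, s in enumerate(row)]
        top = max(logits)
        exps = [math.exp(value - top) for value in logits]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# With all raw scores equal, the weights depend on relative position only.
raw_scores = [[0.0] * 4 for _ in range(4)]
weights = attention_weights(raw_scores)
print([round(w, 3) for w in weights[0]])
```

Note that no absolute position appears anywhere: shifting every token by the same amount leaves all offsets, and therefore all biases, unchanged.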

How Does T5 Work?

T5 operates on the simple yet effective principle that every NLP problem should be cast in a text-to-text format. Like other Transformer-based sequence-to-sequence models, it has an encoder-decoder architecture. It works as follows:

- Task Formulation as Text-to-Text: Rather than handling each NLP task with a separate setup, T5 reformulates every task as text input and text output.

- Encoding the Input: The input text is tokenized with SentencePiece and passed to the encoder, which produces a contextual representation.

- Decoding the Output: Conditioned on the encoded representation, the decoder generates the output text autoregressively.

- Model Training: T5 is pre-trained with a denoising objective, in which the model learns to reconstruct masked spans of text. It is then fine-tuned for individual tasks.

Training

- T5 is trained on a wide range of NLP tasks: eighteen different tasks, each cast in text-to-text form.

- These tasks include reading comprehension, judging sentence grammaticality, English-to-French translation, and English-to-Romanian translation.

- All of these tasks are handled within the same encoder-decoder architecture, with the same vocabulary, model, and loss function (cross-entropy over the decoded tokens).

- This multi-task training captures language modelling information (from all tasks) and cross-language alignment information (from the translation tasks).

- T5 also includes an unsupervised pre-training phase on unlabeled English text (such as the C4 dataset). Contiguous spans of text are randomly masked and replaced with sentinel tokens, and the model is trained to generate, with the decoder, the masked spans delimited by those sentinel tokens. This unsupervised stage takes place before the multi-task training phase.
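
The span-masking objective can be sketched as follows. This is a simplified illustration rather than the actual pre-processing pipeline: the function `corrupt_spans` is a hypothetical helper, it works on whitespace-separated words instead of SentencePiece tokens, and the spans are passed in explicitly (instead of being sampled at random) so the result is reproducible. The sentinel names follow the `<extra_id_N>` convention used in the Hugging Face T5 vocabulary.

```python
def corrupt_spans(tokens, spans):
    """Replace each span with a sentinel and build the matching target.

    `spans` is a sorted list of non-overlapping, half-open (start, end)
    index ranges. Returns (corrupted_input, target) as strings.
    """
    inputs, targets = [], []
    cursor = 0
    for sentinel_id, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{sentinel_id}>"
        inputs.extend(tokens[cursor:start])  # keep the unmasked text
        inputs.append(sentinel)              # one sentinel per masked span
        targets.append(sentinel)             # target: sentinel, then span
        targets.extend(tokens[start:end])
        cursor = end
    inputs.extend(tokens[cursor:])
    targets.append(f"<extra_id_{len(spans)}>")  # closing sentinel
    return " ".join(inputs), " ".join(targets)


tokens = "Thank you for inviting me to your party last week .".split()
corrupted, target = corrupt_spans(tokens, [(2, 4), (8, 9)])
print(corrupted)  # Thank you <extra_id_0> me to your party <extra_id_1> week .
print(target)     # <extra_id_0> for inviting <extra_id_1> last <extra_id_2>
```

The encoder sees the corrupted input and the decoder is trained to emit the target, so the objective is itself a text-to-text task and uses the same cross-entropy loss as the supervised tasks.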

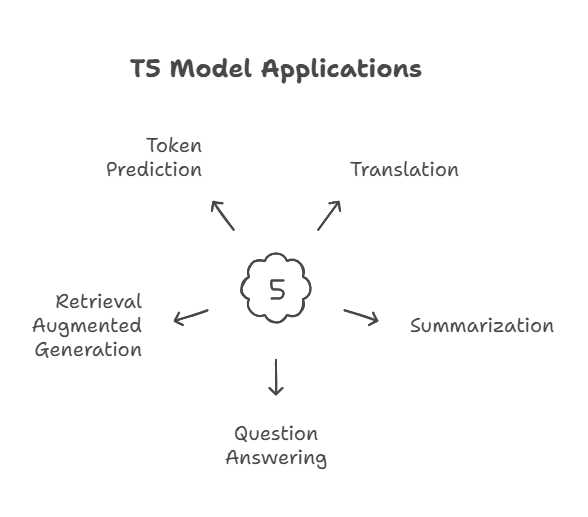

T5 Applications

- T5 is designed to support many tasks, including translation and summarization.

- It can be fine-tuned for downstream tasks, including question answering and translation for language pairs that were not explicitly part of its pre-training data (such as Romanian to English).

- For Question Answering (QA), T5 can be fine-tuned by giving it a question and training it to generate the answer text. The largest T5 model (11 billion parameters) performs competitively in QA, though not always as well as systems built specifically for the task.

- In Retrieval Augmented Generation (RAG) designs for QA, T5 can serve as the generator, extending the conventional retriever-reader paradigm. A retriever (such as DPR) passes latent vectors of documents to T5, which then generates an answer from the query and these documents. Because the retriever and T5 are both differentiable, the whole RAG pipeline can be optimised end to end.

- For sequence generation tasks such as translation, inference with T5 predicts tokens one at a time. With greedy decoding, for example, the next token is the argmax() of the logits. Generation continues until an end-of-sequence token is produced or a maximum length is reached.
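
The greedy decoding loop described in the last bullet can be sketched without any model at all. In the sketch below, `toy_logits` is a hypothetical stand-in for a real decoder step (it follows a fixed script instead of computing anything), and the tiny vocabulary is invented; the choices of id 0 as the start token and id 1 as end-of-sequence are assumptions made for the example.

```python
VOCAB = ["<pad>", "</s>", "Das", "ist", "gut", "."]
START_ID = 0  # assumption: decoding starts from the pad token
EOS_ID = 1    # assumption: "</s>" marks end of sequence

# Scripted "model": maps the last generated id to the id it prefers next.
SCRIPT = {0: 2, 2: 3, 3: 4, 4: 5, 5: 1}


def toy_logits(decoded_ids):
    """Pretend decoder step: returns one logit per vocabulary entry."""
    logits = [0.0] * len(VOCAB)
    logits[SCRIPT[decoded_ids[-1]]] = 5.0
    return logits


def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=values.__getitem__)


def greedy_decode(step_fn, max_length=10):
    """Generate ids one at a time, always taking the highest logit."""
    decoded = [START_ID]
    while len(decoded) - 1 < max_length:
        next_id = argmax(step_fn(decoded))
        if next_id == EOS_ID:  # stop at end-of-sequence
            break
        decoded.append(next_id)
    return decoded[1:]  # drop the start token


output_ids = greedy_decode(toy_logits)
print(" ".join(VOCAB[i] for i in output_ids))  # Das ist gut .
```

Both stopping conditions from the text appear in the loop: the `break` on the end-of-sequence token and the `max_length` bound in the `while` condition.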

Considerations

- Although powerful, language models like T5 fine-tuned for QA can be less interpretable than conventional QA systems, because they do not necessarily indicate the source of an answer, such as the passage it came from.

- Smaller variants, such as t5-small, are available to reduce compute requirements.

To sum up, T5 is notable for unifying a wide variety of NLP tasks in a single text-to-text format. It does so with a Transformer encoder-decoder architecture that uses relative position embeddings, and with a training regimen that combines unsupervised span masking with supervised multi-task learning driven by task-specific prefixes.