Introduction

Machine translation (MT), a subfield of natural language processing (NLP), automatically translates text or speech from one language to another. The field has progressed from rule-based systems to neural network-based models, and Phrase-Based Statistical Machine Translation (PBMT) served as an important bridge between the two.

Phrase-based statistical machine translation (PBMT) substantially improved translation accuracy and fluency. Before the rise of Neural Machine Translation (NMT), it was the dominant MT approach throughout the 2000s and underpinned many commercial translation systems.

What is PBMT?

PBMT is a statistical machine translation method that uses phrases, rather than individual words, as the basic unit of translation. Here, a “phrase” means any contiguous sequence of words found in bilingual text, not a phrase in the grammatical sense. The premise is that a phrase in one language maps onto a phrase in the target language more reliably than individual words do, because phrases capture local context, idioms, and word order. As a result, PBMT produces more accurate and fluent translations than word-based models.

How Does PBMT Work?

PBMT learns patterns of phrase translation from large bilingual corpora. After learning how phrases in the source language are usually translated into the target language, the model assigns probabilities to phrase pairs. The core process consists of the following steps:

Training Phase

PBMT systems are trained on a parallel corpus: texts in two languages that are aligned sentence by sentence.

Word Alignment

The first step identifies which words in each source sentence correspond to which words in the target sentence, using alignment models such as the IBM Models or the HMM (Hidden Markov Model) alignment model. This maps the word-level relationships between the two languages.
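To make the alignment step concrete, here is a minimal Python sketch of IBM Model 1 trained with expectation-maximization (EM) on a toy German-English corpus. It is a simplified illustration, not a production aligner: it omits the NULL word that the full model uses for unaligned words, and the function names `train_ibm1` and `align` are hypothetical. Practical tools such as GIZA++ implement the full IBM and HMM models, and systems usually align in both directions and symmetrize the results.

```python
from collections import defaultdict


def train_ibm1(pairs, iterations=20):
    """IBM Model 1 EM, simplified (no NULL word).

    pairs: list of (source_tokens, target_tokens) sentence pairs.
    Returns t, where t[(tgt, src)] approximates P(target word | source word).
    """
    tgt_vocab = {w for _, tgt in pairs for w in tgt}
    t = defaultdict(lambda: 1.0 / len(tgt_vocab))  # uniform initialisation
    for _ in range(iterations):
        count = defaultdict(float)  # expected counts c(tgt, src)
        total = defaultdict(float)  # expected counts c(src)
        for src, tgt in pairs:
            for tw in tgt:
                # E-step: share this target word among all source words in the sentence
                norm = sum(t[(tw, sw)] for sw in src)
                for sw in src:
                    frac = t[(tw, sw)] / norm
                    count[(tw, sw)] += frac
                    total[sw] += frac
        # M-step: turn expected counts into conditional probabilities
        t = defaultdict(float, {(tw, sw): c / total[sw] for (tw, sw), c in count.items()})
    return t


def align(src, tgt, t):
    """Link each target position j to its most probable source position i."""
    return {(max(range(len(src)), key=lambda i: t[(tw, src[i])]), j)
            for j, tw in enumerate(tgt)}


corpus = [
    ("das Haus".split(), "the house".split()),
    ("das Buch".split(), "the book".split()),
    ("ein Buch".split(), "a book".split()),
]
t = train_ibm1(corpus)
print(sorted(align("das Haus".split(), "the house".split(), t)))  # [(0, 0), (1, 1)]
```

Because *das* co-occurs with *the* in two sentences, EM gradually shifts its probability mass toward *the*, which in turn frees *Haus* to align with *house* and *Buch* with *book*.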


Phrase Extraction

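Phrase pairs are then extracted from the word alignments. Each pair consists of a contiguous word sequence in the source sentence and a contiguous word sequence in the target sentence that are consistent with the alignment: no word inside either phrase is aligned to a word outside the other.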
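The consistency check can be sketched in a few lines of Python. This hypothetical `extract_phrases` function enumerates every source span of up to `max_len` words, finds the target span covered by its alignment links, and keeps the pair only if no word inside that target span is linked to a source word outside the source span. For brevity it does not extend phrases over unaligned target words (such as *do* in the example), which the standard extraction algorithm does.

```python
def extract_phrases(src, tgt, links, max_len=3):
    """Extract phrase pairs consistent with a word alignment.

    links: set of (i, j) pairs meaning src[i] is aligned to tgt[j].
    Simplification: unaligned words at phrase boundaries are not added.
    """
    pairs = set()
    for s1 in range(len(src)):
        for s2 in range(s1, min(s1 + max_len, len(src))):
            # target positions linked to the source span [s1, s2]
            ts = [j for (i, j) in links if s1 <= i <= s2]
            if not ts:
                continue
            t1, t2 = min(ts), max(ts)
            if t2 - t1 >= max_len:
                continue  # target side too long
            # consistency: no target word in [t1, t2] may link outside [s1, s2]
            if any(t1 <= j <= t2 and not s1 <= i <= s2 for (i, j) in links):
                continue
            pairs.add((" ".join(src[s1:s2 + 1]), " ".join(tgt[t1:t2 + 1])))
    return pairs


src = "ich gehe nicht".split()
tgt = "I do not go".split()
links = {(0, 0), (1, 3), (2, 2)}  # ich-I, gehe-go, nicht-not; "do" is unaligned
for pair in sorted(extract_phrases(src, tgt, links)):
    print(pair)
```

Besides the single-word pairs, the sketch extracts *gehe nicht* ↔ *not go*, a phrase pair that captures the local word-order swap, while *ich gehe* is rejected because its target span would have to include *not*, which is linked to *nicht* outside the source span.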

Phrase Table Creation

All extracted phrase pairs are stored in a phrase table together with their translation probabilities, which are estimated from how frequently each pair occurs in the training data.
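These probabilities are commonly estimated by relative frequency: phi(target | source) = count(source, target) / count(source). The following is a minimal Python sketch, assuming the phrase pairs extracted from the whole corpus have been collected into one list; the function name `build_phrase_table` and the toy data are hypothetical. Production phrase tables typically also store the inverse probability phi(source | target) and lexical weights, which this sketch leaves out.

```python
from collections import Counter


def build_phrase_table(extracted_pairs):
    """Map (source phrase, target phrase) to the relative frequency phi(target | source)."""
    pair_counts = Counter(extracted_pairs)
    source_counts = Counter(src for src, _ in extracted_pairs)
    return {(src, tgt): n / source_counts[src] for (src, tgt), n in pair_counts.items()}


extracted = [
    ("das Haus", "the house"),
    ("das Haus", "the house"),
    ("das Haus", "the home"),
    ("Haus", "house"),
]
for (src, tgt), p in sorted(build_phrase_table(extracted).items()):
    print(f"{src} ||| {tgt} ||| {p:.3f}")
```

Here *das Haus* was seen three times, twice as *the house*, so that entry gets 2/3 and *the home* gets 1/3. The `|||` separator simply echoes the plain-text phrase-table layout used by toolkits such as Moses.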

Language Modeling

A language model, usually an n-gram model, is used to ensure that the translated output is fluent and grammatical in the target language. This model estimates the probability that a given word sequence occurs in the target language.
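A minimal bigram (n = 2) model illustrates the idea. This sketch uses add-one (Laplace) smoothing so that unseen word pairs still receive a small probability; real PBMT systems typically use higher-order n-grams with stronger smoothing such as modified Kneser-Ney, often built with toolkits like KenLM or SRILM. The class name `BigramLM` and the training sentences are hypothetical.

```python
import math
from collections import Counter


class BigramLM:
    """Bigram language model with add-one (Laplace) smoothing."""

    def __init__(self, sentences):
        self.history = Counter()  # how often each token is followed by another token
        self.bigrams = Counter()
        for words in sentences:
            tokens = ["<s>"] + words + ["</s>"]
            self.history.update(tokens[:-1])
            self.bigrams.update(zip(tokens, tokens[1:]))
        # possible next tokens: every training word plus the end-of-sentence marker
        self.vocab_size = len({w for s in sentences for w in s}) + 1

    def prob(self, prev, word):
        return (self.bigrams[(prev, word)] + 1) / (self.history[prev] + self.vocab_size)

    def logprob(self, words):
        tokens = ["<s>"] + words + ["</s>"]
        return sum(math.log(self.prob(p, w)) for p, w in zip(tokens, tokens[1:]))


lm = BigramLM(["the house is small".split(), "the house is big".split()])
print(lm.logprob("the house is small".split()) > lm.logprob("house the small is".split()))  # True
```

The scrambled sentence scores lower because none of its word pairs were seen in training, which is exactly the signal the decoder uses to prefer fluent output.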

Decoding Phase

To translate a new sentence:

- The decoder segments the source sentence into candidate phrases.

- It looks up matching translations for each phrase in the phrase table.

- It assembles the translated phrases into the most likely target sentence, searching for the output that jointly maximizes the phrase translation probability and the language-model fluency score.

- Reordering models can also change the order of the translated phrases to better match the grammar of the target language.
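The decoding steps above can be sketched as a small dynamic program. This simplified Python version is monotone (it translates phrases strictly left to right, with no reordering), scores each hypothesis as the sum of phrase-translation and bigram language-model log-probabilities with equal weights, and keeps only the best hypothesis per (position, last word) state. Real decoders such as Moses instead run a beam search over a weighted log-linear combination of features, with weights tuned on held-out data, and include a reordering model. The function name, phrase table, and language-model values below are hypothetical toy data.

```python
import math


def monotone_decode(src, phrase_table, lm_logprob, max_len=3):
    """Find the best left-to-right (monotone) translation of `src`.

    phrase_table: source phrase (str) -> list of (target phrase, probability).
    lm_logprob(prev_word, word): bigram language-model log-probability.
    Returns the best target sentence, or None if `src` cannot be covered.
    """
    # best[(i, last_word)] = (log score, output words) for hypotheses covering src[:i]
    best = {(0, "<s>"): (0.0, [])}
    for i in range(len(src)):
        # snapshot: hypotheses added below always cover more than i words
        for (pos, last), (score, out) in list(best.items()):
            if pos != i:
                continue
            # try every source phrase src[i:k] that starts at position i
            for k in range(i + 1, min(i + max_len, len(src)) + 1):
                for tgt, p in phrase_table.get(" ".join(src[i:k]), []):
                    words = tgt.split()
                    s, prev = score + math.log(p), last  # translation model score
                    for w in words:
                        s += lm_logprob(prev, w)  # language model (fluency) score
                        prev = w
                    key = (k, prev)
                    # recombination: keep only the best hypothesis per state
                    if key not in best or s > best[key][0]:
                        best[key] = (s, out + words)
    finals = [(s + lm_logprob(last, "</s>"), out)
              for (pos, last), (s, out) in best.items() if pos == len(src)]
    return " ".join(max(finals)[1]) if finals else None


phrase_table = {
    "das": [("the", 0.7), ("that", 0.3)],
    "Haus": [("house", 0.8), ("home", 0.2)],
    "das Haus": [("the house", 0.9)],
    "ist": [("is", 1.0)],
    "klein": [("small", 0.6), ("little", 0.4)],
}
bigram = {
    ("<s>", "the"): 0.5, ("the", "house"): 0.4, ("house", "is"): 0.5,
    ("is", "small"): 0.3, ("is", "little"): 0.1,
    ("small", "</s>"): 0.6, ("little", "</s>"): 0.5,
}


def lm_logprob(prev, word):
    # toy LM: listed bigrams use their probability, anything else gets 0.01
    return math.log(bigram.get((prev, word), 0.01))


print(monotone_decode("das Haus ist klein".split(), phrase_table, lm_logprob))  # the house is small
```

Translating *das Haus* as one phrase beats translating *das* and *Haus* separately, and the language model pushes *small* ahead of *little*, illustrating how translation and fluency scores interact.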

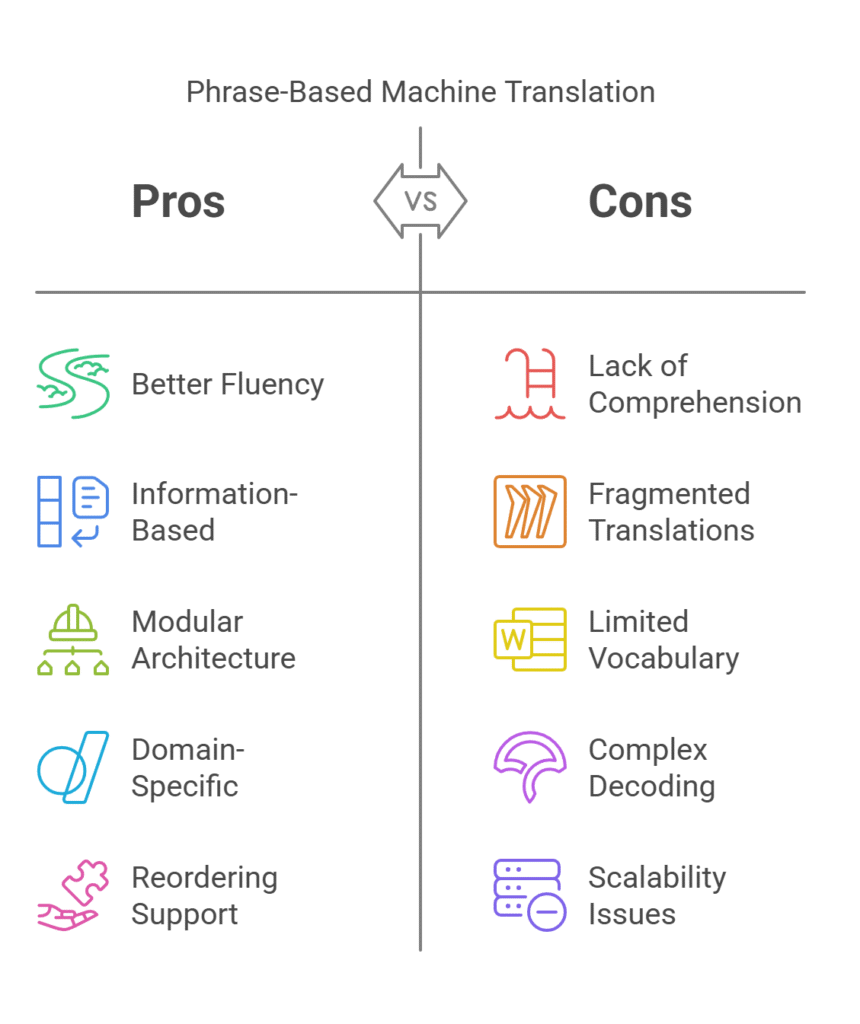

Benefits and drawbacks of PBMT

Benefits of PBMT

- Better Fluency than Word-Based SMT: Because PBMT translates multi-word sequences in context rather than isolated words, it produces more fluent translations than word-based SMT (Statistical Machine Translation).

- Data-Driven: Because the system learns from bilingual corpora, it requires less manual effort than rule-based systems. Translation quality generally improves as more training data becomes available.

- Modular Architecture: Each component (alignment model, phrase table, and language model) can be developed, tuned, and updated separately, which allows flexibility and experimentation.

- Effective for Domain-Specific Text: When trained on relevant corpora, PBMT works well on technical or domain-specific content.

- Support for Reordering: PBMT supports limited word and phrase reordering, which helps with language pairs whose syntax differs.

Drawbacks of PBMT

- Lack of Deep Understanding: PBMT operates at the surface level, relying on patterns and probabilities rather than meaning. Because it does not model grammar or semantics, it can produce inaccurate translations.

- Piecemeal Translations: Because PBMT builds sentences by stitching translated phrases together, the output can seem fragmented or disjointed, particularly when the phrase segmentation is poor.

- Limited Vocabulary: Words or phrases that never appeared in the training data (out-of-vocabulary items) may be left untranslated or translated poorly.

- Complex Decoding: The decoder must explore a very large number of possible segmentations and reorderings, which is computationally expensive and requires heuristic search techniques such as beam search.

- Scalability and Storage: Phrase tables and language models built from very large datasets require substantial storage, which can make the system inefficient at scale.
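The size of the decoding search space is easy to underestimate. This small Python generator (hypothetical name `segmentations`) enumerates every way to split a sentence into contiguous phrases. An n-word sentence has 2^(n-1) segmentations before any phrase-table lookup or reordering is considered, which is why decoders cap phrase length and prune hypotheses.

```python
def segmentations(words, max_len=None):
    """Yield every split of `words` into contiguous phrases, left to right."""
    if not words:
        yield []
        return
    longest = len(words) if max_len is None else min(max_len, len(words))
    for k in range(1, longest + 1):
        # take the first k words as one phrase, then segment the remainder
        for rest in segmentations(words[k:], max_len):
            yield [words[:k]] + rest


for seg in segmentations("das Haus ist".split()):
    print(seg)

for n in (5, 10, 15):
    print(n, sum(1 for _ in segmentations(["w"] * n)))  # 16, 512, 16384
```

Limiting phrases to `max_len` words shrinks the count, but it still grows exponentially with sentence length, and each segmentation can additionally be reordered.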

Challenges in PBMT

- Data Sparsity: High-quality translation requires large, aligned bilingual corpora, so PBMT performs poorly for low-resource languages.

- Alignment Errors: Word alignment errors made during training propagate through the model, leading to poor phrase extraction and lower-quality translations.

- Morphologically Rich Languages: Languages with complex morphology, such as Finnish and Turkish, make alignment and phrase extraction harder, because a single word form can encode what other languages express with several words and many word forms are rarely seen in training.

- Ambiguity in Phrase Segmentation: It’s not easy to decide how to break a sentence up into phrases. Translations can vary greatly depending on the segmentation.

- Reordering Complexity: Although PBMT can handle some reordering, it still struggles with long-distance word-order changes between structurally dissimilar languages such as English and Japanese.
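The reordering difficulty can be illustrated with the simple distance-based penalty common in phrase-based systems: each phrase pays a cost equal to how far its source start position jumps from the end of the previously translated phrase, and a distortion limit rules out jumps that are too long. The Python sketch below (hypothetical function name `distortion_cost`, limit of 6 chosen for illustration) shows why long-distance reordering is hard: the jump that verb-final to verb-medial translation may require can exceed the limit, so the decoder never considers that ordering.

```python
def distortion_cost(spans, limit=None):
    """Distance-based reordering cost of a phrase sequence.

    spans: (start, end) source positions, inclusive and 0-based, of each
    translated phrase, listed in the order the phrases appear in the output.
    Returns the total jump distance, or None if any single jump exceeds `limit`.
    """
    total, prev_end = 0, -1
    for start, end in spans:
        jump = abs(start - prev_end - 1)  # 0 when phrases follow each other in the source
        if limit is not None and jump > limit:
            return None  # hypothesis is ruled out by the distortion limit
        total += jump
        prev_end = end
    return total


# Source "I ate sushi" (positions 0, 1, 2) rendered in verb-final order "I sushi ate"
print(distortion_cost([(0, 0), (1, 1), (2, 2)]))  # 0: monotone, no reordering
print(distortion_cost([(0, 0), (2, 2), (1, 1)]))  # 3
# Ten-word verb-final source (verb at position 9) rendered subject-verb-object
print(distortion_cost([(0, 0), (9, 9), (1, 8)], limit=6))  # None: a jump of 8 exceeds 6
```

Raising the limit admits such orderings but enlarges the search space, which is the trade-off behind PBMT's weakness on structurally distant language pairs.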

Applications of PBMT

Although Neural Machine Translation (NMT) has largely replaced PBMT in academic and commercial settings, PBMT is still used in the following areas:

- Legacy Systems: Many enterprise translation tools and software products still use PBMT because of legacy integration and existing infrastructure.

- Low-Resource Language Translation: When bilingual data is scarce, PBMT can perform better than neural models, particularly when linguistic pre- and post-processing is done correctly.

- Domain-Specific MT: For domains with formulaic or repetitive content, PBMT trained on relevant data can be quite effective.

- Research and Learning Tools: Because its decision-making process is interpretable and transparent, PBMT is used in research tools and language-learning applications.

- Hybrid Systems: Some contemporary MT systems combine PBMT with rule-based or neural approaches to exploit the strengths of each, for example using neural models for fluency and PBMT for alignment.

Conclusion

Phrase-based statistical machine translation was a significant milestone in the development of machine translation. By changing the unit of translation from words to phrases, PBMT greatly improved translation accuracy, consistency, and fluency, and its data-driven, modular architecture made it versatile and efficient across many industries.

Although neural machine translation models language more deeply and generally produces better results, PBMT remains useful in some situations, particularly where data availability, interpretability, or legacy systems are a concern.

PBMT vs. NMT

| Feature | PBMT | NMT |
|---|---|---|
| Translation Unit | Phrases (multi-word segments) | Entire sentence (sequence-based) |
| Fluency | Moderate | High |
| Context Handling | Limited to local phrases | Full sentence context |
| Data Requirements | Moderate | Very High |
| Interpretability | High (transparent phrase tables) | Low (black-box nature) |
| Performance on Rare Words | Better | Weaker |
| Scalability | Moderate | High (with GPU/TPU support) |