Loading a Database in PostgreSQL

Loading a PostgreSQL database means either restoring data from a backup or importing data into tables. There are several main approaches, and the right one depends on the backup format and the volume of data.

Plain-text SQL backups are SQL scripts containing CREATE, INSERT and COPY commands, and they are usually restored with the psql command-line utility. Such scripts are generated by pg_dump or pg_dumpall. A common pattern is to create the target database from template0 and then run psql -f backup.sql against it. The -1 (--single-transaction) option of psql wraps the whole restore in one transaction. Combined with -v ON_ERROR_STOP=1, this means the restore either completes fully or is rolled back if an error occurs.
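The restore steps above can be sketched in Python as follows. This is a minimal sketch that only builds the command lines; running them with subprocess.run assumes the PostgreSQL client tools are on PATH and that connection settings (for example PGHOST and PGUSER) are already configured. The database name newdb and the file backup.sql are placeholders.

```python
import subprocess


def plain_restore_commands(dbname: str, dump_file: str) -> list[list[str]]:
    """Build the commands that restore a plain-text SQL dump."""
    return [
        # Create an empty target database from the pristine template0.
        ["createdb", "-T", "template0", dbname],
        # -X skips ~/.psqlrc, -1 wraps the script in one transaction and
        # ON_ERROR_STOP makes psql stop (and roll back) at the first error.
        ["psql", "-X", "-1", "-v", "ON_ERROR_STOP=1",
         "-d", dbname, "-f", dump_file],
    ]


def run_restore(dbname: str, dump_file: str) -> None:
    # check=True raises CalledProcessError if either command fails.
    for cmd in plain_restore_commands(dbname, dump_file):
        subprocess.run(cmd, check=True)
```

Usage would be run_restore("newdb", "backup.sql"). Keeping command construction separate from execution makes the exact flags easy to inspect before anything touches a server.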

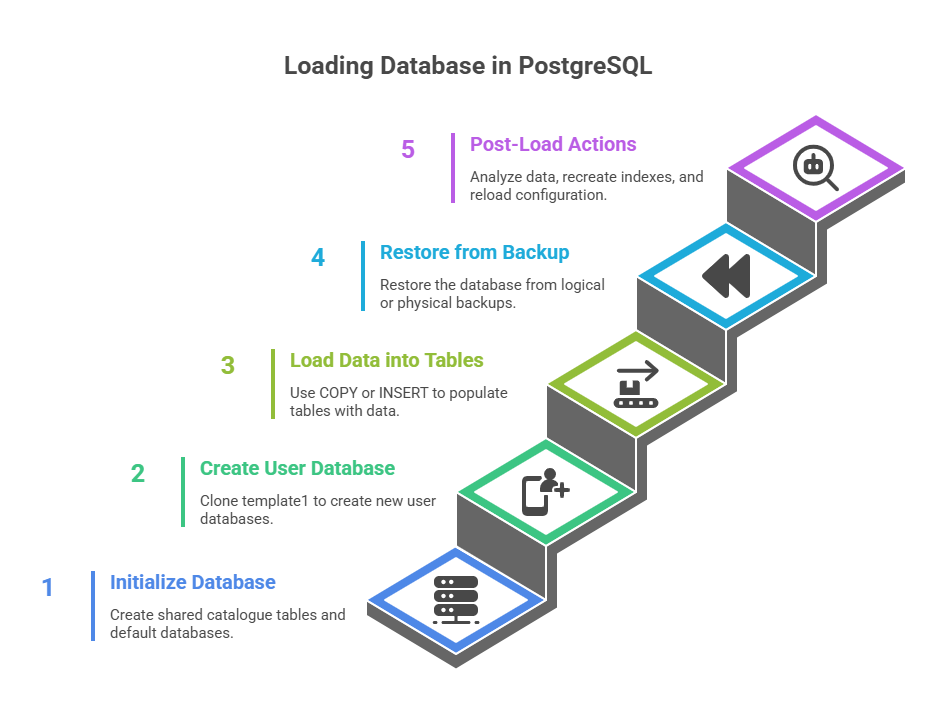

Before any data can be loaded, a PostgreSQL database cluster and a database must exist. A database cluster is the collection of databases managed by a single server instance. Setting one up usually involves:

initdb: This tool initialises a database storage area on disc. It creates the required directories, the shared catalogue tables, and the default databases template1, template0 and postgres.

createdb: Once the cluster is initialised and the server is running, this tool creates new user databases, by default by cloning template1. Because of this, any objects or extensions added to template1 will be present in every newly created database.
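The setup sequence can be sketched as a list of commands. This is a minimal sketch under stated assumptions: the data directory path, the database name appdb and the log file name are hypothetical, and a pg_ctl start step is included because createdb needs a running server.

```python
from typing import Optional


def cluster_setup_commands(data_dir: str, new_db: str,
                           template: Optional[str] = None,
                           log_file: str = "postgres.log") -> list[list[str]]:
    """Commands to initialise a cluster, start it and create a user database."""
    # initdb creates the data directory, the shared catalogue tables and the
    # template1, template0 and postgres databases.
    init = ["initdb", "-D", data_dir]
    # createdb talks to a running server, so start one on the new cluster.
    start = ["pg_ctl", "-D", data_dir, "-l", log_file, "start"]
    # createdb clones template1 unless another template is named with -T.
    create = ["createdb", new_db]
    if template is not None:
        create[1:1] = ["-T", template]
    return [init, start, create]
```

Each list can be passed to subprocess.run(cmd, check=True) in order; passing template="template0" gives a database without any local additions made to template1.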

Loading Database Methods

Following the creation of a database, there are several main methods to introduce data:

Loading Data into Tables:

COPY Command: COPY is PostgreSQL's bulk-loading command. It is designed to move large volumes of data efficiently between files and tables, or between a table and standard input/output. For thousands of rows or more, it is substantially faster than INSERT.

Syntax and Options: COPY supports text, CSV and binary formats, and lets you customise header rows, the NULL representation and the delimiter. For example: COPY table_name FROM '/path/to/file.csv' DELIMITER ',' CSV HEADER;
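The same options can be written in the newer parenthesised WITH (...) form. The helper below is a minimal sketch that composes such a statement with basic quoting; the function names and the orders table are hypothetical, it assumes an unqualified table name, and it assumes standard_conforming_strings is on (the default), so only single quotes need doubling in literals.

```python
def quote_ident(name: str) -> str:
    # Wrap an identifier in double quotes, doubling embedded double quotes.
    return '"' + name.replace('"', '""') + '"'


def quote_literal(value: str) -> str:
    # Wrap a string literal in single quotes, doubling embedded single quotes.
    return "'" + value.replace("'", "''") + "'"


def copy_from_csv(table: str, path: str, delimiter: str = ",",
                  null: str = "", header: bool = True) -> str:
    """Build a server-side COPY ... FROM statement for a CSV file."""
    options = [
        "FORMAT csv",
        f"HEADER {'true' if header else 'false'}",
        f"DELIMITER {quote_literal(delimiter)}",
        f"NULL {quote_literal(null)}",
    ]
    return (f"COPY {quote_ident(table)} FROM {quote_literal(path)} "
            f"WITH ({', '.join(options)});")
```

For example, copy_from_csv("orders", "/path/to/file.csv") produces COPY "orders" FROM '/path/to/file.csv' WITH (FORMAT csv, HEADER true, DELIMITER ',', NULL '');. Note that quoting an identifier makes it case-sensitive.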

Server-Side Operation: The SQL COPY command runs on the server, so a file named in COPY ... FROM must be on a path that the operating-system account running the PostgreSQL server can access.

psql \copy: The psql client also provides the client-side \copy meta-command. It reads or writes files on the client computer running psql and streams the data through the database connection, so the file does not need to be accessible to the server.
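A client-side load can be sketched as two steps: write a CSV file locally, then hand psql a \copy meta-command. This is a minimal sketch; the helper names, the shop database and the orders table are hypothetical, and it assumes the file path contains no single quotes. psql's -c option accepts a single backslash command such as \copy.

```python
import csv
from pathlib import Path


def write_csv(path: Path, header: list[str], rows: list[tuple]) -> None:
    # newline="" lets the csv module control line endings, as its docs advise.
    with path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)


def client_copy_command(dbname: str, table: str, path: Path) -> list[str]:
    # \copy reads the file on the client and streams it to the server,
    # so the path only has to exist on the machine running psql.
    meta = f"\\copy {table} FROM '{path}' WITH (FORMAT csv, HEADER true)"
    return ["psql", "-X", "-d", dbname, "-c", meta]
```

The returned list can be run with subprocess.run(cmd, check=True) once the CSV has been written.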

Performance Tips: If wal_level is set to minimal, running COPY in the same transaction as an earlier CREATE TABLE or TRUNCATE on the target table lets PostgreSQL skip writing WAL (Write-Ahead Log) for the loaded data, which speeds up bulk loading.
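The transaction shape that enables this optimisation can be sketched as a generated SQL script. This is a minimal sketch: the function name, table and path are hypothetical and assumed trusted (no quoting is applied), and the WAL saving only happens if the server actually runs with wal_level = minimal.

```python
def truncate_and_reload_script(table: str, path: str) -> str:
    """SQL script that empties and reloads a table inside one transaction."""
    return "\n".join([
        "BEGIN;",
        # Truncating in the same transaction as the COPY is what allows
        # the load to skip WAL when wal_level = minimal.
        f"TRUNCATE {table};",
        f"COPY {table} FROM '{path}' WITH (FORMAT csv, HEADER true);",
        "COMMIT;",
    ])
```

The script can be fed to psql -f. Keep in mind that TRUNCATE takes an ACCESS EXCLUSIVE lock, so other sessions cannot use the table until COMMIT.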

INSERT Statement:

- The INSERT command adds new rows to a table.

- You can insert a single row, several rows at once with a multi-row VALUES clause, or the result of a SELECT query.

- When inserting many rows, turn off autocommit and issue a single COMMIT at the end, because committing each row separately adds substantial overhead.

- Doing so also preserves transactional integrity: if any single row fails, all of the insertions are rolled back.

- For repeated insertions, PREPARE and EXECUTE let the INSERT be parsed and planned once, reducing per-statement cost, although COPY is usually faster for very large loads.
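The INSERT techniques above can be sketched as SQL generators: one multi-row VALUES statement, and a batch of EXECUTE calls on a prepared statement inside a single transaction. This is a minimal sketch for producing scripts to run with psql; the function names, the items table and the statement name ins are hypothetical, and literal rendering covers only None, booleans, numbers and strings. Application code would normally use its driver's parameter binding instead.

```python
def sql_literal(value) -> str:
    # Render simple Python values as SQL literals.
    if value is None:
        return "NULL"
    if isinstance(value, bool):
        return "TRUE" if value else "FALSE"
    if isinstance(value, (int, float)):
        return str(value)
    return "'" + str(value).replace("'", "''") + "'"


def multi_row_insert(table: str, columns: list[str], rows: list[tuple]) -> str:
    # One INSERT with a multi-row VALUES clause.
    values = ",\n  ".join(
        "(" + ", ".join(sql_literal(v) for v in row) + ")" for row in rows)
    return f"INSERT INTO {table} ({', '.join(columns)}) VALUES\n  {values};"


def prepared_insert_script(table: str, columns: list[str],
                           types: list[str], rows: list[tuple]) -> str:
    # Parse and plan the INSERT once, execute it per row, commit once.
    params = ", ".join(f"${i}" for i in range(1, len(columns) + 1))
    lines = [
        "BEGIN;",
        f"PREPARE ins ({', '.join(types)}) AS "
        f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({params});",
    ]
    lines += [f"EXECUTE ins({', '.join(sql_literal(v) for v in row)});"
              for row in rows]
    lines += ["COMMIT;", "DEALLOCATE ins;"]
    return "\n".join(lines)
```

Prepared statements belong to the session rather than the transaction, which is why DEALLOCATE can follow the COMMIT.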

Restoring Entire Databases or Clusters from Backups

Another way to load a database is to restore it from a backup that has already been made:

SQL Dumps (pg_dump and psql): pg_dump produces a logical dump of a PostgreSQL database, either as a plain-text SQL script or as an archive file. A plain-text script contains the SQL statements needed to recreate the database's structure and data. To restore it, first create an empty target database (from template0, for a pristine state) and then run psql -X dbname < dumpfile. pg_dumpall goes further and dumps every database in the cluster together with global objects such as roles and tablespace definitions. Its output is an SQL script that is restored with psql, typically as psql -X -f infile postgres, where postgres is simply the database psql connects to first. Restoring a pg_dumpall dump requires superuser access, because roles and tablespaces must be recreated.
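The dump and restore commands described above can be collected in one place. This is a minimal sketch that only builds argument lists; the database name and file names are hypothetical, and running them assumes the client tools are installed with suitable connection settings.

```python
def logical_backup_commands(dbname: str, db_dump: str,
                            cluster_dump: str) -> dict[str, list[str]]:
    """Command lines for plain-text logical backups and a cluster restore."""
    return {
        # Plain-text (-Fp) SQL script of a single database.
        "dump_db": ["pg_dump", "-Fp", "-f", db_dump, dbname],
        # Every database plus roles and tablespaces, as one SQL script.
        "dump_cluster": ["pg_dumpall", "-f", cluster_dump],
        # Replay the cluster script; "postgres" is only the initial
        # connection, the script creates and connects to the other databases.
        "restore_cluster": ["psql", "-X", "-f", cluster_dump, "postgres"],
    }
```

The restore_cluster command should be run as a database superuser, for the reasons given above.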

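pg_restore Utility: pg_restore restores the archive formats produced by pg_dump: custom and directory (both compressed by default) and tar. Plain-text dumps are restored with psql instead. With pg_restore you can reorder objects (by editing the list produced with -l and passing it back with -L), restore only selected tables, or restore object definitions without data (--schema-only, or --section=pre-data for table definitions without indexes and constraints), which is useful for creating a new template database.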
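The selective restore options above map onto pg_restore command lines as sketched here. This is a minimal sketch: the archive, database, table and list-file names are hypothetical, and parallel restore with -j works only for the custom and directory formats.

```python
def pg_restore_commands(archive: str, dbname: str, table: str,
                        toc_file: str = "toc.list",
                        jobs: int = 4) -> dict[str, list[str]]:
    """Command lines for common pg_restore tasks."""
    base = ["pg_restore", "-d", dbname]
    return {
        # Restore everything using several parallel jobs.
        "full": base + ["-j", str(jobs), archive],
        # Restore the definition and/or data of one table only.
        "one_table": base + ["-t", table, archive],
        # All object definitions, no data.
        "schema_only": base + ["--schema-only", archive],
        # Pre-data section: definitions without indexes and constraints.
        "pre_data": base + ["--section=pre-data", archive],
        # Write the archive's table of contents to a file for editing...
        "list": ["pg_restore", "-l", "-f", toc_file, archive],
        # ...then restore only the entries, in the order, kept in that file.
        "from_list": base + ["-L", toc_file, archive],
    }
```

Editing the list file (deleting or commenting out lines, or moving them) is how objects are reordered or skipped.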

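File System Level Backups: This approach copies PostgreSQL's data files directly. To obtain a consistent backup of a running database, call pg_start_backup() before copying the data directory and pg_stop_backup() afterwards (these functions were renamed pg_backup_start() and pg_backup_stop() in PostgreSQL 15), and keep the WAL generated during the copy. Note that pg_dump does not back up configuration files such as postgresql.conf and pg_hba.conf, so these must be saved separately.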
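Because the function names depend on the server version, a backup script may need to choose between them. This is a minimal sketch assuming non-exclusive backup mode (PostgreSQL 9.6 or later); the function name and label are hypothetical. Both statements must run in the same session, which stays open while the files are copied, and the label file returned by the stop call must be saved as backup_label in the copy.

```python
def backup_control_sql(server_major: int, label: str) -> tuple[str, str]:
    """Return (start, stop) SQL for a non-exclusive base backup."""
    lit = "'" + label.replace("'", "''") + "'"
    if server_major >= 15:
        # PostgreSQL 15+: renamed functions, exclusive mode removed.
        return (f"SELECT pg_backup_start({lit}, false);",
                "SELECT * FROM pg_backup_stop();")
    # 9.6 to 14: the third argument false selects non-exclusive mode.
    return (f"SELECT pg_start_backup({lit}, false, false);",
            "SELECT * FROM pg_stop_backup(false);")
```

The second argument (false) requests a normal rather than an immediate checkpoint, which spreads the I/O but makes the start call take longer.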

Post-Load and Post-Restore Actions

After importing data or restoring a database, several administrative steps help ensure good performance and correct behaviour:

ANALYZE: Run ANALYZE on the database to collect table and column statistics. The query optimiser relies on these statistics to choose good execution plans.
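From the command line, the vacuumdb utility can run ANALYZE for one database or for all of them. This is a minimal sketch that builds the command; the function name and the shop database are hypothetical. --analyze-in-stages produces rough statistics quickly and then refines them, which suits a freshly restored database.

```python
from typing import Optional


def analyze_command(dbname: Optional[str] = None,
                    in_stages: bool = False) -> list[str]:
    """Build a vacuumdb command that only collects statistics (no VACUUM)."""
    cmd = ["vacuumdb"]
    # Analyze every database unless a specific one is named.
    cmd += ["--all"] if dbname is None else ["-d", dbname]
    cmd.append("--analyze-in-stages" if in_stages else "--analyze-only")
    return cmd
```

Inside psql, running ANALYZE; in the target database achieves the same for that database.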

Recreate Indexes and Foreign Keys: A data-only dump still loads data with COPY, but it does not drop or recreate indexes, and it normally does not touch foreign keys. If you want the speed benefit of loading without indexes and foreign-key checks, you must drop them yourself before importing a data-only dump and recreate them afterwards. Increasing max_wal_size during the load is still helpful, but there is little point in raising maintenance_work_mem for the load itself; raise it instead while manually recreating the indexes and foreign keys afterwards.
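The drop-and-recreate step for indexes can be sketched as two generated scripts, one run before the data-only load and one after. This is a minimal sketch under explicit assumptions: the index list would come from a query such as SELECT indexname, indexdef FROM pg_indexes WHERE tablename = '...'; the function name and the 1GB memory value are hypothetical; index names are assumed to resolve via search_path; and indexes backing PRIMARY KEY or UNIQUE constraints must instead be handled with ALTER TABLE ... DROP CONSTRAINT, as must foreign keys.

```python
def index_reload_scripts(indexes: list[tuple[str, str]],
                         work_mem: str = "1GB") -> tuple[str, str]:
    """Return (before_load, after_load) SQL for plain indexes.

    `indexes` holds (index_name, index_definition) pairs as reported by
    the pg_indexes view; definitions there carry no trailing semicolon.
    """
    before = "\n".join(f"DROP INDEX IF EXISTS {name};" for name, _ in indexes)
    after = "\n".join(
        # Extra memory speeds up index builds; set it only for this step.
        [f"SET maintenance_work_mem = '{work_mem}';"]
        + [f"{definition};" for _, definition in indexes]
    )
    return before, after
```

Capture the definitions before dropping anything, since pg_indexes no longer lists an index once it has been dropped.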

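Reload Configuration: After changing configuration files such as postgresql.conf or pg_hba.conf, make the server re-read them by sending the postmaster a SIGHUP signal, running pg_ctl reload, or executing SELECT pg_reload_conf(). Some settings, however, take effect only after a full server restart.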
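The SIGHUP route can be sketched by reading the postmaster's PID from the data directory. This is a minimal, POSIX-only sketch; the function names are hypothetical, and it must run as the server's operating-system user (or root) to be allowed to signal the process. The first line of postmaster.pid holds the postmaster's PID.

```python
import os
import signal
from pathlib import Path


def postmaster_pid(data_dir: str) -> int:
    # First line of postmaster.pid in the data directory is the PID.
    first_line = (Path(data_dir) / "postmaster.pid").read_text().splitlines()[0]
    return int(first_line.strip())


def reload_config(data_dir: str) -> None:
    # Same effect as pg_ctl reload or SELECT pg_reload_conf().
    os.kill(postmaster_pid(data_dir), signal.SIGHUP)
```

When a database connection is already available, SELECT pg_reload_conf() is simpler and needs no file-system access.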

Foreign Data Wrappers: Although they are not strictly a "load" operation, foreign data wrappers (FDWs) let PostgreSQL query external data through foreign tables as if it were stored locally. FDWs exist for flat files, NoSQL stores and other relational databases, which makes them a powerful tool for data integration and analysis. The data itself stays in the external source: FDWs provide access to it rather than storing it in PostgreSQL.
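As a concrete case, the file_fdw extension shipped with PostgreSQL exposes a server-side CSV file as a foreign table. This is a minimal sketch that generates the setup SQL; the function name, server name csv_files, table name and columns are hypothetical, the file must be readable by the server process, and creating such tables requires superuser or the pg_read_server_files role.

```python
def file_fdw_setup(table: str, columns: dict[str, str], csv_path: str) -> str:
    """SQL that exposes a CSV file as a foreign table via file_fdw."""
    cols = ",\n  ".join(f"{name} {sqltype}" for name, sqltype in columns.items())
    return "\n".join([
        "CREATE EXTENSION IF NOT EXISTS file_fdw;",
        "CREATE SERVER IF NOT EXISTS csv_files FOREIGN DATA WRAPPER file_fdw;",
        f"CREATE FOREIGN TABLE {table} (\n  {cols}\n) SERVER csv_files",
        f"  OPTIONS (filename '{csv_path}', format 'csv', header 'true');",
    ])
```

Each query on the foreign table reads the file afresh; to load the data permanently, INSERT INTO a regular table ... SELECT from the foreign table.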

Conclusion

In conclusion, loading a PostgreSQL database means either importing data into tables or restoring a complete database or cluster from backups, depending on the size of the data and the backup format. COPY (and its client-side counterpart, psql's \copy) offers the fastest way to populate tables in bulk, while INSERT is better suited to small or transactional data changes.

For full database recovery, logical backups made with pg_dump are restored with psql or pg_restore, while file-system level backups provide a physically consistent copy of the cluster. After loading, running ANALYZE, reloading configuration changes, and recreating indexes or foreign keys where necessary help ensure good performance. Together, these tools and approaches give PostgreSQL high-performance, dependable and flexible ways to initialise, restore and maintain databases.